Researchers at Princeton University, UCLA, and the University of Pennsylvania have developed an approach that gives AI agents persistent worlds to explore. Standard web code defines the rules, while a language model fills these worlds with stories and descriptions.

Web world models split the world into two layers. The first is pure code written in TypeScript. This code defines what exists, how things connect, and which actions are allowed. It enforces logical consistency, preventing players from walking through locked doors or spending money they don’t have.

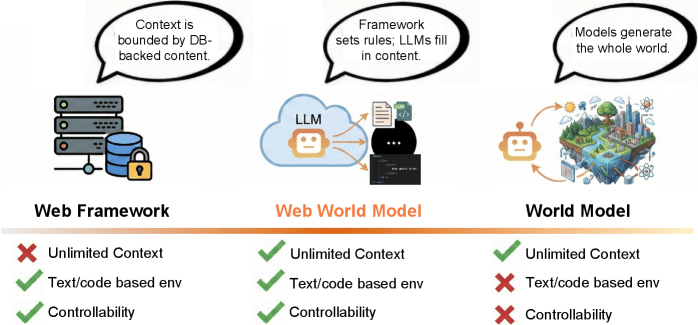

Web frameworks offer controllability but limited context. Pure world models allow unlimited context but lose controllability. Web world models combine both: code defines the rules, and LLMs fill in the content. | Image: Feng et al.

The second layer comes from a language model. It brings the framework to life, generating environment descriptions, NPC dialogue, and aesthetic details. Crucially, the model can only work within the boundaries the code sets. When a player tries something, the code first checks whether the action is allowed. Only then does the AI describe what happens. This means the language model can’t break any rules, no matter how creative it gets.
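The check-then-describe order can be illustrated with a minimal TypeScript sketch. Every name here (`WorldState`, `Action`, `violation`, `step`, `Narrator`) is hypothetical and chosen for illustration; the article does not show the researchers' actual code, and the narrator is a plain function standing in for a language model call.

```typescript
// Hypothetical sketch: names and rules are illustrative, not taken from the paper.
type Action =
  | { kind: "move"; through: string }
  | { kind: "buy"; price: number };

interface WorldState {
  lockedDoors: Set<string>;
  money: number;
}

// Rule layer: returns the reason an action is illegal, or null if it is allowed.
function violation(state: WorldState, action: Action): string | null {
  switch (action.kind) {
    case "move":
      return state.lockedDoors.has(action.through)
        ? `door ${action.through} is locked`
        : null;
    case "buy":
      return action.price > state.money ? "not enough money" : null;
  }
}

// Narration layer: a stand-in for the language model.
type Narrator = (action: Action) => string;

// The code decides first; the narrator only runs for actions that passed.
function step(state: WorldState, action: Action, narrate: Narrator): string {
  const reason = violation(state, action);
  if (reason !== null) return `Rejected: ${reason}`;
  if (action.kind === "buy") state.money -= action.price;
  return narrate(action);
}
```

Because the narrator never receives a rejected action and never touches `WorldState`, nothing it returns can change what is legal in the world.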

Hash functions create “infinite” universes without storage

Perhaps the cleverest idea involves storage. How do you save a nearly infinite universe? The researchers skip storage entirely and recalculate each location from its coordinates on demand. When a player visits a particular planet, its coordinates run through a hash function, a formula that always produces the same output for the same input. That output then sets the random parameters for the language model, ensuring the planet looks identical every time.

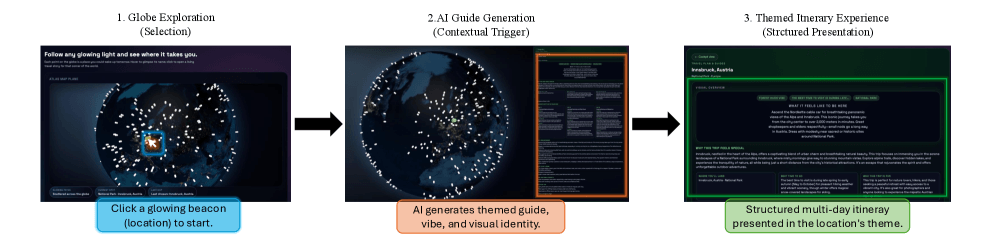

Users select a location on the globe (1), the system uses an LLM to generate a themed travel guide (2), which appears as a structured multi-day itinerary (3). Geographic data comes from the code, descriptions from the language model. | Image: Feng et al.

A player can visit a planet, leave, come back later, and find the same planet. Not because anyone saved it, but because the math always works out the same way. The researchers call this “object permanence with no storage cost.”
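A rough sketch of how coordinates could be turned into repeatable parameters follows. The article does not say which hash function the researchers use, so this example assumes an FNV-1a-style hash and the small mulberry32 generator; `planetParams`, `BIOMES`, and the `PlanetParams` fields are made up for illustration.

```typescript
// Hypothetical sketch: the hash, the generator, and the fields are assumptions.

// FNV-1a-style 32-bit hash over the string's UTF-16 code units.
function fnv1a(text: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0;
}

// mulberry32: a small seeded pseudo-random generator returning values in [0, 1).
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) | 0;
    let t = Math.imul(a ^ (a >>> 15), 1 | a);
    t = (t + Math.imul(t ^ (t >>> 7), 61 | t)) ^ t;
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

interface PlanetParams {
  seed: number;
  biome: string;
  moons: number;
}

const BIOMES: readonly string[] = ["desert", "ocean", "ice", "jungle"];

// Same coordinates -> same seed -> same parameters, with nothing stored.
function planetParams(x: number, y: number, z: number): PlanetParams {
  const seed = fnv1a(`${x},${y},${z}`);
  const rand = mulberry32(seed);
  return {
    seed,
    biome: BIOMES[Math.floor(rand() * BIOMES.length)],
    moons: Math.floor(rand() * 4),
  };
}
```

In a setup like this, the derived parameters (and possibly the seed itself) would be handed to the language model as part of the prompt or sampling configuration, so a return visit reproduces the same inputs. How the researchers pass them along is not described in the article.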

The system also works without AI. If the language model responds slowly or crashes, the system falls back on pre-made templates. The world loses its descriptive richness, but the rules still work. This sets the approach apart from purely generative systems, where a language model outage would take down the entire application.
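One way such a fallback might look in TypeScript is sketched below, under the assumption that the model call returns a promise. `describeWithFallback`, `templateDescription`, and the timeout parameter are hypothetical names, not the researchers' API.

```typescript
// Hypothetical sketch: function names and the template text are assumptions.
type Describe = (place: string) => Promise<string>;

// Pre-made template used when the model is slow or unavailable.
function templateDescription(place: string): string {
  return `You arrive at ${place}.`;
}

// Races the model call against a timer; any failure or timeout yields the template.
async function describeWithFallback(
  place: string,
  callModel: Describe,
  timeoutMs: number
): Promise<string> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error("model timed out")), timeoutMs);
  });
  try {
    return await Promise.race([callModel(place), timeout]);
  } catch {
    return templateDescription(place);
  } finally {
    clearTimeout(timer); // avoid leaving a pending timer behind
  }
}
```

The rule layer is untouched in either branch. Only the descriptive text differs, which matches the article's point that the world gets plainer but keeps working.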

From travel guides to card games

To show the concept works across different domains, the researchers built seven applications. An “Infinite Travel Atlas” turns Earth into an explorable globe. Click anywhere, and the system generates information about places, routes, and stories. Geographic data comes from the code, and descriptions come from the language model.

A “Galaxy Travel Atlas” does the same for a fictional sci-fi universe. The code generates galaxies, star systems, and planets according to defined rules. The language model adds missions, characters, and educational content. Visitors to any planet get a briefing on terrain, sky, signals, and dangers.

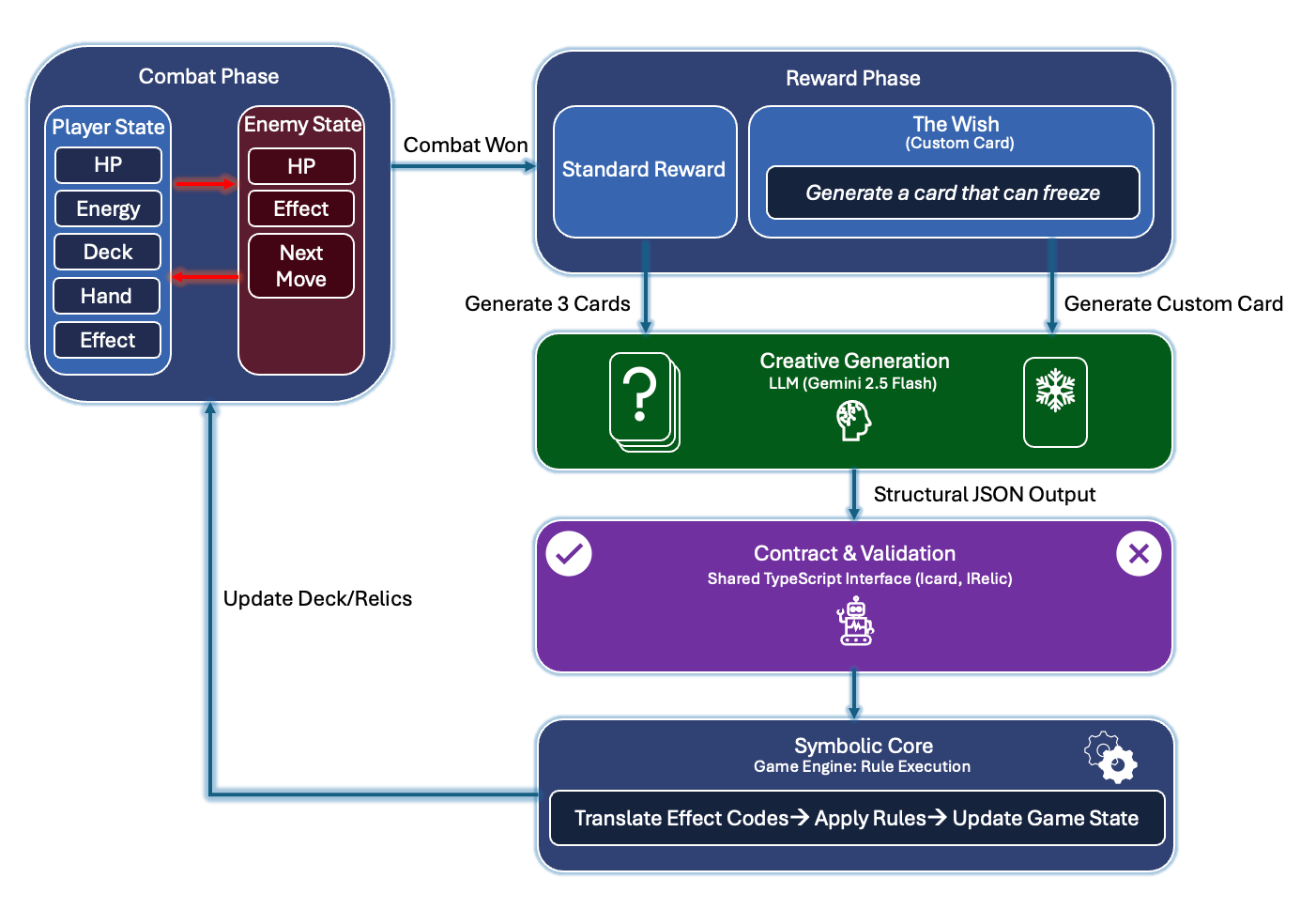

A card game called “AI Spire” lets players request custom cards. Type “a fireball that does a lot of fire damage but also freezes your opponent,” and the system generates a matching card. The code uses schema validation to make sure the card follows game rules and stays within limits for things like costs and card types.

After the player wins a battle, an LLM (Gemini 2.5 Flash) generates reward cards, either as a standard selection or based on player input. TypeScript interfaces validate the output before the game engine applies the effects. | Image: Feng et al.
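The validation step for generated cards could look roughly like the sketch below. The `Card` fields, the allowed types, and the limits `MAX_COST` and `MAX_DAMAGE` are invented for illustration; the article only says that costs and card types are checked, and it does not describe the game's real schema.

```typescript
// Hypothetical sketch: the schema and the limits are assumptions, not the game's rules.
interface Card {
  name: string;
  type: "attack" | "skill" | "power";
  cost: number;
  damage: number;
}

const CARD_TYPES: readonly string[] = ["attack", "skill", "power"];
const MAX_COST = 3; // assumed cap
const MAX_DAMAGE = 30; // assumed cap

function isIntInRange(value: unknown, min: number, max: number): value is number {
  return typeof value === "number" && Number.isInteger(value) && value >= min && value <= max;
}

// Parses raw model output and returns a Card only if every field passes the rules.
function parseCard(raw: string): Card | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // model output was not valid JSON
  }
  if (typeof data !== "object" || data === null) return null;
  const { name, type, cost, damage } = data as Record<string, unknown>;
  if (typeof name !== "string" || name.length === 0) return null;
  if (typeof type !== "string" || !CARD_TYPES.includes(type)) return null;
  if (!isIntInRange(cost, 0, MAX_COST)) return null;
  if (!isIntInRange(damage, 0, MAX_DAMAGE)) return null;
  return { name, type: type as Card["type"], cost, damage };
}
```

This sketch rejects out-of-range cards instead of clamping them, which is a design choice of the example. A real game might instead clamp the values or ask the model to try again.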

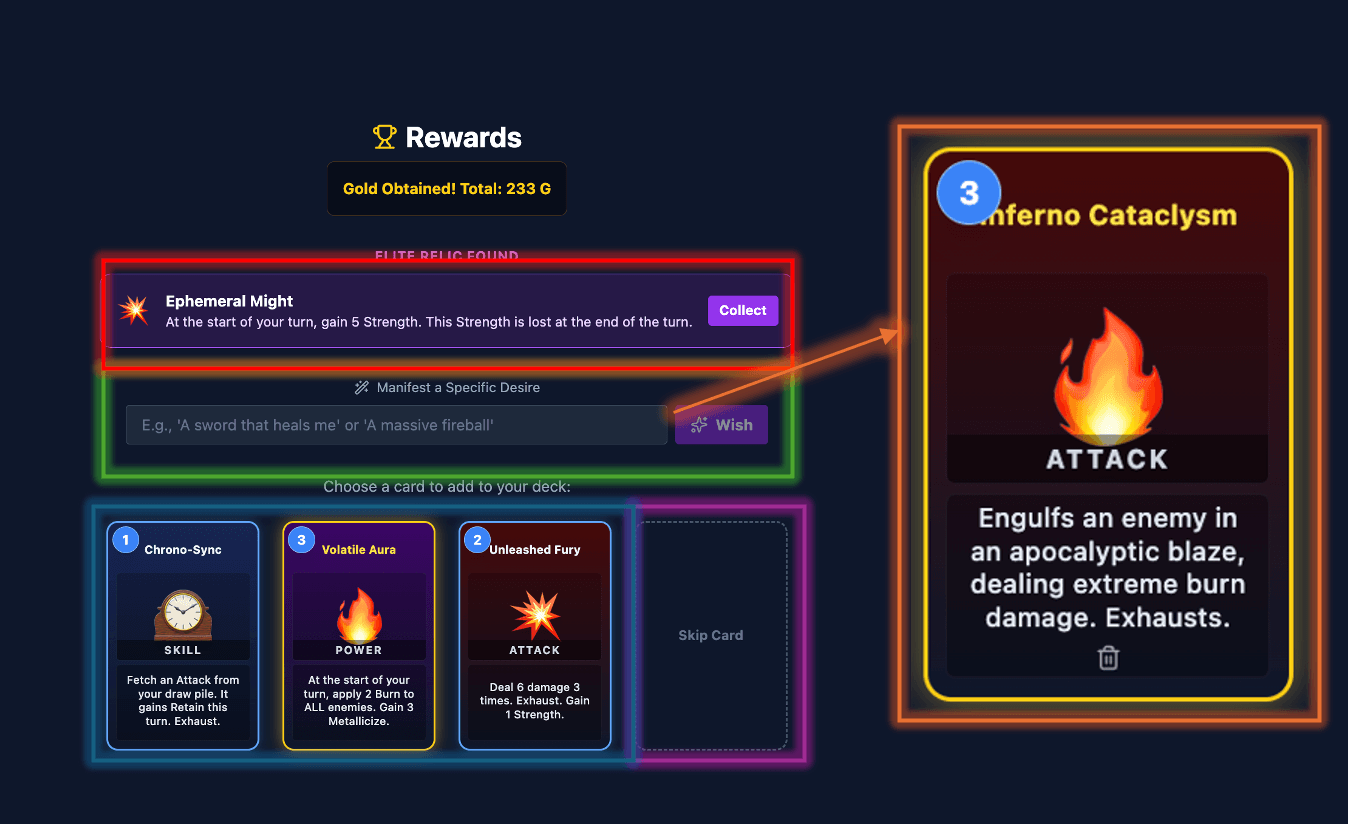

Players can collect an AI-generated elite relic, request a wish card through free text input (e.g., “a massive fireball”), or choose from three generated standard cards. The system turns natural language wishes into playable card effects. | Image: Feng et al.

Other demos include a sandbox simulation called “AI Alchemy” where elements react with each other and the AI suggests new reaction rules, a 3D planet explorer (“Cosmic Voyager”) with ongoing AI commentary, a generator for Wikipedia-style articles (“WWMPedia”), and a system for generative long-form literature (“Bookshelf”).

What this could mean for AI agent training

The researchers position their work as a middle ground between rigid database applications and uncontrollable generative systems. Web world models aim to combine the reliability of classic web development with the flexibility of language models.

This could prove relevant for AI agent development. Agents that perform tasks on their own need training environments that are consistent enough for meaningful learning, yet flexible enough to handle unforeseen situations.

How well the approach scales with more complex interactions remains unclear. The demos are impressive but relatively straightforward. The researchers don’t show whether web world models work when many agents act at the same time or when rules need to change on the fly.

Research into training environments for AI agents is picking up steam. A recent study by Microsoft Research and US universities shows that fine-tuned LLMs can fill this role and predict environmental conditions with over 99 percent accuracy. Turing Award winner Richard Sutton sees such world models as key to experience-based learning for future AI agents.