SC25 is less than two months away, which means it’s a good time to take a look at the market for HPC systems and solutions. Since AI is the driving force in HPC these days, a peek at the HPC numbers invariably means analyzing AI, too.

There are several good sources of public information about the HPC market. One of those is Hyperion Research, which spun out of IDC several years ago. According to figures it shared earlier this year, the market for HPC and technical computing is growing quickly at the moment, thanks to AI, and was worth about $60 billion in 2024.

Hyperion's breakdown shows that on-prem servers accounted for about 42% of that $60-billion figure (about $25 billion), while services accounted for 21% ($12.6 billion), storage for 17% (about $10 billion), the cloud for 15% ($9 billion), and software for 5% ($3 billion).
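
For readers who want to check the math, the dollar figures follow from applying Hyperion's percentage shares to the roughly $60 billion total. Here is a minimal Python sketch. It assumes the shares apply to a flat $60 billion total, and the rounding is ours, not Hyperion's.

```python
# Hyperion's 2024 HPC/technical computing market, as reported in the article.
# Assumption: the percentage shares apply to a flat $60 billion total.
TOTAL_BILLIONS = 60.0

SHARES = {
    "on-prem servers": 0.42,
    "services": 0.21,
    "storage": 0.17,
    "cloud": 0.15,
    "software": 0.05,
}


def segment_dollars(total, shares):
    """Convert fractional shares of a total into dollar amounts (billions)."""
    return {name: round(total * share, 1) for name, share in shares.items()}


if __name__ == "__main__":
    # The shares should cover the whole market.
    assert abs(sum(SHARES.values()) - 1.0) < 1e-9
    for name, dollars in segment_dollars(TOTAL_BILLIONS, SHARES).items():
        print(f"{name:>16}: ${dollars:.1f}B")
```

Run as-is, it gives $25.2 billion for on-prem servers and $10.2 billion for storage, which the article rounds to "about $25 billion" and "about $10 billion."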

Spending on on-prem servers grew faster than spending in the cloud, according to Hyperion, which found the on-prem segment grew 23.4% while the cloud segment grew at a still-healthy 21.3% clip. Hyperion pointed out that the 23.4% increase in spending on on-prem systems was the highest one-year increase in more than two decades. The AI boom is lifting all HPC boats, but it appears to be lifting on-prem boats just a little bit more.

What’s interesting is that the cloud/on-prem split has not changed much over the past three years, a span that reaches back to the start of the GenAI boom. Back in 2022, as John Russell noted in this HPCwire story, Hyperion found that on-prem servers accounted for 41% of spending, while cloud and storage each accounted for 17%. Those shares are not far off the latest figures. The biggest difference, of course, is the denominator: going from $37.3 billion in worldwide HPC-AI spending to $60 billion is a big change.

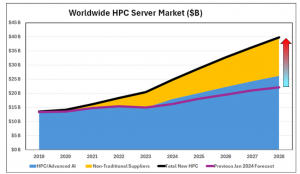

Worldwide HPC server revenue (Source: Hyperion Research)

Another good source of market data in the HPC world is Intersect360 Research. Addison Snell and company gather their own data and slice it a little bit differently. According to research it released in July, the combined HPC-AI and enterprise AI segments accounted for $60.1 billion in spending in 2024, which represented a 24.1% increase over the prior year.

The individual buckets that make up the combined HPC-AI and enterprise AI pool include hyperscale AI, AI-focused cloud services, national sovereign AI data centers, HPC-AI (non-cloud), and enterprise AI (non-HPC, non-cloud). Intersect360 adds two non-accelerated buckets, which it dubs hyperscale and enterprise, to complete its market analysis.

Intersect360 found that on-premises HPC-AI and enterprise AI servers (excluding hyperscale) accounted for $19.2 billion in spending in 2024, a 36.8% increase over 2023. “This sharp rise was driven by a wave of GPU-enabled system refreshes and strong enterprise demand across industries,” Snell and company wrote.

They added: “The growth was particularly concentrated in the supercomputing and high-end server classes, which benefited from large-scale AI model training initiatives and infrastructure modernization efforts. Meanwhile, entry-level and midrange server classes also saw meaningful gains, suggesting a widening base of AI adoption across organizational sizes.”

Hyperion found that the middle of the HPC/AI market was the fastest growing in 2024. Large HPC systems, or those that cost from $1 million to $10 million, accounted for just over $7 billion in spending. That was followed closely by supercomputer-class systems, which Hyperion defines as systems that cost between $10 million and $150 million; this sector accounted for $6.9 billion in spending. Entry-level HPC systems (less than $250,000) totaled $6.2 billion in spending, while the medium HPC sector ($250,000 to $1 million systems) had $4.0 billion in spending. Leadership-class machines, or those that cost more than $150 million, were the smallest sector, accounting for only $1.2 billion in spending.
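
As a sanity check, the five price bands should add up to roughly the $25 billion on-prem server figure. The Python sketch below encodes the bands as described above. Treating each lower bound as inclusive and each upper bound as exclusive is our assumption, since Hyperion's exact boundary rules aren't given here. Likewise, `classify_system` is a hypothetical helper for illustration, not a Hyperion tool.

```python
# Hyperion's 2024 on-prem HPC server spending by system price band, as
# reported in the article. Prices are in US dollars, spending in $ billions.
# Assumption: lower bound inclusive, upper bound exclusive.
SIZE_CLASSES = [
    # (class, lower bound, upper bound or None, 2024 spending in $B)
    ("Entry-level", 0, 250_000, 6.2),
    ("Medium", 250_000, 1_000_000, 4.0),
    ("Large", 1_000_000, 10_000_000, 7.0),  # "just over $7 billion"
    ("Supercomputer", 10_000_000, 150_000_000, 6.9),
    ("Leadership", 150_000_000, None, 1.2),
]


def classify_system(price):
    """Return the price band for one system (hypothetical helper)."""
    for name, low, high, _ in SIZE_CLASSES:
        if price >= low and (high is None or price < high):
            return name
    raise ValueError("price must be non-negative")


def total_spending():
    """Sum spending across all five bands, in $ billions."""
    return round(sum(spend for *_, spend in SIZE_CLASSES), 1)


if __name__ == "__main__":
    print(classify_system(5_000_000))
    print(total_spending())
```

The bands sum to about $25.3 billion, which lines up with the roughly $25 billion on-prem server figure cited earlier (treating "just over $7 billion" as $7.0 billion).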

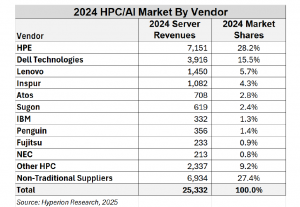

Worldwide HPC server vendor share (Source: Hyperion Research)

There is a considerable amount of attention focused on the extreme high end of the HPC/AI market, dubbed exascale. Over the next few years, Hyperion predicts, one or two exascale systems will go online in the US, each with a value ranging from $275 million to $350 million. At the same time, Europe will see two or three such systems go online, while China will see an average of two per year, the research firm says. Japan may add two or three exascale systems, and other countries will install two or three per year, according to Hyperion. All told, that works out to anywhere from 28 to 39 exascale systems installed through 2028, driving $7 billion to $10.3 billion in spending.

In terms of vendors, HPE and its HPE Cray Supercomputing subsidiary accounted for the biggest chunk of the HPC and AI market, with more than $7.1 billion in 2024 revenues, a better than 28% share, according to Hyperion’s analysis. That was followed by Dell Technologies ($3.9 billion, 15.5% share); Lenovo ($1.5 billion, 5.7% share); and Inspur ($1.1 billion, 4.3% share). Rounding out the list were Atos, Sugon, IBM, Penguin Solutions, Fujitsu, and NEC. “Other HPC” vendors moved $2.3 billion worth of servers, equal to a 9.2% share, while “non-traditional suppliers” sold $6.9 billion worth of servers, or 27.4% of the overall market.

So, who are these non-traditional suppliers? Nvidia arguably is the biggest one, as it makes the GPUs that are essential for running both AI and traditional modeling and simulation workloads. Nvidia is also increasingly a system provider in its own right, thanks to its DGX and NVL72 platforms. Supermicro is another non-traditional supplier, as are AI chipmakers like Cerebras and SambaNova.

As Hyperion points out, the servers built by these non-traditional suppliers still represent just a portion of the overall market. They haven’t traditionally been accounted for in the overall HPC market numbers (hence the term “non-traditional”). However, they are growing quite fast, thanks to the generative AI boom sparked by OpenAI’s launch of ChatGPT in late November 2022, which morphed into the agentic AI boom in early 2025 that we are currently working our way through.

It shouldn’t be surprising that AI accounts for much of the growth in the HPC-AI market. The portion of the overall market that’s focused on traditional HPC workloads is still bigger than the AI-focused portion, according to Hyperion. However, the AI portion is growing much more quickly.

Nvidia NVL72 is an example of “non-traditional” HPC gear

According to Hyperion’s figures, AI-focused HPC drove perhaps $1 billion in spending in 2020. The firm projects that AI-focused spending will overtake spending on traditional HPC workloads sometime in the next year or so, and cross the $20 billion threshold perhaps in 2027. By 2028, it should account for more than $22 billion in spending, while traditional HPC-focused servers move perhaps $18 billion in value.

Growing AI-focused HPC server spending from about $1 billion in 2020 to more than $22 billion in 2028 corresponds with a 47% compound annual growth rate (CAGR). The market for traditional HPC-focused servers, on the other hand, is forecast to go from roughly $13 billion in 2020 to roughly $18 billion in 2028, which corresponds with a 4% CAGR. Clearly, AI is the focus for HPC now, and it will continue to be for the foreseeable future.
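
Both CAGR figures can be reproduced from the start and end points quoted above with the standard formula, (end / start) ** (1 / years) - 1. Here is a short Python sketch. The inputs are Hyperion's approximate figures as given in this article, and 2020 through 2028 is treated as eight compounding years.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Hyperion's approximate server spending, in $ billions, per the article.
YEARS = 2028 - 2020  # eight compounding periods
ai_hpc = cagr(1.0, 22.0, YEARS)
traditional_hpc = cagr(13.0, 18.0, YEARS)

if __name__ == "__main__":
    print(f"AI-focused HPC servers:  {ai_hpc:.0%}")
    print(f"Traditional HPC servers: {traditional_hpc:.0%}")
```

This prints 47% and 4%, matching the rates above. Because the AI endpoint is "more than $22 billion," 47% should be read as roughly a floor for that segment's growth rate.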

The growth in AI led Intersect360’s Snell to conclude that AI has eclipsed HPC in importance. Back at the beginning of the AI boom, HPC people started calling it the HPC-AI market, he said. “We gave talks about how HPC would enable AI, and AI would enhance HPC in this beautiful, symbiotic relationship,” he wrote in a June HPCwire story.

However, the happy story once predicted may turn out to be a fairy tale. “HPC claims this relationship with AI, but AI doesn’t necessarily make the same claim about HPC,” Snell wrote. “AI isn’t part of the HPC market. Not anymore. It’s the other way around.”

With that said, there does appear to be considerable crossover between the two sub-sectors of the market. According to Hyperion, it’s not unusual to find AI-focused HPC systems running traditional HPC workloads some of the time. At the same time, some HPC-focused systems are running AI workloads.

As government labs, universities, energy companies, manufacturers, and others begin bringing agentic AI into more traditional HPC workloads as part of the push for AI for science and engineering, the AI-focused segment of the overall pie will inevitably grow. The folks at the Trillion Parameter Consortium (TPC) and others certainly see a future for AI helping to accelerate traditional HPC workloads.

Could using agentic AI to unleash the productivity of scientists, and thus the productivity of science itself, lead to a boom in traditional HPC workloads? It’s not entirely outside the realm of possibility. Predictions and forecasts are imperfect and have a habit of being wrong. Perhaps this will be one of those cases.