Quantum computing represents uncharted territory for high-performance computing centers, with few, if any, definitive waypoints to guide deployment. But in their pioneering effort to connect quantum and classical supercomputers, HPC facilities have an opportunity to lean on some mainstay HPC technologies to bridge the gap.

One such time-tested technology is InfiniBand, which emerged in 1999 as a data interconnect in the HPC sector. An InfiniBand backplane offers high bandwidth and low latency in the HPC environment. It’s often used to connect compute nodes to each other and to storage systems.

Those InfiniBand attributes now stand to play a role in emerging fields such as AI and quantum computing.

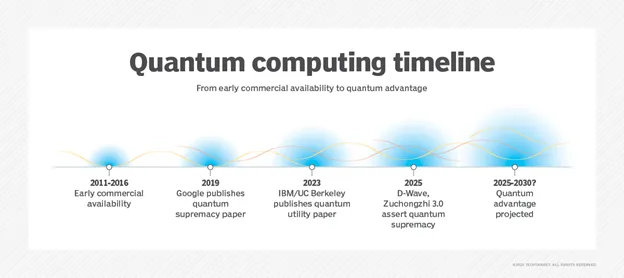

(Source: TechTarget) HPC centers are exploring quantum integration amid the expected arrival of quantum advantage and early fault-tolerant systems.

An unexpected twist

InfiniBand came up, somewhat unexpectedly, in Oak Ridge National Laboratory’s on-premises quantum computing deployment. In July, the Department of Energy lab worked with hardware maker Quantum Brilliance to install and integrate its diamond-based quantum computers.

At the start of the deployment, the issue of storage arose in the lab’s discussions with Quantum Brilliance, said Travis Humble, director of the Quantum Science Center at Oak Ridge. The lab had previously accessed quantum computers in the cloud, rather than on-site, so storage wasn’t a prime consideration.

“Everybody just kind of looked at each other: ‘Do we need storage?’” Humble recalled. “That’s the kind of question that you don’t really ask yourself until you are forced to think about putting things together that you’ve never tried before.”

The lab pointed to its existing storage infrastructure, which is based on InfiniBand and a couple of other technologies. Humble said the lab knew that InfiniBand might prove more than it needed, at least initially.

“The quantum computers themselves are generating relatively small amounts of data, certainly by our modern standards,” Humble said. “So, InfiniBand and even 10 gigabit Ethernet are kind of overkill for the bandwidth requirements that we have at the moment.”

AI boosts InfiniBand technology sales

While the lab assesses how InfiniBand might fit into its quantum strategy, the interconnect technology is experiencing something of a renaissance amid the AI boom. The technology’s prospects in that market could carry over into quantum computing.

A September report from market researcher Dell’Oro Group noted a second-quarter surge in InfiniBand switch sales within AI back-end networks. According to Dell’Oro, demand for those switches increased due to customer adoption of Nvidia’s Blackwell Ultra platform, a high-end AI data center GPU.

Sameh Boujelbene, a vice president analyst at Dell’Oro, noted that InfiniBand was originally purpose-built for classic HPC applications, which is why it now meets the stringent speed, latency and losslessness requirements for AI workloads.

“For the same reason, we expect InfiniBand to also be well-positioned for quantum computing because its ultra-low latency and RDMA [Remote Direct Memory Access] features fit the tight-coupling quantum workloads demand,” she said.

In the long term, however, InfiniBand may face competition from Ethernet and Ultra Ethernet Consortium technologies, as well as from custom interconnects, Boujelbene said.

For more insights from John Moore on quantum computing, check out the article: Oak Ridge Puts Quantum Supercomputer Integration to the Test.