The Ray-Ban Meta Display has been the most discussed device at Meta Connect, but another piece of technology announced at Connect impressed me much more than the new smartglasses: Meta Horizon Hyperscape Capture, which is probably one of the most mind-blowing things I’ve tried lately… and I’m not easy to impress.

You can watch this video to see my full capture and rendering of a place using Hyperscape Capture!

This is not the first time I’ve said that something in the Meta Horizon Hyperscape suite impressed me: last year, Meta released the Meta Horizon Hyperscape beta, and I already reviewed it very favorably. Meta Horizon Hyperscape beta let you teleport into six test environments that had been scanned by Meta employees and were rendered using Gaussian Splatting. Rendering was cloud-streamed from Meta servers via the Avalanche service, which let Meta provide a high-quality render of the environments. In my review, I highlighted that the environments were not perfect but were very well scanned, and from some points of view I really had the impression of being there. It really felt like a magical teleport.

These paintings I saw using Horizon Hyperscape looked amazing. If I told you that this is an image I took in a physical place, you would believe me

After that test, I tried other similar services like Gracia and Varjo Teleport, which are also good. Both let users make their own scans, and when I spoke with other people in the community, the feedback about Meta Horizon Hyperscape was often something along the lines of “yes, it is cool, but there are only 6 environments, and they were surely scanned by Meta employees with super-advanced tools”.

Say no more: at Meta Connect, Mark Zuckerberg announced the beta release of Meta Horizon Hyperscape Capture, an app to scan your places directly with your Quest 3 headset. After the scan, you can visit them exactly as they were, just as you could with the six demo environments. The vision, Zuck said, is to let people scan their environments and, in the future, upload them to Horizon Worlds or other multiplayer experiences so that they can visit them with their peers.

I went hands-on with the beta of Meta Horizon Hyperscape Capture on my Quest 3, and in the remainder of this article, I’m going to tell you everything about it.

Prerequisites

To try Meta Horizon Hyperscape Capture, you must satisfy these prerequisites:

You must have a Quest 3/3S

You must be in the US. Actually, I’ve seen people in other countries download it, too. In the worst case, you can still use a VPN to get it

You must have at least v81 of the Quest runtime. At the time of writing, v81 is still in beta, so you need to join the PTC (Public Test Channel) to download it

You must have enough free storage on your device. A redditor reported that the app, which is still in beta, filled up the storage and made the Quest crash

The capture process

Once you are in the app, you can choose to scan a new place, view a place you have already scanned, or enjoy some preset scans made by Meta. I chose to perform a new scan.

The capture process consists of three steps.

During the first step of the capture, the room mesh is created live while you walk (Image by Meta)

In the first step, you walk around your room and scan it by looking at it with your headset. The more you walk around, the better the mesh of the environment that you see the system build around you. This step reminds me a bit of Room Setup. The system suggests you start by scanning from the four corners of the room: you go to each corner and look towards the center. During the whole scan process, the right controller vibrates to signal that the system is recording images. After you have scanned enough of the room, the system lets you click Next and move on. However, when that happened to me, there were still huge holes in the mesh, so I kept scanning until the system had created a satisfying mesh of the room. I suggest you do the same. This step took me around 4 minutes.

While I was re-scanning the room for the second step, the mesh disappeared to show me the places that I had re-scanned (Image by Meta)

In the second step, the system focuses on the details and suggests that you scan some important objects from different points of view. Varjo suggested the same thing when I tried Varjo Capture. The more viewpoints you scan a certain object from, the better it will be represented in the final reconstruction of the room. So I went around the table, the computer, the chair, some bags with souvenirs, and other relevant items in the room. I walked around them, looked at them from different points of view, and crouched to scan their lower parts. In the first phase, the reconstructed mesh is a visual indicator of the quality of your scan, but in the second phase, there is no visual feedback that tells you how well you are doing. The system shows you the mesh of the room, and as soon as you frame some part of it, the mesh in that spot disappears. This is a good reminder of the places you have visited, but it says nothing about how well you have scanned the details of the various parts of the room. A better gizmo would be needed. When you believe you’ve scanned the details enough, you can move on to the final part. This step took me around 9 minutes, because I carefully scanned multiple objects.
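
To make the idea of a better gizmo more concrete, here is a hypothetical Python sketch. Meta hasn’t said how Hyperscape Capture measures detail coverage, so this is not its method: it simply splits the horizontal circle around an object into equal sectors and reports the fraction of sectors from which at least one camera position observed the object. The 2D floor coordinates, the function name `azimuth_coverage`, and the sector count are all illustrative assumptions.

```python
import math


def azimuth_coverage(object_xy, camera_positions_xy, sectors=12):
    """Hypothetical coverage score: fraction of azimuth sectors around an
    object (on the floor plane) that contain at least one camera position.

    This is an illustration only, not how Hyperscape Capture works.
    """
    if sectors <= 0:
        raise ValueError("sectors must be positive")
    ox, oy = object_xy
    seen = set()
    for cx, cy in camera_positions_xy:
        # Direction of the camera as seen from the object, mapped to [0, 2*pi)
        angle = math.atan2(cy - oy, cx - ox) % (2 * math.pi)
        # Index of the sector this direction falls into (modulo guards rounding)
        seen.add(int(angle / (2 * math.pi) * sectors) % sectors)
    return len(seen) / sectors


if __name__ == "__main__":
    table = (0.0, 0.0)
    one_side = [(1.0, 1.0), (2.0, 2.0), (1.0, 0.5)]
    all_around = [(1.0, 1.0), (-1.0, 1.0), (-1.0, -1.0), (1.0, -1.0)]
    print(azimuth_coverage(table, one_side, sectors=4))    # 0.25
    print(azimuth_coverage(table, all_around, sectors=4))  # 1.0
```

Walking all the way around the table scores 1.0, while looking at it from one side only leaves most sectors empty. A real indicator would also need to account for height, which matters when crouching to capture lower parts, and for distance, both of which this sketch ignores.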

Scanning the ceiling for the third phase. Notice the straight lines along the edges of the walls. At this point, the system had already detected the shape of my room

In the third and last step, you have to look up at your ceiling and scan it again. Meta probably noticed in its tests that people usually forget to look up and scan the ceiling, so it provided a dedicated step just for it. Ceilings are also hard to reconstruct because they are mostly monochrome, so it’s better to provide more frames of them. In this step, you can see that the system has already detected the walls and the desks in the room (as after Room Setup), and an indicator shows you which parts of the ceiling you still have to scan. You can also scan things other than the ceiling if you think some objects need more coverage. This step took me around 3 minutes.

The whole process took me around 15-16 minutes. I appreciated being guided through the whole process with visual indicators and explanatory videos at every step.

Uploading the data after the capture

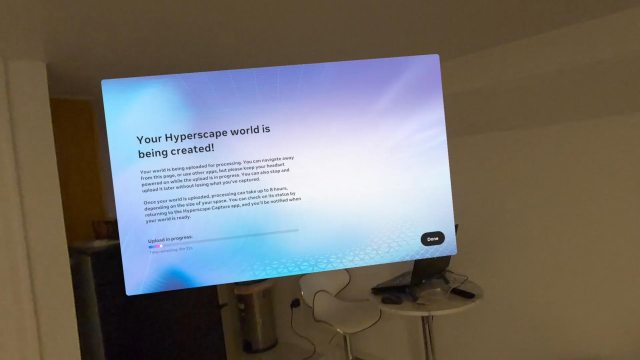

After the scan is completed, the system asks you to give it a name and a description. Once you provide them, the upload starts. The previous steps happened on the device, with the Quest taking a lot of “pictures” of my room while I was moving around. Now all this data has to be uploaded to Meta’s servers, which transform these captures into the fully reconstructed room. Reconstructing an environment with Gaussian Splats can’t happen on the device, unless you want your Quest to explode, so the data must go to the cloud. The upload took around 10 minutes, and I could let the Quest go into standby and do other things in the meantime.

After the upload, the reconstruction process usually takes around 4 hours, though people in the community have reported longer or shorter times depending on the size of the room and the complexity of the reconstruction.

How to ensure a good capture

A user scanning his room with his Quest (Image by Meta)

From my experience, to ensure a good capture, you must:

Scan your room thoroughly. Elements with small details should be scanned up close and from different points of view. In general, the more you scan an object, the better it will be rendered

Move slowly: the faster you move, the blurrier the pictures the system takes, and the worse the capture. This is harder than it sounds, because we are used to turning our heads pretty quickly. Several times, a pop-up warned me to move slowly during my capture. So if you can, move and rotate your head slowly

Do not put your hands in front of the camera: you should scan the room, not your body, so keep your arms and hands along your sides. If you don’t, a pop-up warning will appear. To be honest, the pop-up also appeared when I was not doing that

Have proper lighting: elements that are too bright or too dim usually do not come out very well

Avoid reflective surfaces like mirrors

Choose a space that is not fully monochrome but has some texture. Having some stretches of plain white wall is not an issue, though

Keep your objects still: if you move an object during the scan process, it is going to have halos and artifacts around it
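
Why moving slowly matters can be estimated with a rough rule of thumb: if the camera rotates at angular speed ω during an exposure of duration t, a point near the image center smears across roughly f·ω·t pixels, where f is the focal length expressed in pixels (small-angle approximation). The Python sketch below applies it; the exposure time, focal length, and head speeds are illustrative assumptions, not published Quest 3 camera specifications.

```python
import math


def rotational_blur_px(head_speed_deg_s, exposure_ms, focal_length_px):
    """Approximate motion blur, in pixels, caused by rotating the camera
    during one exposure (small-angle approximation, near the image center).

    All inputs are assumptions for illustration, not Quest 3 specs.
    """
    omega = math.radians(head_speed_deg_s)  # angular speed in rad/s
    exposure_s = exposure_ms / 1000.0       # exposure time in seconds
    # Angle swept during the exposure, converted to pixels via focal length
    return omega * exposure_s * focal_length_px


if __name__ == "__main__":
    for speed in (100, 20):  # a quick head turn vs. a slow, deliberate one
        print(speed, "deg/s ->", round(rotational_blur_px(speed, 10, 500), 1), "px")
```

With these assumed numbers, a 100°/s head turn with a 10 ms exposure and a 500-pixel focal length smears a point over about 8.7 pixels, while turning at 20°/s cuts that to about 1.7 pixels: the blur grows linearly with rotation speed.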

Privacy concerns

Many people went on social media to complain that the terms of service of Horizon Hyperscape allow Meta to take possession of your capture, and hence of the data of your room. I think this is good on one side and bad on the other.

It is good because… Meta must do that. If you upload your data to its servers, the servers process the scan of your room, and Meta then retains the scan to cloud-stream it, Meta needs rights over the data. Otherwise, it simply can’t provide the service. Any company that offers a similar service (Apple included) will have similar terms and conditions. There is no way around it.

It is bad because, once they have this scan, they can potentially do whatever they want with it, like analyzing what objects you have and showing you targeted advertising. Meta is still an ad company, and in the past, it has proven to be pretty aggressive about its data collection, so we must be aware of this risk.

I think the best compromise for this kind of service would be to grant rights to the mesh and the photos of the room only for the purpose of providing the reconstruction and rendering service, and nothing else. Otherwise, the privacy concerns can be enormous, and we must be careful about them.

Just to be safe, I didn’t scan my home, but the B&B I rented while I was in New Jersey…

Hands-on with the resulting capture visualization

I did my scanning test in Jersey City on my last day in the US: I scanned my rented apartment, then caught my flight. Note that I did just one scan; I didn’t practice with many scans until I became a pro at the tool. And I’m not even used to doing photogrammetry, environment reconstruction, or things like that: I’ve done maybe a couple in my whole life. So I think I’ve been a good guinea pig: just a random VR dude scanning his room for the first time.

Now that I’m back in Italy, I decided to check how the scan turned out and to show the people around me what my apartment in the US looked like. I reopened Hyperscape Capture and saw that the reconstruction of my place was, of course, complete. I clicked on it to enter it and… holy moly.

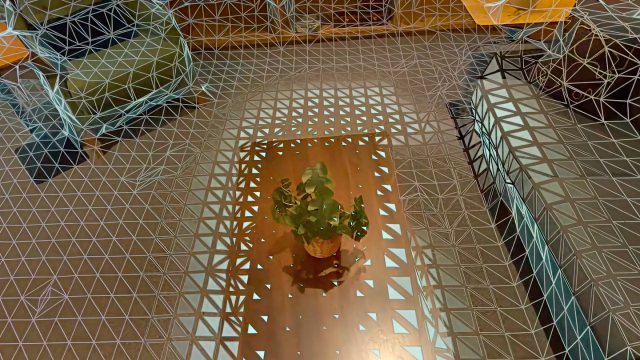

This is not a frame from a video I shot in my apartment. It is a frame of a video I shot inside the VR reconstruction of my apartment

The environment reconstruction was fucking good. As soon as I entered, I couldn’t help saying “Wow”, because I felt like I was back in the place I had been in a few days before. I was genuinely impressed. Again, it was my first scan, done in 15 minutes before rushing to the airport. And it was THAT good.

Being the precise guy that I am, I started walking around, looking at things up close, and noticing the imperfections. As I mentioned before, some areas had artifacts: in particular, the dimmer ones, the ones around the bright lines, the ones I had not scanned carefully enough, and the mirrors. The ceiling was also not that good, so I probably should have been more careful in the third step.

These surfaces look “painted”. These are typical artifacts of Gaussian Splats

Note that apart from the mirror, no area of the apartment was really bad. It’s just that some were more precise, and others showed some artifacts. The backpack that I accidentally kicked while I was scanning the place had a halo around it. The Razer logo on my laptop was very blurry since I never scanned it up close. Usually, artifacts showed up as blurred areas or as “brushes” around some parts of the scene.

What you see around my backpack is not its shadow, but a halo, because I moved it by mistake during the scanning procedure

But some parts I scanned really well looked almost real: the welcome mat was very realistic, and so were the plastic plants in the room. The bed was just as I had left it. I could even peek inside the bag with the souvenirs! Some reflective surfaces, like the metallic base of the stool, were also reconstructed very well, and I could move my head to see the reflections change. I was also pleasantly surprised that the transparent table rendered well, and I could see through it. I could not believe how much I felt like I was really there… that I had such a good reconstruction with my first scan. Last year, I tried to scan a room using an iPhone with Varjo Teleport, and the results were mediocre. This time, with Hyperscape, my first scan was already great… and on a beta version of the software.

The transparent table: there are no artifacts due to its transparency. I can move my head and see the objects through it
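
The see-through table makes sense given how Gaussian Splatting typically renders a frame. In the original 3D Gaussian Splatting technique, the splats covering a pixel are sorted by depth and blended front to back: each one contributes its color weighted by its opacity times the fraction of light not yet absorbed by the splats in front of it. Meta hasn’t published the details of the Hyperscape renderer, so the Python sketch below shows only that generic blending step for a single pixel. The `(depth, rgb, alpha)` tuples are a hypothetical simplification: real splats also carry a 3D shape and view-dependent color, which in standard 3DGS is how reflections can shift as the viewpoint moves.

```python
def composite_front_to_back(splats):
    """Blend depth-sorted splats for one pixel (generic 3DGS-style blending).

    splats: list of (depth, (r, g, b), alpha) tuples, where alpha in [0, 1]
    is the splat's opacity already evaluated at this pixel (a simplification).
    Returns (rgb, remaining_transmittance).
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still passing through
    for _, rgb, alpha in sorted(splats, key=lambda s: s[0]):  # nearest first
        weight = alpha * transmittance
        for k in range(3):
            color[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is effectively opaque: stop early
            break
    return tuple(color), transmittance


if __name__ == "__main__":
    glass = (1.0, (0.8, 0.8, 0.8), 0.1)   # faint, almost transparent splat
    red_cup = (3.0, (1.0, 0.0, 0.0), 1.0)  # opaque object behind it
    print(composite_front_to_back([red_cup, glass]))
```

With these made-up values, the faint splat in front contributes only 10% of the pixel color and the opaque object behind it 90%, so the object still dominates: roughly what you see through a glass table.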

I could teleport around my room, not only on the floor but also on top of the bed and on the sofa, and see it from new angles. That was cool, too. Less cool was that when I started the experience, I found myself at the ceiling, with the floor height completely wrong. I solved this small issue by recentering my view with a long press of the Meta button on my controller.

One final note: since Horizon Hyperscape is cloud-rendered, make sure you have a good network connection. Without one, your experience may suffer.

Final impressions

I could really peek inside the bags with the souvenirs!

Horizon Hyperscape Capture is nothing short of impressive. I would say it is one of the most impressive things I have tried in XR in the last couple of years. When I tried Horizon Hyperscape last year, I thought those amazing environments required very expensive hardware operated by professionals. But now I realize that every one of us can obtain very good results using just the hardware we already own, that is, the Quest. I’ve heard of some people getting bad scans with this software, but most people in our community have had very positive results. This is a full democratization of environment scanning and reconstruction.

And the possibilities are endless. If Meta lets people upload these spaces to Horizon Worlds in the future, or lets us download the PLY file of the splat and use it elsewhere, we could create multiplayer experiences where people visit places around the world. I could, for instance, scan a house in China, invite my Italian friends there virtually, and show them what life is like there. Or I could scan my house now and show it to my future children in 20 years. And since the scans are so good, it will feel like being there. This can be revolutionary for tourism or real estate. It’s teleportation made real. And it can be a very good use case for selling virtual reality headsets (at least in the B2B space).

I think Meta created something incredible, and if I were them, I would give it much more spotlight than it is getting, because I think this technology can have really important applications. And I suggest that all of you running v81 of the runtime try it, because it is definitely worth it.

(Header image by Meta)

Disclaimer: this blog contains advertisement and affiliate links to sustain itself. If you click on an affiliate link, I’ll be very happy because I’ll earn a small commission on your purchase. You can find my boring full disclosure here.