Inefficient use of resources plagues modern GPU clusters, particularly those employing Multi-Instance GPU technology, as current scheduling methods treat tasks as inflexible units demanding dedicated time and memory. Michal Konopa, Jan Fesl, and Ladislav Beránek from the University of South Bohemia present a new approach, Scheduler-Driven Job Atomization, which fundamentally alters this interaction by enabling a dynamic exchange between the scheduler and individual jobs. This innovative paradigm allows the scheduler to advertise available processing windows, prompting jobs to respond by creating smaller, adaptable subtasks that precisely fit the offered capacity. By proactively shaping workloads before execution, rather than relying on costly migration or interruption, the team aims to dramatically increase GPU utilization, reduce waiting times, and minimise overhead, ultimately paving the way for more efficient and responsive high-performance computing. This work introduces the core concepts and building blocks of SJA, outlining a promising direction for future research in resource management.

slice until completion. The reliance on static peak memory estimates exacerbates fragmentation, underutilization, and job rejections. Scientists propose Scheduler-Driven Job Atomization (SJA), a new paradigm that establishes a bidirectional interaction between scheduler and jobs. In SJA, the scheduler advertises available execution gaps, and jobs respond by signaling interest if they can potentially generate a subjob that fits the offered time and capacity window. The scheduler selects which job receives the slot based on its allocation policy, such as fairness, efficiency, or service level agreement priorities. The core idea moves beyond traditional scheduling by establishing a bidirectional communication channel between the scheduler and the jobs themselves. This allows jobs to adapt to available resources, rather than the scheduler simply assigning them. Key Concepts and Benefits: * Adaptive Workloads: SJA decomposes workloads into smaller, adaptable subjobs designed to fit within the constraints of available MIG slices. This bidirectional communication is central to the approach. SJA prioritizes ensuring that each subjob can run successfully on its assigned slice, even if it means sacrificing some overall system-wide optimization. This reduces the need for complex planning and mid-run interventions. By focusing on local feasibility, SJA aims to minimize the overhead associated with migration, preemption, or complex scheduling algorithms.

The process involves: 1. Job Decomposition: A job is broken down into smaller, independent subjobs0. 2. Resource Profiling: Each subjob defines a probabilistic resource profile outlining its requirements0. 3.

Scheduler Offers: The scheduler identifies available MIG slices and offers them to jobs0. 4. Job Reply: Jobs evaluate the offer and determine if they can adapt their subjobs to fit the available resources, accepting the offer if possible0. 5. Execution: The subjob is executed on the assigned MIG slice.

Potential Advantages: * Higher GPU Utilization: By adapting to available resources, SJA can potentially fill more MIG slices. * Lower Wait Times: Jobs can be launched more quickly if they are willing to adapt to available resources. * Risk-Aware Scheduling: Ensuring local feasibility reduces the risk of job failures due to resource constraints. * Simplified Scheduling: Focusing on local feasibility reduces the complexity of the scheduling algorithm. Future Research Directions: The document outlines several areas for future research, including simulation-based validation, refined predictive models for resource needs, policy learning for subjob generation, practical prototyping in R/CUDA and Kubernetes, and extending the communication beyond prediction to include energy, accuracy, and quality of service considerations. This work defines a bidirectional communication model where the scheduler advertises available execution gaps and jobs signal interest in utilizing those gaps with self-contained subjobs. Unlike existing strategies that rely on costly state transfers or checkpointing, SJA proactively shapes workloads before execution, ensuring subjobs are tailored to allocated time windows. This approach avoids the overhead associated with migration, preemption, or moldable scheduling, which often require reassignment or reconfiguration of existing monolithic jobs.

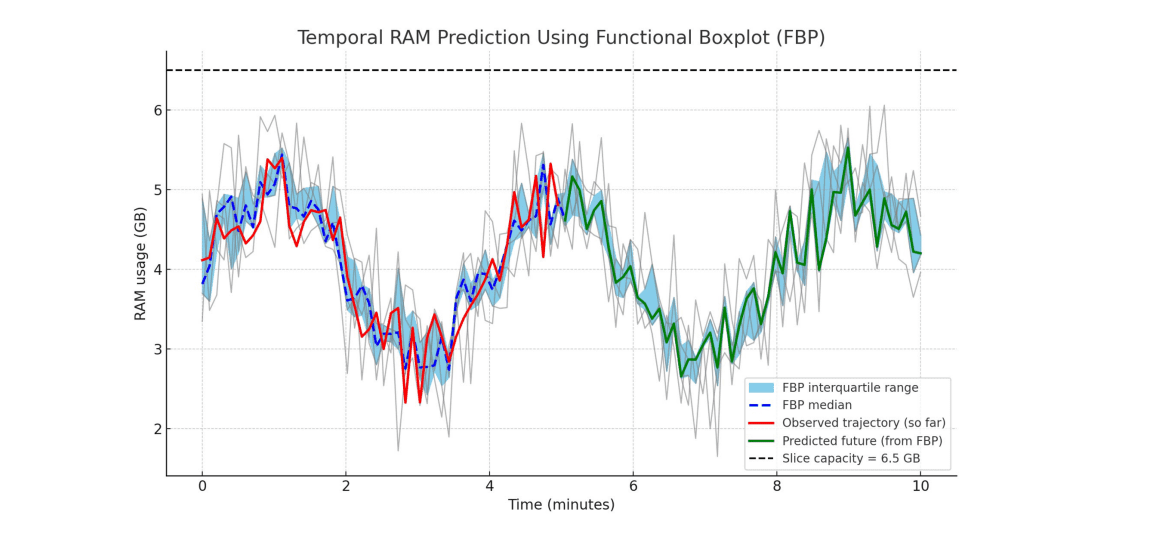

Researchers clarify key building blocks of SJA, including the concept of subjobs and the use of Temporal and Functional Memory Profiles to predict resource needs. This work introduces Temporal Resource Profiles as a general mechanism for probabilistic resource prediction, focusing specifically on Functional Memory Profiles as a primary instance. The team demonstrates how this bidirectional communication can convert fragmented GPU capacity into safe and efficient execution opportunities, allowing jobs to be decomposed into restartable subjobs. Recognizing inefficiencies caused by treating jobs as inflexible units, the researchers propose a bidirectional interaction between the scheduler and individual jobs. The scheduler advertises available execution windows, and jobs respond by creating smaller, self-contained subjobs that fit those specific opportunities. This proactive approach differs from traditional methods like migration or preemption by shaping workloads before execution, thereby avoiding costly interruptions and state transfers.

The key achievement of this research lies in establishing a protocol where jobs adaptively reshape themselves based on available resources, guided by the scheduler. By decomposing workloads into these dynamically sized subjobs, SJA aims to increase GPU utilization, reduce wait times, and improve overall scheduling efficiency. The researchers acknowledge that this initial work prioritizes local feasibility and responsiveness over global optimization, representing a deliberate trade-off to unlock substantial gains without excessive planning overhead. As a concept paper, this research deliberately focuses on establishing the principles of SJA rather than providing a full experimental evaluation. Future work will involve simulation-based validation, refinement of predictive models for subjob generation, and practical prototyping within existing software stacks like R/CUDA and Kubernetes. The team intends to explore policy learning to further optimize subjob creation and enhance the adaptability of the system.