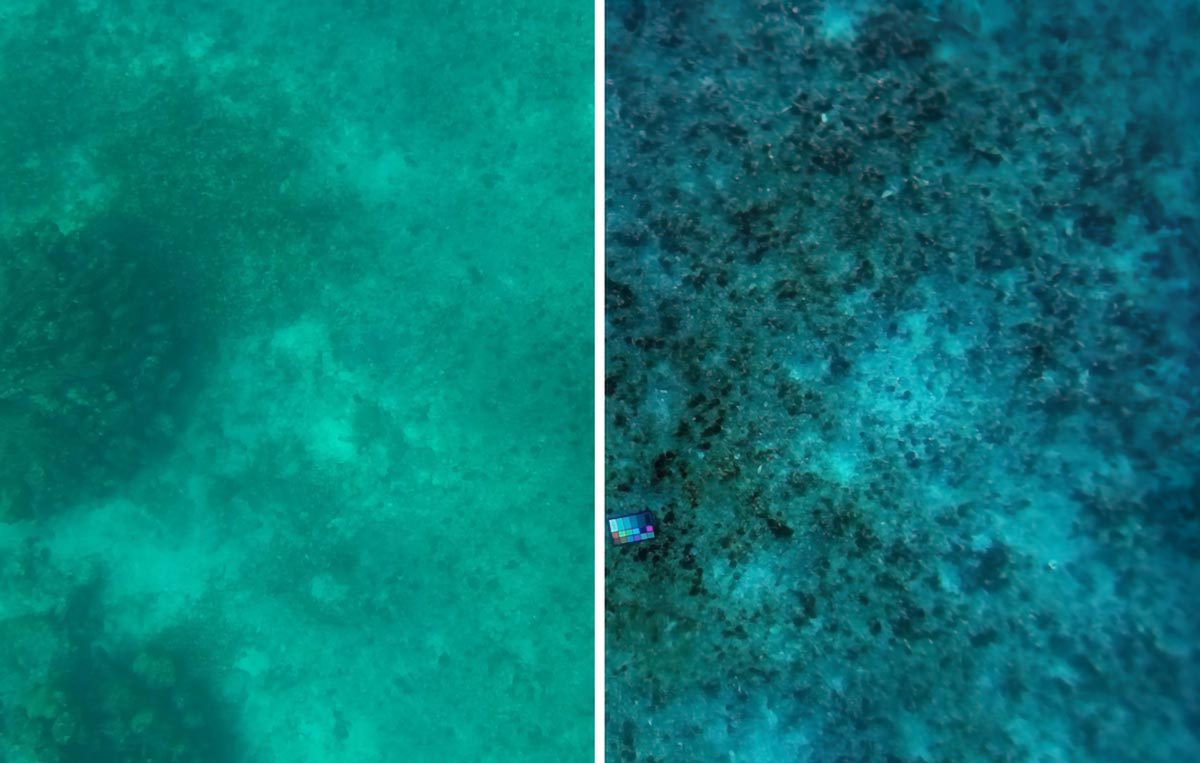

SeaSplat produces true-color images of an underwater scene, as captured by the MIT team’s underwater robot. The original photo is on the left, and the color-corrected version made with SeaSplat is on the right. Credit: Courtesy of Daniel Yang, John Leonard, Yogesh Girdhar

The color-correcting tool called “SeaSplat” shows underwater features in colors that appear more true to life.

The ocean is filled with life, yet much of it remains hidden unless observed at very close range. Water acts like a natural veil, bending and scattering light while also dimming it as it moves through the dense medium and reflects off countless suspended particles. Because of this, accurately capturing the true colors of underwater objects is extremely difficult without close-up imaging.

Researchers at MIT and the Woods Hole Oceanographic Institution (WHOI) have created an image-analysis system that removes many of the ocean’s optical distortions. The tool produces visuals of underwater scenes that appear as though the water has been removed, restoring their natural colors. To achieve this, the team combined the color-correction tool with a computational model that transforms images into a three-dimensional underwater “world” that can be explored virtually.

The team named the tool “SeaSplat,” drawing inspiration from both its underwater focus and the technique of 3D Gaussian splatting (3DGS). This method stitches multiple images together to form a complete 3D representation of a scene, which can then be examined in detail from any viewpoint.

“With SeaSplat, it can model explicitly what the water is doing, and as a result, it can in some ways remove the water, and produces better 3D models of an underwater scene,” says MIT graduate student Daniel Yang.

The researchers applied SeaSplat to images of the sea floor taken by divers and underwater vehicles, in various locations, including the U.S. Virgin Islands. The method generated 3D “worlds” from the images that were truer, more vivid, and varied in color, compared to previous methods.

Coral reefs and marine health

The researchers note that SeaSplat could become a valuable tool for marine biologists studying the condition of ocean ecosystems. For example, when an underwater robot surveys and photographs a coral reef, SeaSplat can process the images in real time and create a true-color, three-dimensional model. Scientists could then virtually “fly” through this digital environment at their own pace, examining it for details such as early signs of coral bleaching.

A new color-correcting tool, SeaSplat, reconstructs the true colors of an underwater image taken in Curaçao. The original photo is on the left, and the color-corrected version made with SeaSplat is on the right. Credit: Daniel Yang, John Leonard, Yogesh Girdhar

“Bleaching looks white from close up, but could appear blue and hazy from far away, and you might not be able to detect it,” says Yogesh Girdhar, an associate scientist at WHOI. “Coral bleaching, and different coral species, could be easier to detect with SeaSplat imagery, to get the true colors in the ocean.”

Girdhar and Yang will present a paper detailing SeaSplat at the IEEE International Conference on Robotics and Automation (ICRA). Their study co-author is John Leonard, professor of mechanical engineering at MIT.

Aquatic optics

Light behaves differently in water than in air, altering both the appearance and clarity of objects. Over the past several years, scientists have tried to design color-correcting methods to recover the original appearance of underwater features. Many of these efforts adapted techniques originally developed for use on land, such as those used to restore clarity in foggy conditions. One notable example is the algorithm “Sea-Thru,” which can reproduce realistic colors but requires enormous computing power, making it impractical for generating three-dimensional models of ocean scenes.

At the same time, researchers have advanced the technique of 3D Gaussian splatting, which allows images of a scene to be combined and filled in to create a seamless three-dimensional reconstruction. These models support “novel view synthesis,” enabling viewers to explore a 3D scene not only from the original vantage points of the images but also from any other angle or distance.

But 3DGS has only successfully been applied to environments out of water. Efforts to adapt 3D reconstruction to underwater imagery have been hampered mainly by two underwater optical effects: backscatter and attenuation. Backscatter occurs when light reflects off tiny particles in the ocean, creating a veil-like haze. Attenuation is the fading of light with distance, at a rate that depends on its wavelength. In the ocean, for instance, red objects appear to fade more than blue objects when viewed from farther away.
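To make the two effects concrete, here is a minimal Python sketch of a simplified underwater image formation model of the kind commonly used in this field: the recorded color is the true color dimmed exponentially with distance, plus a haze term that grows with distance. The function name `observe` and all coefficient values are illustrative assumptions, not figures from the SeaSplat paper.

```python
import math

# Illustrative per-channel water parameters (R, G, B), in 1/m.
# These are assumed values chosen for the demo, not measurements.
ATTENUATION = (0.40, 0.10, 0.05)    # red light fades fastest
BACKSCATTER = (0.30, 0.12, 0.08)    # how quickly the haze builds up
VEILING_LIGHT = (0.05, 0.35, 0.45)  # blue-green color of distant water


def observe(true_color, distance_m):
    """Return the color a camera would record for a surface at distance_m."""
    observed = []
    for j, beta_d, beta_b, b_inf in zip(
        true_color, ATTENUATION, BACKSCATTER, VEILING_LIGHT
    ):
        direct = j * math.exp(-beta_d * distance_m)            # attenuation
        haze = b_inf * (1.0 - math.exp(-beta_b * distance_m))  # backscatter
        observed.append(direct + haze)
    return tuple(observed)


red_coral = (0.8, 0.2, 0.2)
near = observe(red_coral, 1.0)   # still clearly red
far = observe(red_coral, 10.0)   # blue now dominates red
print("1 m: ", [round(c, 3) for c in near])
print("10 m:", [round(c, 3) for c in far])
```

With these assumed coefficients, the same red surface reads as red at one meter but as blue-green at ten, which is the distance-dependent color shift described above.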

Out of water, the color of objects appears more or less the same regardless of the angle or distance from which they are viewed. In water, however, color can quickly change and fade depending on one’s perspective. When 3DGS methods attempt to stitch underwater images into a cohesive 3D whole, they are unable to resolve objects due to aquatic backscatter and attenuation effects that distort the color of objects at different angles.

“One dream of underwater robotic vision that we have is: Imagine if you could remove all the water in the ocean. What would you see?” Leonard says.

In their new work, Yang and his colleagues developed a color-correcting algorithm that accounts for the optical effects of backscatter and attenuation. The algorithm determines the degree to which every pixel in an image must have been distorted by backscatter and attenuation effects, and then essentially takes away those aquatic effects, and computes what the pixel’s true color must be.
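The per-pixel correction can be pictured as running a simple water model backward: subtract the estimated haze, then undo the distance-dependent fading. The sketch below is only an illustration under strong assumptions. It treats the water coefficients and the distance to each pixel as already known, and the function name `restore_pixel` and all numbers are hypothetical. How SeaSplat itself estimates these quantities is not covered here.

```python
import math


def restore_pixel(observed, distance_m, attenuation, backscatter, veiling_light):
    """Estimate a pixel's water-free color from its observed color.

    All water parameters are per-channel (R, G, B) and assumed known.
    """
    restored = []
    for i_c, beta_d, beta_b, b_inf in zip(
        observed, attenuation, backscatter, veiling_light
    ):
        haze = b_inf * (1.0 - math.exp(-beta_b * distance_m))
        direct = max(i_c - haze, 0.0)                    # remove backscatter
        color = direct * math.exp(beta_d * distance_m)   # undo attenuation
        restored.append(min(color, 1.0))                 # keep within [0, 1]
    return tuple(restored)


# Hypothetical inputs: a hazy, bluish pixel seen from 3 m away.
hazy_pixel = (0.27, 0.25, 0.27)
restored = restore_pixel(
    hazy_pixel,
    distance_m=3.0,
    attenuation=(0.40, 0.10, 0.05),
    backscatter=(0.30, 0.12, 0.08),
    veiling_light=(0.05, 0.35, 0.45),
)
print([round(c, 3) for c in restored])
```

Because the correction multiplies by a growing exponential, errors in the distance or coefficient estimates are amplified for far-away pixels, which is one reason the clamp to the valid color range is included in this sketch.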

Yang then worked the color-correcting algorithm into a 3D Gaussian splatting model to create SeaSplat, which can quickly analyze underwater images of a scene and generate a true-color, 3D virtual version of the same scene that can be explored in detail from any angle and distance.

Testing across oceans

The team applied SeaSplat to multiple underwater scenes, including images taken in the Red Sea, in the Caribbean off the coast of Curaçao, and the Pacific Ocean, near Panama. These images, which the team took from a pre-existing dataset, represent a range of ocean locations and water conditions. They also tested SeaSplat on images taken by a remote-controlled underwater robot in the U.S. Virgin Islands.

From the images of each ocean scene, SeaSplat generated a true-color 3D world that the researchers were able to virtually explore, for instance, zooming in and out of a scene and viewing certain features from different perspectives. Even when viewing from different angles and distances, they found objects in every scene retained their true color, rather than fading as they would if viewed through the actual ocean.

“Once it generates a 3D model, a scientist can just ‘swim’ through the model as though they are scuba-diving, and look at things in high detail, with real color,” Yang says.

For now, the method requires hefty computing resources in the form of a desktop computer that would be too bulky to carry aboard an underwater robot. Still, SeaSplat could work for tethered operations, where a vehicle, tied to a ship, can explore and take images that can be sent up to a ship’s computer.

“This is the first approach that can very quickly build high-quality 3D models with accurate colors, underwater, and it can create them and render them fast,” Girdhar says. “That will help to quantify biodiversity, and assess the health of coral reefs and other marine communities.”

Reference: “SeaSplat: Representing Underwater Scenes with 3D Gaussian Splatting and a Physically Grounded Image Formation Model” by Daniel Yang, John J. Leonard and Yogesh Girdhar, 25 September 2024, arXiv.

DOI: 10.48550/arXiv.2409.17345

This work was supported, in part, by the Investment in Science Fund at WHOI, and by the U.S. National Science Foundation.
