Mobile devices are fast becoming AI powerhouses, with users expecting instant responses, smarter personalization, and real-time processing without relying on cloud connectivity. As mobile AI experiences become more intelligent, immersive, and demanding – from ultra-responsive apps and low-latency AI assistants to advanced camera features and real-time speech processing – the need for high-performance, power-efficient compute at the edge has never been greater.

As part of a heterogeneous computing approach, CPUs play a pivotal role in the increasing shift to on-device AI. Arm CPUs feature in billions of mobile devices worldwide and are the preferred choice for millions of third-party apps due to their:

Exceptional performance across AI workloads;

Optimized inference for real-time AI apps;

Superior power-efficiency for constrained devices; and,

Scalability across ecosystems and markets.

As part of the new Arm Lumex compute subsystem (CSS) platform, the Arm C1 CPU cluster – the first built on the Armv9.3 architecture – is the next evolution of our highest performing CPU cluster for consumer devices, designed to unleash the full potential of on-device AI and elevate the user experience.

The High-Performance Arm C1 CPU Cluster for the AI Era

The highest performance C1 CPU cluster integrates the new Arm C1-Ultra CPU alongside a flexible combination of Arm C1-Premium, C1-Pro and C1-Nano CPU cores to deliver a series of performance and power-efficiency improvements that can be tailored to specific partner needs. The Arm Scalable Matrix Extension 2 (SME2) is also built directly into the C1 CPUs via the Armv9 architecture, a game-changer for accelerated AI experiences.

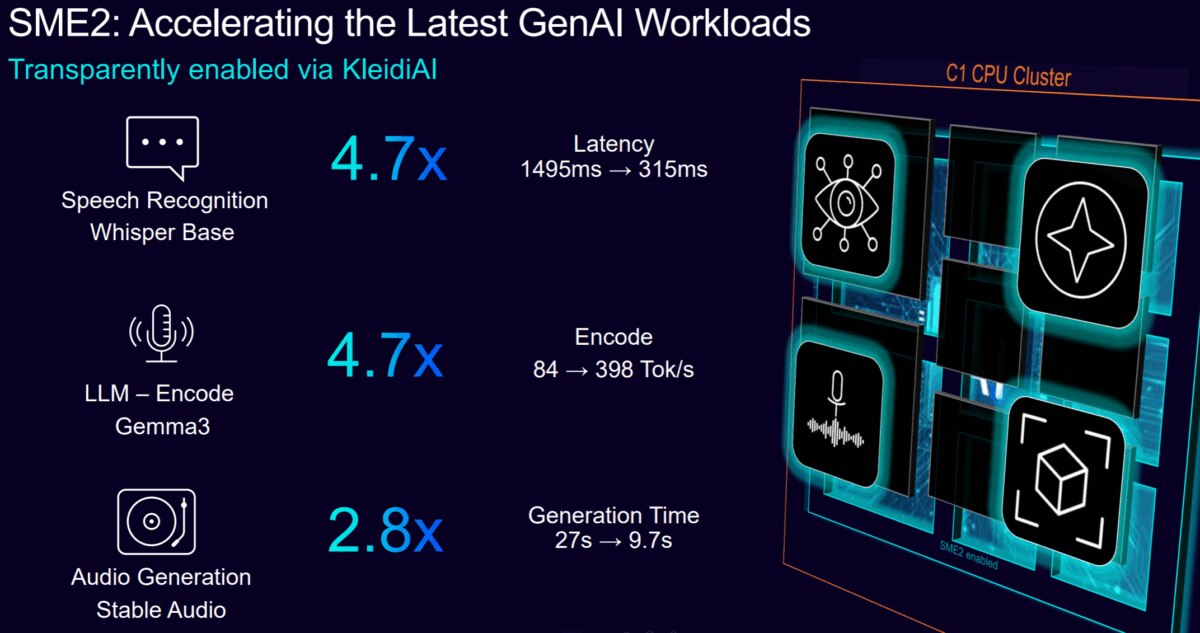

For generative AI, speech recognition, classic machine learning (ML) and computer vision (CV) workloads, the SME2-enabled Arm C1 CPU cluster achieves up to 5x AI speed-up compared to the previous generation CPU cluster under the same conditions. Meanwhile, through SME2, the CPU cluster delivers up to 3x improved efficiency. These AI performance and efficiency gains directly translate into more seamless, responsive on-device user experiences.

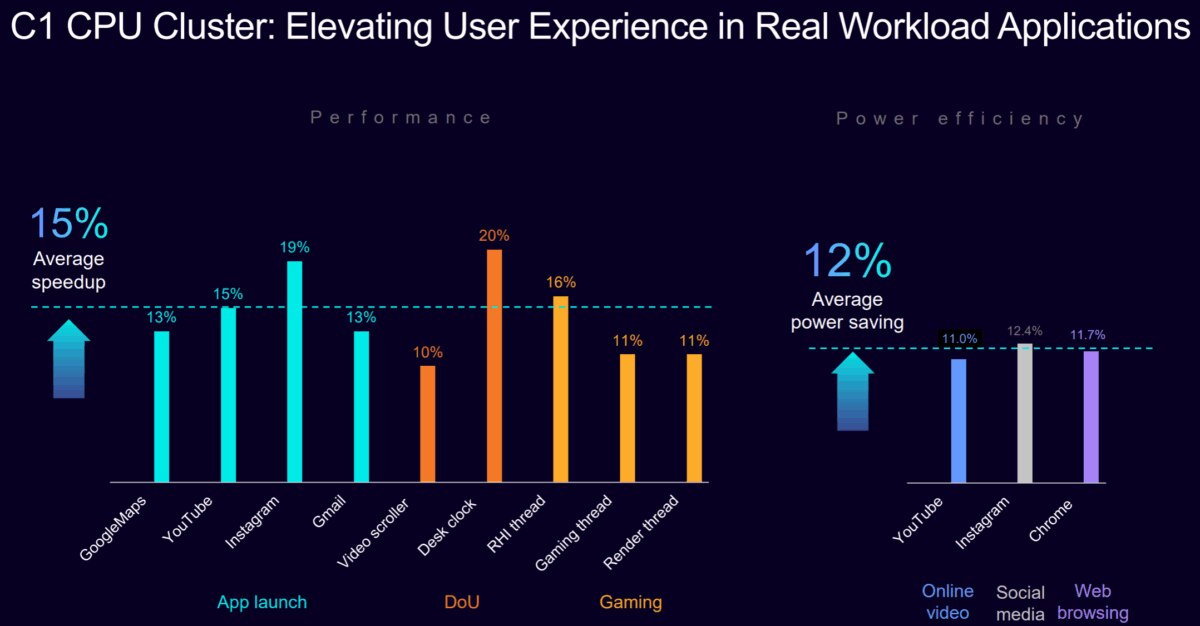

For real-world use cases, the Arm C1 CPU cluster shines, with an average 30 percent performance uplift across industry-leading performance benchmarks and an average 15 percent speed-up across applications like gaming and video streaming compared to the previous generation CPU cluster under the same conditions. These performance improvements come alongside power savings: on average, 12 percent less power is used across daily mobile workloads, like video playback, social media, and web browsing, compared to the previous generation CPU cluster under the same conditions.

The Arm C1 CPU Family: Performance and Efficiency Where It Matters

Beyond the highest performance Arm C1 CPU cluster, the new C1 CPUs can scale to meet every consumer and mobile device tier, with different levels of performance, power, and area efficiency for the variety of on-device workloads.

Built-in AI Acceleration With SME2

Thanks to the built-in matrix extensions of SME2, AI capabilities are accelerated on the C1 CPUs, including the matrix-heavy operations behind large language models (LLMs), media processing (image and video), speech recognition, CV, real-time apps (AI assistants, computational photography, and AI filters), and multimodal apps. SME2 builds on the foundation of SME, with new upgrades that help boost performance, reduce memory use, and make on-device AI feel smoother, especially for apps with real-time constraints like audio generation, camera inference, CV, or chat interactions.

For our ecosystem of partners and developers, the improvements transparently accelerate AI performance across different workloads and use cases compared to hardware without SME2 enablement, including:

4.7x lower latency for speech-based workloads on Whisper Base;

4.7x uplift in AI performance when running chat interactions on the Google Gemma 3 model; and

2.8x faster audio generation time on the Stability AI Stable Audio model.
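As a quick way to read the latency and generation-time multipliers above, a speed-up factor of s corresponds to a time reduction of 1 - 1/s. The following is a minimal sketch, assuming each quoted factor compares the same workload with and without SME2; the function name is illustrative and not part of any Arm tooling.

```python
def time_reduction_percent(speedup: float) -> float:
    """Percent reduction in run time implied by an 'Nx faster' factor."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return (1.0 - 1.0 / speedup) * 100.0


# Time-based factors quoted in the list above.
quoted = {
    "Whisper Base latency": 4.7,
    "Stable Audio generation time": 2.8,
}

for name, factor in quoted.items():
    reduction = time_reduction_percent(factor)
    print(f"{name}: {factor}x -> about {reduction:.0f}% less time")
```

Read this way, a 4.7x factor means the task takes roughly a fifth of the original time, and a 2.8x factor means a little over a third.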

Mobile developers can directly access SME2 performance benefits for their apps with no code changes through Arm KleidiAI integrations across leading AI frameworks, including Alibaba MNN, Google LiteRT and MediaPipe, Meta llama.cpp and Microsoft ONNX Runtime, and runtime libraries, including Google XNNPACK. These integrations mean that SME2 is embedded within developers’ software stacks, provided their apps use the supported AI frameworks and runtime libraries.
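Although the supported frameworks use SME2 without code changes, it can be useful to confirm whether a given device reports the extension at all. The sketch below assumes a Linux or Android arm64 kernel that lists an `sme2` flag on the `Features` line of `/proc/cpuinfo`. The helper names are hypothetical, and this check is not part of KleidiAI or any of the frameworks named above.

```python
from pathlib import Path


def cpu_features(cpuinfo_text: str) -> set[str]:
    """Collect feature flags from every 'Features' line of cpuinfo text."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "features":
            flags.update(value.split())
    return flags


def has_sme2(cpuinfo_text: str) -> bool:
    """True if the kernel lists the 'sme2' flag (assumed flag name)."""
    return "sme2" in cpu_features(cpuinfo_text)


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    text = path.read_text() if path.exists() else ""
    print("SME2 reported:", has_sme2(text))
```

Matching whole tokens rather than substrings keeps the check from confusing `sme2` with the plain `sme` flag or with longer flag names that share the prefix.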

Certain Google apps are enabled for SME2, so they are ready to benefit from improved AI features when SME2 hardware lands in the next generation of Android smartphones. Moreover, SME2 is not just targeted at flagship and premium smartphones, with future mid-tier devices set to integrate SME2-enhanced hardware to boost AI compute performance.

Arm C1-Ultra and C1-Premium for Best-in-Class Peak and Sustained Performance

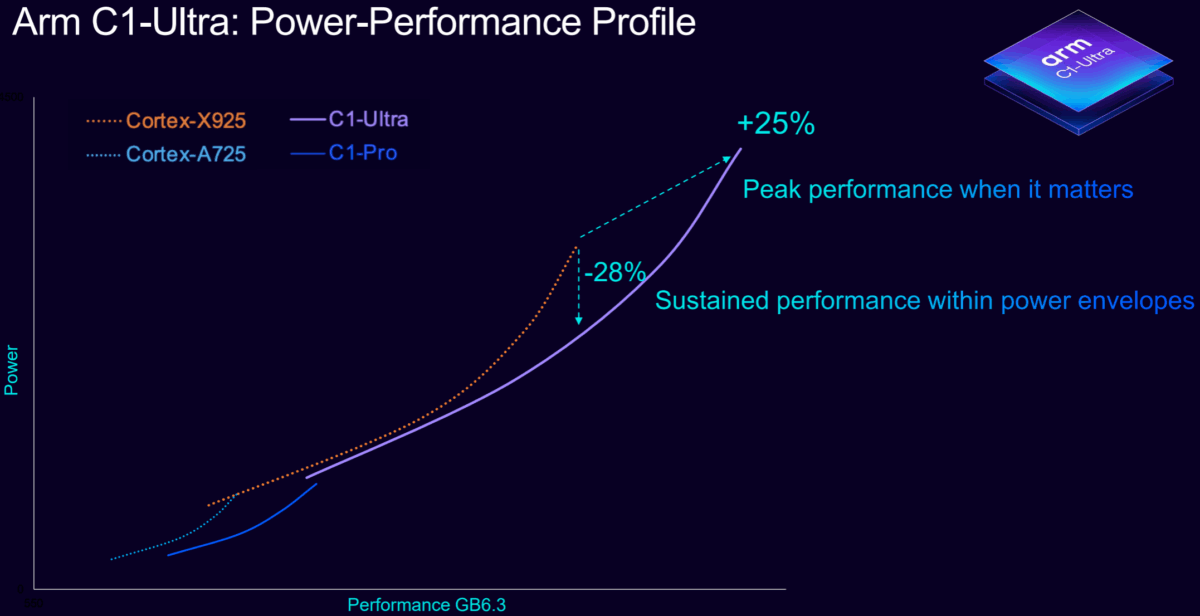

Designed for demanding AI tasks and workloads, the Arm C1-Ultra CPU is the new high-performance, flagship CPU. With the C1-Ultra, Arm extends a six-year trend of double-digit performance improvements, with a 25 percent single-thread uplift for peak performance compared to the previous generation Arm Cortex-X925 CPU. A big driver of this gain is a double-digit improvement in IPC (instructions per cycle). As explained in this blog, IPC is important for real-world mobile use cases because it delivers:

Peak performance when and where it matters;

Improved performance within the power envelope of mobile devices; and,

Reduced power consumption for fixed compute needs.
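These three points follow from the first-order relation that performance scales with IPC multiplied by clock frequency. The sketch below illustrates that relation with a hypothetical 15 percent IPC gain, which is a placeholder and not an Arm figure; the function names are also illustrative, and the model ignores memory and thermal effects.

```python
def relative_performance(ipc_gain: float, freq_ratio: float = 1.0) -> float:
    """First-order model: performance scales with IPC x clock frequency."""
    return (1.0 + ipc_gain) * freq_ratio


def freq_ratio_for_same_performance(ipc_gain: float) -> float:
    """Clock ratio needed to match the older core's performance."""
    return 1.0 / (1.0 + ipc_gain)


ipc_gain = 0.15  # hypothetical 15% IPC gain, for illustration only

# Same clock: all of the IPC gain shows up as extra performance.
print(f"performance at same clock: {relative_performance(ipc_gain):.2f}x")

# Same performance: the clock can be lowered, which typically saves power.
ratio = freq_ratio_for_same_performance(ipc_gain)
print(f"clock needed for same performance: {ratio:.2f}x")
```

Under this simplified model, a core with higher IPC can either run faster at the same clock or hold the same performance at a lower clock, which is where the power saving for fixed compute needs comes from.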

Alongside the new single-thread performance uplift, C1-Ultra unleashes improvements across various benchmarks, AI workloads, and real-world applications compared to the Cortex-X925. These are made possible by design enhancements across every aspect of the C1-Ultra, including:

A best-in-class front-end, which is optimized for real workloads;

The widest, highest-throughput micro-architecture in the industry; and,

Best-in-class pre-fetchers to optimize performance within area constraints.

Meanwhile, the Arm C1-Premium CPU is the first ever Arm sub-flagship CPU, and is 35 percent smaller than the C1-Ultra core, including private L2 cache. The CPU delivers best-in-class area efficiency by maintaining similar performance levels on benchmarks like the SPEC suites, but at a much smaller area footprint.
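To make the area-efficiency claim concrete, performance per unit area can be estimated as a performance ratio divided by an area ratio. The sketch below uses the 35 percent area reduction from the text and models "similar performance" as a ratio of exactly 1.0, which is an assumption made only for illustration; the function name is hypothetical.

```python
def area_efficiency_gain(perf_ratio: float, area_ratio: float) -> float:
    """Performance per area of one core relative to another."""
    if area_ratio <= 0:
        raise ValueError("area_ratio must be positive")
    return perf_ratio / area_ratio


# 35 percent smaller than the C1-Ultra (from the text) -> area ratio 0.65.
area_ratio = 1.0 - 0.35

# Assumption: 'similar performance' is modelled as a ratio of exactly 1.0.
gain = area_efficiency_gain(perf_ratio=1.0, area_ratio=area_ratio)
print(f"performance per area vs C1-Ultra: about {gain:.2f}x")
```

If the C1-Premium's real performance is somewhat below the C1-Ultra's on a given benchmark, passing that lower `perf_ratio` scales the result down accordingly.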

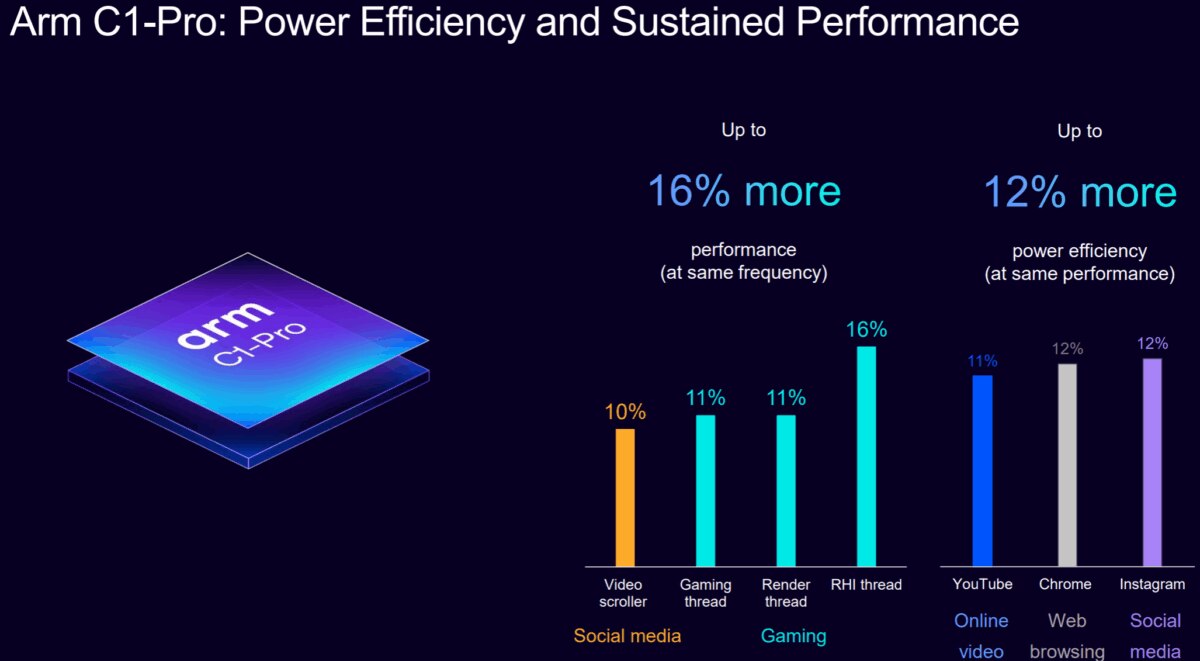

Arm C1-Pro for Sustained Efficiency

The Arm C1-Pro CPU is built to push performance while keeping power in check, with higher performance per watt across the entire power range. It excels for workloads like gaming, providing a 16 percent uplift in sustained performance at the same frequency compared to the previous generation Arm Cortex-A725 CPU. Meanwhile, for video, web browsing, and social media use cases, the C1-Pro is up to 12 percent more power efficient than the Cortex-A725 CPU at the same performance. This delivers next-gen power efficiency without any performance trade-offs.

At a micro-architecture level, the C1-Pro introduces enhanced branch prediction and memory system updates, making it ideal for real-world multitasking. The CPU also has an area-optimized configuration that partners can integrate to harness the performance benefits from SME2 in a more compact footprint.

Arm C1-Nano for the Highest Levels of Power and Area Efficiency

The Arm C1-Nano CPU brings all the benefits of the C1 family of CPUs in the smallest area footprint. When combined with the new Arm DynamIQ Shared Unit (DSU), it is 26 percent more power efficient than the previous generation Arm Cortex-A520 CPU, while delivering performance improvements in a core area that stays within two percent of its predecessor's, making it ideal for wearables and compact consumer devices.

C1-DSU for Platform Flexibility and Scalability

The C1 CPU cluster provides a scalable compute foundation for mobile, with the new C1-DSU at its center. Designed to support the latest architecture and new low-power features, the C1-DSU enables up to 26 percent power savings compared to the previous generation DSU-120, alongside improved bandwidth scaling to support a range of new AI workloads across different consumer and mobile device markets. Whether powering flagship and premium smartphones, mid-tier mobile devices or wearables, the C1 CPU cluster can adapt. For example, a C1-Pro and C1-Nano CPU cluster delivers 2x compute density improvements over the previous generation Cortex-A725 and Cortex-A520 CPU cluster, bringing powerful AI capabilities to mid-tier mobile devices.

The Heart of the On-Device AI Revolution

The Arm C1 CPU cluster provides everything that the future of on-device AI demands: performance, efficiency, scalability, and built-in intelligence. With built-in SME2 and a flexible range of options from Ultra to Nano, Arm delivers accelerated AI experiences that are accessible to everyone.

The new Arm C1 CPUs are designed to enable a world where AI is showing up everywhere, powering the apps, devices, experiences and features that are defining the next era of mobile computing. We couldn’t be more excited about seeing our new CPUs in consumer devices in the very near future!