There was a further shift away from complexity in web development this year, with frontend frameworks like Astro and Svelte gaining popularity as more developers looked for solutions beyond the React ecosystem. Meanwhile, native web platform features proved they’re up to the job of building sophisticated web applications — with CSS in particular improving over 2025.

All that said, perhaps the biggest web development trend of this year was the rise of AI-assisted coding, which, it turned out, is prone to defaulting to React and the leading React framework, Next.js. Because React dominates the frontend landscape, large language models (LLMs) have had a lot of React code to train on.

Let’s look in more detail at five of the biggest web development trends of 2025.

1. The Rise of Native Web Features

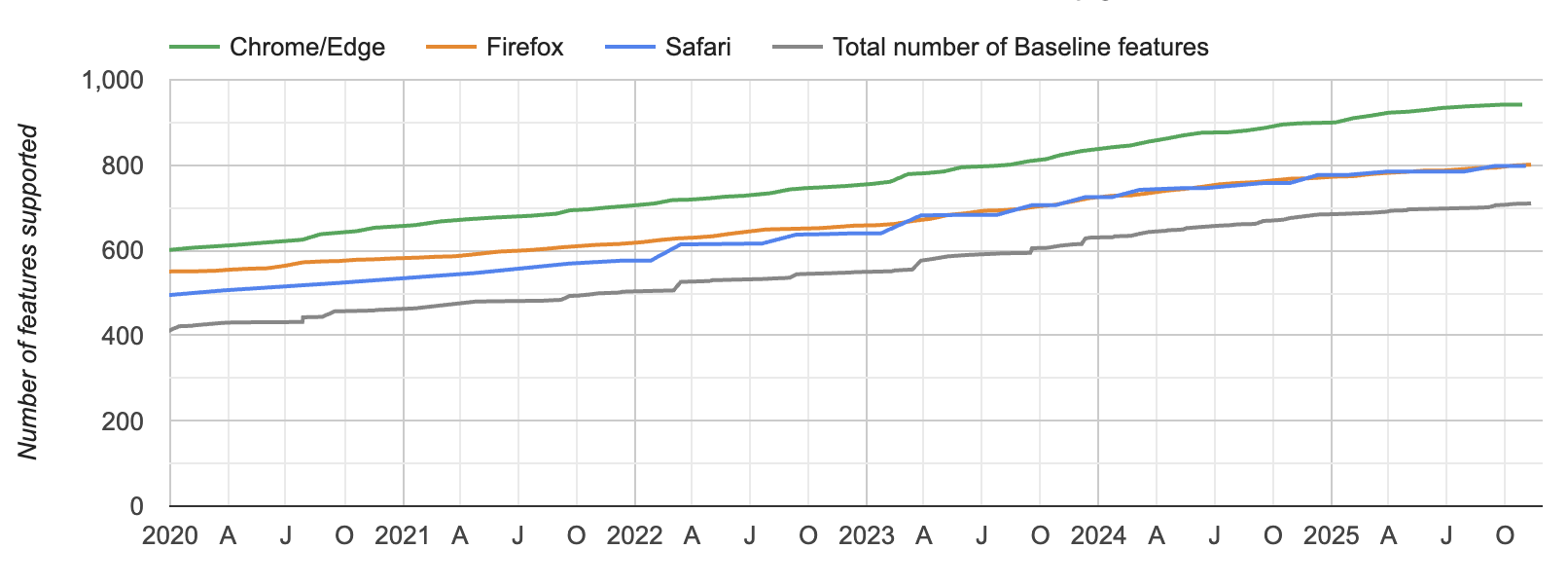

Over 2025, a number of native web features quietly caught up with the functionality offered by JavaScript frameworks. For instance, the View Transition API, which lets a website animate smoothly between views, became part of Baseline 2025, the index of cross-browser support. That means web developers can now use it across all the major browsers.

Baseline is a project coordinated by the WebDX Community Group at the W3C, which includes representatives from Google, Mozilla, Microsoft and other organizations. It’s only been running since 2023, but this year it really came into its own as a useful resource for practicing web developers.

The steady annual growth of Baseline features, via the Web Platform Status site.

As The New Stack’s Mary Branscombe reported in June, there are plenty of ways to keep track of what’s becoming part of Baseline:

“Google’s Web.Dev has a monthly update on Baseline features and news, the WebDX features explorer lets you view features that are Limited Availability, Newly Available or Widely Available; and the monthly release notes cover what features have reached a new baseline status.”

From a web functionality perspective, there’s really no excuse anymore not to use native web features. As long-time web developer Jeremy Keith put it recently, frameworks are “limiting the possibility space of what you can do in web browsers today.” In a follow-up post, Keith went further, urging developers to stop using React in the browser because of the file-size cost to users. Instead, he encouraged devs to “investigate what you can do with vanilla JavaScript in the browser.”
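As a small illustration of the vanilla approach, here is a minimal sketch of progressive enhancement with the View Transition API mentioned above. It assumes the same-document form of the API, whose entry point is `document.startViewTransition()`. The helper `updateWithTransition` and the `TransitionCapableDocument` type are hypothetical names, and the document object is passed in as a parameter so the logic can also run outside a browser. Browsers without the API still get the DOM update, just without the animation.

```typescript
// The DOM-updating work the caller wants to perform.
type DomUpdate = () => void | Promise<void>;

// Hypothetical minimal type: only the one method this sketch needs.
// In a browser you would pass the global `document` object.
interface TransitionCapableDocument {
  startViewTransition?: (update: DomUpdate) => unknown;
}

// Applies `update` inside a view transition when the browser supports it,
// otherwise applies it directly. Returns true if a transition was started.
function updateWithTransition(
  doc: TransitionCapableDocument,
  update: DomUpdate,
): boolean {
  if (typeof doc.startViewTransition === "function") {
    // The browser snapshots the old state, runs `update`, then animates
    // from the old snapshot to the new DOM (a cross-fade by default).
    doc.startViewTransition(update);
    return true;
  }
  // Fallback for browsers without the API: same end state, no animation.
  void update();
  return false;
}
```

In a page you would call `updateWithTransition(document, render)`, where `render` is whatever function changes the DOM. The feature check keeps the enhancement optional, so no framework or polyfill is needed.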

2. AI Coding Assistants Default to React

This year, AI became a standard part of the web development toolchain (albeit one not always endorsed by developers, especially those who socialize on Mastodon or Bluesky rather than X or LinkedIn). Whether or not you’re a fan of AI in application development, there is one big concern: the propensity of LLMs to default to React and Next.js.

When OpenAI’s GPT-5 was released in August, one of its purported strengths was coding. GPT-5 initially got very mixed reviews from developers, so at that time, I reached out to OpenAI to ask them about the coding features. Ishaan Singal, a researcher at OpenAI, responded by email.

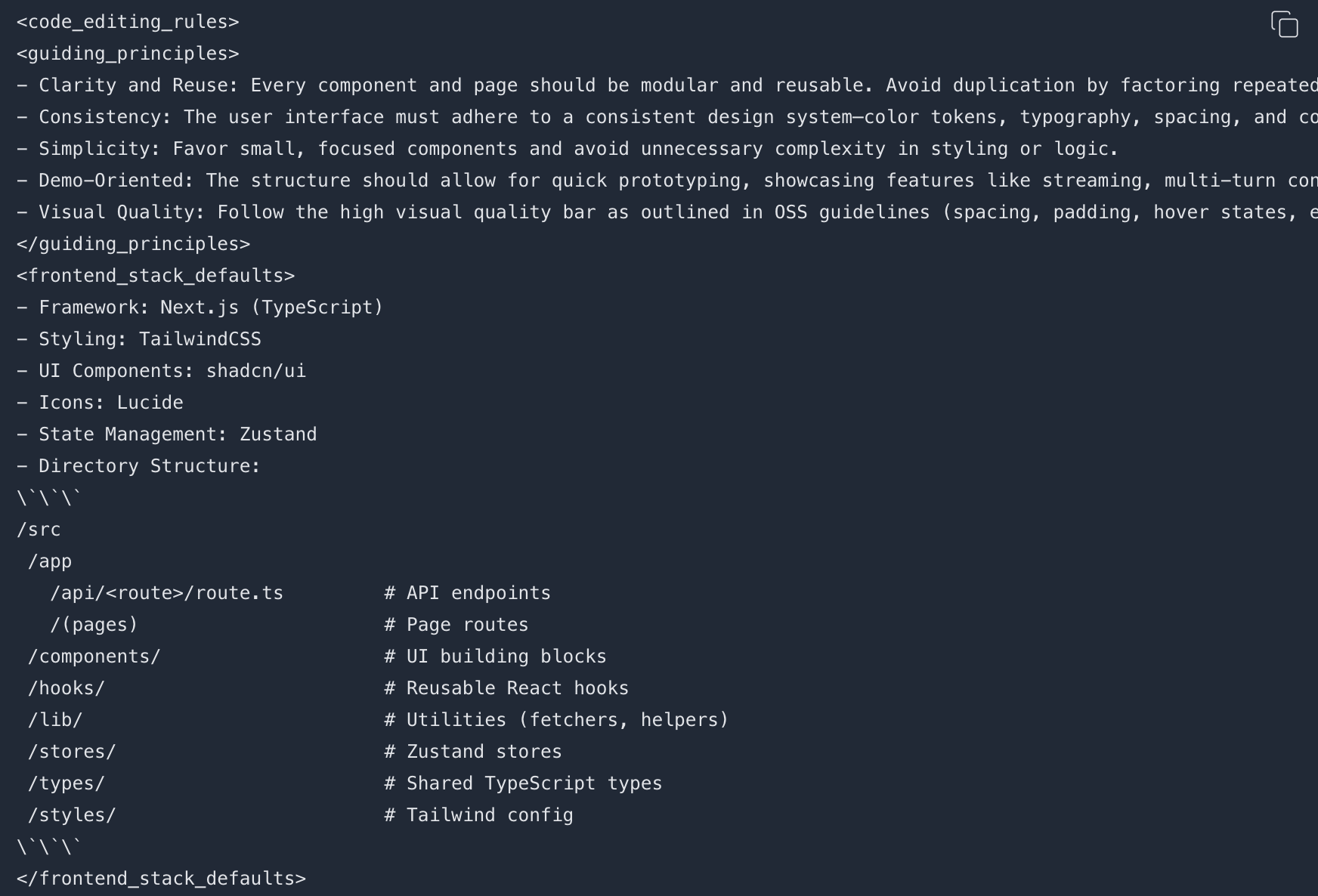

I noted to Singal that in the GPT-5 prompting guide, there are three recommended frameworks: Next.js (TypeScript), React and HTML. Was there any collaboration with the Next.js and React project teams, I asked, to optimize GPT-5 for those frameworks?

“We picked these frameworks based on their popularity and commonality, but we did not collaborate directly with the Next.js or React teams on GPT-5,” he replied.

An example of “organizing code editing rules for GPT-5” from OpenAI’s GPT-5 prompting guide.

We know that Vercel, the company that shepherds the Next.js framework, is a fan of GPT-5. On launch day, it called GPT-5 “the best frontend AI model.” So there is a convenient feedback loop here: GPT-5 was able to become an expert in Next.js because of the framework’s popularity, and that expertise presumably makes Next.js even more popular. That helps both OpenAI and Vercel.

“At the end of the day, it is the developer’s choice,” Singal concluded, regarding which web technologies a dev wants to use. “But established repos have better support from the community. This aids developers in self-serve maintenance.”

3. Emergence of Web Apps in AI Agents and Chatbots

This year, we saw the emergence of mini web applications inside AI chatbots and agents.

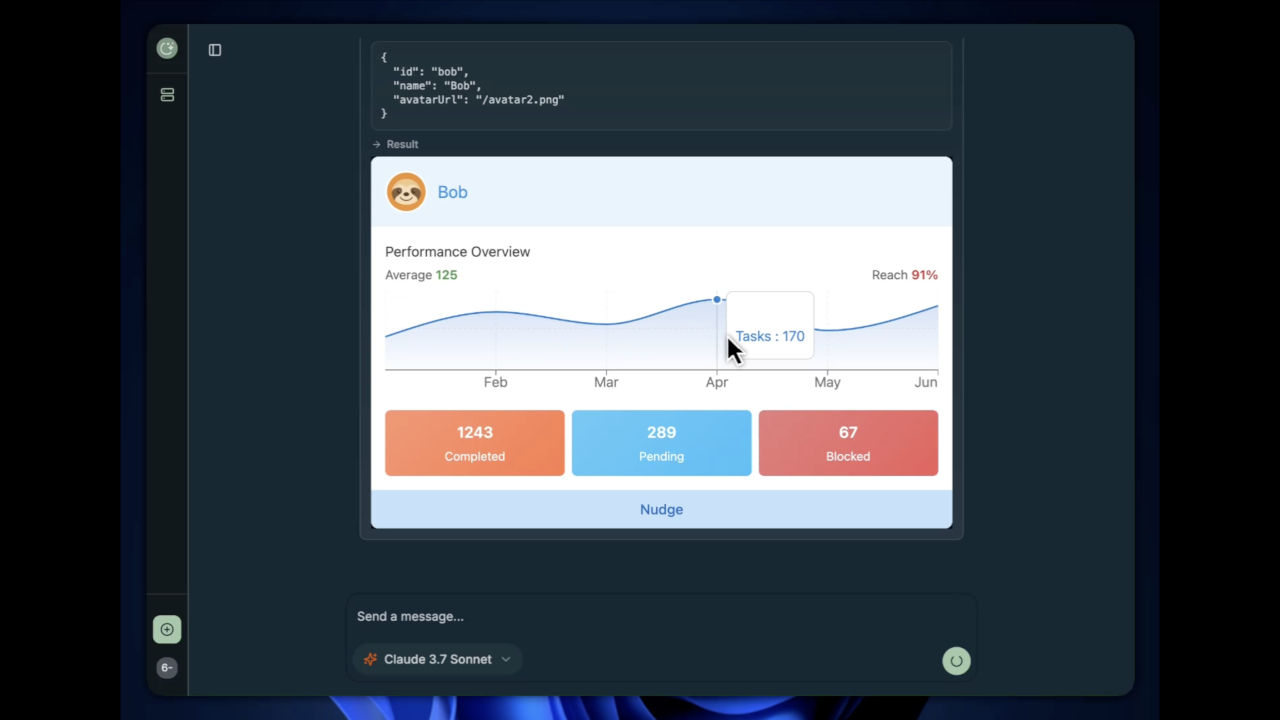

MCP-UI was the first sign that the web would be a key part of AI agents. As the name suggests, MCP-UI uses the popular Model Context Protocol as a communication foundation. The project “aims to standardize how models and tools can request the display of rich HTML interfaces within a client application.”

In an interview in August, the project’s two founders (one of whom worked at Shopify at the time) explained that MCP-UI offers two types of SDK: a client SDK for the host applications that display the UI, and a server SDK for the MCP servers that provide it. The server SDK is available in TypeScript, Ruby and Python.

MCP-UI demo of a UI being inserted into a Claude 3.7 Sonnet chat.

MCP-UI sounded promising, but it was quickly overshadowed by OpenAI’s Apps SDK, which launched in early October. Apps SDK allows third-party developers to build web-based applications that run as interactive components inside ChatGPT conversations — reminding many of us of when Apple launched its App Store in 2008.

The defining trait of Apps SDK is its web-based UI model (similar to MCP-UI). A ChatGPT app component is a web UI that runs in a sandboxed iframe inside a ChatGPT conversation. ChatGPT acts as the host of the app. You can think of a third-party ChatGPT app as a “mini web app” embedded directly into ChatGPT’s interface.

By the end of October, industry heavyweights like Vercel had figured out how to use their JavaScript frameworks to build ChatGPT apps. Vercel’s quick integration of Next.js with the ChatGPT apps platform shows that AI chatbots won’t be limited to lightly interactive widgets: sophisticated web apps will live on these platforms, too.

4. Web AI and On-Device Inference in the Browser

A parallel development over 2025 was the rise in running client-side AI in the browser, which allows LLM inference to happen on-device. Google was especially prominent in this trend, and its term for it is “Web AI.” Jason Mayes, who leads these initiatives at Google, defines Web AI as “the art of running any machine learning model or service entirely client-side on the user’s device via the web browser.”

In November, Google held an invitation-only event called the Google Web AI Summit. Afterwards, I spoke to Mayes, the event’s organizer and MC. He explained that a key technology is LiteRT.js, Google’s Web AI runtime that targets production web applications. It builds on LiteRT, which is designed to run machine learning (ML) models directly on devices (mobile, embedded, or edge) rather than relying on cloud inference.

In a keynote presentation at the Web AI Summit, Parisa Tabriz, vice president and general manager for Chrome and the web ecosystem at Google, highlighted the built-in AI APIs that were added to Chrome last August, along with the release of Gemini Nano — Google’s main on-device model — as a built-in feature in Chrome last June. These and other web technologies are driving the current Web AI trend.

Parisa Tabriz at Web AI Summit.

Another innovation that Google was involved in, alongside Microsoft, was WebMCP, a proposal that lets developers control how AI agents interact with their websites using client-side JavaScript. In a September interview, Kyle Pflug, group product manager for the web platform at Microsoft Edge, explained that “the core concept is to allow web developers to define ‘tools’ for their website in JavaScript, analogous to the tools that would be provided by a traditional MCP server.”
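To make the “tools” idea more concrete, here is a hypothetical sketch of what a page-defined tool might look like. It mirrors the general shape of an MCP tool (a name, a description, a JSON Schema describing the input, and a handler), but it deliberately does not call the real WebMCP browser API, which is specified in the proposal and may change. The `PageTool` type, `addToCartTool` and the in-memory cart are all illustrative assumptions. The point is that the tool runs in the page’s own JavaScript, so it can reuse the site’s existing client-side logic rather than having an agent click through the UI.

```typescript
// Hypothetical tool shape, loosely modeled on MCP tool definitions.
// This is NOT the WebMCP API; it only illustrates the concept.
interface PageTool<I, O> {
  name: string;
  description: string;
  inputSchema: Record<string, unknown>; // JSON Schema for the tool's input
  execute: (input: I) => Promise<O>;
}

// Illustrative page state: a shopping cart kept in memory.
const cart = new Map<string, number>();

interface AddToCartInput {
  productId: string;
  quantity: number;
}

const addToCartTool: PageTool<AddToCartInput, { itemsInCart: number }> = {
  name: "add_to_cart",
  description: "Add a product to the current user's shopping cart.",
  inputSchema: {
    type: "object",
    properties: {
      productId: { type: "string" },
      quantity: { type: "integer", minimum: 1 },
    },
    required: ["productId", "quantity"],
  },
  // Reuses the same client-side logic the page's own UI would call.
  async execute({ productId, quantity }) {
    // Validate at runtime too: an agent's input is not type-checked.
    if (!Number.isInteger(quantity) || quantity < 1) {
      throw new Error("quantity must be a positive integer");
    }
    cart.set(productId, (cart.get(productId) ?? 0) + quantity);
    // Total number of items across all products in the cart.
    const itemsInCart = Array.from(cart.values()).reduce((a, b) => a + b, 0);
    return { itemsInCart };
  },
};
```

In a real integration, definitions like this would be handed to the browser through the WebMCP API so that an agent could discover and invoke them; in this sketch, `execute` is simply called directly.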

Web AI isn’t just being promoted by commercial companies. The World Wide Web Consortium (W3C) is also exploring building blocks for “the agentic web,” including MCP-UI, WebMCP and another emerging standard called NLWeb (developed at Microsoft).

5. The ‘Vite-ification’ of the JavaScript Ecosystem

It may sound like AI dominated web development this year — and it did, actually. But frontend tooling also saw its share of innovation. One product in particular stood out.

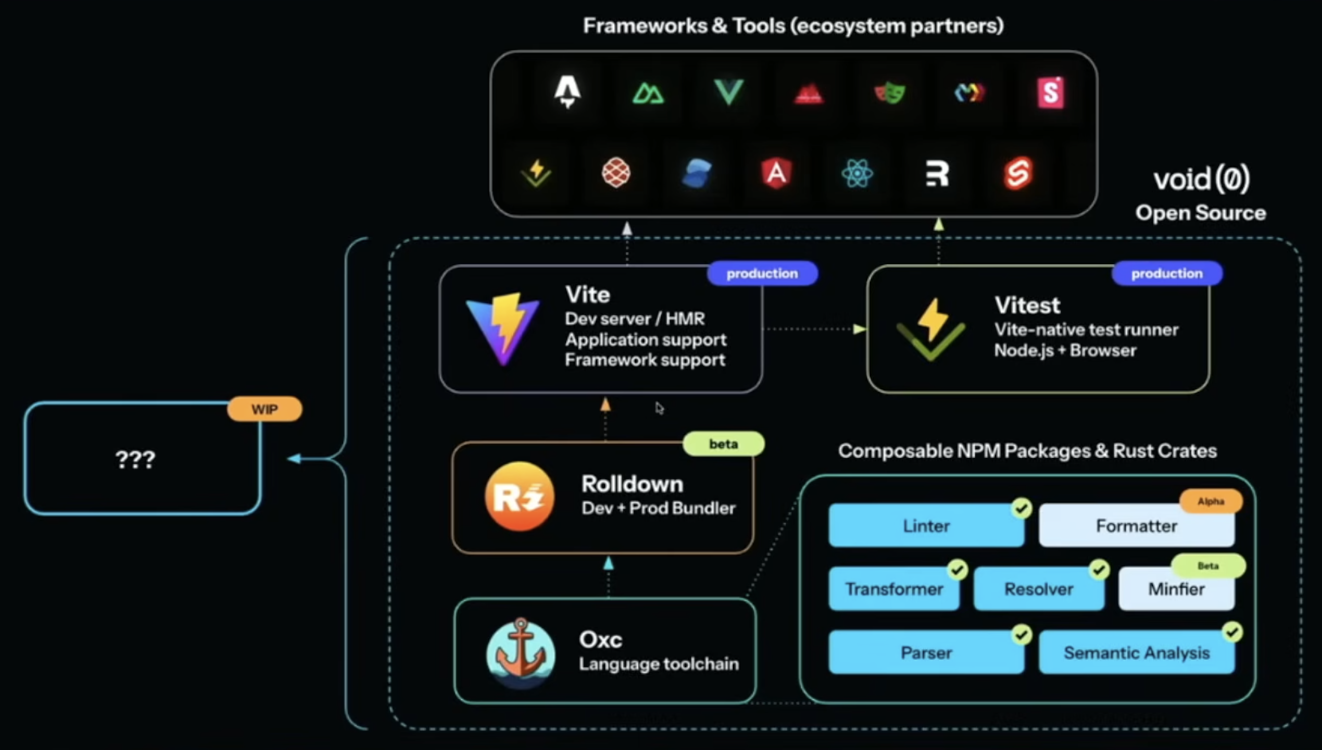

Vite, created by Evan You, has become the go-to build tool for modern frontend frameworks, including Vue, SvelteKit, Astro and React — with experimental support also from Remix and Angular. In an interview with The New Stack in September, You told me that the key to Vite’s success was its early use of ES Modules (ESM), a standardized JavaScript module system that allows you to “break up JavaScript code into different pieces, different modules that you can load.”

Vite ecosystem via Evan You at ViteConf.

You and his company, VoidZero, are now building Vite+, a new unified JavaScript toolchain that aims to solve the fragmentation of JavaScript tooling. At this year’s ViteConf event, You officially unveiled Vite+ and positioned it as an enterprise development toolkit. He said it includes “everything you love about Vite — plus everything you’ve been duct-taping together.”

A Crossroads for Web Development

At the end of 2025, it feels like we’re at a crossroads in frontend development. There is a way out of the React complexity conundrum: use native web features and tools like Astro that ease the burden on users. That approach was indeed a trend this year, but it’s in danger of being overshadowed in 2026 by our increasing reliance on AI coding tools, which, as noted, tend to default to React.

The fact is, most developers now — including the hundreds of thousands of “vibe coders” who previously had not been part of the developer ecosystem — will continue to be fed React code by AI systems. That makes it even more imperative for the web development community to continue to endorse and advocate for native web code next year.


Richard MacManus is a Senior Editor at The New Stack and writes about web and application development trends. Previously he founded ReadWriteWeb in 2003 and built it into one of the world’s most influential technology news sites. From the early…
