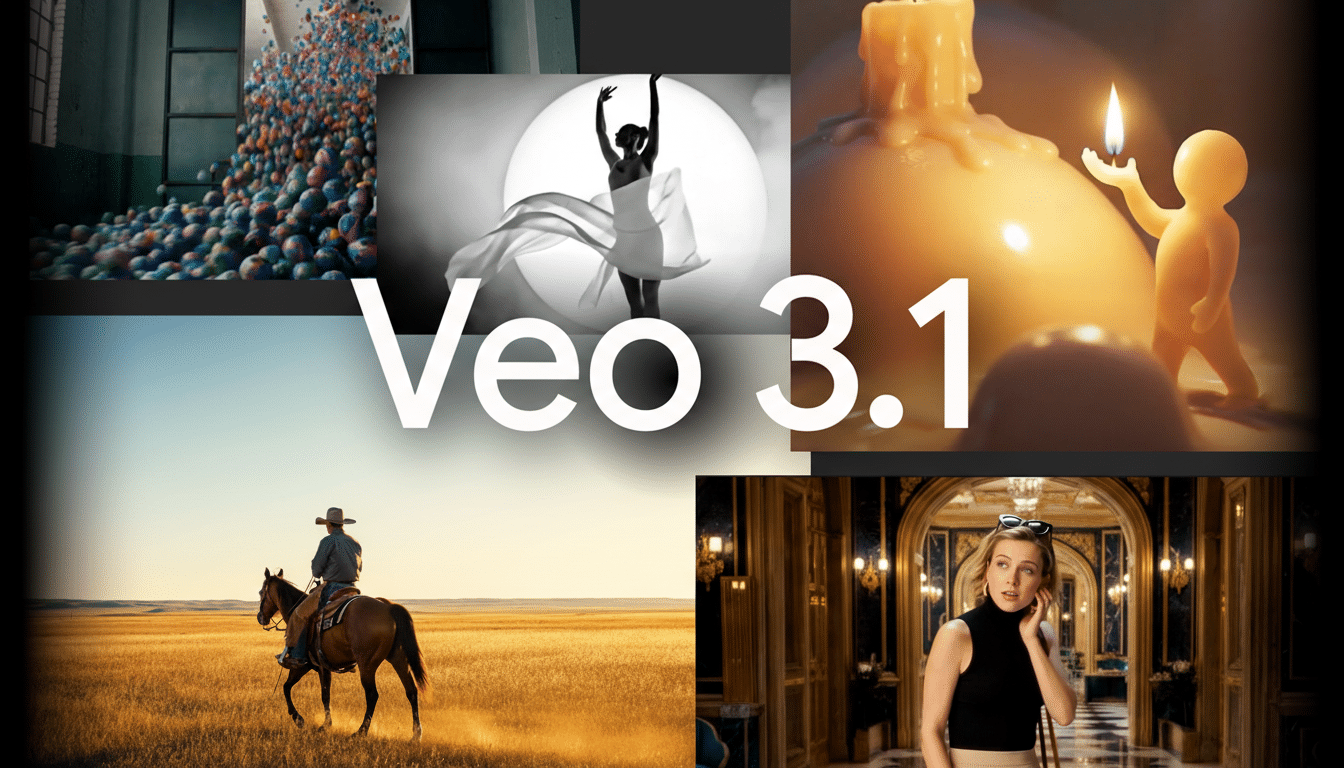

Google is pushing its video AI into creator-first territory. Veo 3.1 now generates native vertical clips guided by up to three portrait reference images, promising more faithful characters, backgrounds, and visual continuity in 9:16. The update also brings 4K upscaling and broader distribution across Google’s consumer and enterprise tools.

What’s New in Veo 3.1 for Native Vertical Video

The core change is image conditioning for vertical outputs. Users upload one to three reference photos—faces, objects, or environments—and Veo blends those elements into a cohesive clip while preserving key attributes. Google says character identities hold steady even as scenes shift, addressing a longstanding pain point where AI-generated subjects morph between shots.

Vertical is now native, not a crop. Creators can choose 9:16 at generation time, which improves framing for faces, hands, and tall subjects, and reduces artifacts from post-crop resizing. A new upscaler lifts results to 4K from a previous 1080p ceiling, useful for Shorts, TikTok, or signage where compression can magnify flaws.
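To put rough numbers on the gap between native 9:16 and a post-crop, here is a minimal Python sketch. It assumes a 1920x1080 landscape source and treats "4K" as 3840x2160 rotated to portrait; neither figure comes from Google's announcement, and the function names are illustrative only.

```python
from fractions import Fraction

# Target aspect ratio expressed as width / height.
VERTICAL = Fraction(9, 16)


def largest_centered_crop(width: int, height: int, target: Fraction = VERTICAL):
    """Return (width, height) of the largest crop with the target aspect ratio."""
    if Fraction(width, height) > target:
        # Source is wider than the target: keep full height, trim the sides.
        return int(height * target), height
    # Source is as narrow as or narrower than the target: keep full width.
    return width, int(width / target)


def retained_fraction(width: int, height: int, target: Fraction = VERTICAL) -> float:
    """Share of the source pixels that survive the crop."""
    crop_w, crop_h = largest_centered_crop(width, height, target)
    return (crop_w * crop_h) / (width * height)


if __name__ == "__main__":
    # Assumed landscape source; not a figure from the article.
    crop_w, crop_h = largest_centered_crop(1920, 1080)
    print(crop_w, crop_h)  # 607 1080
    print(f"{retained_fraction(1920, 1080):.1%} of pixels kept")
    # Upscale needed to bring the cropped strip back to 1080 px wide.
    print(f"{1080 / crop_w:.2f}x stretch to reach 1080x1920")
```

Under these assumptions a post-crop keeps roughly a third of the landscape frame and then has to be stretched by about 1.78x to fill a 1080x1920 canvas, which is where the softness comes from. Generating in 9:16 from the start avoids that step entirely.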

How Reference Images Shape Vertical Clips

Reference images effectively “anchor” Veo’s imagination. One image can lock a protagonist’s face and attire; a second might define a product’s look; a third can set a background palette or architectural style. In practice, this behaves like a lightweight character bible: the model infers identity, texture, and color continuity from your photos, then applies them across prompts and scene transitions.

For creators, that means fewer reshoots and less post-editing to fix drifting details. A travel vlogger can maintain the same on-camera persona across a day-to-night montage. A retailer can keep packaging and logos consistent in a product reel. Even stylized content—think anime-inspired shorts—can keep proportions, hair, and wardrobe stable from frame to frame.
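For teams planning a scripted pipeline, the one-to-three reference image workflow described in this section could be modeled along the following lines. This is a sketch of a local request object, not the Gemini API or Vertex AI interface: the class names, the `role` labels, and the `problems()` helper are all hypothetical, and only the one-to-three image limit and the 9:16 aspect ratio come from the article.

```python
from dataclasses import dataclass, field
from typing import List

# From the article: one to three reference images guide a vertical clip.
MAX_REFERENCE_IMAGES = 3
# Hypothetical labels mirroring the roles described above.
ALLOWED_ROLES = {"character", "product", "background"}


@dataclass
class ReferenceImage:
    path: str  # local path to a portrait photo
    role: str  # what the image is meant to anchor


@dataclass
class VerticalClipRequest:
    prompt: str
    references: List[ReferenceImage] = field(default_factory=list)
    aspect_ratio: str = "9:16"

    def problems(self) -> List[str]:
        """Return human-readable issues; an empty list means the request looks sane."""
        issues = []
        if not self.prompt.strip():
            issues.append("prompt is empty")
        count = len(self.references)
        if not 1 <= count <= MAX_REFERENCE_IMAGES:
            issues.append(
                f"expected 1 to {MAX_REFERENCE_IMAGES} reference images, got {count}"
            )
        for ref in self.references:
            if ref.role not in ALLOWED_ROLES:
                issues.append(f"unknown role {ref.role!r} for {ref.path}")
        if self.aspect_ratio != "9:16":
            issues.append(f"expected 9:16, got {self.aspect_ratio}")
        return issues


if __name__ == "__main__":
    request = VerticalClipRequest(
        prompt="Day-to-night montage of a travel vlogger in Lisbon",
        references=[
            ReferenceImage("vlogger.jpg", "character"),
            ReferenceImage("backpack.jpg", "product"),
            ReferenceImage("alfama_street.jpg", "background"),
        ],
    )
    print(request.problems())  # []
```

Checking the request locally before sending it anywhere keeps the "character bible" explicit: each photo has a declared job, and a fourth image or a landscape aspect ratio is caught before any generation is attempted.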

Built for Shorts, TikTok, and 4K Workflows

Google is aligning Veo with where attention already lives. You can generate in 9:16 and post directly to YouTube Shorts or TikTok without destructive cropping. YouTube has said Shorts reaches more than 2 billion logged-in monthly users, and the format’s fast loop favors crisp, high-contrast visuals. Pairing native vertical generation with 4K upscaling should reduce softness and motion artifacts that are amplified by social compression.

This release also tightens the edit pipeline. Outputs can be fine-tuned in YouTube Create, Google’s mobile editor, with transitions, captions, and audio tracks. For rapid iteration—say, testing three thumbnails or backgrounds from reference photos—creators can generate multiple variants and pick the best-performing look based on early engagement.

The features are rolling out in the Gemini mobile app and are accessible in YouTube Shorts and the YouTube Create app. For professional teams, Veo 3.1’s image-conditioned vertical generation and 4K upscaling are available via Flow, the Gemini API, Vertex AI, and Google Vids, enabling more controlled, programmatic content pipelines.

On the safety and provenance front, Google has promoted watermarking and metadata approaches such as SynthID from Google DeepMind to help identify AI-generated media. YouTube has also introduced labeling tools for AI-assisted content. Those measures will be increasingly important as higher-fidelity, vertical-by-default outputs blend into Shorts feeds.

How It Stacks Up Against Rivals and Why It Matters

Rivals are converging on similar goals. OpenAI’s Sora showcases long-form scene coherence; Runway’s Gen-3 and Pika’s tools emphasize control over style and motion; Meta’s Emu Video leans into social-first creation. Google’s differentiator here is practicality: native 9:16, reference-image conditioning, 4K, and distribution across Gemini, YouTube, and Vertex AI make Veo 3.1 feel production-ready for everyday creators and brands.

The business implication is straightforward. Vertical video is now the default canvas for discovery, and identity consistency—faces, products, locations—is the currency of trust. By letting creators “lock” those elements with a few photos, Veo 3.1 reduces friction from idea to publish while keeping aesthetics on-brand. Expect to see reference-driven shorts in product marketing, educational explainers, and creator series where character continuity matters.

The open question is governance at scale. As tools make it easier to produce convincing vertical clips, platforms and publishers will rely more on watermarking, disclosures, and moderation to keep feeds useful and authentic. For now, Veo 3.1’s combination of reference-guided fidelity, native vertical framing, and 4K delivery is a notable step toward production-ready generative video, and one designed squarely around the realities of mobile viewing.