This summer, Stanford Health Care launched an AI application for its electronic health records system. Called “ChatEHR User Interface,” the tool is an AI chat interface similar to OpenAI’s ChatGPT that allows Stanford’s medical staff to ask questions about each patient’s medical record.

Behind the ChatEHR interface is the ChatEHR Platform—a set of foundational capabilities that safely connect AI models with real-time clinical data at the point of care. It serves as the foundation for multiple AI-enabled applications across Stanford Health Care, including the chat experience and several workflow automations. By combining secure access to patient data, LLMs, and deep integration with clinical workflows, the platform represents a significant step forward in bringing AI capabilities to healthcare delivery.

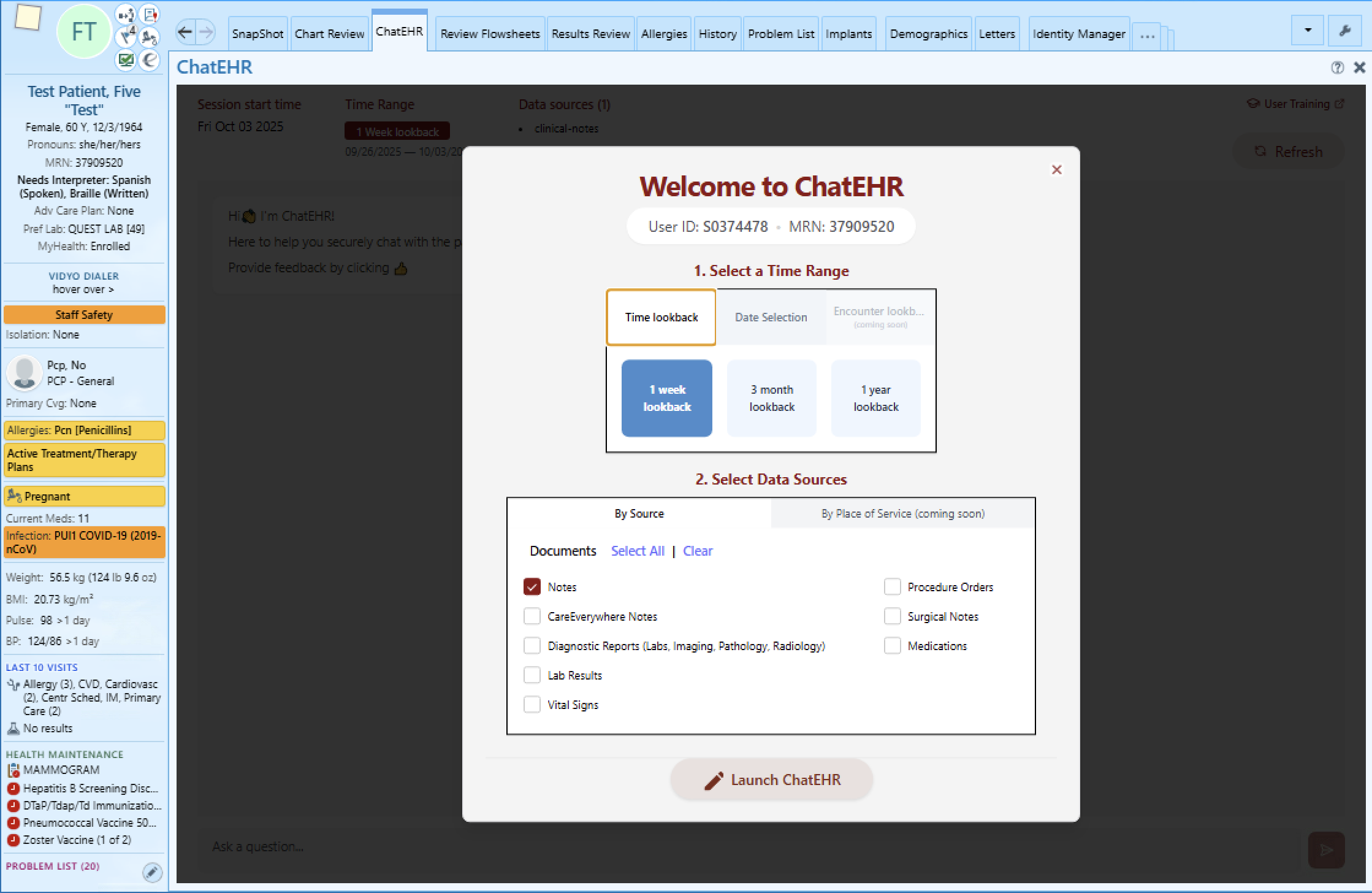

Figure 1: The ChatEHR User Interface (UI) is a custom chat interface for Stanford clinicians and staff. This UI is embedded directly in Epic and lets users “chat” with a patient’s chart. Users can narrow down the date range and data sources to focus on before beginning their chat session.

Bringing generative AI into healthcare introduces challenges beyond those of typical LLM systems. The platform must operate on real-time clinical data, uphold strict privacy and security standards, and integrate directly within existing EHR workflows. In this post, we share the ChatEHR Platform’s architecture and how we tackled these challenges.

The Challenges of Bringing AI to Healthcare

Building an AI platform for healthcare presents unique challenges that go beyond typical software development. Our journey through solving these challenges shaped the fundamental architecture of the ChatEHR Platform.

Real-time Data Access

The platform evolved through several iterations to achieve reliable real-time data access. We initially relied on our EHR’s reporting database for data, which uses a standard schema and is updated nightly. However, clinicians need current data to make decisions.

We tried combining the reporting database with real-time messages using the HL7v2 standard, but reconciling these two data sources proved complex to maintain. We ultimately settled on Fast Healthcare Interoperability Resources (FHIR).

FHIR is an effective standard for data retrieval in healthcare, as it facilitates seamless integration and exchange of information across diverse systems. Its vendor-neutral format ensures that data can be accessed and utilized consistently, regardless of the underlying technology or platform. While adopting FHIR addressed many interoperability and consistency goals, implementing it at enterprise scale and with the low latency required for clinical AI introduced several complex challenges. These included ensuring completeness, timeliness, and reliability of data across diverse clinical systems. Overcoming these hurdles required significant engineering effort and collaboration across teams—and ultimately enabled the near real-time data foundation that powers ChatEHR today.
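To make the retrieval side concrete, here is a minimal sketch of a standard FHIR search request for one patient's lab results in a date range. It is not the platform's actual code: the base URL, the function name, and the patient ID are hypothetical, while `patient`, `category`, `date` (with the `ge`/`le` prefixes), and `_count` are search parameters defined by the FHIR specification for `Observation`.

```python
from datetime import date
from urllib.parse import urlencode

# Hypothetical base URL (an assumption); a real deployment would use
# the FHIR endpoint exposed by its EHR.
FHIR_BASE = "https://fhir.example.org/R4"


def observation_search_url(
    patient_id: str,
    category: str,
    start: date,
    end: date,
    count: int = 100,
) -> str:
    """Build a FHIR search URL for a patient's observations in a date window."""
    params = [
        ("patient", patient_id),
        ("category", category),
        # Repeating `date` with ge/le prefixes expresses a closed range.
        ("date", f"ge{start.isoformat()}"),
        ("date", f"le{end.isoformat()}"),
        ("_count", str(count)),
    ]
    return f"{FHIR_BASE}/Observation?{urlencode(params)}"


if __name__ == "__main__":
    print(observation_search_url("123", "laboratory", date(2024, 1, 1), date(2024, 1, 31)))
```

Because the request shape is defined by the standard rather than by a vendor, the same query should work against any conformant FHIR server, which is the vendor-neutrality described above.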

Processing Data Quickly

Early versions explored various retrieval methods, but maintaining both clinical accuracy and speed required a different approach. The current design combines two techniques. First, we transform raw FHIR data into formats optimized for LLM processing while preserving data integrity. Second, we distribute processing across multiple concurrent LLM calls, each handling a specific data domain such as medications, labs, or procedures. This parallel architecture maintains sub-second response times even with extensive patient histories.
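The two ideas can be sketched roughly as follows, under stated assumptions. `flatten_observation` shows one possible way to turn a FHIR `Observation` into a compact line of text, and `answer_by_domain` fans out one call per data domain with `asyncio.gather`. The function names are hypothetical and `ask_llm` is a stub standing in for a real model call; the platform's actual transformations and prompts are not described in this post.

```python
import asyncio


def flatten_observation(resource: dict) -> str:
    """Turn a FHIR Observation into one compact, LLM-friendly line of text."""
    name = resource.get("code", {}).get("text", "unknown")
    qty = resource.get("valueQuantity", {})
    value = f"{qty.get('value', '?')} {qty.get('unit', '')}".strip()
    when = resource.get("effectiveDateTime", "unknown date")
    return f"{when}: {name} = {value}"


async def ask_llm(domain: str, context: str, question: str) -> str:
    """Stub for a model call (an assumption); a real call would go through the LLM router."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return f"[{domain}] {len(context.splitlines())} record(s) reviewed"


async def answer_by_domain(records_by_domain: dict, question: str) -> dict:
    """Run one LLM call per data domain concurrently and collect the results."""
    domains = list(records_by_domain)
    tasks = [ask_llm(d, "\n".join(records_by_domain[d]), question) for d in domains]
    results = await asyncio.gather(*tasks)  # results come back in task order
    return dict(zip(domains, results))


if __name__ == "__main__":
    lab = {
        "resourceType": "Observation",
        "code": {"text": "Hemoglobin"},
        "valueQuantity": {"value": 13.2, "unit": "g/dL"},
        "effectiveDateTime": "2024-01-05",
    }
    records = {"labs": [flatten_observation(lab)], "medications": ["2024-01-03: metformin 500 mg"]}
    print(asyncio.run(answer_by_domain(records, "Any abnormal labs?")))
```

With concurrent calls, total latency is bounded by the slowest domain rather than the sum of all domains, which is why this pattern suits long patient histories.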

Translating Data into a Clinician-Friendly Format

Healthcare data exists in two fundamentally different worlds. In the technical world we work with FHIR resources, HL7v2 messages, and complex database schemas. However, clinicians think more holistically: in terms of episodes of care, treatment plans, and the all-encompassing note. To surface relevant information to clinicians, we translate system-level FHIR data into a clinician-friendly structure before displaying it. Behind the scenes, we preserve system metadata for traceability and auditing.
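One way to picture this translation is the sketch below, which groups FHIR resources by encounter and keeps the source metadata alongside each display entry. The `ChartEntry` class and both function names are hypothetical; `resourceType`, `id`, `meta.lastUpdated`, and `encounter.reference` are standard FHIR fields. The grouping key is an illustrative choice, not necessarily the structure ChatEHR uses.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ChartEntry:
    summary: str       # what the clinician sees
    source_type: str   # FHIR resourceType, kept for auditing
    source_id: str     # FHIR logical id, kept for traceability
    last_updated: str  # meta.lastUpdated from the source resource


def to_entry(resource: dict) -> ChartEntry:
    """Build a display entry while preserving system metadata."""
    label = resource.get("code", {}).get("text", resource["resourceType"])
    return ChartEntry(
        summary=label,
        source_type=resource["resourceType"],
        source_id=resource.get("id", ""),
        last_updated=resource.get("meta", {}).get("lastUpdated", ""),
    )


def group_by_encounter(resources: list) -> dict:
    """Group entries by the encounter they belong to (an episode-of-care view)."""
    episodes = defaultdict(list)
    for resource in resources:
        key = resource.get("encounter", {}).get("reference", "no encounter")
        episodes[key].append(to_entry(resource))
    return dict(episodes)
```

Keeping `source_type` and `source_id` on every entry means any statement shown to a clinician can be traced back to the exact resource it came from.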

Securely Connecting LLMs to the EHR

Finally, we tackled the challenge of securely connecting a wide range of cutting-edge AI capabilities to the EHR. We deployed a self-hosted gateway for all large language model interactions, providing a single, secure access point that centralizes authorization, logging, and monitoring across model providers.

The Architecture of the ChatEHR Platform

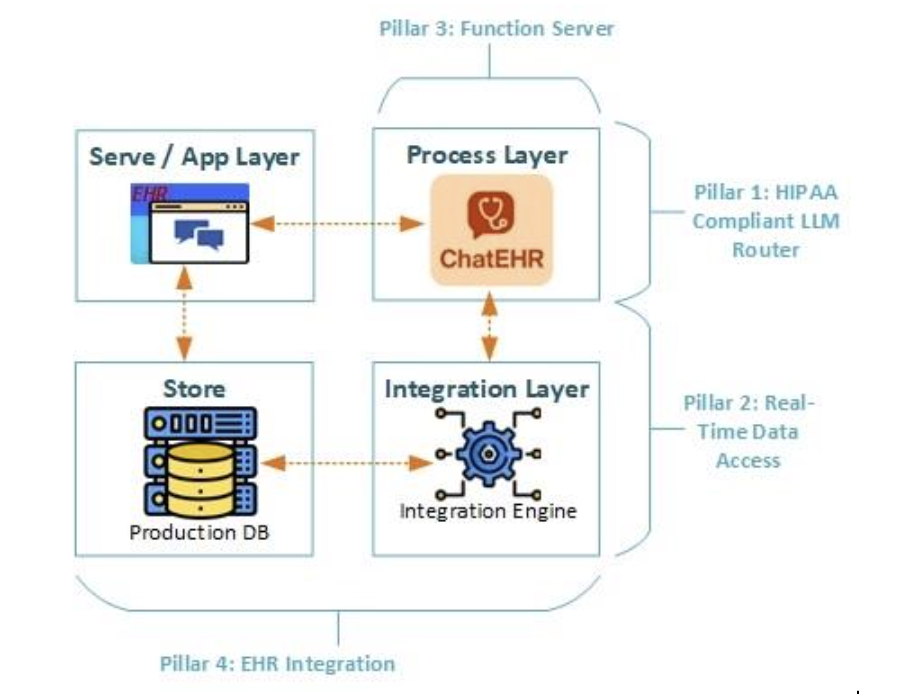

The ChatEHR Platform consists of four foundational pillars. Together these pillars constitute a robust and extensible AI platform capable of supporting several production applications while also expanding to support future innovations in healthcare AI.

Figure 2: The ChatEHR platform consists of four pillars: 1) an LLM router with access to a variety of models, 2) real-time read access to EHR data via FHIR, 3) a general-purpose function server, and 4) robust integration with the EHR.

Pillar 1: The LLM router serves as an access point for all LLMs and AI tools, regardless of vendor. The router selects the appropriate model for each query type and routes the query to a self-hosted server that converts all LLM calls into a standardized format. The LLM router also handles all logging, easing maintenance and making observability a built-in feature.
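A routing layer of this kind might look like the minimal sketch below. The routing table, model names, and request shape are all assumptions made for illustration; the post does not describe the router's real configuration. Note that the log line records only routing metadata, never the prompt, since prompts may contain patient data.

```python
import logging

logger = logging.getLogger("llm_router")

# Hypothetical routing table: query types and model names are placeholders.
ROUTES = {
    "summarization": "model-large",
    "extraction": "model-small",
}
DEFAULT_MODEL = "model-small"


def route(query_type: str, prompt: str) -> dict:
    """Pick a model for the query type and emit one standardized request."""
    model = ROUTES.get(query_type, DEFAULT_MODEL)
    # Log routing metadata only; the prompt itself is deliberately not logged.
    logger.info("routing query_type=%s to model=%s", query_type, model)
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
```

Because every caller receives the same request shape regardless of the model behind it, swapping or adding a vendor only requires changing the routing table.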

Pillar 2: Real-Time Data Access is achieved by a serverless function that fetches and organizes clinical information using FHIR. This service uses intelligent caching for frequently accessed patterns, and parallel processing that breaks complex queries into concurrent operations. The result is a system that can process millions of clinical data points while maintaining consistent performance.
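The caching and parallelism described here can be sketched, in simplified form, as a time-to-live cache in front of a fetch function, with a thread pool issuing the fetches concurrently. `TTLCache`, `fetch_all`, and the TTL value are hypothetical; the post does not specify the actual caching strategy. This sketch also does not deduplicate simultaneous fetches of the same key, which a production service would likely want.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


class TTLCache:
    """Tiny time-to-live cache; entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (stored_at, value)

    def get_or_fetch(self, key: str, fetch: Callable[[str], object]):
        now = self._clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # still fresh: skip the fetch
        value = fetch(key)
        self._store[key] = (now, value)
        return value


def fetch_all(keys: list, fetch: Callable[[str], object], cache: TTLCache, max_workers: int = 4) -> dict:
    """Fetch several keys concurrently, consulting the cache for each one."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(lambda k: cache.get_or_fetch(k, fetch), keys))
    return dict(zip(keys, results))
```

For clinical data the TTL has to be short enough that cached results still count as current, so the right value depends on how quickly each data type changes.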

Pillar 3: The Function Server provides task-specific endpoints that power our various applications. For the flagship ChatEHR UI, it includes specialized chat completion endpoints that combine LLM capabilities with clinical data access. This server transforms generic AI capabilities into healthcare-specific functions, handling everything from natural language processing to clinical workflow automation.

Pillar 4: EHR Integration is handled by our enterprise integration service, which manages connections between the ChatEHR platform, the EHR, and other IT systems. The service provides a secure and reliable connection with the EHR, including authentication, rate limiting, and comprehensive logging. It also includes process automation and scheduling, which we use to create business logic for applications built on the platform.

ChatEHR UI: the Flagship Application

The ChatEHR UI is the first application built on this platform. Embedded directly within the EHR, it allows clinicians to interact with a patient’s chart using natural language. The UI automatically inherits user credentials and patient context via the integration service (Pillar 4). When a user begins a chat, the application makes a request to a custom chat completion endpoint on our function server (Pillar 3); the server then calls the real-time data access service (Pillar 2) to fetch relevant data and the LLM router (Pillar 1) to generate a response.
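The request flow can be summarized in a few lines of illustrative code. This is a sketch only: `chat_completion`, `fetch_chart`, and `call_llm` are hypothetical names, the prompt template is invented for the example, and the two dependencies are passed in as plain functions standing in for the Pillar 2 data service and the Pillar 1 router.

```python
from typing import Callable


def chat_completion(
    question: str,
    patient_id: str,
    fetch_chart: Callable[[str], str],  # stands in for the Pillar 2 data service
    call_llm: Callable[[str], str],     # stands in for the Pillar 1 LLM router
) -> str:
    """Function-server endpoint sketch: fetch chart data, then ask the model."""
    chart_text = fetch_chart(patient_id)
    # Hypothetical prompt layout; the real template is not described in this post.
    prompt = f"Patient chart:\n{chart_text}\n\nQuestion: {question}"
    return call_llm(prompt)
```

Passing the data service and router in as parameters keeps the endpoint easy to test with stubs and mirrors the separation between pillars.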

Where Do We Go From Here?

As AI capabilities evolve, evaluating their performance and safety becomes increasingly important. Our teams are collaborating with the Stanford Center for Biomedical Informatics Research (BMIR) and the Stanford Institute for Human-Centered AI (HAI) to develop a fifth pillar focused on responsible evaluation, extending the platform from implementation to continuous learning and oversight. This pillar will include a suite of evaluation tools based on MedHELM, the Holistic Evaluation of Large Language Models for Medical Applications framework, which was developed as a collaboration between scholars at Stanford HAI, BMIR, and the Stanford Health Care Data Science team. The partnership goes both ways: researchers are analyzing anonymized logs from the ChatEHR UI to gain insights into the use cases and limitations of LLMs in a real clinical setting.

Going forward, the ChatEHR platform is also expanding to standardize how we integrate vendor solutions that have generative AI capabilities. This approach solves two major challenges. First, it allows us to bring external AI tools into our ecosystem while ensuring that identifiable patient data never leaves the enterprise. Second, it eliminates the need for costly, custom configurations for each new partner. Instead, vendors will use the platform’s existing integration, LLM, and evaluation capabilities, described above, for a secure, scalable, and consistent approach.

Meanwhile, clinicians using the ChatEHR UI are already envisioning new possibilities, proposing innovative workflow automations that were previously impossible to implement.

Acknowledgments

Nerissa Ambers, Juan M. Banda, Timothy Keyes, Connor O’Brien, Abby Pandya, Carlene Lugtu, Dev Dash, Wencheng Li, Jarrod Helzer, Vicky Zhou, Bilal Mawji, Joshua Ge, Travis Lyons, Srikar Nallan, Vikas Kakkar, Patrick Sculley, Nigam Shah, Michael Pfeffer