Efforts to run complex artificial intelligence models on quantum computers take a step forward with research from Borja Aizpurua, Sukhbinder Singh, and Román Orús, all of Multiverse Computing, alongside colleagues. They investigate how to translate key components of existing classical neural networks onto quantum hardware, aiming to unlock a potential speed advantage on emerging quantum devices. The team achieves this by compressing complex layers into a more manageable format, then splitting the processing between a quantum computer and conventional hardware. This hybrid approach, validated on standard image classification tasks like MNIST and CIFAR-10, demonstrates a promising pathway towards integrating quantum computation with established machine learning techniques and could ultimately accelerate the development of more powerful artificial intelligence.

The team investigates a novel approach using tensor network disentanglers, offering a promising way to represent and process classical data using quantum resources more efficiently. This work stems from growing interest in variational quantum algorithms, which harness quantum computers as co-processors to enhance classical computation, optimising quantum circuit parameters to minimise a cost function. A key obstacle is the ‘quantum bottleneck’, the difficulty of loading classical data into quantum states without significant overhead in terms of quantum gates and circuit depth.
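
To make the data-loading overhead concrete, the sketch below uses amplitude encoding, a standard textbook scheme that stores a length-2^n vector in the amplitudes of n qubits. It is an illustration only and is not claimed to be the encoding used in the paper; the function name `amplitude_encode` is hypothetical.

```python
def amplitude_encode(values):
    """Pad a real vector to the next power of two and normalise it to unit L2 norm.

    Returns (amplitudes, n_qubits). Illustrative assumption: amplitude
    encoding, which is not necessarily the encoding used in the paper.
    """
    if not any(values):
        raise ValueError("cannot encode the all-zero vector")
    # Smallest n with 2**n >= len(values); at least one qubit.
    n_qubits = max(1, (len(values) - 1).bit_length())
    padded = list(values) + [0.0] * (2 ** n_qubits - len(values))
    norm = sum(v * v for v in padded) ** 0.5
    return [v / norm for v in padded], n_qubits


# A flattened 28x28 MNIST-sized image has 784 pixels.
amplitudes, n_qubits = amplitude_encode([1.0] * 784)
```

The qubit count grows only logarithmically with the input size, but preparing an arbitrary set of amplitudes generally requires a number of gates that grows with the number of amplitudes, which is the overhead the paragraph above refers to.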

This work aims to alleviate this bottleneck by exploring a data encoding strategy that leverages the structure of tensor networks to reduce the quantum resources required. Specifically, the research focuses on image classification, demonstrating that a classical neural network can be effectively implemented on a quantum device using this approach, achieving comparable performance to its classical counterpart while utilising fewer quantum resources. The team represents the weights and activations of a classical convolutional neural network as a tensor network, then maps this network onto a quantum circuit using a disentangling procedure, establishing a pathway for deploying classical machine learning models on near-term quantum devices. Offloading the bottleneck layers of classical pre-trained neural networks to a quantum computer is presented as a possible route to quantum advantage on near-term devices.

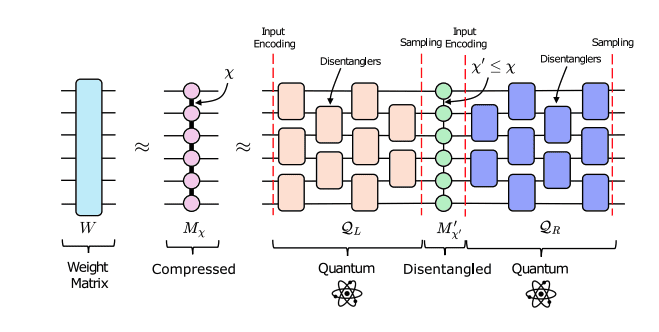

The approach begins with compression, representing the target linear layer as an effective matrix product operator (MPO) without degrading model performance. This MPO is then further disentangled into a more compact form, enabling a hybrid classical-quantum execution scheme where disentangling circuits are deployed on a quantum computer, while the disentangled MPO runs on classical hardware. The team introduces two complementary algorithms for MPO disentangling, designed to optimise the process and maximise efficiency.
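
The compression step can be illustrated with a minimal NumPy sketch that splits a dense weight matrix into a two-site MPO using a truncated singular value decomposition. The function names, the 16x16 layer size, and the bond dimension are assumptions chosen for illustration; the paper's layers and its compression procedure may differ.

```python
import numpy as np


def matrix_to_two_site_mpo(W, out_dims, in_dims, max_bond):
    """Split W of shape (o1*o2, i1*i2) into two MPO cores via truncated SVD.

    Returns A with shape (o1, i1, chi) and B with shape (chi, o2, i2),
    where chi <= max_bond is the bond dimension that is kept.
    """
    o1, o2 = out_dims
    i1, i2 = in_dims
    # Group (o1, i1) as the row index and (o2, i2) as the column index.
    T = W.reshape(o1, o2, i1, i2).transpose(0, 2, 1, 3).reshape(o1 * i1, o2 * i2)
    U, S, Vh = np.linalg.svd(T, full_matrices=False)
    chi = min(max_bond, len(S))
    A = (U[:, :chi] * S[:chi]).reshape(o1, i1, chi)  # absorb singular values into A
    B = Vh[:chi].reshape(chi, o2, i2)
    return A, B


def mpo_to_matrix(A, B):
    """Contract the two cores back into a dense (o1*o2, i1*i2) matrix."""
    o1, i1, _ = A.shape
    _, o2, i2 = B.shape
    return np.einsum("aik,kbj->abij", A, B).reshape(o1 * o2, i1 * i2)


rng = np.random.default_rng(0)
W = rng.normal(size=(16, 16))  # stand-in for a trained weight matrix
A, B = matrix_to_two_site_mpo(W, (4, 4), (4, 4), max_bond=4)
rel_err = np.linalg.norm(W - mpo_to_matrix(A, B)) / np.linalg.norm(W)
dense_params, mpo_params = W.size, A.size + B.size
```

Truncating the bond dimension is an approximation, so the reconstruction error is nonzero for a random matrix like the one above. The article's claim is that trained layers can be compressed this way without degrading model performance; this sketch only shows the mechanics.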

Quantum Tensor Networks Compress Classical Models

This research explores the intersection of quantum computing and machine learning, focusing on how quantum techniques can enhance or compress classical machine learning models. A major emphasis is on using tensor networks, inspired by quantum entanglement, and quantum circuits to represent and manipulate data more efficiently, particularly for large models. Tensor networks represent high-dimensional data compactly, which lets them bridge quantum concepts and classical machine learning by compressing large models and accelerating computations. The research also highlights the use of tensor networks to simulate quantum computations on classical computers, allowing researchers to explore the potential benefits of quantum machine learning without needing access to actual quantum hardware.

The team investigates compressing large language models (LLMs) using tensor networks, reducing their size and computational cost while preserving performance. Datasets like MNIST and CIFAR-10 are used to evaluate the performance of machine learning algorithms. The research acknowledges challenges in scaling tensor networks and quantum algorithms to handle very large datasets and models, and explores techniques like positive bias and sign-free contraction to improve efficiency. Hybrid quantum-classical approaches are likely to be crucial for realising the full potential of these techniques. The team explores specific algorithms and techniques, including hyperoptimized approximate contraction, sign-free tensor contraction, disentangled representations, and quantum circuit Born machines. Quantum computing and tensor networks offer promising avenues for improving machine learning, but significant challenges remain in terms of scalability and algorithm development.

Quantum Bottleneck Layers for Hybrid Computing

This research demonstrates a method for translating components of classical neural networks into a hybrid classical-quantum computing framework. The team compressed bottleneck layers from pre-trained image classification networks and converted them into quantum circuits that operate alongside classical hardware. The approach aims to reduce the computational burden on classical processors by offloading specific tasks to a quantum computer without sacrificing the overall accuracy of the neural network. It also highlights how much practical quantum advantage depends on efficient circuit design: the method disentangles complex weight matrices into more manageable quantum circuits, but further exploration of circuit structure and gate selection is needed to minimise complexity. Future work could focus on optimising circuit geometry, investigating specific gate arrangements, and reducing the computational cost of the measurement and tomography steps in the quantum workflow. Combining multiple layers into single circuits could offer further efficiency gains, potentially reframing measurement as a computational resource rather than a cost.
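
The idea behind disentangling can be shown with a toy NumPy example. A weight matrix is built by sandwiching a bond-dimension-1 core between two random orthogonal matrices; undoing those matrices recovers the cheap core. Here the disentanglers are known by construction, whereas the paper finds them with optimisation algorithms that this sketch does not implement. All names and sizes are assumptions for illustration.

```python
import numpy as np


def operator_schmidt_rank(W, d1, d2, tol=1e-10):
    """Count non-negligible singular values across the site-1 | site-2 cut."""
    T = W.reshape(d1, d2, d1, d2).transpose(0, 2, 1, 3).reshape(d1 * d1, d2 * d2)
    s = np.linalg.svd(T, compute_uv=False)
    return int(np.sum(s > tol * s[0]))


def random_orthogonal(n, rng):
    """Random orthogonal matrix from the QR decomposition of a Gaussian matrix."""
    Q, _ = np.linalg.qr(rng.normal(size=(n, n)))
    return Q


rng = np.random.default_rng(0)
d1 = d2 = 2
# Core with bond dimension 1: a Kronecker product of two small matrices.
core = np.kron(rng.normal(size=(d1, d1)), rng.normal(size=(d2, d2)))
Q_left = random_orthogonal(d1 * d2, rng)   # plays the role of a disentangling circuit
Q_right = random_orthogonal(d1 * d2, rng)  # plays the role of a disentangling circuit
W = Q_left @ core @ Q_right                # "entangled" weight matrix

rank_before = operator_schmidt_rank(W, d1, d2)
rank_after = operator_schmidt_rank(Q_left.T @ W @ Q_right.T, d1, d2)

# Hybrid evaluation: orthogonal factors on one side, cheap core on the other.
x = rng.normal(size=d1 * d2)
y_hybrid = Q_left @ (core @ (Q_right @ x))
```

In the hybrid scheme described in the article, the orthogonal (unitary) factors would correspond to circuits run on the quantum computer, while the low-bond-dimension core stays on classical hardware.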

👉 More information

🗞 Classical Neural Networks on Quantum Devices via Tensor Network Disentanglers: A Case Study in Image Classification

🧠 ArXiv: https://arxiv.org/abs/2509.06653