Artificial intelligence (AI) is here to stay, and it is transforming the world of technology! AI is expected to drive new scientific discoveries and help find more solutions to human problems. But how far can AI go when it is powered directly by the Sun? In this article, we explore that question through Google's Project Suncatcher.

Project Suncatcher research and the implications of its findings

The Sun is the ultimate energy source in our solar system, emitting more than 100 trillion times humanity's total electricity production. In the right orbit, a solar panel can be up to 8 times more productive than on Earth and can produce power nearly continuously, which reduces the need for batteries. Far more energy is available in space than we currently use. Project Suncatcher aims to tap this energy directly in orbit to power AI computation, easing the demand on terrestrial energy sources.

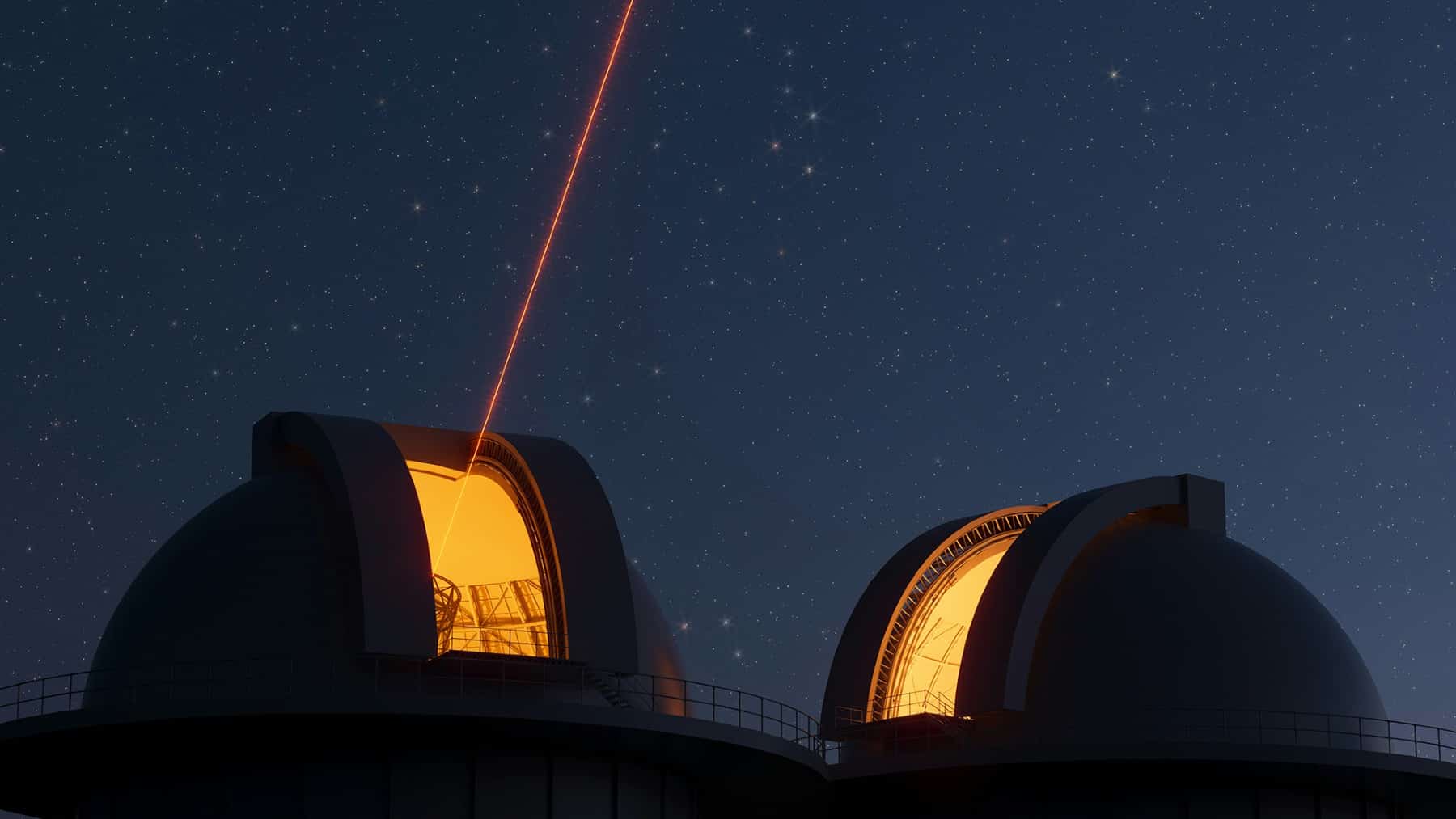

Project Suncatcher is a research moonshot to one day scale machine learning in space. Researchers are exploring how an interconnected network of solar-powered satellites, equipped with Google's Tensor Processing Units (TPUs), could harness the full power of the Sun to run large-scale AI workloads.

This research continues Google's tradition of taking on moonshots to tackle hard scientific and engineering problems. Of course, there will be unknowns, just as there were when Google began building a large-scale quantum computer more than a decade ago, before it was considered a realistic engineering goal. Still, the researchers believe these hurdles can be overcome.

Challenges with the Project Suncatcher system design

What does the proposed system look like? The design involves a constellation of networked satellites in a dawn-dusk sun-synchronous low Earth orbit, where they would be exposed to near-constant sunlight. This orbit is chosen to maximize solar energy collection and reduce the need for batteries. However, several hurdles must be overcome before this system can become a reality. Let's take a look at some of them.
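A sun-synchronous orbit uses Earth's equatorial bulge (the J2 term of its gravity field) to rotate the orbital plane about once per year, so the plane keeps a fixed orientation relative to the Sun. The minimal sketch below computes the inclination that produces this precession, using the standard first-order J2 formula. The 650 km altitude is an illustrative assumption, not a figure from this article, and `sso_inclination_deg` is a hypothetical helper name. The "dawn-dusk" part comes from separately placing the orbit plane along the day-night terminator, which this calculation does not model.

```python
import math

# Earth constants (standard WGS-84 / gravity-model values)
MU_EARTH = 3.986004418e14   # gravitational parameter, m^3/s^2
R_EARTH = 6_378_137.0       # equatorial radius, m
J2 = 1.08262668e-3          # oblateness coefficient (dimensionless)

# Target nodal precession: one full turn (2*pi rad) per tropical year,
# so the orbit plane tracks the Sun's apparent motion.
SECONDS_PER_YEAR = 365.2422 * 86_400
OMEGA_DOT_SSO = 2 * math.pi / SECONDS_PER_YEAR  # rad/s


def sso_inclination_deg(altitude_m: float, eccentricity: float = 0.0) -> float:
    """Inclination (degrees) at which first-order J2 precession matches
    the Sun-synchronous rate, for semi-major axis R_EARTH + altitude_m.

    From dOmega/dt = -(3/2) * n * J2 * (R/p)^2 * cos(i), with
    n = sqrt(mu / a^3) and p = a * (1 - e^2), solved for cos(i).
    """
    a = R_EARTH + altitude_m
    ecc_factor = (1 - eccentricity**2) ** 2
    cos_i = -(2 * OMEGA_DOT_SSO * a**3.5 * ecc_factor) / (
        3 * J2 * R_EARTH**2 * math.sqrt(MU_EARTH)
    )
    if not -1.0 <= cos_i <= 1.0:
        raise ValueError("no sun-synchronous inclination at this altitude")
    return math.degrees(math.acos(cos_i))


# Hypothetical altitude chosen for illustration only.
print(f"{sso_inclination_deg(650e3):.2f} deg")
```

At typical low Earth orbit altitudes the result is roughly 98 degrees, a slightly retrograde orbit, which is why sun-synchronous satellites pass close to the poles.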

Achieving data center-scale inter-satellite links

Large-scale machine learning workloads require distributing tasks across numerous accelerators connected by high-bandwidth, low-latency links. To match the performance of terrestrial data centers, the links between satellites must support tens of terabits per second. Reaching this bandwidth requires received power levels thousands of times higher than is typical for conventional, long-range links. Since received power is inversely proportional to the square of the distance, this challenge can be overcome by flying the satellites in close formation, a kilometer or less apart.
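The inverse-square argument can be made concrete with a little arithmetic. This is a minimal sketch, assuming ideal inverse-square scaling with transmit power and antenna or aperture gains held fixed; real optical links also depend on beam divergence and pointing. The function names and the 1,000 km baseline are hypothetical, chosen for illustration.

```python
import math


def received_power_gain(old_distance_km: float, new_distance_km: float) -> float:
    """Factor by which received power rises when a link shortens from
    old_distance_km to new_distance_km, assuming P_rx is proportional
    to 1 / d^2 with everything else fixed."""
    if old_distance_km <= 0 or new_distance_km <= 0:
        raise ValueError("distances must be positive")
    return (old_distance_km / new_distance_km) ** 2


def distance_for_gain(old_distance_km: float, gain: float) -> float:
    """Link distance that raises received power by `gain` under the same
    inverse-square assumption: d_new = d_old / sqrt(gain)."""
    if old_distance_km <= 0 or gain <= 0:
        raise ValueError("distance and gain must be positive")
    return old_distance_km / math.sqrt(gain)


# Hypothetical baseline: a 1,000 km inter-satellite link.
baseline_km = 1_000.0
print(received_power_gain(baseline_km, 1.0))             # 1000000.0
print(round(distance_for_gain(baseline_km, 1_000), 1))   # 31.6
```

Because power grows with the square of the distance reduction, shrinking a link from 1,000 km to about 31.6 km already yields a thousandfold gain, and flying satellites roughly a kilometer apart yields far more.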

Radiation tolerance of TPUs

The effectiveness of machine learning accelerators in space depends on their surviving the radiation environment of low Earth orbit. A radiation test of Trillium, Google's v6e Cloud TPU, showed promising results. The High Bandwidth Memory (HBM) subsystems were the most sensitive component, but they only began showing irregularities after a cumulative dose of 2 krad(Si), close to three times the expected (shielded) five-year mission dose of 750 rad(Si). No hard failures attributable to total ionizing dose (TID) were observed up to the maximum tested dose of 15 krad(Si) on a single chip. These results suggest that Trillium TPUs are surprisingly radiation-hard for space applications.
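The safety margin quoted for the HBM is a simple ratio of the dose at which irregularities began to the expected mission dose. The sketch below computes it from the 2 krad and 750 rad figures; `dose_margin` is a hypothetical helper, and this is plain arithmetic on the reported numbers, not a radiation-hardness model.

```python
RAD_PER_KRAD = 1_000  # 1 krad = 1,000 rad


def dose_margin(tolerated_rad: float, mission_rad: float) -> float:
    """How many times the expected mission dose a component tolerated."""
    if mission_rad <= 0:
        raise ValueError("mission dose must be positive")
    return tolerated_rad / mission_rad


hbm_onset_rad = 2 * RAD_PER_KRAD  # HBM irregularities began at 2 krad(Si)
mission_rad = 750                 # expected shielded five-year dose, rad(Si)
print(f"{dose_margin(hbm_onset_rad, mission_rad):.2f}x")  # 2.67x
```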

The future of this project

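Initial analysis suggests that the project's core concept is not precluded by fundamental physics or insurmountable economic barriers. However, significant engineering challenges, such as thermal management, high-bandwidth ground communications, and on-orbit system reliability, still need to be solved to make this dream a reality. As a next step, Google Research is partnering with Planet on a learning mission slated to launch two prototype satellites by early 2027.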

The mission will test how Google's models and TPU hardware operate in space and validate the use of optical inter-satellite links for distributed machine learning tasks, and the results will guide further development. AI is changing how we think about energy: if this project succeeds, much of the energy needed for large-scale AI computing could come directly from the Sun in orbit, reducing the demand on terrestrial energy sources.