What a ridiculous week for artificial intelligence…

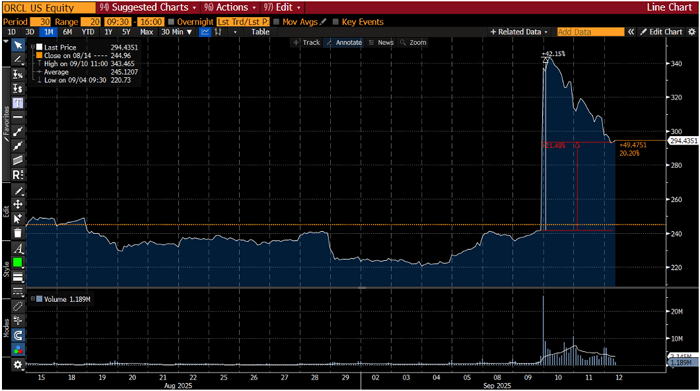

The big event of the week was Oracle’s earnings announcement, which resulted in the biggest one-day jump for the software giant since 1992.

Oracle’s total revenue for its fiscal first quarter 2026 (ending August 31, 2025) was $14.9 billion, up 12% year on year. That’s great growth for a company of that size, but it was a few other announcements that got everyone excited:

Cloud infrastructure-related business grew 55% year on year

Oracle revealed it had a contract backlog of $455 billion

OpenAI signed a $300 billion five-year computing deal with Oracle starting in 2027

That sent the company’s market cap up about $240 billion, pushing Oracle over the $1 trillion valuation mark and temporarily making Oracle executive chairman Larry Ellison the richest person in the world.

One-month stock chart of Oracle (ORCL)

The stock has since pulled back below the $1 trillion valuation mark, but the developments are remarkable, nonetheless.

Oracle has long been a “boring” enterprise software company that was late to the game in becoming a hyperscale cloud services provider. It was long known for its stable enterprise business with good margins and consistent free cash flow.

But after watching the success of Amazon Web Services, Google Cloud, and Microsoft Azure, Oracle got hungry to party like the rest of them. It now has more than $100 billion in debt and will burn through more than $8.2 billion in cash this fiscal year as it races to build AI data center infrastructure and participate in this boom.

The market didn’t care. It just wanted to know that Oracle was all in and would spend whatever it takes to build. And whatever capacity it can build, there is certainty that AI software companies will gladly pay for the computational resources.

Game on.

Have a great weekend,

Jeff

GPU-based Computing vs. Quantum Computing

Hi Jeff:

[Recently], you painted a dark picture of the energy consumption of AI, showing the site of Stargate at Abilene.

However, there is a possible solution offered by QUBT with their TFLN chips, which reportedly consume only a fraction of the energy required by the traditional NVDA chips.

What does your research show?

Also, great strides have been made by the SC industry in making faster *memory,* demanding less energy. Do you have any details on that? Regards.

– Thomas D.

Hi Thomas,

I certainly wasn’t trying to paint a dark picture at all regarding the energy consumption of AI. My goal was simply to present the facts as they are, so that we can all better understand this massive trend underway that will require far more electricity production than most can understand.

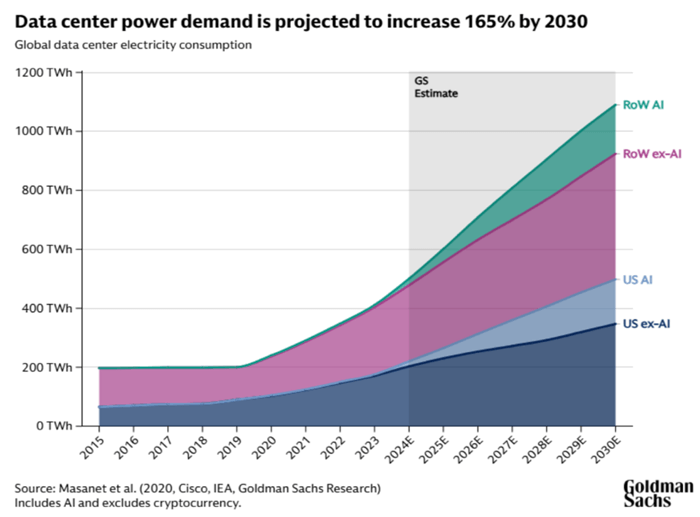

It doesn’t really matter which forecast we look at for data center electricity demand. They’re all sharply up and to the right. Below is a forecast from Goldman Sachs that presents a useful contrast between growth without AI and growth with AI. I believe the forecast is too conservative.

The reality is that electricity production is the single largest bottleneck in the industry right now.

It’s not a bad thing. In fact, I would argue it is a good problem to have.

Developing artificial general intelligence (AGI) is imperative so we can accomplish technological breakthroughs in medicine, science, clean energy production, materials, aerospace technology, and so many other areas.

Advanced AI will be the key to maintaining stable nuclear fusion plasmas for extended periods of time. Our short-term electricity needs – which can only be met right now by using natural gas or even coal – will ultimately lead to the replacement of all electricity generated from fossil fuels. The future will be small modular reactors (nuclear fission) and nuclear fusion reactors. AI will help us get there faster.

Your question about QUBT presents a good opportunity to contrast the kind of AI data centers for computational resources as compared to quantum computing technology.

For everyone’s benefit, QUBT is a company – Quantum Computing Inc. – that purports to be developing quantum photonics semiconductors built using thin film lithium niobate (TFLN) technology.

Now, before I get into Quantum Computing Inc., I’d like to make a more general comment about quantum computing versus AI data centers, which will specifically answer your question.

Yes, energy consumption is dramatically lower per unit of computing in a quantum computer compared to NVIDIA chips, regardless of whether or not we are considering Quantum Computing’s proposed TFLN photonics chip or a credible company like Rigetti Computing’s (RGTI) superconducting quantum computer.

For certain classes of problems, quantum computers can be far more powerful than classical supercomputers, as they leverage quantum mechanics to solve extremely complex computations. But, and here’s the key point, quantum computers can only be run for very short periods of time.

They are only able to maintain coherence for periods typically measured in milliseconds. Superconducting quantum computers will only run for a few milliseconds, and a trapped-ion quantum computer will only run for a few seconds.

This is a long way of saying that quantum computers are completely impractical for training frontier AI models and running AI applications. They have very short coherence times and are plagued with errors. AI software requires 24/7/365 uptime and zero errors.

Importantly, both technologies are necessary. Quantum computers will be used for certain applications. AI data centers will keep the world advancing and running with certainty.

As for Quantum Computing Inc., fair warning, this company makes me very suspicious. Here is the company’s background, directly from the SEC:

Quantum Computing Inc., formerly known as Innovative Beverage Group Holdings, Inc., a Delaware corporation (the “Company”), was the surviving entity as the result of a merger between Ticketcart, Inc., and Innovative Beverage Group, Inc., both Nevada corporations. Innovative Beverage Group, Inc. was the surviving entity as the result of a merger between Kat-A-Tonic Distributing, Inc., a Texas corporation, and United European Holdings, Ltd., a Nevada Corporation.

Since going public, I’ve seen this company shift focus from one product area to another, always chasing what is hottest in an effort to run up its share price.

Making matters worse, there are some major class action lawsuits right now that suggest very problematic activity by the management at Quantum Computing Inc.

Here’s the case led by Kessler Topaz Meltzer &amp; Check:

Defendants failed to disclose to investors that: (1) QCI overstated the capabilities of the company’s quantum computing technologies, products, and/or services; (2) QCI overstated the scope and nature of its relationship with NASA, as well as the scope and nature of QCI’s NASA-related contracts and/or subcontracts; (3) QCI overstated the company’s progress in developing a TFLN foundry, the scale of the purported TFLN foundry, and orders for the company’s TFLN chips; (4) QCI’s business dealings with Quad M Solutions, Inc. and millionways, Inc. both qualified as related party transactions; (5) accordingly, QCI’s revenues relied, at least in part, on undisclosed related party transactions.

And here’s the case led by Robbins LLP:

Defendants failed to disclose to investors that: (i) defendants overstated the capabilities of QCI’s quantum computing technologies, products, and/or services; (ii) defendants overstated the scope and nature of QCI’s relationship with NASA, as well as the scope and nature of QCI’s NASA-related contracts and/or subcontracts; (iii) defendants overstated QCI’s progress in developing a TFLN foundry, the scale of the purported TFLN foundry, and orders for the Company’s TFLN chips; (iv) QCI’s business dealings with Quad M and millionways both qualified as related party transactions; (v) accordingly, QCI’s revenues relied, at least in part, on undisclosed related party transactions;

I’m very familiar with law firms that specialize in these kinds of class action lawsuits. Oftentimes, they are nonsense. But I’ve been tracking Quantum Computing Inc. for years and have seen some of the things mentioned in the lawsuits that make me very uncomfortable.

On top of that, the company is now trading at an enterprise value of 4,921 times 2025 sales and 1,197 times 2026 forecasted sales. It simply doesn’t make sense for a company that manufactures products. And the CEO of the company just dumped $14.4 million of shares on September 5. Red flags everywhere.
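To put those two multiples in perspective, here is a rough back-of-the-envelope check. It assumes the same enterprise value sits behind both figures (my assumption for illustration, and the variable and function names are hypothetical). Under that assumption, the ratio of the two multiples gives the sales growth that the 2026 forecast implies:

```python
# Enterprise value (EV) multiples quoted above.
ev_to_sales_2025 = 4921.0   # EV / 2025 sales
ev_to_sales_2026 = 1197.0   # EV / 2026 forecasted sales

# Assumption: the same enterprise value is used for both multiples,
# so EV cancels out and the ratio equals 2026 sales / 2025 sales.
implied_sales_growth = ev_to_sales_2025 / ev_to_sales_2026


def sales_for_ev(enterprise_value: float, multiple: float) -> float:
    """Sales implied by an enterprise value and an EV/sales multiple."""
    return enterprise_value / multiple


print(f"Implied 2026 sales vs. 2025 sales: {implied_sales_growth:.1f}x")
```

In other words, even if sales roughly quadruple next year as forecast, the company would still be trading at well over 1,000 times sales.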

As for silicon carbide (SiC), which you referred to as SC, used in memory applications, there are no companies manufacturing memory using SiC.

SiC is primarily used for high-power applications because of its high thermal stability. It is a great material for electric vehicles and is also used widely in renewable energy applications.

There is some research using SiC for resistive RAM (ReRAM), but nothing has been commercialized. SiC is more expensive than current silicon-based memory and is not needed for almost all memory applications.

Two application areas that might make sense are very high-temperature environments and space-based applications, where radiation-hardened memory would be valuable.

Thomas, we covered a lot of ground with your questions. Hopefully, this is all helpful.

Thoughts on Bittensor?

Jeff,

I have read many articles from you regarding artificial intelligence; however, I have not seen any articles from you regarding Bittensor, a cryptocurrency project focused on open source decentralized AI, which has many groups (subnets on the Bittensor chain) that are working on various parts of AI. I believe that the future of AI will be open source and decentralized. I think it would be good to discuss Bittensor with your readers, as I believe it will more than likely disrupt AI in much the same way as Bitcoin [disrupts] fiat currencies.

Thanks.

– Grant B.

Hi Grant,

Bittensor is a project we’re very familiar with. I did cover Bittensor a bit last August in The Bleeding Edge – The Intersection of AI and Blockchain Technology, as well as a few other names that are associated with this exciting combination of the two technologies.

If you’re ever curious about an equity or digital asset, you can jump to the search bar on the Brownstone Research website and type in what you’re looking for to see what was published about it.

It’s very quick and effective, and I’ll be making some more significant improvements to the search capabilities on the website later this year. I want to make it as easy as possible for subscribers, both free and paid, to find what they are looking for.

I have published more in-depth research concerning Bittensor in my buy-and-hold research service on digital assets – Permissionless Investor.

For the benefit of all readers, Bittensor is an interesting blockchain project focused on this cross-section between crypto and AI. It’s not a simple project to understand. It’s a bit more complex than most, and it’s an ambitious project.

The concept is to create an economic ecosystem for decentralized AI, specifically the creation of digital commodity markets related to AI. These individual markets are referred to as subnets in Bittensor.

Each subnet has its own incentive mechanism to reward contributions to that subnet. For example, a subnet might be focused on data storage, pre-trained AI models, image generation, data collection, or hosting AI models.

The overarching goal is to incentivize the crowdsourcing of better machine learning and artificial intelligence models, primarily for the long tail of use cases (i.e., not what the big tech players are focused on).

For anyone interested, Bittensor’s native digital asset is TAO. It has about a $3.5 billion market cap and can be found on major exchanges like Binance, Kraken, and Coinbase.

Is There an Industry-Standard Definition of “Intelligence?”

Hi Jeff,

In the headlong rush to first AGI and then ASI, do you know of any “industry accepted” or “governmentally accepted” vocabulary standards and universal understandings for something as basic as the possible stages of Artificial Intelligence?

A common nomenclature should be the bare minimum when designing laws, safeguards, even intelligent conversation for something as monumental as A.I. For example, just the differences between sentience, consciousness, sapience, etc. should be thoroughly understood and recognized throughout the industry… Is there such recognition?

I know legislators, and the laws they institute, are typically far behind bleeding-edge technology, but the ramifications of AI can’t wait.

As always, thank you for your research and insight,

– Bernie G.

Hi Bernie,

To your point, we’d think that these concepts would have a clearly defined framework given their incredible importance, but I don’t think what you are envisioning exists yet.

In some ways, it’s not surprising because the world hasn’t created an AGI or an ASI yet. After it happens, the definitions and frameworks will evolve over time.

But there are still some useful categorizations available to us related to your questions. First off, we’ll highlight the levels of machine intelligence:

Artificial Narrow Intelligence (ANI) – This is AI as it applies to specific use cases or tasks. This category has been around for years in the form of machine learning and is usually referred to as narrow AI.

Artificial General Intelligence (AGI) – This is a more general-purpose, human PhD-level intelligence across all areas of human intelligence. AGI is capable of reasoning, self-directed research, self-learning, iteration, and adaptation.

Artificial Superintelligence (ASI) – This surpasses human-level expert intelligence in all areas of study. And ASI will likely be capable of exponential growth in intelligence. Humans will not have the ability to grasp an ASI’s level of intelligence.

At the moment, there are two prevailing benchmarks to determine how close an AI frontier model is to achieving AGI: ARC-AGI-2 and Humanity’s Last Exam.

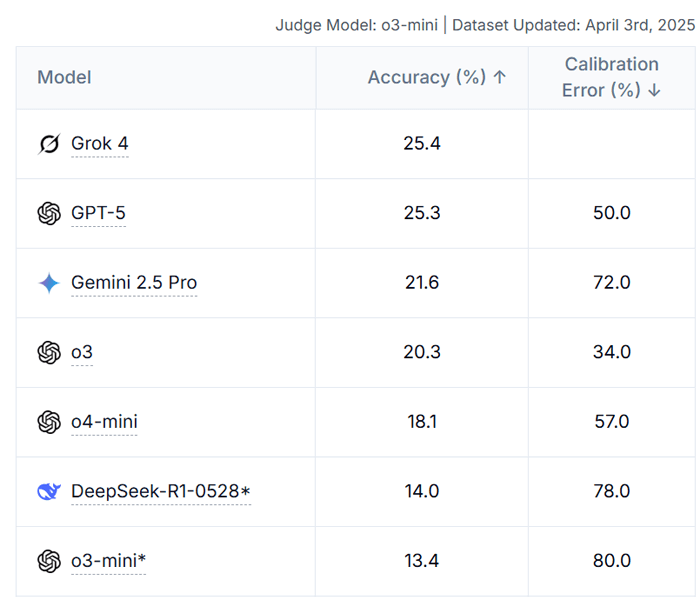

When it was released, xAI’s Grok 4 had a significant lead over the rest of the industry on the ARC-AGI-2 benchmark seen below. Grok 4 has significantly improved since that first test, so I fully expect that its score would be materially higher now than what is shown on the leaderboard.

ARC-AGI-2 Leaderboard | Source: ARC Prize

The ARC Prize organization is currently working on its next-generation benchmark, which will be called ARC-AGI-3. For anyone curious to play around with the test, you can find a preview of it here.

As for Humanity’s Last Exam, according to the leaderboard, xAI’s Grok 4 is on top but neck and neck with OpenAI’s GPT-5. The data, however, is not up to date, as it was last updated on April 3 this year.

And given how disappointing GPT-5 has been, and how much visible progress has been made with Grok 4 since its release, I expect a much larger gap between Grok 4 and the rest of the field once the leaderboard is updated.

Quantitative Results

Humanity’s Last Exam Leaderboard | Source: Humanity’s Last Exam

Something else that you might find interesting is a framework that the National Institute of Standards and Technology (NIST) released at the beginning of 2023. It was written from an AI risk perspective and is appropriately called the Artificial Intelligence Risk Management Framework.

Aside from that, there is a general understanding of the levels of awareness and consciousness with regard to an AI. It’s not a framework that has been standardized or anything, but just an industry understanding:

Sentience – The AI would be capable of emotions and have the ability to have subjective experiences.

Consciousness – This would be an expanded sense of self-awareness capable of deep self-reflection.

Sapience – This is generally thought to be capable of abstract reasoning, self-determined agency, and what we might refer to as wisdom.

Clearly, there is a lot of nuance here, and it would be difficult to measure with certainty, but it’s just to show that there is a lot of thinking concerning this happening right now in the industry.

To your point, policymakers are way behind on bleeding-edge topics like this. They simply don’t have the background to be proactive about new technologies. And when things are moving this quickly, there is reluctance to assign definitions, develop a framework, or put regulations in place, because everyone acknowledges they would soon have to change.

The benchmarks, however, will be useful in determining when AGI is achieved. But it is still unclear when sentience and self-awareness will evolve in this journey to ASI.

But there is one thing that I’m sure of… I’ll be on top of it here at Brownstone Research, and my subscribers will be the first to know of any major developments related to this technology.

That’s all for this week’s AMA. If you have a question for me or the team for a future AMA, you can reach us right here.

Sincerely,

Jeff
