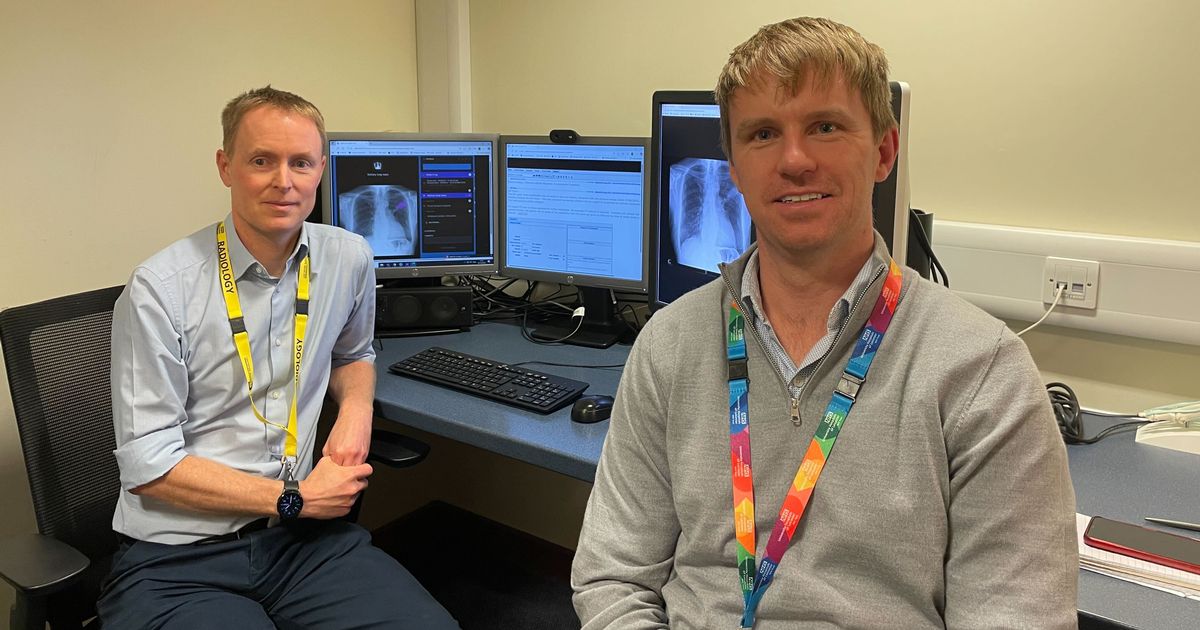

A number of trials are underway at the University Hospitals of Leicester NHS Trust

Lead chest reporting radiographer Andy Creeden (left) and consultant radiographer Dan Togher (Image: LeicestershireLive)

From reading X-rays to writing letters, artificial intelligence is transforming care at Leicester hospitals. Developments in AI mean it is better placed than ever to support the NHS in delivering for patients, and the city’s three hospitals are at the forefront of that innovation.

A number of trials are underway at the University Hospitals of Leicester NHS Trust, which will drive improvements for both residents and staff. The technology is being used throughout the trust’s day-to-day work with the ultimate aim of freeing up doctors to spend more time focused on what they are really there for – looking after patients.

Some of those trials could become a permanent part of trust practices from next year. LeicestershireLive sat down with the hospitals’ chief digital information officer, William Monaghan, and one of the medical teams incorporating AI in their work to learn more about how the technology is being used and what it means for patients.

Mr Monaghan told us he hopes integrating AI into ways of working at the trust will “transform the experience of being a patient” at UHL and make it “more fun” to work there by allowing staff to focus more on patients and less on admin.

He said: “We really want AI to be part of the solution to make it easier and safer to deliver and receive care. We will be able to have far more support for clinicians to make decisions faster and better.

“I think it will mean that we can streamline the administration around the patient. It probably is quite frustrating when people need to contact us and they have to wait on the phone, they don’t know where they are in the waiting list, and they seem to get different information at different appointments. I think we can transform the experience of being a patient of UHL.”

UHL chief digital information officer William Monaghan(Image: LeicestershireLive)

He added that AI now serves three main uses at Leicester hospitals. Firstly, it is being employed to support clinical decision-making, including through training for those new to their roles.

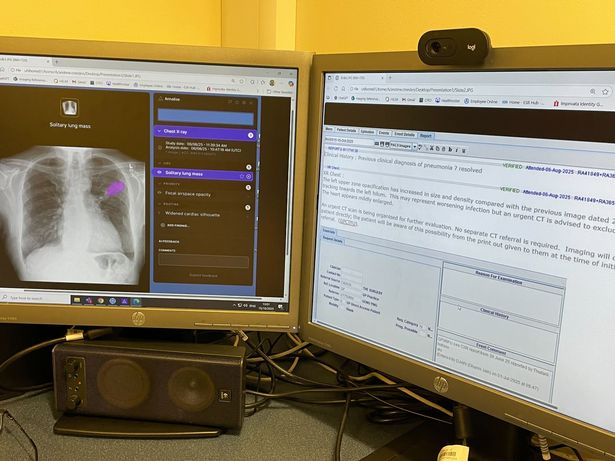

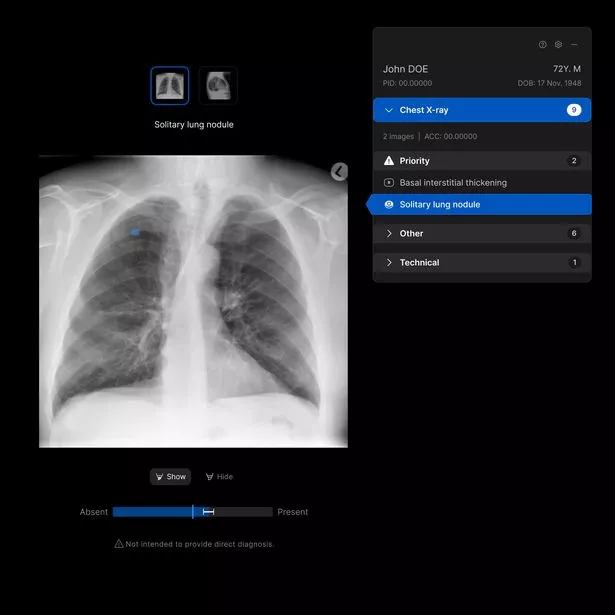

In radiology, a new way of working is being tried out, with AI being used to read lung X-rays. The technology seeks out abnormalities in the scans and highlights them for the clinician.

It is also being used to detect suspected strokes in scans, to analyse images to diagnose skin cancer, to help clinicians visualise and plan treatments for complex heart conditions, and to integrate information around kidney filtration.

Lead chest reporting radiographer Andy Creeden and consultant radiographer Dan Togher are part of the team involved in the lung X-ray trial. They told LeicestershireLive that the technology is currently assisting with around 10,000 X-rays a month.

Mr Creeden said: “There’s a lot going on on the chest X-ray. There are all sorts of bits that might be normal or might not be normal, depending on the patient, and some lung nodules, particularly, can be really, really subtle, maybe you only see them in the benefit of hindsight once you know that they’re there.

“The system is helping us just to avoid anything slipping through the net. So, it’s a safety net. It’s not doing the report itself; we just remove some of that scope for human error.

“It’s that second set of eyes that maybe just catches that 1 in 1000 that might otherwise slip through the net.”

One of the biggest benefits of the technology is that it is able to prioritise cases based on potential urgency, whereas consultants typically work through them in date order, Mr Creeden added.

A mocked-up AI reading of an X-ray(Image: LeicestershireLive)

He said: “AI will look at it (the X-ray) and if it sees something that it thinks might be a critical finding, something that needs dealing with immediately, or that might be a suggestion of cancer, then it will pull that X-ray to the top of a reporting list.

“To get the results of the chest X-ray might take up to a week normally, but using the AI to pick out which patients might have cancer on the basis of their X-ray, then they’ll potentially be reported that day or the next day.”

There’s still scope for improvement, however, and the UHL team regularly feeds back to Harrison AI, the company behind the system, so changes can be made to future versions. Mr Togher told us he wanted to see the tool compare past and present patient X-rays as a possible next step.

He said: “I always say that the best tool for reporting a chest X-ray is having a previous chest X-ray because every patient is different, their anatomy is different. If they’ve got previous pathologies, that would explain current findings, for example.

“So, Leicester’s got high incidence of TB. Patients that come back that have had TB in the past, you’ll see scarring, for example, on the chest X-ray because that’s just part of the process.

“AI will pick that up as an abnormality because it’s looking at that chest X-ray as a standalone X-ray. So, I think having that previous [version] would maybe potentially help.”

The second use of AI at local hospitals is in care administration, with the trust investigating how it can be used to book appointments, schedule operations, and administer waiting lists. One of the big tests currently underway at Leicester hospitals is a system called Ambient Voice, which is being used to generate clinical letters following patient consultations.

Mr Monaghan said: “If you’ve ever had a hospital appointment, you’ll know at the end when you leave the room, previously the consultant would have sat and dictated the letter with ‘I saw this individual today, this was their complaint, this is my plan of management and these are the drugs or the procedures that I’m planning to do or change for that patient’.

“What the Ambient AI does is effectively that process. So, it listens to the consultation, it identifies who the doctor is, who the patient is, and then provides a version of that letter automatically for the clinician to say ‘yep, that’s what I would do’ or for them to make some tweaks.

“It’s doing something that previously the clinician would have had to do, and it’s saving them a significant amount of time by doing that.”

Mr Monaghan said the trust estimates the staff using it are saving around 30 minutes per appointment. He added: “People are actually able to have a better quality of life when they’re at work, as more of the administration is being done by technology. [And] that means that they can focus on the thing that they’re really good at, which is looking after patients, and worrying less about things like recording stuff on the system.”

Finally, the trust is also using AI for back office work such as managing payroll for staff and checking the thousands of invoices a week it receives from suppliers, helping them “speed up all of the processes that [the hospitals] have to do in order to function”.

A mocked up AI reading of an X-ray(Image: University Hospitals of Leicester NHS Trust)

While AI can improve care, it also comes with risks if safeguards are not in place. Mr Monaghan stressed that it was a tool to support doctor-led care rather than a replacement for it.

He said: “The AI is in a support role, not a decision-making role. So there’s always this functionality that we call ‘human in the loop’.

“There is always a human as part of that process, and that human is the person who has the clinical authority to make that decision. They hold a professional registration. This isn’t about us dumbing down the care that people get.”

The processes around deciding which AI to introduce are also rigorous, with two organisations – Eurotrain and the AI Governance Office – setting “really high” standards around the technology’s use, Mr Monaghan added.

He continued: “Before anything goes live, it goes through a thing called a data privacy impact assessment. We absolutely understand and walk through where the data is held, how the data is used by the AI and what data is kept by the AI.

“If a supplier can’t transparently talk us through that process and we can’t be confident that the data resides within the UK and we can’t be confident that the use of that data fits in line with GDPR and some of that other kind of information governance stuff, we won’t progress with that trial.”

Mr Monaghan added: “We’re putting things in for sort of short pilot periods and then saying ‘yes, that works, let’s carry on’ or ‘no, that didn’t work, let’s try something else’. Probably about 40 per cent of our pilots that we’ve tried, we’ve decided not to carry on with, either because they didn’t save the clinicians as much time as we thought they would or the thing that we thought that bit of technology was doing wasn’t actually doing as well as the clinicians themselves were able to do.

“We rely a lot on our clinical team’s feedback to say ‘oh this AI is very effective in this area’ or ‘actually this isn’t working very well and, in effect, it’s slowing me down rather than speeding me up’.”

Mr Monaghan said the trust is hoping to roll out some of the new AI uses across the hospitals as a whole from next year. This includes the Ambient Voice system, with UHL thought to have issued the “largest procurement” for the tool in the NHS. Patients may also see their appointments scheduled by AI from next year, as well as receive phone calls from the new systems.

He said: “For me, it’s really exciting and I think it genuinely will make it better to be a patient of UHL over the next one to five years.

“But it’s really important that we do it right and we do it responsibly. We’re going as fast as we can, as safely as we can.”