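Recently, the 2025 Nobel Prize in Physics was announced, and quantum computing emerged as the biggest winner. The prize went to John Clarke, Michel H. Devoret, and John M. Martinis for the discovery of macroscopic quantum mechanical tunnelling and energy quantisation in an electric circuit, the physics underlying today’s superconducting qubits.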

In this issue, let’s take a closer look at quantum computing.

Quantum computing is a very broad and fascinating topic. I’ll cover it in several issues. The content is relatively technical, so I suggest reading it patiently.

This issue is the first part of the quantum computing special. It mainly explains the basic principles of quantum computing, the latest technological breakthroughs, and a list of core targets.

In the second part, I’ll detail the six mainstream technological paths of quantum computing and the latest progress of leading companies in the primary and secondary markets.

(1) Three Stages of Quantum Computing Development: From NISQ to FTQC

The quantum computing industry is at a critical turning point from “scientific fantasy” to industrial implementation.

The key driver of this transformation is a substantive breakthrough in quantum error correction (QEC).

Currently, quantum computing is in the “Noisy Intermediate-Scale Quantum” (NISQ) stage.

Today’s quantum computers contain anywhere from dozens to a few thousand physical qubits. However, these qubits are highly susceptible to environmental noise, which limits computational fidelity and rules out large-scale algorithms that require high precision.

Therefore, the industry focuses on two main paths: the commercialization of specialized machines and the application of hybrid algorithms.

Specialized quantum computers, represented by D-Wave’s quantum annealers, have achieved partial commercialization, delivering significant efficiency gains in industries such as finance, logistics, and manufacturing. D-Wave’s revenue in Q1 2025 grew by more than 500% year on year, a sign of growing commercial traction for this path.

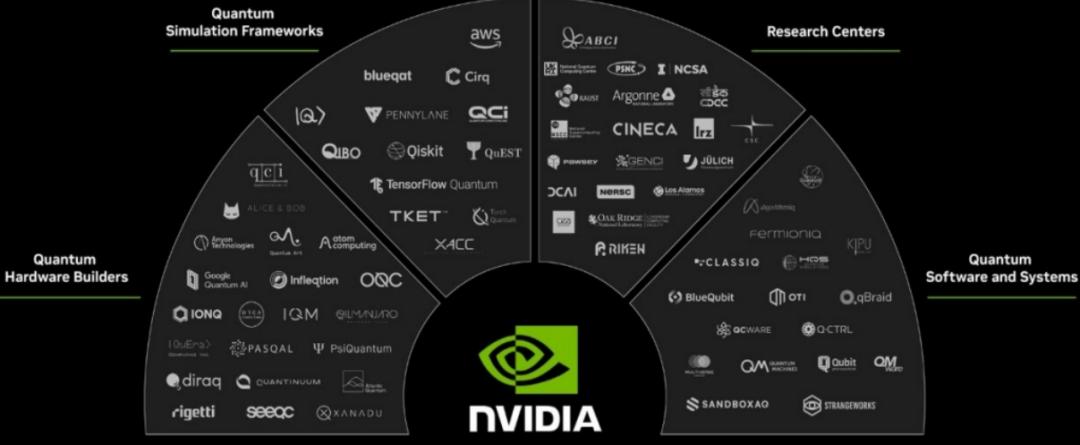

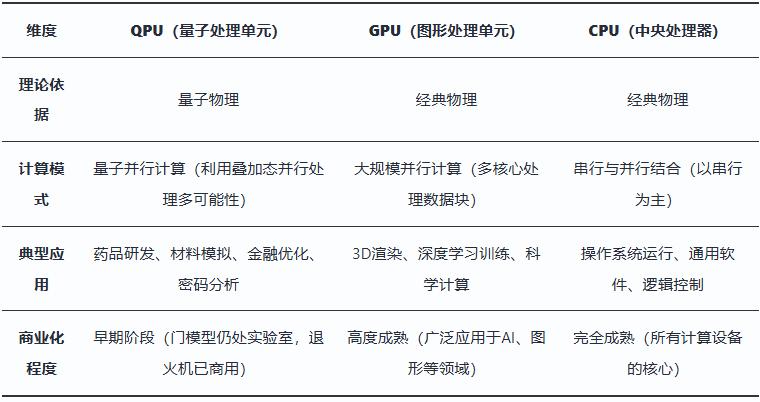

Quantum-classical hybrid computing is currently the most practical model. By combining a quantum processing unit (QPU) with classical high-performance computing resources such as GPUs, it can tackle specific complex tasks.

Nvidia’s CUDA-Q platform and IBM’s Qiskit SDK are accelerating the build-out of this ecosystem, providing key infrastructure for putting quantum computing power to practical use.

Figure: Nvidia’s quantum computing ecosystem

The medium-term goal of quantum computing (around 2030) is “practical, error-corrected quantum computing.” The core idea is to use quantum error-correcting (QEC) codes to encode many noisy physical qubits into a single high-fidelity logical qubit, significantly improving the reliability of computation.

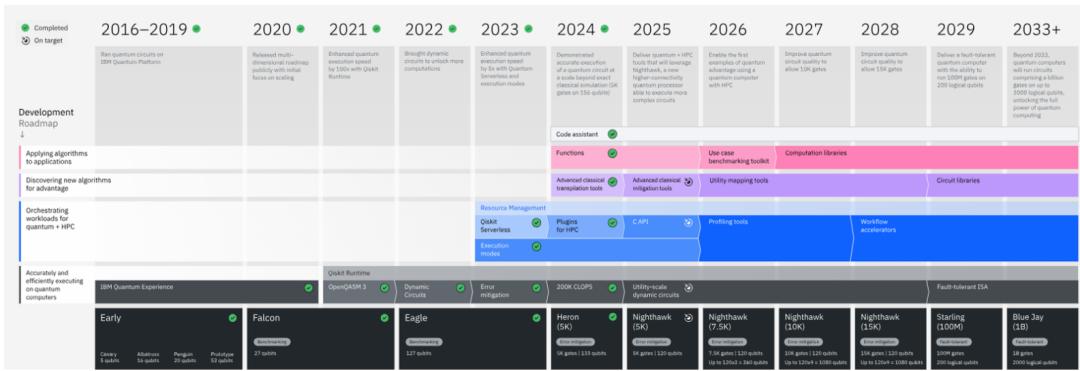
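To make the encoding idea concrete, here is a minimal sketch of the simplest error-correcting code, the three-qubit bit-flip repetition code. It is an illustrative assumption, not any vendor’s scheme: errors are modeled as independent bit flips with probability p, phase errors are ignored (production codes such as the surface code must handle both), and decoding is a majority vote. The vote fails only when two or three copies flip, so the logical error rate is p_L = 3p²(1 - p) + p³ = 3p² - 2p³, which is smaller than p whenever p < 0.5.

```python
import random

def logical_error_rate_exact(p: float) -> float:
    """Probability that majority voting fails: two or all three copies flipped."""
    return 3 * p**2 * (1 - p) + p**3

def logical_error_rate_mc(p: float, trials: int = 200_000, seed: int = 0) -> float:
    """Monte Carlo estimate: encode logical 0 as 000, flip each bit independently, decode by majority."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:  # the majority now reads 1, so the logical value is wrong
            failures += 1
    return failures / trials

for p in (0.01, 0.05, 0.1):
    print(f"physical p={p:.2f}  exact p_L={logical_error_rate_exact(p):.5f}  "
          f"simulated p_L={logical_error_rate_mc(p):.5f}")
```

At p = 0.01, the logical error rate drops to about 0.0003. Real QEC codes push this much further by spending more physical qubits per logical qubit, which is why logical qubits are far more expensive than physical ones.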

Industry leaders have released clear roadmaps for the development of logical qubits.

Quantinuum plans to achieve 100 logical qubits in 2027.

IBM plans to deliver the Starling system with 200 logical qubits in 2029 and launch the Blue Jay system with 2000 logical qubits in 2033.

Figure: IBM’s quantum computing roadmap

The long-term goal of quantum computing is a fully fault-tolerant quantum computer (Fault-Tolerant Quantum Computing, FTQC), whose operational error rates approach those of classical computers. Such a machine could run algorithms with extremely demanding resource requirements, such as Shor’s algorithm, and tackle major scientific and commercial problems that classical computers cannot handle.

The core of FTQC is to have a sufficient number and quality of logical qubits, capable of maintaining quantum coherence for a long time and executing deep and complex quantum circuits.

This would make it possible to break existing public-key cryptosystems (such as RSA) and bring revolutionary breakthroughs to fields such as new material design and drug research and development.

Tech giants are positioning for this goal over the long term. For example, Google plans to build a fault-tolerant quantum computer with one million physical qubits by around 2030.

Microsoft is pursuing the disruptive route of topological quantum computing, hoping to fundamentally improve qubit stability and scale to one million qubits in the coming years.

(2) Basic Principle I of Quantum Computing: Quantum Superposition

To understand the industry development trend of quantum computing, it’s necessary to first understand its basic principles.

Quantum computing is a computing model based on the unique behaviors of quantum mechanics, with qubits as the basic information unit.

Quantum computing uses three basic characteristics of quantum mechanics, namely “quantum superposition,” “quantum entanglement,” and “quantum interference.” Let’s look at them one by one.

Quantum superposition is the core characteristic of quantum mechanics. It allows a microscopic particle to simultaneously exist in a linear combination of multiple possible states.

A classical bit can only be 0 or 1 at any time, like the heads or tails of a landed coin.

In contrast, a qubit can be in a superposition of 0 and 1, somewhat like a spinning coin that is neither heads nor tails until it lands.

This uncertainty of state is an intrinsic physical property of the quantum system. The state will “collapse” to a definite classical value (0 or 1) only when a measurement occurs.
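The spinning-coin picture can be made precise. A qubit state is |ψ⟩ = α|0⟩ + β|1⟩ with complex amplitudes satisfying |α|² + |β|² = 1, and a measurement returns 0 with probability |α|² and 1 with probability |β|² (the Born rule). The minimal plain-Python sketch below, with hypothetical helper names, prepares an equal superposition, measures fresh copies of it many times, and shows that once a state has collapsed, measuring it again gives the same result.

```python
import cmath
import math
import random

def make_qubit(theta: float, phi: float) -> tuple[complex, complex]:
    """Amplitudes (alpha, beta) of cos(theta/2)|0> + e^{i*phi} sin(theta/2)|1>."""
    return complex(math.cos(theta / 2)), cmath.exp(1j * phi) * math.sin(theta / 2)

def measure(state, rng):
    """Born rule: return 0 with probability |alpha|^2, otherwise 1, plus the collapsed state."""
    alpha = state[0]
    if rng.random() < abs(alpha) ** 2:
        return 0, (1 + 0j, 0j)
    return 1, (0j, 1 + 0j)

rng = random.Random(42)
plus = make_qubit(math.pi / 2, 0.0)  # equal superposition: the "spinning coin"

counts = [0, 0]
for _ in range(10_000):              # each run measures a freshly prepared copy
    outcome, _ = measure(plus, rng)
    counts[outcome] += 1
print(counts)                         # the two counts come out roughly equal

outcome, collapsed = measure(plus, rng)
repeat, _ = measure(collapsed, rng)   # re-measuring the collapsed state repeats the result
print(outcome, repeat)
```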

The principle of quantum superposition endows quantum computing with natural parallel processing capabilities.

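Since a single qubit can be in a superposition of 0 and 1, the state of a system of n qubits is a superposition over all 2^n classical bit strings, described by 2^n amplitudes.

A single gate operation therefore acts on all 2^n amplitudes at once. However, measuring the qubits returns only one n-bit result, so this parallelism pays off only when an algorithm uses interference (Principle III below) to concentrate probability on the answer.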

As the number of qubits increases, this computational space grows exponentially, doubling with every added qubit and far outpacing the roughly linear growth in computing power that classical computers gain from added hardware.
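One concrete way to feel this exponential growth is to count what it would cost a classical computer to store the full n-qubit state. The sketch below assumes 16 bytes per complex amplitude (double-precision complex, a common choice in simulators); the helper names are hypothetical. It measures classical simulation cost, not information a quantum computer can read out.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory to hold all 2**n complex amplitudes (16 bytes each is an assumption)."""
    return (2 ** n_qubits) * bytes_per_amplitude

def human(nbytes: int) -> str:
    """Format a byte count with binary units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(nbytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024

for n in (10, 20, 30, 40, 50):
    print(n, "qubits ->", human(statevector_bytes(n)))
# 10 qubits -> 16 KiB, 30 qubits -> 16 GiB, 50 qubits -> 16 PiB
```

Each extra qubit doubles the memory, so 50 qubits already call for 16 PiB, beyond the memory of ordinary computers.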

This exponentially large state space is what gives quantum computers their potential advantage on specific complex problems (such as factoring large numbers and simulating quantum chemistry), where they could break through the computing-power bottleneck of classical machines.

Note: Drawn by AlphaEngine FinGPT

(3) Basic Principle II of Quantum Computing: Quantum Entanglement

Quantum entanglement is a non-local strong correlation between two or more quantum systems, which Einstein called “spooky action at a distance.”

In this state, multiple qubits form an inseparable whole: the joint state is well defined, but the state of a single qubit cannot be described on its own.

Measuring one particle instantly determines the correlated outcome for its entangled partners, regardless of the distance between them. Bell tests show that these correlations cannot be explained by any local classical model, although they cannot be used to send information faster than light.
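Entanglement is easiest to see in the two-qubit Bell state (|00⟩ + |11⟩)/√2, prepared by a Hadamard gate on the first qubit followed by a CNOT. The plain-Python sketch below (hypothetical helper names, amplitudes listed in the order |00⟩, |01⟩, |10⟩, |11⟩) builds that state and samples measurements. Each qubit on its own looks like a fair coin, yet the two results always agree.

```python
import math
import random

def hadamard_on_first(state):
    """Apply H to the first (left) qubit of a two-qubit state [a00, a01, a10, a11]."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot_first_controls_second(state):
    """Flip the second qubit whenever the first qubit is 1: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def sample(state, rng):
    """Draw one measurement outcome as a two-character bit string, using Born-rule weights."""
    probs = [abs(a) ** 2 for a in state]
    r = rng.random()
    cumulative = 0.0
    for index, p in enumerate(probs):
        cumulative += p
        if r < cumulative:
            return format(index, "02b")
    return format(len(probs) - 1, "02b")  # guard against floating-point round-off

bell = cnot_first_controls_second(hadamard_on_first([1, 0, 0, 0]))
rng = random.Random(7)
results = [sample(bell, rng) for _ in range(1000)]
print(sorted(set(results)))  # ['00', '11']: the two qubits always agree
```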

The non-local correlation characteristic of quantum entanglement endows quantum computing with powerful global collaboration capabilities, which is crucial for solving complex system problems.

Entangled states let quantum computers efficiently represent many-body systems that are difficult for classical computers to handle. In fields such as quantum chemical simulation, new material design, and drug research and development, simulating the complex interactions between molecules could potentially yield exponential speedups.

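Quantum entanglement also underpins some quantum key distribution (QKD) protocols, such as E91.

Any eavesdropping on the entangled channel disturbs its correlations and shows up as an elevated error rate, which both communicating parties can detect by comparing a sample of their results. In principle, this makes the security of key exchange rest on the laws of physics rather than on computational difficulty, although real implementations can still be attacked through hardware imperfections.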
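The detection claim can be illustrated with a toy simulation. To keep it short, this sketch swaps in BB84, a prepare-and-measure protocol that does not use entanglement but relies on the same principle: measuring a quantum state in the wrong basis disturbs it. It assumes an ideal noise-free channel and a simple intercept-resend eavesdropper, and the function name is hypothetical.

```python
import random

def bb84_error_rate(n_bits: int, eavesdrop: bool, seed: int = 1) -> float:
    """Toy BB84 run; returns the error rate on the sifted key (rounds where bases matched)."""
    rng = random.Random(seed)
    errors = sifted = 0
    for _ in range(n_bits):
        bit = rng.randint(0, 1)
        alice_basis = rng.randint(0, 1)  # 0 = rectilinear, 1 = diagonal
        # In this idealized model a photon is fully described by (basis, value).
        photon_basis, photon_value = alice_basis, bit
        if eavesdrop:
            eve_basis = rng.randint(0, 1)
            # Wrong-basis measurement gives a random result and re-prepares the photon in Eve's basis.
            eve_value = photon_value if eve_basis == photon_basis else rng.randint(0, 1)
            photon_basis, photon_value = eve_basis, eve_value
        bob_basis = rng.randint(0, 1)
        bob_value = photon_value if bob_basis == photon_basis else rng.randint(0, 1)
        if bob_basis == alice_basis:     # sifting: keep only matching-basis rounds
            sifted += 1
            errors += bob_value != bit
    return errors / sifted

print(f"error rate without eavesdropper: {bb84_error_rate(20_000, eavesdrop=False):.3f}")
print(f"error rate with intercept-resend: {bb84_error_rate(20_000, eavesdrop=True):.3f}")
```

Without an eavesdropper the sifted key has no errors. With intercept-resend, Eve guesses the wrong basis half the time, and each such guess gives Bob a wrong bit half the time, so the error rate rises to about 25%. Alice and Bob can spot this by publicly comparing a sample of their key.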

(4) Basic Principle III of Quantum Computing: Quantum Interference

The physical essence of quantum interference stems from the wave nature of quantum states.

The superposition state of each qubit can be described by a wave function containing amplitude and phase.

When a quantum system evolves through different paths, the wave functions corresponding to these paths will interfere.

By precisely controlling the phase relationship of quantum states, constructive and destructive interference can be achieved.

When the phases of the wave functions of different paths are the same or similar, their probability amplitudes will be superimposed and enhanced, significantly increasing the probability of measuring that result.

Conversely, when the phases of the wave functions are opposite, their probability amplitudes will cancel each other out, reducing or even eliminating the probability of measuring that result.

This ability to reshape the probability distribution of the final result by controlling the phase is the core physical mechanism for quantum computing to manipulate information.
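The smallest example of this mechanism is one qubit passed through two Hadamard gates. From |0⟩, each output can be reached by two paths. For |0⟩ both paths carry amplitude +1/2 and add up. For |1⟩ they carry +1/2 and -1/2 and cancel. Flipping one phase in between (a Z gate) reverses which output survives. The sketch below uses plain Python and hypothetical helper names.

```python
import math

S = 1 / math.sqrt(2)

def hadamard(state):
    """H maps |0> -> (|0> + |1>)/sqrt(2) and |1> -> (|0> - |1>)/sqrt(2)."""
    a0, a1 = state
    return (S * (a0 + a1), S * (a0 - a1))

def phase_flip(state):
    """Z gate: leaves the |0> amplitude alone and multiplies the |1> amplitude by -1."""
    a0, a1 = state
    return (a0, -a1)

def probabilities(state):
    """Measurement probabilities, rounded to hide floating-point noise."""
    return [round(abs(a) ** 2, 10) for a in state]

start = (1.0, 0.0)  # |0>
print(probabilities(hadamard(start)))                        # [0.5, 0.5]: equal superposition
print(probabilities(hadamard(hadamard(start))))              # [1.0, 0.0]: the |1> paths cancel
print(probabilities(hadamard(phase_flip(hadamard(start)))))  # [0.0, 1.0]: the |0> paths cancel
```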

The core of a quantum algorithm is to exploit quantum interference cleverly so that it solves certain problems faster than the best known classical algorithms.

The design goal of the algorithm is to systematically adjust the phases of each path in the computational process through a series of precise quantum gates. Each quantum gate acts linearly on the entire superposition state.

By designing a sequence of quantum gates, all computational paths leading to the correct answer will produce constructive interference, and their probability amplitudes will be amplified, enhancing the correct solution.

At the same time, all computational paths leading to wrong answers will experience destructive interference, and their probability amplitudes will be weakened or completely cancelled.

In this way, when the system is measured at the end of its evolution, it collapses to the desired solution with high probability.

This is how algorithms such as Shor’s and Grover’s solve specific problems efficiently. Interference concentrates probability on the correct answer, giving Shor’s factoring algorithm an exponential speedup over the best known classical method and Grover’s search algorithm a quadratic speedup.
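Grover’s algorithm is a compact example of interference at work. The sketch below is a plain-Python state-vector simulation, not IBM’s or Nvidia’s tooling, that searches N = 2^n unsorted items for one marked index. The oracle flips the marked item’s phase, and the diffusion step reflects every amplitude about the mean, so the marked amplitude grows by constructive interference while the others shrink. The marked index 3 and n = 10 are arbitrary choices.

```python
import math

def grover_success_probability(n_qubits: int, marked: int, iterations: int) -> float:
    """Probability of measuring the marked item after the given number of Grover iterations."""
    n_items = 2 ** n_qubits
    amps = [1 / math.sqrt(n_items)] * n_items  # uniform superposition (H on every qubit)
    for _ in range(iterations):
        amps[marked] = -amps[marked]           # oracle: flip the phase of the marked item
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]    # diffusion: inversion about the mean
    return amps[marked] ** 2                   # amplitudes stay real in this simulation

n = 10                                         # N = 1024 items
best = round(math.pi / 4 * math.sqrt(2 ** n))  # about (pi/4) * sqrt(N) iterations
for k in (0, 5, 12, best):
    print(k, round(grover_success_probability(n, marked=3, iterations=k), 4))
```

With N = 1024, the success probability climbs above 0.99 after about 25 iterations. A classical search needs on the order of N/2 = 512 guesses on average, and this √N-versus-N gap is the quadratic speedup.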

(5) Six Steps of Quantum Computing

In summary, quantum computing uses all three basic characteristics of quantum mechanics. Superposition lets 2ⁿ computational paths evolve together in the same hardware, greatly saving computational resources. Entanglement ties the qubits into correlated wholes that no classical description can split apart. Finally, interference amplifies the correct answer so that measurement reveals it.

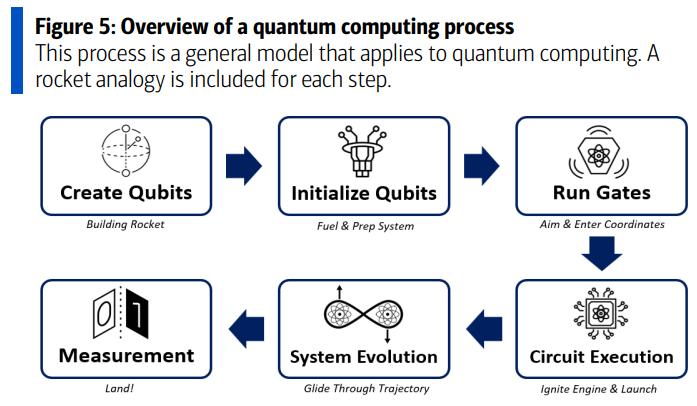

From a macroscopic perspective, the quantum computing process can be divided into six steps, as shown in the following figure.

Figure: Six Steps of Quantum Computing, BofA, AlphaEngine

The first step is to create physical qubits, the hardware foundation of a quantum computer. These qubits must be able to exhibit quantum behaviors such as superposition and entanglement.

The second step is initialization: resetting the qubits to a “clean” starting state and clearing any leftover superposition or entanglement, so every run begins from the same known state. The usual starting state is the computational basis state |0⟩, called “ket zero.”

The third step is to run gates. Place the qubits in superposition and entanglement states through quantum gates, “encoding” the problem to be solved into the quantum system.

The fourth step is circuit execution. Here, the quantum circuit refers to a combination of a series of quantum gates designed to complete a specific computational task.

The fifth step is evolution. The circuit applies quantum gates in a specific order to process the encoded quantum state, and the gates implement the intended logic by steering constructive and destructive interference as the system evolves.

The sixth step is measurement. When all operations are completed, the state of the qubits should contain the solution to the problem.

At this point, the qubits are measured and collapse from the quantum state into classical bits for subsequent analysis or decision-making.

It’s worth noting that the measured qubits don’t “permanently become classical bits” or lose their function. They can be reinitialized to the ground state for future quantum computing.
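The six steps can be mirrored in a toy state-vector simulator. This is a sketch under stated assumptions, not how real hardware or any vendor SDK works. Step 1 (physical qubits) is replaced by a list of 2^n amplitudes. A hypothetical three-gate circuit that prepares a three-qubit GHZ state stands in for steps 3 to 5. Each “shot” re-initializes the register, mirroring the note that measured qubits are reset and reused.

```python
import math
import random
from collections import Counter

# Step 1 (assumption): the "physical qubits" are simulated by 2**n complex amplitudes.

def initialize(n):
    """Step 2: reset every qubit to |0>, i.e. the basis state |00...0>."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    return state

def apply_h(state, q, n):
    """Hadamard on qubit q (qubit 0 is the leftmost bit of the basis index)."""
    bit = 1 << (n - 1 - q)
    s = 1 / math.sqrt(2)
    new = state[:]
    for i in range(len(state)):
        if not i & bit:
            a0, a1 = state[i], state[i | bit]
            new[i], new[i | bit] = s * (a0 + a1), s * (a0 - a1)
    return new

def apply_cnot(state, control, target, n):
    """CNOT: when the control bit is 1, swap amplitudes that differ only in the target bit."""
    cbit, tbit = 1 << (n - 1 - control), 1 << (n - 1 - target)
    new = state[:]
    for i in range(len(state)):
        if i & cbit:
            new[i] = state[i ^ tbit]
    return new

# Steps 3 to 5: a circuit is an ordered list of gates; running it evolves the state.
circuit = [("h", 0), ("cnot", 0, 1), ("cnot", 1, 2)]  # prepares (|000> + |111>)/sqrt(2)

def run(circuit, n):
    state = initialize(n)
    for gate in circuit:
        if gate[0] == "h":
            state = apply_h(state, gate[1], n)
        else:
            state = apply_cnot(state, gate[1], gate[2], n)
    return state

def measure(state, n, rng):
    """Step 6: collapse to one classical bit string with Born-rule probabilities."""
    weights = [abs(a) ** 2 for a in state]
    index = rng.choices(range(len(state)), weights=weights)[0]
    return format(index, f"0{n}b")

rng = random.Random(0)
shots = Counter(measure(run(circuit, 3), 3, rng) for _ in range(1000))  # re-initialize every shot
print(shots)  # only '000' and '111' appear
```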

(6) Mainstream Technological Paths of Quantum Computing

Currently, the global quantum computing industry pursues six mainstream technological paths: superconducting, ion trap, photonic, neutral atom, topological, and spin. Each path has its own strengths and weaknesses because it relies on different underlying physics, and none has yet achieved absolute dominance.

In terms of maturity: superconducting ≈ ion trap > photonic ≈ neutral atom > spin > topological.

The photonic and neutral-atom paths offer stronger long-term scalability, while the superconducting and spin paths are better suited as medium-term transitions.

So far, superconducting machines (IBM’s 127-qubit processor) and ion-trap machines (IonQ’s 32-qubit system) have reached the cloud-service stage and were the first to attempt commercialization. Let’s look at them separately.

The core of superconducting quantum computing is the Josephson junction: circuits built from superconducting materials exhibit macroscopic quantum effects when cooled to extremely low temperatures (about 10 mK).

Alongside its 1,121-qubit “Condor” processor, IBM introduced the “Heron” processor, which delivered a three- to fivefold improvement in device performance over its earlier 127-qubit Eagle processors. IBM also planned to release the 1,386-qubit “Kookaburra” processor in 2025, continuing to consolidate its lead in scaling up.

Google’s new-generation “Willow” chip extended qubit coherence time (T1) to nearly 100 microseconds, about five times that of its previous generation, significantly improving its ability to execute complex quantum algorithms.

The ion trap path has core advantages of ultra-high fidelity (>99.9%) and long coherence time and has been initially commercialized in scenarios requiring high-precision computing.

The core of this technology is to use precisely controlled electromagnetic fields to trap individual charged atoms (ions) in an ultra-high vacuum and use them as qubits.

By using high-precision laser beams to cool and manipulate the internal electronic energy levels and external vibration modes of the ions, the initialization of qubits, quantum logic gate operations, and the readout of the final state are achieved.

As of 2025, the ion trap path has made key breakthroughs in building high-quality, error-correctable quantum computers.

Quantinuum achieved a system with 50 entangled logical qubits, with a two-qubit logical gate fidelity of over 98%, demonstrating significant fault-tolerant computing capabilities and marking an important milestone towards practical fault-tolerant quantum computing.
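Fidelity figures such as the >99.9% and >98% quoted above matter because errors compound over a circuit. A crude back-of-the-envelope model assumes that each gate fails independently (real error models are more complex) and puts the chance that a circuit of G gates runs without error at F^G. The helper names below are hypothetical.

```python
import math

def circuit_success_estimate(gate_fidelity: float, n_gates: int) -> float:
    """Chance that no gate fails, assuming independent gate errors (a simplification)."""
    return gate_fidelity ** n_gates

def max_gates(gate_fidelity: float, target: float = 0.5) -> int:
    """Largest gate count whose estimated success probability stays at or above `target`."""
    return math.floor(math.log(target) / math.log(gate_fidelity))

for f in (0.98, 0.999, 0.9999):
    print(f"gate fidelity {f}: about {max_gates(f)} gates before success drops below 50%")
```

Under this simplified model, 98% fidelity allows only about 34 gates before the success chance falls below one half, 99.9% allows roughly 690, and 99.99% roughly 6,900. This is why deep algorithms need error-corrected logical qubits.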

IonQ leads in commercial deployment. Its Forte Enterprise system has been integrated into data centers, providing a computing capacity of 36 algorithmic qubits (#AQ 36).

Unlike the superconducting path, which requires temperatures close to absolute zero, trapped-ion systems do not need expensive dilution refrigerators. The ions are laser-cooled inside a vacuum chamber that can operate at or near room temperature (some designs add more modest cryogenic cooling to improve the vacuum), which reduces hardware complexity and operating costs.

There is much more to say about the technological paths of quantum computing, a key part of understanding the industry. I’ll elaborate on them in the next issue, which will be released soon. Interested readers can follow this official account.

(7) The Main Bottleneck of Current Quantum Computing: Quantum Decoherence

Quantum decoherence is the fundamental physical bottleneck restricting the practical application of quantum computing.

This process refers to the inevitable interaction between qubits and the external environment (such as temperature fluctuations, electromagnetic radiation, and environmental noise), resulting in the rapid loss of information in the superposition and entanglement states on which parallel computing relies. Eventually, the qubits “degrade” into classical bits, seriously affecting the accuracy and reliability of computing.
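A minimal way to watch decoherence erase interference is a Ramsey-style sequence on one qubit: H, wait, H. This sketch models decoherence as pure exponential dephasing with time constant T2 (an assumption; real devices also suffer energy relaxation and other noise) and tracks a 2x2 density matrix. The 100-microsecond T2 is an illustrative value chosen to echo the Willow figure quoted earlier, and the helper names are hypothetical.

```python
import math

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]  # Hadamard: real and symmetric, so it equals its own conjugate transpose

def matmul(a, b):
    """Multiply two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def apply_h(rho):
    """rho -> H rho H^dagger, which is H rho H here because H is real and symmetric."""
    return matmul(matmul(H, rho), H)

def dephase(rho, t_us, t2_us):
    """Pure dephasing: off-diagonal (coherence) terms decay by exp(-t/T2); populations unchanged."""
    c = math.exp(-t_us / t2_us)
    return [[rho[0][0], rho[0][1] * c], [rho[1][0] * c, rho[1][1]]]

def ramsey_p0(t_us, t2_us=100.0):
    """Probability of measuring 0 after H, a wait of t_us microseconds, then H again."""
    rho = [[1.0, 0.0], [0.0, 0.0]]   # start in |0><0|
    rho = apply_h(rho)               # equal superposition of 0 and 1
    rho = dephase(rho, t_us, t2_us)  # the environment erodes the phase relationship
    rho = apply_h(rho)               # recombine the two paths so they interfere
    return rho[0][0]

for t in (0, 50, 100, 300, 1000):
    print(f"wait {t:>4} us -> P(0) = {ramsey_p0(t):.3f}")
```

With no waiting, the two paths interfere perfectly and the qubit returns to 0 every time. As the wait grows, P(0) = (1 + e^(-t/T2))/2 drifts toward 0.5, so the qubit ends up behaving like a random classical bit.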