Artificial intelligence (AI) and combinatorial optimization are driving the rapid development of scientific research and industrial applications. However, their increasing energy consumption also poses a severe challenge to the sustainability of digital computing.

Meanwhile, most current novel computing systems are good at either AI or optimization, but not both. Many also depend on frequent, energy-intensive digital conversions, which limits their efficiency. In practice, these systems often struggle to work efficiently with the underlying hardware, and they perform poorly on memory-limited neural networks, complex optimization problems, and analog computing noise alike.

What if we rethought computing and used light and analog signals instead of relying on switching between “0” and “1”? Could an approach that needs no frequent conversions and no digital logic break through these limitations?

Against this backdrop, a team from Microsoft Research Cambridge, UK, and its collaborators proposed the “Analog Optical Computer” (AOC). Without any digital conversion, it can efficiently handle both AI inference and combinatorial optimization on the same platform, and it offers significant scalability and energy-efficiency advantages.

The research paper has been published in Nature. Jiaqi Chu, a graduate of Shanghai Jiao Tong University and a principal researcher at Microsoft Research Cambridge, UK, is one of its authors.

Paper link: https://www.nature.com/articles/s41586-025-09430-z

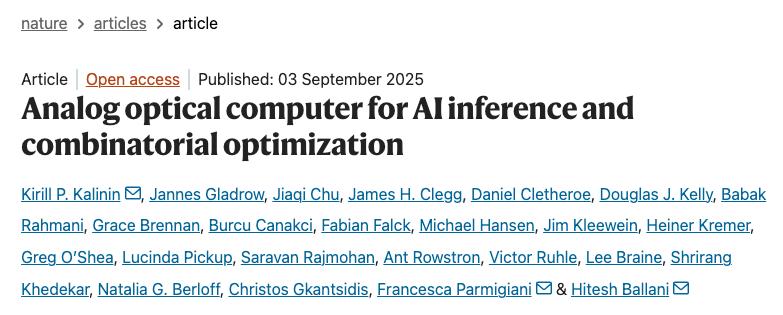

According to the research, AOC combines analog electronics with three-dimensional (3D) optics, so that a single platform can accelerate both AI inference and combinatorial optimization. This “dual-domain capability” rests on a fast fixed-point search: the system iterates until its state stops changing, which requires no digital conversion and also improves robustness to noise. Building on this fixed-point abstraction, AOC can run a new class of compute-intensive neural models with recursive inference, and it can use an advanced gradient-descent method to solve highly expressive optimization problems.

Figure | Schematic diagram of AOC architecture and applications
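To make the fixed-point idea concrete, here is a minimal software sketch; it is not the AOC's actual update rule. It assumes a generic recurrence x ← tanh(W x + b), where the matrix product stands in for the optical stage and tanh plus the bias stand in for the analog electronics. The function name `fixed_point`, the tolerance, and the sizes are illustrative choices.

```python
import numpy as np

def fixed_point(W, b, x0=None, tol=1e-9, max_iter=1000):
    """Iterate x <- tanh(W @ x + b) until successive states differ by < tol.

    Hypothetical stand-in for an analog loop: W @ x plays the optical
    matrix-vector product, tanh and + b play the analog electronics.
    """
    x = np.zeros_like(b) if x0 is None else x0
    for i in range(max_iter):
        x_next = np.tanh(W @ x + b)
        if np.linalg.norm(x_next - x) < tol:
            return x_next, i + 1
        x = x_next
    return x, max_iter

rng = np.random.default_rng(0)
n = 16
W = rng.standard_normal((n, n))
W *= 0.8 / np.linalg.norm(W, 2)   # spectral norm 0.8, so the map is a contraction
b = rng.standard_normal(n)

x_star, steps = fixed_point(W, b)
print("steps:", steps, "residual:", np.linalg.norm(x_star - np.tanh(W @ x_star + b)))

# Noise robustness: inject small random noise at every step of the loop
x = np.zeros(n)
for _ in range(200):
    x = np.tanh(W @ x + b + 1e-3 * rng.standard_normal(n))
print("distance from noiseless fixed point:", np.linalg.norm(x - x_star))
```

Because tanh is 1-Lipschitz and W has spectral norm 0.8, each step shrinks the distance to the fixed point by at least a factor of 0.8; the same contraction keeps the accumulated effect of per-step noise bounded instead of letting it grow.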

The research team says the AOC architecture is built on scalable, consumer-grade technologies, offering a promising path toward faster and more sustainable computing. Because it natively supports iterative, compute-intensive models, it provides a scalable analog computing platform for future innovation in AI and optimization.

Analog Optical Computer: How to Accelerate AI and Optimization Tasks?

At the application level, AOC targets two types of tasks: machine-learning inference and combinatorial optimization. The research team demonstrated its capabilities through four representative case studies, two of each type. The work also shows the value of co-designing the hardware and its abstraction layer, mirroring the way digital accelerators and deep-learning models have co-evolved.

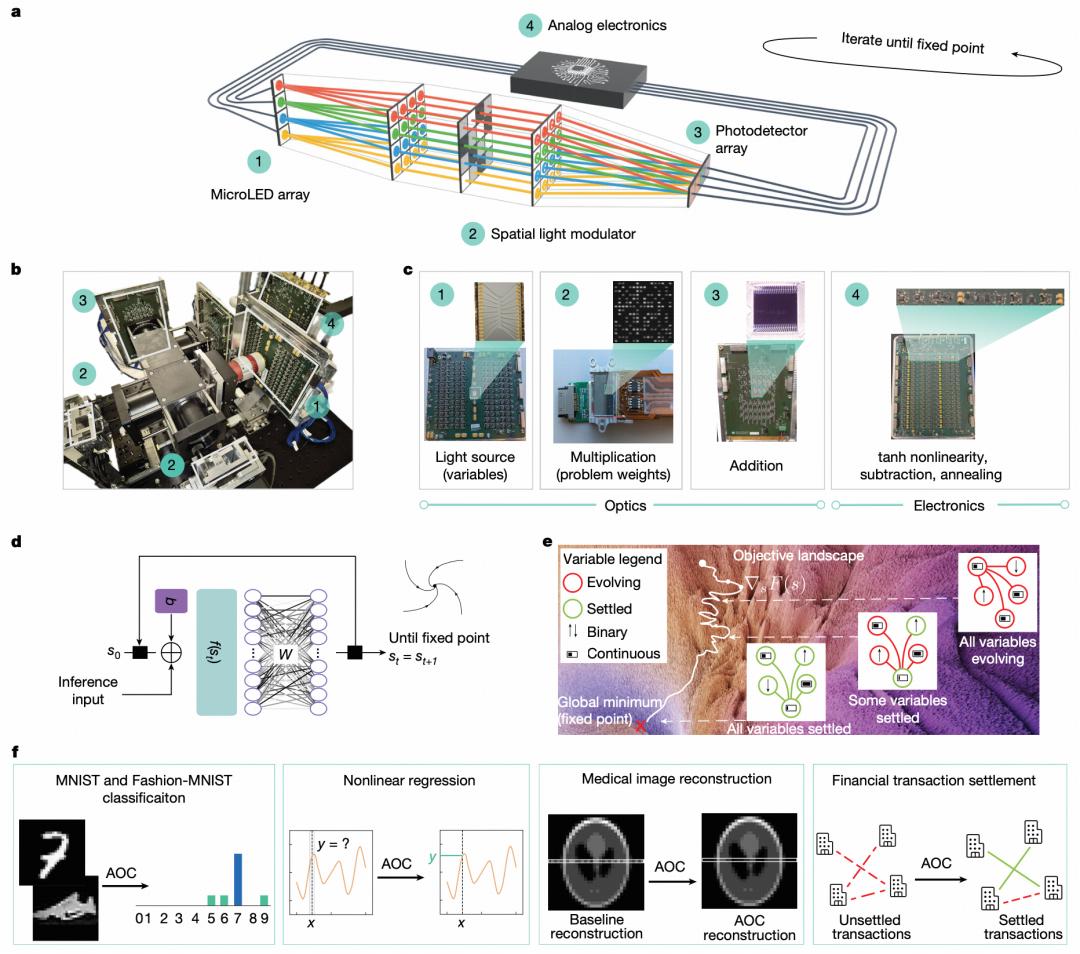

The AOC hardware, which combines 3D optics with analog electronics, ran two machine-learning inference tasks based on an equilibrium model: image classification and nonlinear regression. In both tasks, the models were trained digitally using AOC-DT, a differentiable digital twin of the hardware, and deployed directly on the hardware without further calibration. This demands high accuracy from the hardware and high fidelity from AOC-DT.
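Deploying digitally trained weights without calibration only works if the hardware behaves like its twin. The toy sketch below illustrates this under assumed conditions; it is not AOC-DT. It computes an equilibrium state with a hypothetical helper, `equilibrium_state`, and measures how far the result drifts when the recurrent weights are quantized to 8 bits or perturbed by a fixed per-element gain error of growing size. Both error models (uniform quantization, multiplicative gain error) are assumptions chosen for simplicity.

```python
import numpy as np

def equilibrium_state(W, U, b, inp, iters=300):
    """Fixed point of x = tanh(W x + U inp + b), found by plain iteration."""
    x = np.zeros(W.shape[0])
    for _ in range(iters):
        x = np.tanh(W @ x + U @ inp + b)
    return x

def quantize(M, bits=8):
    """Symmetric uniform quantization to integer levels in [-(2**(bits-1)-1), 2**(bits-1)-1]."""
    scale = np.max(np.abs(M)) / (2 ** (bits - 1) - 1)
    return np.round(M / scale) * scale

rng = np.random.default_rng(1)
n, d = 32, 8
W = rng.standard_normal((n, n))
W *= 0.7 / np.linalg.norm(W, 2)            # contraction, so the fixed point is unique
U = rng.standard_normal((n, d)) / np.sqrt(d)
b = 0.1 * rng.standard_normal(n)
inp = rng.standard_normal(d)

x_twin = equilibrium_state(W, U, b, inp)   # what the (idealized) twin predicts

# Hypothetical hardware 1: recurrent weights stored at 8-bit precision
quant_dev = np.linalg.norm(equilibrium_state(quantize(W), U, b, inp) - x_twin)
print("8-bit deviation:", quant_dev)

# Hypothetical hardware 2: a fixed per-element gain error of growing size
E = rng.standard_normal((n, n))
gain_devs = []
for eps in (0.001, 0.01, 0.05):
    x_hw = equilibrium_state(W * (1 + eps * E), U, b, inp)
    gain_devs.append(np.linalg.norm(x_hw - x_twin))
    print(f"gain error {eps}: deviation {gain_devs[-1]:.2e}")
```

The deviation grows with the mismatch, which is why a no-calibration workflow needs both precise hardware and a twin that models it faithfully.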

In the image classification experiments, AOC demonstrated that weights trained digitally can be transferred to optoelectronic analog inference hardware. Comparing AOC with a linear classifier highlights the contribution of the equilibrium model running on the hardware. The researchers also trained a simple feed-forward model; both the linear classifier and the feed-forward model had the same number of parameters as the AOC hardware. AOC achieved slightly higher accuracy, but because MNIST and Fashion-MNIST are relatively simple datasets, they cannot reveal the full potential of the recursive equilibrium model.

The AOC hardware can also run nonlinear regression models. The researchers chose two nonlinear functions to regress, a Gaussian curve and a sine curve, and the hardware reproduced both accurately. Unlike the Gaussian, the sine curve has multiple minima and maxima, which demands higher fitting accuracy and therefore a more accurate differentiable digital twin (AOC-DT). The result also shows that AOC can support equilibrium models running directly on the hardware.

Figure | Applications of AOC in machine learning inference
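As a rough software analogue of the regression experiment, the sketch below uses the equilibrium states of a randomly initialized recurrent map as features and fits only a linear readout with least squares. This is an assumption-laden stand-in: the paper trains its models through AOC-DT, whereas here every weight except the readout is random, and the sizes, input scales, and Gaussian width are illustrative. The sine target has three maxima and three minima on [-1, 1].

```python
import numpy as np

rng = np.random.default_rng(2)
n = 100                                   # hypothetical number of state variables
W = rng.standard_normal((n, n))
W *= 0.5 / np.linalg.norm(W, 2)           # contraction: unique fixed point per input
u = 5.0 * rng.standard_normal(n)          # input weights (sharpness of the features)
c = rng.uniform(-4.0, 4.0, n)             # offsets spread the transitions over [-1, 1]

def features(xs, iters=100):
    """Equilibrium states s(x) = tanh(W s + u x + c), one column per input x."""
    S = np.zeros((n, xs.size))
    for _ in range(iters):
        S = np.tanh(W @ S + np.outer(u, xs) + c[:, None])
    return np.vstack([S, np.ones(xs.size)]).T   # rows = inputs, plus a bias column

xs = np.linspace(-1.0, 1.0, 400)
Phi = features(xs)
targets = {
    "gaussian": np.exp(-xs**2 / (2 * 0.3**2)),
    "sine": np.sin(3 * np.pi * xs),
}
mse = {}
for name, y in targets.items():
    w, *_ = np.linalg.lstsq(Phi, y, rcond=None)   # linear readout fitted digitally
    mse[name] = np.mean((Phi @ w - y) ** 2)
    print(name, mse[name])
```

Printing both MSE values lets you compare how hard each curve is for a fixed feature budget.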

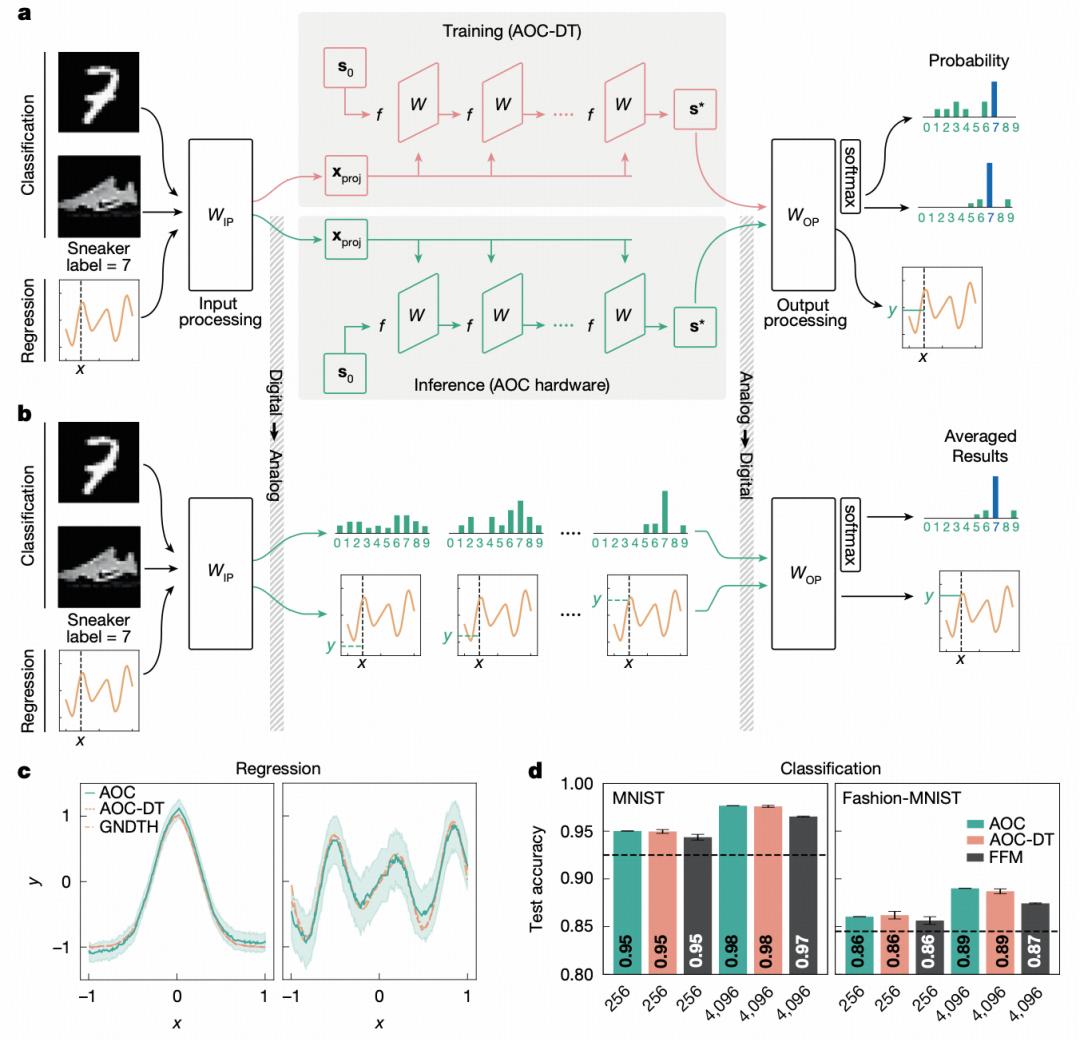

Quadratic unconstrained mixed optimization (QUMO) covers a wide range of combinatorial optimization problems. Solving a QUMO problem means finding an assignment of the variables that minimizes a quadratic objective function. The research team demonstrated two representative QUMO applications on the AOC hardware: medical image reconstruction and financial transaction settlement.
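For reference, one common way to write a QUMO problem is shown below. The notation (the symmetric matrix Q, the vector c, the index sets B for binary and C for continuous variables, and the bounds) is ours, and the paper's sign and scaling conventions may differ. “Unconstrained” here means there are no constraints coupling the variables, only a domain for each variable.

```latex
\min_{\mathbf{x}} \; f(\mathbf{x}) = \mathbf{x}^{\top} Q \, \mathbf{x} + \mathbf{c}^{\top} \mathbf{x}
\quad \text{s.t.} \quad
x_i \in \{0, 1\} \;\; (i \in \mathcal{B}), \qquad
\ell_j \le x_j \le u_j \;\; (j \in \mathcal{C})
```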

For medical imaging, they implemented compressed sensing on the AOC hardware, a technique that reconstructs signals accurately from fewer measurements than conventional sampling requires. The reconstructed images closely match the originals, and all QUMO instances were solved fully in analog, without any digital post-processing. To validate the QUMO formulation of compressed sensing at scale, the researchers used AOC-DT to reconstruct a brain scan from the FastMRI dataset, a problem with more than 200,000 variables. At the typical 4-fold and 8-fold undersampling rates, the mean squared error (MSE) of the reconstruction was below 0.07.
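The idea behind compressed sensing can be illustrated with a standard digital method. The sketch below swaps in ISTA (iterative soft-thresholding for L1-regularized least squares), which is not the QUMO formulation AOC uses; the random Gaussian sensing matrix, the sparsity level, and the regularization weight `lam` are all assumptions. Taking m = n/4 measurements mimics a 4-fold undersampling ratio, and the error is reported as an MSE.

```python
import numpy as np

def ista(A, y, lam, iters=5000):
    """Iterative soft-thresholding for min_x 0.5*||A x - y||^2 + lam*||x||_1."""
    L = np.linalg.norm(A, 2) ** 2             # Lipschitz constant of the data-term gradient
    x = np.zeros(A.shape[1])
    for _ in range(iters):
        z = x - A.T @ (A @ x - y) / L         # gradient step on the data term
        x = np.sign(z) * np.maximum(np.abs(z) - lam / L, 0.0)  # soft threshold (L1 prox)
    return x

rng = np.random.default_rng(3)
n, k = 128, 3
m = n // 4                                    # 4-fold undersampling: n/4 measurements
x_true = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
x_true[support] = rng.choice([-1.0, 1.0], size=k)
A = rng.standard_normal((m, n)) / np.sqrt(m)  # random Gaussian sensing matrix (assumed)
y = A @ x_true                                # noiseless measurements

x_hat = ista(A, y, lam=0.02)
mse = np.mean((x_hat - x_true) ** 2)
rel_err = np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)
print("MSE:", mse, "relative error:", rel_err)
```

The L1 penalty is what lets a sparse signal be recovered from far fewer measurements than unknowns; a small residual error remains because the penalty slightly shrinks the recovered amplitudes.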

In the financial optimization task, they used the AOC hardware to solve a transaction settlement problem. Each securities transaction exchanges payment for securities, and clearinghouses process such transactions in batches. For each batch, the goal of settlement is to maximize the total number or total value of settled transactions. Given the large volume of transactions, together with legal and other constraints, this becomes a complex optimization problem. In this settlement scenario, the AOC hardware found the global optimum within 7 block coordinate descent (BCD) steps, whereas quantum hardware solved the same problem with a success rate of only 40% to 60%.

Figure | Applications of AOC in optimization
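Block coordinate descent optimizes one block of variables at a time while holding the rest fixed. The sketch below is a generic illustration, not the AOC's BCD procedure: a small random binary quadratic objective stands in for the settlement instance (whose exact formulation the article does not give), and each block of four variables is solved exactly by enumeration. The names `objective` and `bcd` and all sizes are hypothetical.

```python
import itertools
import numpy as np

def objective(Q, c, x):
    """Quadratic objective x^T Q x + c^T x."""
    return x @ Q @ x + c @ x

def bcd(Q, c, x0, block_size=4, sweeps=10):
    """Block coordinate descent on binary x: each step solves one block exactly
    (by enumerating all 0/1 assignments) while the other variables stay fixed."""
    x = x0.copy()
    n = len(x)
    blocks = [list(range(i, min(i + block_size, n))) for i in range(0, n, block_size)]
    history = [objective(Q, c, x)]
    for _ in range(sweeps):
        for blk in blocks:
            best_val, best_assign = None, None
            for assign in itertools.product([0.0, 1.0], repeat=len(blk)):
                x_try = x.copy()
                x_try[blk] = assign
                val = objective(Q, c, x_try)
                if best_val is None or val < best_val:
                    best_val, best_assign = val, assign
            x[blk] = best_assign
            history.append(best_val)
    return x, history

rng = np.random.default_rng(4)
n = 12
Q = rng.standard_normal((n, n))
Q = (Q + Q.T) / 2                          # symmetric coupling matrix
c = rng.standard_normal(n)
x0 = rng.integers(0, 2, n).astype(float)   # random binary starting point

x_bcd, history = bcd(Q, c, x0)
best = min(objective(Q, c, np.array(z, dtype=float))
           for z in itertools.product([0, 1], repeat=n))
print("start:", history[0], "BCD:", history[-1], "global min:", best)
```

Because each block step may keep the current assignment, the objective never increases; the brute-force search over all 2^12 assignments gives the global minimum for comparison, though plain BCD is not guaranteed to reach it.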

Using AOC-DT, the researchers also evaluated the algorithm on the hardest quadratic binary problems with linear inequality constraints from the QPLIB benchmark library, formulated as QUMO instances. They compared the AOC method with the commercial solver Gurobi, which typically needs more than a minute to reach the best known solutions to these problems.

Because the computation stays entirely in the analog domain, the overhead of analog-to-digital conversion is kept to a minimum.

Future Potential: Achieving a 100-Fold Increase in Energy Efficiency

Real-world applications, however, place much higher demands on hardware scalability: to handle practical tasks, AOC hardware would need to scale from hundreds of millions to billions of weights.

The research team says AOC's modular architecture could meet this demand. The architecture decomposes the core optical matrix-vector multiplication into smaller sub-matrix and sub-vector multiplications, enabling scalable in-memory computing.
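The decomposition described above is ordinary blocked matrix-vector multiplication. In this sketch each tile product is what a single module could compute, and the partial sums for each output block are accumulated. The tile size of 64 and the function name `blocked_matvec` are hypothetical, chosen only to show that the tiled result matches the full product.

```python
import numpy as np

def blocked_matvec(W, x, tile=64):
    """Compute W @ x by splitting W into tile-by-tile sub-matrices and x into
    matching sub-vectors, accumulating partial products per output block."""
    m, n = W.shape
    y = np.zeros(m)
    for r in range(0, m, tile):
        for c in range(0, n, tile):
            # One sub-matrix x sub-vector product, e.g. one module's job
            y[r:r + tile] += W[r:r + tile, c:c + tile] @ x[c:c + tile]
    return y

rng = np.random.default_rng(5)
W = rng.standard_normal((300, 500))   # deliberately not a multiple of the tile size
x = rng.standard_normal(500)
y = blocked_matvec(W, x)
print(np.allclose(y, W @ x))
```

Since the tiles are independent, they can run in parallel on separate modules, with only the final per-row accumulation needing to combine their outputs.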

The research team estimates that AOC could support models with 100 million to 2 billion parameters, requiring 50 to 1,000 optical modules. If a single optical module could handle both positive and negative weights, the number of modules required would be halved. All components used in AOC, including microLEDs, photodetectors, spatial light modulators (SLMs), and analog electronics, already have a continuously expanding manufacturing ecosystem that supports wafer-level production.
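The module counts follow simple arithmetic. Both ends of the estimate (100 million weights on 50 modules, 2 billion on 1,000) imply about 2 million weights per module; that per-module figure is our inference, not a number stated in the text. The helper `modules_needed` is hypothetical and applies the halving for modules that handle signed weights.

```python
import math

# Assumption: capacity inferred from 100M / 50 and 2B / 1000, not stated in the source
WEIGHTS_PER_MODULE = 2_000_000

def modules_needed(num_weights, signed_in_one_module=False):
    """Number of optical modules for a weight matrix of the given size."""
    modules = math.ceil(num_weights / WEIGHTS_PER_MODULE)
    # If one module handles positive and negative weights, half as many are needed
    return math.ceil(modules / 2) if signed_in_one_module else modules

for params in (100_000_000, 2_000_000_000):
    print(params, modules_needed(params), modules_needed(params, signed_in_one_module=True))
```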

AOC's energy efficiency is determined by its operating speed and power consumption. Its speed is limited by the bandwidth of the optoelectronic components, typically 2 GHz or higher. For a matrix with 100 million weights, 25 AOC modules would draw an estimated 800 W while delivering 400 peta-operations per second (POPS). At 8-bit weight precision, this corresponds to an energy efficiency of 500 TOPS per watt, whereas the latest GPUs reach at most about 4.5 TOPS per watt of system-level efficiency on dense matrices at the same precision.
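These figures can be checked with back-of-the-envelope arithmetic, assuming each weight contributes one multiply and one add (2 operations) per matrix-vector pass and that one pass completes per clock cycle at 2 GHz. Under those assumptions, 100 million weights give the quoted 400 POPS, and dividing by 800 W gives 500 TOPS per watt, about 111 times the 4.5 TOPS per watt cited for GPUs, consistent with the roughly 100-fold claim.

```python
weights = 100e6          # matrix size from the text
clock_hz = 2e9           # optoelectronic bandwidth from the text (2 GHz)
ops_per_weight = 2       # assumption: one multiply + one add per weight per pass
power_w = 800.0          # estimated power for 25 modules, from the text
gpu_tops_per_w = 4.5     # GPU figure from the text

ops_per_s = weights * ops_per_weight * clock_hz      # operations per second
aoc_tops_per_w = ops_per_s / power_w / 1e12          # TOPS per watt
print("POPS:", ops_per_s / 1e15)
print("TOPS/W:", aoc_tops_per_w)
print("ratio vs GPU:", aoc_tops_per_w / gpu_tops_per_w)
```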

In summary, the AOC architecture shows good prospects for scaling to practical machine-learning and optimization tasks and could deliver roughly a 100-fold improvement in energy efficiency.

Looking ahead, AOC's co-design approach, which closely aligns hardware with machine-learning and optimization algorithms, is expected to keep the hardware-algorithm innovation flywheel turning, which is crucial for achieving sustainable computing.

This article is from the WeChat official account “Academic Headlines” (ID: SciTouTiao). The author is Academic Headlines. It is published by 36Kr with authorization.