Why Is the “Decentralized AI Cloud” Hard to Implement?

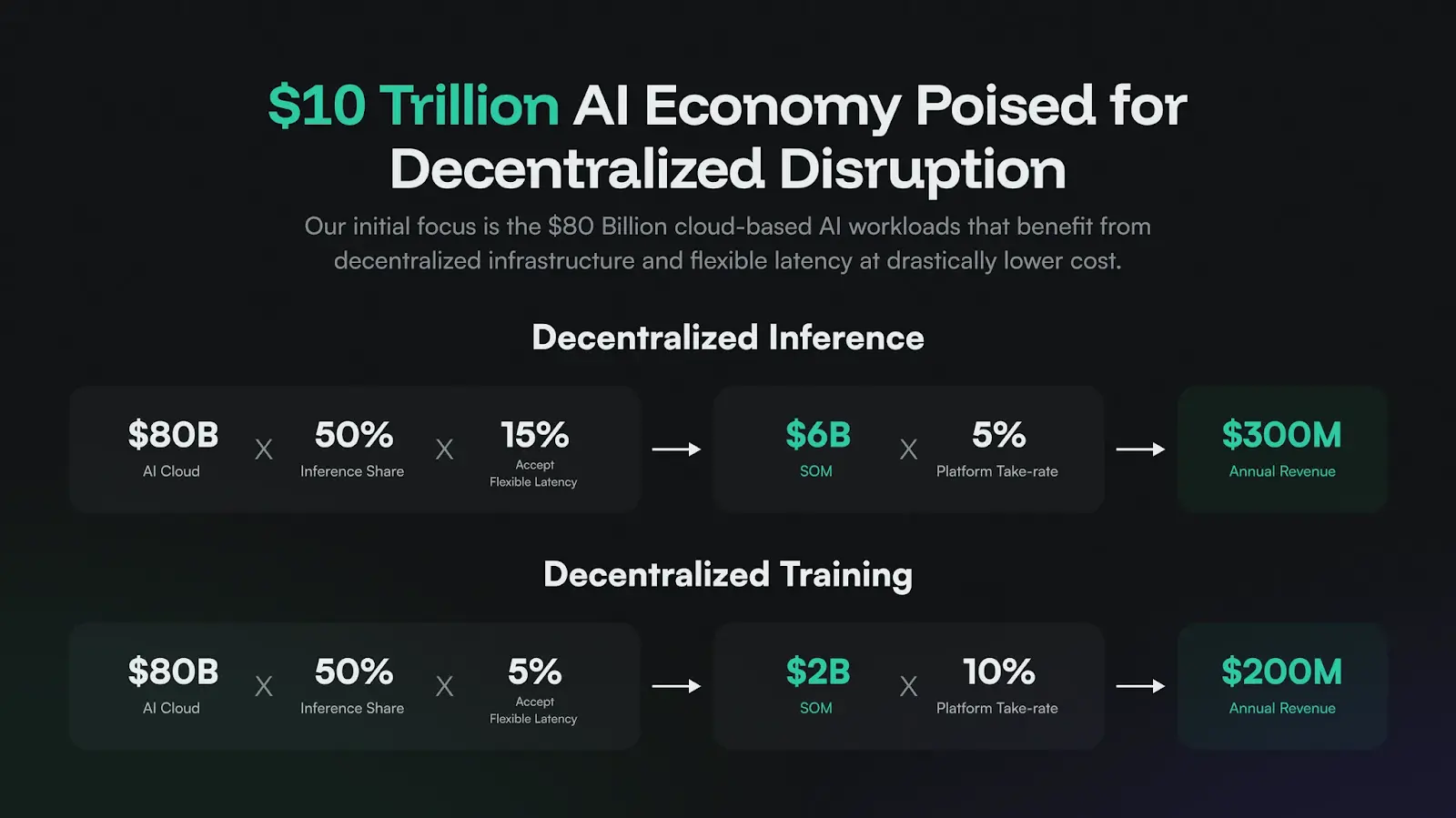

The market AI can address is vast, and the overlooked potential of the Web2 market is becoming increasingly apparent. The AI cloud services market has already reached $80 billion and is growing about 32% per year. Narrowed to inference and training, roughly $8 billion of that market is serviceable. Even under a conservative revenue-share model, Gata targets annual revenue of $500 million.
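These figures imply a concrete market share. The sketch below divides the $500 million target by the roughly $8 billion serviceable segment and, purely as an illustration, compounds the $80 billion market at a constant 32% per year. The constant-growth assumption is ours; the source does not claim the rate will hold.

```python
# Figures quoted in the article (USD).
TOTAL_MARKET = 80e9      # AI cloud services market today
SERVICEABLE = 8e9        # inference + training segments Gata can serve
REVENUE_TARGET = 500e6   # Gata's stated annual revenue target
GROWTH_RATE = 0.32       # annual growth rate of the overall market


def implied_share(target: float, serviceable: float) -> float:
    """Fraction of the serviceable market that the revenue target represents."""
    return target / serviceable


def project(value: float, rate: float, years: int) -> float:
    """Compound `value` at a constant annual `rate` (illustrative assumption)."""
    return value * (1 + rate) ** years


if __name__ == "__main__":
    share = implied_share(REVENUE_TARGET, SERVICEABLE)
    print(f"Implied share of serviceable market: {share:.2%}")
    for year in range(1, 4):
        size = project(TOTAL_MARKET, GROWTH_RATE, year)
        print(f"Year {year}: ${size / 1e9:.1f}B")
```

The target works out to 6.25% of the serviceable segment; if 32% growth held for three years, the overall market would reach about $184 billion.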

Meanwhile, although the AI×Crypto sector in Web3 is still early, it is growing strongly. “AI” tokens have become one of the leading narratives in the crypto market, with a combined market capitalization close to $30 billion on mainstream trackers.

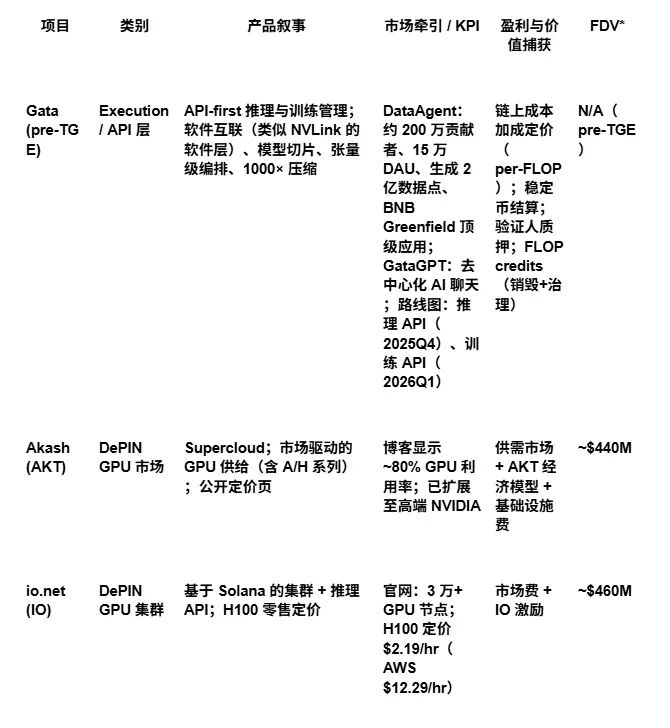

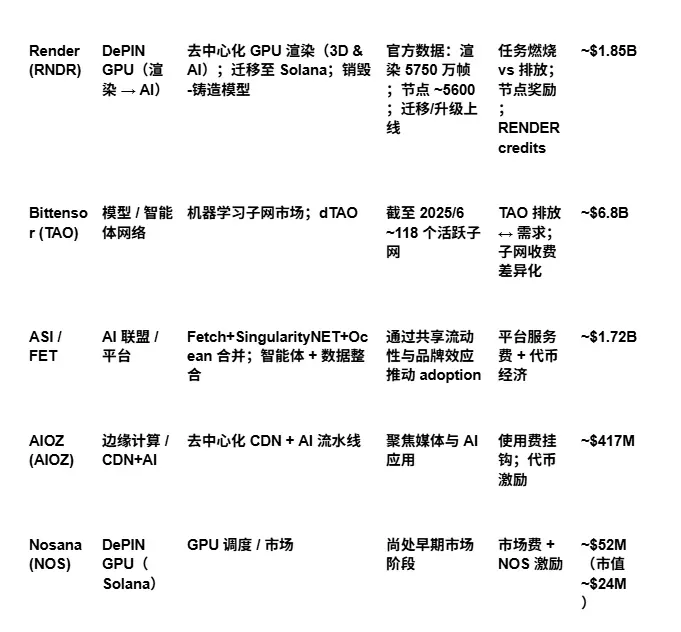

However, this single narrative conceals four distinct business models: (1) DePIN, (2) model and agent networks, (3) general AI platforms and alliances, and (4) edge computing, CDN, and media infrastructure. Each monetizes a different bottleneck and carries its own risk exposures, key performance indicators (KPIs), and token-economic logic.

Take the DePIN track as an example. Akash, io.net, and Render can be understood as entry points to raw GPU/CPU computing power: they tokenize access to hardware and match supply with demand. The model works because these networks still hold a significant price advantage over hyperscale cloud providers. Renting a high-end GPU on a DePIN network typically costs single-digit dollars per hour, versus double digits on mainstream cloud platforms, and the gap is even wider for multi-GPU configurations.
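Because rental cost scales with the number of GPUs, any per-GPU price difference is multiplied in multi-GPU setups. The sketch below makes that explicit. The $2 and $10 per GPU-hour rates and the `GpuOffer` type are hypothetical placeholders chosen only to fit the “single-digit versus double-digit” description; they are not quotes from Akash, io.net, Render, or any cloud provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuOffer:
    """An hourly per-GPU price quote (placeholder figures, not real quotes)."""
    provider: str
    usd_per_gpu_hour: float

    def cost(self, gpus: int, hours: float) -> float:
        """Total rental cost for `gpus` GPUs over `hours` hours."""
        return self.usd_per_gpu_hour * gpus * hours


# Hypothetical rates matching "single-digit" vs "double-digit" dollars per hour.
DEPIN = GpuOffer("depin-network", 2.0)
HYPERSCALER = GpuOffer("hyperscaler", 10.0)

if __name__ == "__main__":
    month = 24 * 30  # hours of continuous use over 30 days
    for gpus in (1, 8, 64):
        gap = HYPERSCALER.cost(gpus, month) - DEPIN.cost(gpus, month)
        print(f"{gpus:>2} GPUs for 30 days: ${gap:,.0f} more on the hyperscaler")
```

With flat per-GPU pricing the ratio stays constant (5x here) and only the absolute gap grows with GPU count, from $5,760 for one GPU to $46,080 for eight over 30 days. If a hyperscaler also charges a premium for multi-GPU instances, the ratio widens as well.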

The supply side is also maturing quickly: tasks run longer, utilization is rising, and “toy” experimental workloads are declining. Key metrics to track in this space include GPU model mix (e.g., A100/H100/H200), utilization, task success rate, SLA fulfillment, unit computing cost ($/FLOP), and platform commission rate.
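Most of these metrics are simple ratios over job records. As a minimal sketch, assuming a hypothetical per-job schema (the `Job` fields below are illustrative, not any platform's actual data model), they could be aggregated as follows; unit cost is computed here from what users paid per FLOP.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    """One finished job record (hypothetical schema for illustration)."""
    gpu_model: str
    allocated_hours: float  # GPU-hours reserved for the job
    busy_hours: float       # GPU-hours actually spent computing
    succeeded: bool
    met_sla: bool
    flops: float            # floating-point operations executed
    gross_usd: float        # amount the user paid
    commission_usd: float   # platform's share of gross_usd


def kpis(jobs: list[Job]) -> dict[str, object]:
    """Aggregate DePIN health metrics over a batch of jobs."""
    if not jobs:
        raise ValueError("need at least one job")
    n = len(jobs)
    gross = sum(j.gross_usd for j in jobs)
    return {
        "gpu_mix": Counter(j.gpu_model for j in jobs),
        "utilization": sum(j.busy_hours for j in jobs)
        / sum(j.allocated_hours for j in jobs),
        "success_rate": sum(j.succeeded for j in jobs) / n,
        "sla_fulfillment": sum(j.met_sla for j in jobs) / n,
        "usd_per_flop": gross / sum(j.flops for j in jobs),
        "commission_rate": sum(j.commission_usd for j in jobs) / gross,
    }


if __name__ == "__main__":
    # Made-up sample jobs, not real platform data.
    jobs = [
        Job("H100", 10.0, 8.0, True, True, 1e18, 20.0, 2.0),
        Job("A100", 10.0, 6.0, False, False, 5e17, 10.0, 1.0),
    ]
    for name, value in kpis(jobs).items():
        print(f"{name}: {value}")
```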

The weaknesses of these DePIN platforms are just as clear: node quality varies widely, parallel training of large models is held back by the lack of efficient interconnects, and once token rewards shrink, users and nodes tend to churn.

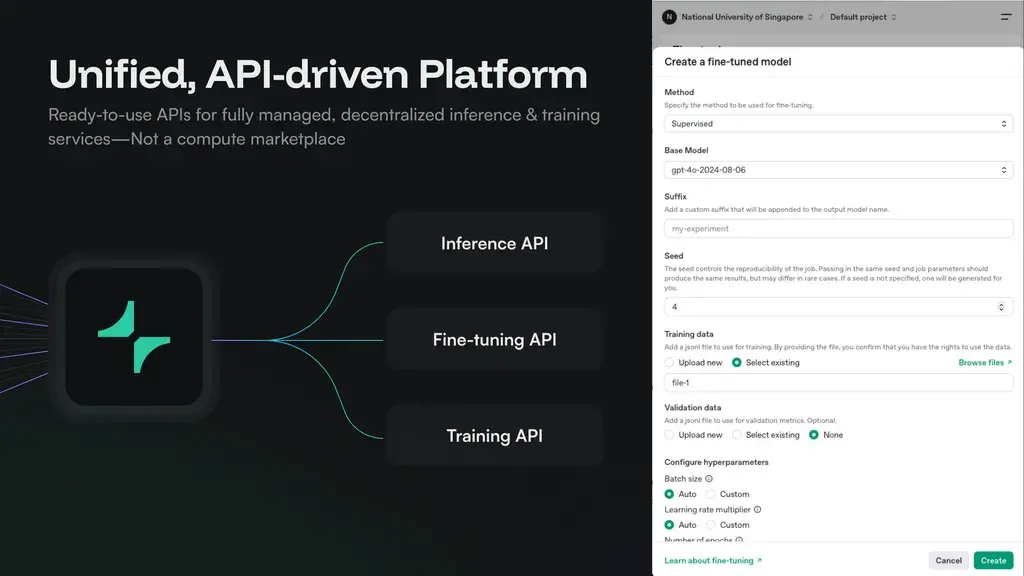

This is precisely where Gata’s value proposition lies. Through software orchestration, Gata stitches distributed nodes into a cluster that behaves consistently and can be managed as a unit, then exposes it to developers and applications through a simple API. This addresses the structural flaws of the decentralized compute market and gives the fragmented network the stability and availability of a traditional hosted cloud, so that execution infrastructure for decentralized AI can finally take root.

At the model and agent network level, Bittensor and Autonolas operate higher up the stack. Rather than simply renting out boxes of compute, they coordinate models, tasks, or agents through “subnets” and reward valuable work. The field is a testing ground full of experimentation, with many ongoing innovations, real-time market validation of model quality, and genuine community-driven momentum. Key things to watch include the number of active subnets and services, how incentives are distributed, task volume, performance on quality benchmarks, and whether demand-side fees can exceed token inflation; if fees do not grow with usage, value capture will gradually erode. Gata does not compete with these networks directly but complements them: agents and networks alike ultimately need a reliable, transparently priced execution environment to operate at scale, and Gata positions itself as exactly that, “the inference and training execution layer with clear economic logic.”
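The fee-versus-inflation test can be expressed as two ratios: fees divided by the dollar value of newly emitted tokens (above 1.0, usage pays for more than dilution), and fees per task over time (if it falls while volume rises, value capture is eroding). The sketch below is a minimal illustration; the `Period` fields and the sample figures are hypothetical, not data from Bittensor, Autonolas, or any other network.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Period:
    """Network activity over one period (hypothetical schema)."""
    tasks: int              # demand-side task volume
    fees_usd: float         # fees paid by users for those tasks
    tokens_emitted: float   # new tokens minted as rewards (inflation)
    token_price_usd: float

    @property
    def coverage(self) -> float:
        """Fees / USD value of emissions; above 1.0, fees exceed inflation."""
        return self.fees_usd / (self.tokens_emitted * self.token_price_usd)

    @property
    def fee_per_task(self) -> float:
        return self.fees_usd / self.tasks


def fees_keep_pace(periods: list[Period]) -> bool:
    """True if fee revenue per task never declines between consecutive
    periods, i.e. total fees grow at least in proportion to usage."""
    return all(b.fee_per_task >= a.fee_per_task
               for a, b in zip(periods, periods[1:]))


if __name__ == "__main__":
    # Made-up history for illustration only.
    history = [
        Period(tasks=1_000, fees_usd=5_000.0, tokens_emitted=10_000.0, token_price_usd=1.0),
        Period(tasks=2_000, fees_usd=8_000.0, tokens_emitted=10_000.0, token_price_usd=1.0),
    ]
    for p in history:
        print(f"coverage={p.coverage:.2f}, fee/task=${p.fee_per_task:.2f}")
    print("fees keep pace with usage:", fees_keep_pace(history))
```

In this made-up history, coverage improves from 0.50 to 0.80, yet fees per task drop from $5 to $4: total fees grow, but not in step with usage.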

In the broader category of general AI platforms and alliances, ASI (formed by the merger of Fetch.ai, SingularityNET, and Ocean Protocol) integrates agents, data, and services under a single token economy. Its core assumption is that shared liquidity and brand effects will drive adoption by developers and enterprises. Success, however, will depend not only on the token mechanism but also on developer-ecosystem activity, integrations, marketplace trading volume, and the ability to set standards quickly on a few workloads where the platform has an edge. The challenge lies in post-merger execution: coordination costs rise sharply, and the platform risks losing focus. Here too, Gata is a neighbor rather than a rival. Larger platforms bring more agents and data flows, and those still need a transparent, scalable compute foundation.

By analogy, Uniswap opened up liquidity by abstracting trading into APIs, while Gata opens the door to decentralized AI by abstracting computing power into APIs. AI does not have to continue being the most expensive monopolistic industry in the world, and computing power does not have to remain locked behind corporate walls.

Today, the bottleneck for artificial intelligence is not a lack of innovation but a lack of access to computing power. The AI cloud market is controlled by hyperscale giants such as AWS, Azure, and Google Cloud, the “landlords” of the new digital empire, who keep raising the financial barrier to access. Training a frontier AI model can already cost tens to hundreds of millions of dollars, and if the trend continues the figure may exceed $1 billion by 2027, a threshold that shuts out nearly everyone except the largest labs. Even “basic” AI tools are not cheap: enterprise-grade instances cost $100 to $5,000 per month. This is not innovation; it is a tax.

In theory, decentralization should be the natural hedge. In practice, it has not worked so far. Most so-called “decentralized computing” solutions are merely marketplaces that sell scattered GPU nodes one at a time, and that cannot support real AI workloads. These networks lack a decentralized counterpart to NVIDIA’s NVLink interconnect and a unified network fabric that would let distributed machines operate as one data center. Without interconnection, you are not a data center; you are just a pile of scattered parts. This is why decentralized AI clouds have yet to take root.
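To put a rough number on why interconnection matters, the sketch below estimates the bandwidth term of one ring all-reduce of fp16 gradients, using the standard result that each node sends 2(N-1)/N of the payload. The 900 GB/s figure is NVIDIA's quoted total (bidirectional) NVLink bandwidth for an H100 SXM GPU; the 1 Gbit/s internet link, the 7-billion-parameter model, and the 8-node ring are illustrative assumptions, and latency, congestion, and compute overlap are ignored.

```python
# Illustrative bandwidth figures, in gigabytes per second.
NVLINK_H100_GB_S = 900.0     # NVIDIA's quoted total NVLink bandwidth per H100 SXM
INTERNET_1GBIT_GB_S = 0.125  # assumed 1 Gbit/s wide-area link (1/8 GB/s)


def ring_allreduce_seconds(num_params: float, bandwidth_gb_s: float,
                           nodes: int, bytes_per_param: int = 2) -> float:
    """Bandwidth-only time for one ring all-reduce of a gradient buffer.

    Each node sends 2 * (N - 1) / N of the payload. Latency, congestion and
    overlap with compute are ignored, so real timings will be worse.
    """
    if nodes < 2:
        return 0.0  # nothing to exchange with a single node
    payload_gb = num_params * bytes_per_param / 1e9
    return 2 * (nodes - 1) / nodes * payload_gb / bandwidth_gb_s


if __name__ == "__main__":
    params, nodes = 7e9, 8  # assumed 7B-parameter model on an 8-node ring
    fast = ring_allreduce_seconds(params, NVLINK_H100_GB_S, nodes)
    slow = ring_allreduce_seconds(params, INTERNET_1GBIT_GB_S, nodes)
    print(f"NVLink:   {fast:.3f} s per all-reduce")
    print(f"Internet: {slow:.0f} s per all-reduce ({slow / fast:,.0f}x slower)")
```

Under these assumptions one synchronization step takes a few hundredths of a second over NVLink but over three minutes on the 1 Gbit/s link. Even if the NVLink figure is halved to count one direction only, the gap stays in the thousands.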

How Does Gata Truly Decentralize AI Computing Power?

This is the moment for Gata to shine. Gata is not just another compute marketplace; it is a fully managed, API-first decentralized AI cloud that developers can access at any time and scale anywhere. Its breakthrough is a software-defined, decentralized version of the network interconnect layer: instead of relying on expensive proprietary hardware, it binds distributed computing power into a single execution layer in code. What previously required billions of dollars of proprietary interconnect hardware is now achieved through a software layer.

Gata’s mission is simple: to truly decentralize AI computing power through the following three principles:

Affordable: Transparent cost-plus pricing model.

Accessible: Anyone can contribute computing power, and anyone can use it.

Auditable: Every transaction is recorded on-chain.

From independent researchers to startup teams to cutting-edge laboratories, Gata opens the path to innovation while dismantling the toll booths set up by hyperscale cloud providers.

Gata turns decentralized compute supply into a supercomputer through an interconnection layer built on the following core capabilities:

Model sharding across multiple nodes;

Tensor-level scheduling to enhance execution efficiency;

Asynchronous execution and compression mechanisms, reducing performance bottlenecks by up to 1000 times.
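To make the first and third capabilities more concrete, here is a minimal sketch of two standard techniques of this kind. It is not Gata's implementation, which the article does not describe. `shard_layers` shows one common form of model sharding (pipeline-style, by layer), splitting layers into contiguous stages in proportion to each node's memory; `topk_compress` is top-k gradient sparsification with error feedback, a well-known way to shrink traffic between nodes. Function names, node names, and sizes are hypothetical.

```python
def shard_layers(num_layers: int, node_mem_gb: dict[str, float]) -> dict[str, range]:
    """Split layers into contiguous pipeline stages, sized by each node's memory.

    Uses largest-remainder rounding so every layer is assigned exactly once.
    """
    total = sum(node_mem_gb.values())
    quotas = {n: num_layers * m / total for n, m in node_mem_gb.items()}
    counts = {n: int(q) for n, q in quotas.items()}
    leftover = num_layers - sum(counts.values())
    # Give remaining layers to the nodes with the largest fractional remainders.
    by_remainder = sorted(quotas, key=lambda n: quotas[n] - counts[n], reverse=True)
    for n in by_remainder[:leftover]:
        counts[n] += 1
    shards, start = {}, 0
    for n, c in counts.items():
        shards[n] = range(start, start + c)
        start += c
    return shards


def topk_compress(grad: list[float], residual: list[float],
                  k: int) -> tuple[dict[int, float], list[float]]:
    """Top-k gradient sparsification with error feedback.

    Adds the residual left over from earlier steps, keeps the k entries with
    the largest magnitude for transmission, and carries the rest forward as
    the new residual so no gradient mass is permanently dropped.
    """
    acc = [g + r for g, r in zip(grad, residual)]
    keep = sorted(range(len(acc)), key=lambda i: abs(acc[i]), reverse=True)[:k]
    sparse = {i: acc[i] for i in keep}
    new_residual = [0.0 if i in sparse else v for i, v in enumerate(acc)]
    return sparse, new_residual


if __name__ == "__main__":
    # Hypothetical nodes with different GPU memory sizes (GB).
    print(shard_layers(32, {"node-a": 80.0, "node-b": 40.0, "node-c": 40.0}))
    sparse, residual = topk_compress([0.1, -2.0, 0.5, 3.0], [0.0] * 4, k=2)
    print(sparse, residual)
```

Sending k of n gradient values cuts the payload by roughly n/k before counting the indices, and the residual lets small updates accumulate until they are large enough to be sent.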

The end result is a decentralized infrastructure that possesses the same scalability as centralized clouds but without vendor lock-in, price manipulation, or geopolitical bottlenecks.

At the Web3 level, Gata fully embraces its crypto-native economic model.

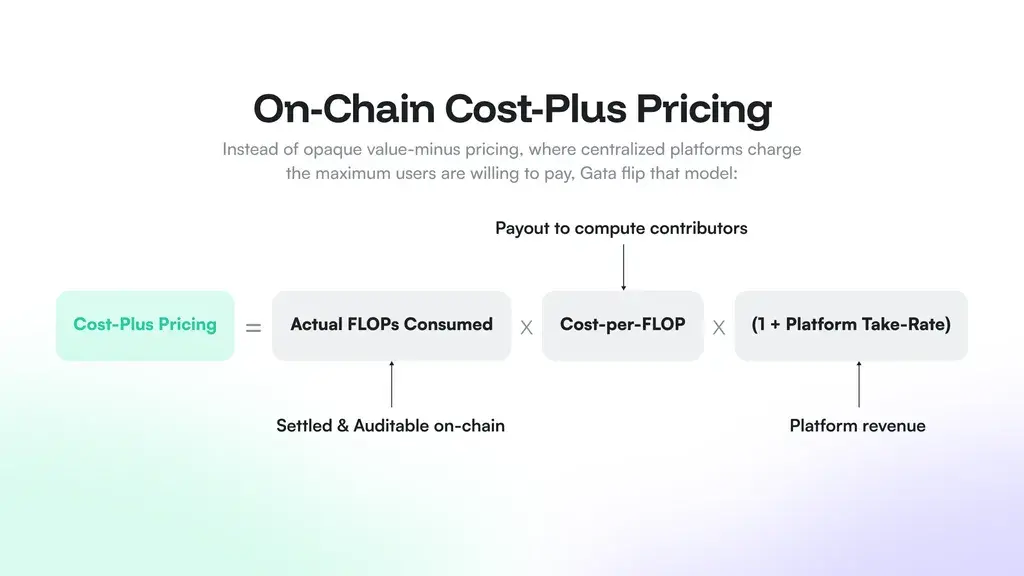

Unlike hyperscale cloud providers, which charge whatever the market will bear, Gata uses an on-chain cost-plus pricing mechanism: the price of each floating-point operation (FLOP) is published transparently on-chain.

Users only pay for the resources they actually consume;

Contributors earn income directly based on the computing power they provide;

All fund flows are completely auditable, transparent, and trustless.
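Once the per-FLOP cost and markup are public, cost-plus pricing reduces to simple arithmetic: the user pays FLOPs × cost × (1 + markup), the contributor receives FLOPs × cost, and the difference is the platform's margin. The sketch below illustrates that split. The `CostPlusRate` structure, the per-PFLOP quoting unit, the 10% markup, and the 6-decimal rounding are all assumptions for illustration, not Gata's published parameters. It uses `Decimal` rather than floats so monetary amounts round predictably.

```python
from dataclasses import dataclass
from decimal import ROUND_DOWN, Decimal

# Assumed settlement precision of 6 decimal places (hypothetical choice).
MICRO = Decimal("0.000001")
PFLOP = Decimal(10**15)  # prices are quoted per 10**15 FLOPs for readability


@dataclass(frozen=True)
class CostPlusRate:
    """A published cost-plus rate (hypothetical structure, not Gata's contract)."""
    cost_per_pflop: Decimal  # what the contributor is paid per PFLOP, in USD
    markup: Decimal          # platform margin, e.g. Decimal("0.10") for 10%

    @property
    def price_per_pflop(self) -> Decimal:
        """Price charged to users: cost plus the fixed markup."""
        return self.cost_per_pflop * (1 + self.markup)


def settle(rate: CostPlusRate, flops: int) -> dict[str, Decimal]:
    """Split one job's charge between contributor and platform.

    Amounts are rounded down to the assumed settlement precision.
    """
    pflops = Decimal(flops) / PFLOP
    user_pays = (pflops * rate.price_per_pflop).quantize(MICRO, rounding=ROUND_DOWN)
    contributor = (pflops * rate.cost_per_pflop).quantize(MICRO, rounding=ROUND_DOWN)
    return {
        "user_pays": user_pays,
        "contributor_earns": contributor,
        "platform_fee": user_pays - contributor,
    }


if __name__ == "__main__":
    rate = CostPlusRate(cost_per_pflop=Decimal("0.002"), markup=Decimal("0.10"))
    print(settle(rate, flops=5 * 10**18))
```

With these illustrative numbers, a job of 5 × 10^18 FLOPs at $0.002 per PFLOP and a 10% markup charges the user $11, pays the contributor $10, and leaves $1 as the platform fee, and every term of that split can be recomputed by anyone from the published rate.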

Billing settles in stablecoins, so payments complete instantly. Whether contributors are in Lagos, Seoul, or São Paulo, they can bring computing power online and receive rewards within seconds. There are no opaque billing cycles and no 30-day settlement waits, only immediate liquidity for contributors and stable pricing for users.

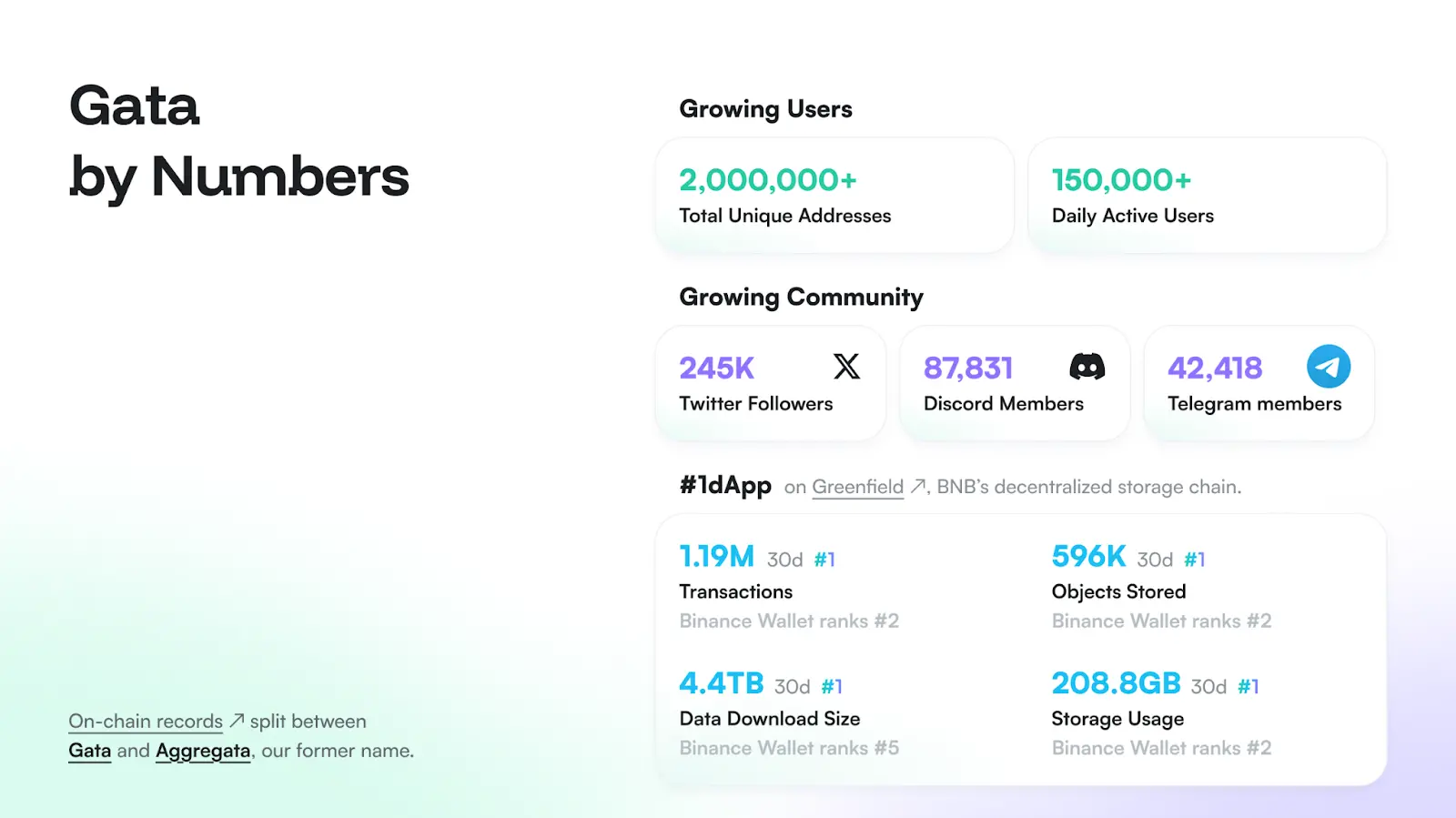

And these are not white-paper fantasies: Gata has already launched products with real usage at scale:

DataAgent → A data factory driven by decentralized inference.

Nearly 2 million contributors

150,000 daily active users (DAU)

Has generated 200 million data points

Ranks as a top dApp on BNB Greenfield

GataGPT → A decentralized AI chat product that supports side-by-side responses, where users help train the model and earn rewards during use.

Planned for launch in the fourth quarter of 2025:

Production-grade inference and training API: the cornerstone of Gata’s open execution infrastructure.

The community is also large: 249,000 followers on X and 86,000 active members on Discord. This is not vaporware but a tangible, user-driven project that is already up and running.

Gata is building a decentralized AI supercomputer: permissionless, fully transparent, and globally accessible. The question is not whether this transformation will happen, but when it will happen. And when all of this becomes a reality, you will remember who paved the way for this open path.

Project Comparison Table

Conclusion

From a valuation perspective, Bittensor tops the list with an FDV of about $6.8 billion, followed by Render at about $1.85 billion, while Akash, io.net, and AIOZ sit in the $400-500 million FDV range, the sector’s mid-tier. Gata, by contrast, is still pre-TGE, with an estimated funding valuation of $100-120 million. In terms of product traction and user volume, however, Gata shows a significant first-mover advantage: DataAgent has gathered nearly 2 million contributors and 150,000 DAU and ranks as a top application on BNB Greenfield. This “unpriced but already grounded” position gives Gata a rare balance between narrative and fundamentals.

ChainCatcher reminds readers to view blockchain rationally, enhance risk awareness, and be cautious of various virtual token issuances and speculations. All content on this site is solely market information or related party opinions, and does not constitute any form of investment advice.