Denby, B. et al. Silent speech interfaces. Speech Commun. 52, 270–287 (2010). This comprehensive review formalizes SSIs as a research field.

Gonzalez-Lopez, J. A. et al. Silent speech interfaces for speech restoration: a review. IEEE Access 8, 177995–178021 (2020).

Guenther, F. H. et al. in Speech Motor Control in Normal and Disordered Speech 29–49 (Oxford Univ. Press, 2004).

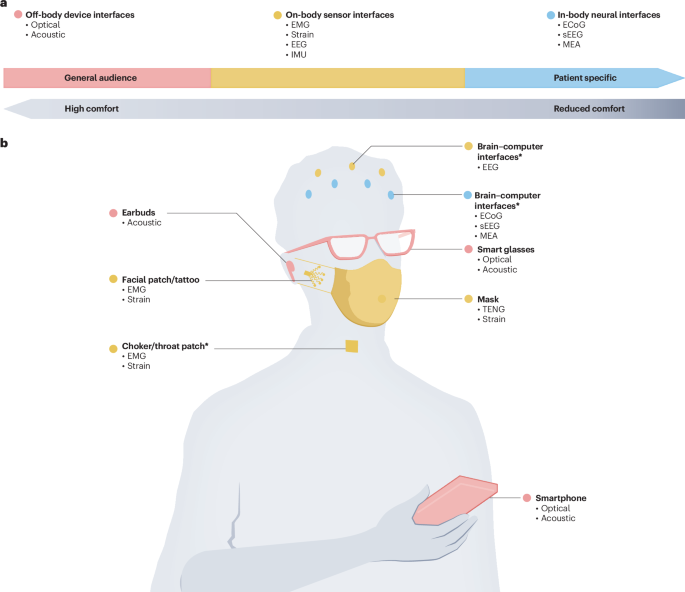

Silva, A. B. et al. The speech neuroprosthesis. Nat. Rev. Neurosci. 25, 473–492 (2024).

Afouras, T. et al. Deep audio-visual speech recognition. IEEE Trans. Pattern Anal. Mach. Intell. 44, 8717–8727 (2022).

Jin, Y. et al. EarCommand: “hearing” your silent speech commands in ear. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 6, 1–28 (2022).

Liu, H. et al. An epidermal sEMG tattoo-like patch as a new human–machine interface for patients with loss of voice. Microsyst. Nanoeng. 6, 16 (2020). This study introduces flexible surface EMG sensors to silent-speech-related human–machine interfaces.

Kim, T. et al. Ultrathin crystalline-silicon-based strain gauges with deep learning algorithms for silent speech interfaces. Nat. Commun. 13, 5815 (2022).

Liu, S. et al. A data-efficient and easy-to-use lip language interface based on wearable motion capture and speech movement reconstruction. Sci. Adv. 10, eado9576 (2024).

Card, N. S. et al. An accurate and rapidly calibrating speech neuroprosthesis. N. Engl. J. Med. 391, 609–618 (2024).

Willett, F. R. et al. A high-performance speech neuroprosthesis. Nature 620, 1031–1036 (2023).

Sugie, N. & Tsunoda, K. A speech prosthesis employing a speech synthesizer: vowel discrimination from perioral muscle activities. IEEE Trans. Biomed. Eng. 32, 485–490 (1985).

Petajan, E. D. Automatic lipreading to enhance speech recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 40–47 (IEEE, 1985). This conference paper describes an off-body silent speech decoding system using camera-based automatic lipreading.

Suppes, P., Lu, Z.-L. & Han, B. Brain wave recognition of words. Proc. Natl Acad. Sci. USA 94, 14965–14969 (1997).

Assael, Y. M., Shillingford, B., Whiteson, S. & de Freitas, N. LipNet: end-to-end sentence-level lipreading. Preprint at https://doi.org/10.48550/arXiv.1611.01599 (2016).

Wand, M., Koutník, J. & Schmidhuber, J. Lipreading with long short-term memory. In Proc. 2016 IEEE International Conference on Acoustics, Speech and Signal Processing 6115–6119 (IEEE, 2016).

Su, Z., Fang, S. & Rekimoto, J. LipLearner: customizable silent speech interactions on mobile devices. In Proc. 2023 CHI Conference on Human Factors in Computing Systems 1–21 (ACM, 2023).

Pandey, L. & Arif, A. S. LipType: a silent speech recognizer augmented with an independent repair model. In Proc. 2021 CHI Conference on Human Factors in Computing Systems 1–19 (ACM, 2021).

Pandey, L. & Arif, A. S. MELDER: the design and evaluation of a real-time silent speech recognizer for mobile devices. In Proc. CHI Conference on Human Factors in Computing Systems 1–23 (ACM, 2024).

Wang, X., Su, Z., Rekimoto, J. & Zhang, Y. Watch your mouth: silent speech recognition with depth sensing. In Proc. CHI Conference on Human Factors in Computing Systems 1–15 (ACM, 2024).

Chen, T. et al. C-Face: continuously reconstructing facial expressions by deep learning contours of the face with ear-mounted miniature cameras. In Proc. 33rd Annual ACM Symposium on User Interface Software and Technology 112–125 (ACM, 2020).

Zhang, R. et al. SpeeChin: a smart necklace for silent speech recognition. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 5, 1–23 (2021).

Kimura, N., Kono, M. & Rekimoto, J. SottoVoce: an ultrasound imaging-based silent speech interaction using deep neural networks. In Proc. 2019 CHI Conference on Human Factors in Computing Systems 1–11 (ACM, 2019).

Tan, J., Nguyen, C.-T. & Wang, X. SilentTalk: lip reading through ultrasonic sensing on mobile phones. In Proc. IEEE INFOCOM 2017—IEEE Conference on Computer Communications 1–9 (IEEE, 2017).

Gao, Y. et al. EchoWhisper: exploring an acoustic-based silent speech interface for smartphone. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 4, 1–27 (2020).

Zhang, Q. et al. SoundLip: enabling word and sentence-level lip interaction for smart devices. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 5, 1–28 (2021).

Zhang, R. et al. EchoSpeech: continuous silent speech recognition on minimally-obtrusive eyewear powered by acoustic sensing. In Proc. 2023 CHI Conference on Human Factors in Computing Systems 1–18 (ACM, 2023).

Dong, X. et al. ReHEarSSE: recognizing hidden-in-the-ear silently spelled expressions. In Proc. CHI Conference on Human Factors in Computing Systems 1–16 (ACM, 2024).

Zhang, R. et al. HPSpeech: silent speech interface for commodity headphones. In Proc. 2023 International Symposium on Wearable Computers 60–65 (ACM, 2023).

Kwon, J. et al. Novel three-axis accelerometer-based silent speech interface using deep neural network. Eng. Appl. Artif. Intell. 120, 105909 (2023).

Zhou, Q. et al. Triboelectric nanogenerator-based sensor systems for chemical or biological detection. Adv. Mater. 33, 2008276 (2021).

Wang, Z. L. On Maxwell’s displacement current for energy and sensors: the origin of nanogenerators. Mater. Today 20, 74–82 (2017).

Lu, Y. et al. Decoding lip language using triboelectric sensors with deep learning. Nat. Commun. 13, 1401 (2022). This paper reports on a triboelectric-sensor-based silent speech decoding system.

De Luca, C. J. The use of surface electromyography in biomechanics. J. Appl. Biomech. 13, 135–163 (1997).

Phinyomark, A. et al. Feature extraction and selection for myoelectric control based on wearable EMG sensors. Sensors 18, 1615 (2018).

Wang, Y. et al. All-weather, natural silent speech recognition via machine-learning-assisted tattoo-like electronics. npj Flex. Electron. 5, 20 (2021).

Tang, C. et al. Wireless silent speech interface using multi-channel textile EMG sensors integrated into headphones. IEEE Trans. Instrum. Meas. 74, 1–10 (2025).

Lipomi, D. J. et al. Skin-like pressure and strain sensors based on transparent elastic films of carbon nanotubes. Nat. Nanotechnol. 6, 788–792 (2011).

Amjadi, M. et al. Stretchable, skin-mountable, and wearable strain sensors and their potential applications: a review. Adv. Funct. Mater. 26, 1678–1698 (2016).

Xu, S. et al. Force-induced ion generation in zwitterionic hydrogels for a sensitive silent-speech sensor. Nat. Commun. 14, 219 (2023).

Yang, Q. et al. Mixed-modality speech recognition and interaction using a wearable artificial throat. Nat. Mach. Intell. 5, 169–180 (2023).

Tang, C. et al. Ultrasensitive textile strain sensors redefine wearable silent speech interfaces with high machine learning efficiency. npj Flex. Electron. 8, 27 (2024).

Tang, C. et al. Wearable intelligent throat enables natural speech in stroke patients with dysarthria. Preprint at https://doi.org/10.48550/arXiv.2411.18266 (2024). This on-body sensing study demonstrates generalized silent speech decoding directly in patients with speech impairment.

Farwell, L. A. & Donchin, E. Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 70, 510–523 (1988).

Wolpaw, J. R. & McFarland, D. J. Control of a two-dimensional movement signal by a noninvasive brain–computer interface in humans. Proc. Natl Acad. Sci. USA 101, 17849–17854 (2004).

Kaongoen, N., Choi, J. & Jo, S. Speech-imagery-based brain–computer interface system using ear-EEG. J. Neural Eng. 18, 016023 (2021).

Wang, Z. et al. Conformal in-ear bioelectronics for visual and auditory brain–computer interfaces. Nat. Commun. 14, 4213 (2023).

Occhipinti, E., Davies, H. J., Hammour, G. & Mandic, D. P. Hearables: artefact removal in ear-EEG for continuous 24/7 monitoring. In Proc. 2022 International Joint Conference on Neural Networks 1–6 (IEEE, 2022).

Mandic, D. P. et al. In your ear: a multimodal hearables device for the assessment of the state of body and mind. IEEE Pulse 14, 17–23 (2023).

Cooney, C. et al. A bimodal deep learning architecture for EEG-fNIRS decoding of overt and imagined speech. IEEE Trans. Biomed. Eng. 69, 1983–1994 (2021).

Défossez, A. et al. Decoding speech perception from non-invasive brain recordings. Nat. Mach. Intell. 5, 1097–1107 (2023).

Leuthardt, E. C. et al. A brain–computer interface using electrocorticographic signals in humans. J. Neural Eng. 1, 63–71 (2004).

Schalk, G. & Leuthardt, E. C. Brain–computer interfaces using electrocorticographic signals. IEEE Rev. Biomed. Eng. 4, 140–154 (2011).

Angrick, M. et al. Speech synthesis from ECoG using densely connected 3D convolutional neural networks. J. Neural Eng. 16, 036019 (2019).

Moses, D. A. et al. Neuroprosthesis for decoding speech in a paralyzed person with anarthria. N. Engl. J. Med. 385, 217–227 (2021).

Metzger, S. L. et al. A high-performance neuroprosthesis for speech decoding and avatar control. Nature 620, 1037–1046 (2023).

Littlejohn, K. T. et al. A streaming brain-to-voice neuroprosthesis to restore naturalistic communication. Nat. Neurosci. 28, 1–11 (2025).

Duraivel, S. et al. High-resolution neural recordings improve the accuracy of speech decoding. Nat. Commun. 14, 6938 (2023).

Munari, C. et al. Stereo-electroencephalography methodology: advantages and limits. Acta Neurol. Scand. 89, 56–67 (1994).

Mullin, J. P. et al. Is SEEG safe? A systematic review and meta-analysis of stereo-electroencephalography-related complications. Epilepsia 57, 386–401 (2016).

Abarrategui, B. et al. New stimulation procedures for language mapping in stereo-EEG. Epilepsia 65, 1720–1729 (2024).

He, T. et al. VocalMind: a stereotactic EEG dataset for vocalized, mimed, and imagined speech in tonal language. Sci. Data 12, 657 (2025).

Schwartz, A. B. et al. Brain-controlled interfaces: movement restoration with neural prosthetics. Neuron 52, 205–220 (2006).

Flint, R. D. et al. Accurate decoding of reaching movements from field potentials in the human motor cortex. J. Neural Eng. 9, 046006 (2012).

Mugler, E. M. et al. Direct classification of all American English phonemes using signals from functional speech motor cortex. J. Neural Eng. 11, 035015 (2014).

Willett, F. R. et al. High-performance brain-to-text communication via handwriting decoding. Nature 593, 249–254 (2021).

Matsumura, G. et al. Real-time personal healthcare data analysis using edge computing for multimodal wearable sensors. Device 3, 100597 (2025).

Liu, T. et al. Machine learning-assisted wearable sensing systems for speech recognition and interaction. Nat. Commun. 16, 2363 (2025).

Cai, D. et al. SILENCE: protecting privacy in offloaded speech understanding on resource-constrained devices. Adv. Neural Inf. Process. Syst. 37, 105928–105948 (2024).

Deng, Z. et al. Silent speech recognition based on surface electromyography using a few electrode sites under the guidance from high-density electrode arrays. IEEE Trans. Instrum. Meas. 72, 1–11 (2023).

Pang, C. et al. A flexible and highly sensitive strain-gauge sensor using reversible interlocking of nanofibres. Nat. Mater. 11, 795–801 (2012).

Tang, C. et al. A deep learning-enabled smart garment for accurate and versatile monitoring of sleep conditions in daily life. Proc. Natl Acad. Sci. USA 122, e2420498122 (2025).

Xu, M. et al. Simultaneous isotropic omnidirectional hypersensitive strain sensing and deep learning-assisted direction recognition in a biomimetic stretchable device. Adv. Mater. 37, 2420322 (2025).

Gong, S. et al. Hierarchically resistive skins as specific and multimetric on-throat wearable biosensors. Nat. Nanotechnol. 18, 889–897 (2023).

Xue, C. et al. A CMOS-integrated compute-in-memory macro based on resistive random-access memory for AI edge devices. Nat. Electron. 4, 81–90 (2021).

Fei, N. et al. Towards artificial general intelligence via a multimodal foundation model. Nat. Commun. 13, 3094 (2022).

Roy, K. et al. Towards spike-based machine intelligence with neuromorphic computing. Nature 575, 607–617 (2019).

Wang, S. et al. Memristor-based adaptive neuromorphic perception in unstructured environments. Nat. Commun. 15, 4671 (2024).

Tang, C. et al. A roadmap for the development of human body digital twins. Nat. Rev. Electr. Eng. 1, 199–207 (2024).

Zinn, S. et al. The effect of poststroke cognitive impairment on rehabilitation process and functional outcome. Arch. Phys. Med. Rehabil. 85, 1084–1090 (2004).

Kennedy, P. R. & Bakay, R. A. E. Restoration of neural output from a paralyzed patient by a direct brain connection. Neuroreport 9, 1707–1711 (1998). This paper reports on a patient-level in-body neural interface demonstrating direct brain-connected communication in a paralysed individual.

Denby, B. & Stone, M. Speech synthesis from real-time ultrasound images of the tongue. In Proc. 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing 685–688 (IEEE, 2004). This conference paper demonstrates silent speech synthesis using real-time ultrasound imaging of the tongue.

Galatas, G., Potamianos, G. & Makedon, F. Audio-visual speech recognition incorporating facial depth information captured by the Kinect. In Proc. 20th European Signal Processing Conference 2714–2717 (IEEE, 2012).

Shin, Y. H. & Seo, J. Towards contactless silent speech recognition based on detection of active and visible articulators using IR-UWB radar. Sensors 16, 1812 (2016).

Sun, K. et al. Lip-Interact: improving mobile device interaction with silent speech commands. In Proc. 31st Annual ACM Symposium on User Interface Software and Technology 581–593 (ACM, 2018).

Anumanchipalli, G. K., Chartier, J. & Chang, E. F. Speech synthesis from neural decoding of spoken sentences. Nature 568, 493–498 (2019). This paper reports a neural decoder that synthesizes full spoken sentences from ECoG recordings.

Ravenscroft, D. et al. Machine learning methods for automatic silent speech recognition using a wearable graphene strain gauge sensor. Sensors 22, 299 (2022).

Che, Z. et al. Speaking without vocal folds using a machine-learning-assisted wearable sensing-actuation system. Nat. Commun. 15, 1873 (2024).