The tiny-but-mighty inertial confinement fusion (ICF) target is a marvel of engineering. Carefully positioned inside the hohlraum, the capsule is held in place by a tented membrane (tent) and receives fuel via a minuscule fill tube that is 10 times thinner than a human hair. Other materials may be included to serve various purposes, such as improving implosion symmetry. At micrometer and millimeter scales, very little room exists for something to go awry. Whether inside a capsule or along a beamline, understanding and harnessing the complex physics underpinning ICF experiments is possible only with a scientific feedback loop that relies on computing power to accelerate research.

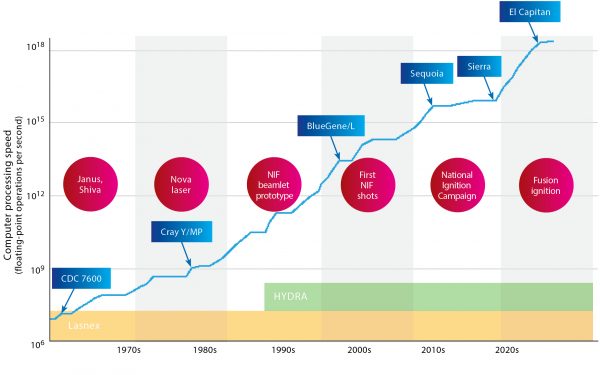

From the early days of Livermore’s ICF program, physicist and former Laboratory Director John Nuckolls advocated for supercomputing as a necessary component in laser research and the pursuit of ignition. (See S&TR, April/May 2022, Beaming with Excellence.) Decades of investments in laser and computing systems have produced mutually beneficial research and development—from the single-beam Janus laser to the 192-beam National Ignition Facility (NIF), and from the megascale CDC 7600 computer to today’s exascale supercomputer El Capitan. That one laboratory houses the world’s most energetic laser alongside the world’s most powerful supercomputer is hardly a coincidence.

The Laboratory is also home to the world’s most complete set of ICF modeling and simulation tools, encapsulating the intricacies of laser light interaction, electron and x-ray transport, nonequilibrium atomic physics, magnetohydrodynamics, and fusion burn. By running through millions of permutations and billions of data points, then predicting likely outcomes, these simulations set the stage for NIF experiments. Additionally, ICF codes can simulate diagnostic measurements to compare directly with experiments, which in turn validate the models. “Simulations are absolutely crucial to ICF research and help us determine with great detail how to design experiments. It’s beyond human ability to take all the physics into account precisely,” states physicist Mordy Rosen, who joined the ICF program in 1976.

NIF conducts hundreds of experiments each year primarily in support of stockpile stewardship, so only a fraction of NIF experiments are designed for ignition. (See LLNL’s S&TR, March 2021, Fusion Supports the Stockpile.) This scarcity makes simulations all the more valuable, and better predictions can reduce the number of experiments needed. And the greater the desired performance of the experiment, the more consequential any tiny imperfection or asymmetry becomes, so researchers rely on iterative simulations to explore combinations of design variables. No commercial off-the-shelf code can meet these demands. The Laboratory’s portfolio of export-controlled ICF codes includes two that are noteworthy for their longevity, agility, and role in ignition: Lasnex and HYDRA.

For more than 50 years, Livermore’s laser (red) and computing (blue) technologies have advanced alongside—and to the mutual benefit of—each other. Development continues on the Lasnex and HYDRA codes as simulation needs evolve.

Lasnex Generation

Computing technology and programming environments change quickly, rendering obsolete any code that cannot keep pace. When the code simulates a specialized scientific domain in service to national security, it must adapt to remain relevant. Created by physicist George Zimmerman, Lasnex has done just that.

Lasnex development began in 1970 when laser absorption models were generalized from the 1D WAZER code developed by Laboratory physicist Ray Kidder. Lasnex was the first 2D code to simulate all ICF-relevant physics, such as laser absorption, electron conduction, hydrodynamics, and fusion burn. These capabilities influenced early target designs and made the case for investments in laser technology including the Laser Program’s first laser, Janus. In 1975, Lasnex simulated the first experimental proof of laser-induced thermonuclear burn, which was conducted using Janus.

“Lasnex has always contained models for all the physics required to simulate current experiments and often several models for the same process to assess uncertainties,” states Zimmerman. When the ICF program shifted the laser delivery method from direct to indirect drive to reduce instabilities and improve symmetry, Lasnex did, too. The code evolved to include a variety of physics models such as x-ray transport and nonequilibrium atomic physics, and by the early 1990s was used for integrated hohlraum–capsule simulations.

One key to the code’s longevity is its user interface. Leveraging the Basis scripting language, the interface allows researchers at Livermore, Los Alamos, and Sandia national laboratories to create their own modeling specifications. Zimmerman explains, “Through the Basis interface, users can test sensitivities by turning off various physical processes or exaggerating their effects. This better understanding of the physics leads to better predictions and extrapolations into regimes that cannot be accessed with current laser facilities.” Lasnex runs on Livermore’s capacity computing systems and does not require massively parallel resources to produce results quickly.
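The sensitivity testing Zimmerman describes, turning a physical process off or exaggerating its effect, can be illustrated with a toy sketch. This is not Lasnex or Basis code: the `ProcessMultipliers` class, the `toy_temperature` function, and every coefficient below are hypothetical stand-ins chosen only to show the idea of scaling each process with a multiplier.

```python
from dataclasses import dataclass


@dataclass
class ProcessMultipliers:
    """Hypothetical knobs: 0.0 turns a process off, 1.0 is nominal, >1.0 exaggerates it."""
    conduction: float = 1.0
    radiation_loss: float = 1.0


def toy_temperature(heating: float, m: ProcessMultipliers) -> float:
    """Toy steady-state balance: heating = (sum of loss coefficients) * temperature.

    The loss coefficients are made-up numbers, not physical data.
    """
    base_loss = 1.0
    conduction_loss = 0.5 * m.conduction
    radiation_loss = 0.25 * m.radiation_loss
    return heating / (base_loss + conduction_loss + radiation_loss)


# Compare the nominal run with one process switched off and one exaggerated.
nominal = toy_temperature(7.0, ProcessMultipliers())
no_conduction = toy_temperature(7.0, ProcessMultipliers(conduction=0.0))
double_radiation = toy_temperature(7.0, ProcessMultipliers(radiation_loss=2.0))

for label, value in [("nominal", nominal),
                     ("conduction off", no_conduction),
                     ("radiation loss x2", double_radiation)]:
    print(f"{label:>18}: {value:.2f} (change vs. nominal: {value - nominal:+.2f})")
```

Comparing each modified run against the nominal one shows which process the result is most sensitive to, which is the kind of insight the quoted passage attributes to the scripting interface.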

HYDRA Dynamics

In 1994, just a few years before construction of NIF began, computational physicist Robert Tipton began developing a new hydrodynamics code called HYDRA. Computational physicist Marty Marinak soon joined him to develop HYDRA into an ICF code. Designed for massively parallel 2D and 3D simulations, HYDRA simulates the entire implosion process including capsule and hohlraum physics. The code includes models for equations of state and atomic physics as well as physical processes related to laser light, ion beams, magnetic fields, burn products, and more. Its versatility stems from complex meshing options and a Python-based programming interface through which users can integrate their own physics packages.

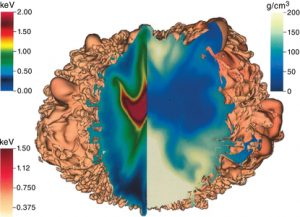

A cutaway HYDRA simulation shows capsule details and asymmetry in shot N170601, five years before ignition. On the right half of the cutaway, density levels are indicated in grams per cubic centimeter (g/cm³; top, right). On the left half of the cutaway, x-ray energy levels are indicated in kiloelectronvolts (keV) for the capsule interior (top, left). X-ray energy levels for the exterior are indicated in keV (bottom, left).

“HYDRA stands on the shoulders of the codes that came before. We’re continuing the ICF simulation leadership George started with Lasnex,” says Marinak, now the HYDRA project leader. Over the past 30 years, HYDRA has chalked up several first-of-a-kind 3D simulations that demonstrate progress in both computation and laser physics: Rayleigh–Taylor instability growth in ICF targets; high-resolution capsule simulations for the Nova laser and NIF; NIF integrated hohlraums with high-fidelity laser and photon transport; and the full set of nuclear diagnostics in implosion experiments in realistic geometry.

The HYDRA team adapted to the massively parallel computing paradigm, committing to long-term portability efforts that ensure the code can run on different supercomputing architectures and memory spaces. In the petascale era, when Livermore’s Sequoia machine performed 20 quadrillion floating-point operations per second, HYDRA simulated a NIF capsule in 3D with unprecedented resolution. The team updated the code as heterogeneous computing systems, which combine central and graphics processing units, came to the fore. The team also recently ran a test problem using one-third of El Capitan to confirm that HYDRA could scale to thousands of nodes on an exascale system.

Beyond NIF, HYDRA serves research partners at universities and other national laboratories with simulations of laser indirect and direct drive, magnetic drive, heavy ions, and high-energy-density physics. The code can run large-scale simulations detailing the features and nuances of ICF targets. The evolution of HYDRA’s simulation fidelity and accuracy bolsters what Marinak calls “the cycle of learning.” He says, “We have a much better understanding of the impacts of various physical processes and asymmetry sources, while we continue to learn from experiments where the models need further improvement.”

Predicting Ignition

For several years during pre-NIF research, scientists feared that the tent inside the hohlraum could be a problem. Indeed, Rosen recalls, “The tent was a pernicious killer of implosions that cut the original target design to pieces.” In 2007, the codes could not tackle this problem, in large part due to inadequate computing resources for the high resolution required. However, in 2014, after NIF and its diagnostic tools became operational and computational capabilities caught up, HYDRA could properly simulate the tent. The updated experiment–simulation loop revealed that the tent’s position led to scarring of the capsule upon implosion, so designers changed the tent’s orientation to grasp the capsule at its poles instead. Experimental data showed the predicted improvement in capsule performance. Simulation advancements have uncovered many such details on the journey to ignition.

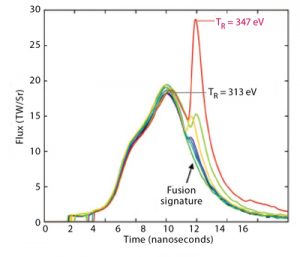

Lasnex and HYDRA simulations predicted the hohlraum reheating phenomenon, which was demonstrated in multiple NIF shots (plotted in different colors). X-ray energy exiting the hohlraum’s laser entrance holes is shown in gross terawatts per steradian (Flux TW/Sr), while radiation temperature (TR) is measured in electronvolts (eV). The red spike of the N221204 ignition shot indicates a high hohlraum reheating signal.

Both Lasnex and HYDRA proved valuable in predicting a phenomenon known as hohlraum reheating. The process unfolds in nanoseconds: NIF’s lasers heat the hohlraum, producing x-rays that compress the capsule’s fuel and initiate fusion reactions, which generate alpha particles that deposit energy back into the plasma—ultimately resulting in ignition. The ignited capsule explodes and forcibly encounters the surrounding plasma, which becomes a source of radiation that further heats the hohlraum. Hohlraum reheating is a distinct indicator of ignition. Rosen confirms, “Lasnex and HYDRA predicted it correctly and in detail.”

Before the December 2022 shot, known in NIF parlance as N221204, HYDRA simulations predicted a yield increase of more than 2.5 times compared to the previous shot. The subsequent experimental yield fell within the preshot predicted range. Since then, Livermore scientists have achieved ignition several more times, tweaking design variables along the way to maximize yield. (See the article The Future of Ignition.) HYDRA simulations have guided all these designs. “HYDRA benefits from 30 years of comparisons to experimental data, including the ignition shots, so we have a great deal of validation of its physics models. We continually update them,” notes Marinak.

Combined Strengths

Livermore’s computing power has skyrocketed since these codes’ development began. Processing more than 2 quintillion floating-point operations per second, the exascale El Capitan supercomputer is a formidable tool in the Laboratory’s ICF toolbox. (See S&TR, December 2024, Introducing El Capitan.)

Large-scale simulations have also come a long way. A decade ago, researchers ran an ensemble of 60,000 2D capsule simulations using the Los Alamos petascale Trinity supercomputer. “We built on that capability with our Sierra system, pushing the scale to millions of simpler simulations. Now, with El Capitan, we can achieve even higher fidelity simulations at larger scale,” notes computational physicist Luc Peterson, who joined the Laboratory in 2011. “It’s thrilling to see how far we’ve come—from the rare 3D simulations that existed when I started to making them routine today.”

Further enhancing the ICF workflow is cognitive simulation, or CogSim, which uses AI to analyze simulation ensembles and experimental data. (See S&TR, September 2022, Cognitive Simulation Supercharges Scientific Research.) The results provide probabilities for experimental outcomes and help optimize designs faster and with more confidence. Researchers have trained CogSim models on tens of thousands of HYDRA simulations.
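The general pattern described here (run an ensemble of simulations, fit a fast surrogate to the results, then use the surrogate to estimate the probability of an outcome) can be sketched in a few lines. This is a minimal illustration under loud assumptions, not CogSim or HYDRA: `toy_simulation` is a made-up response function, a quadratic polynomial fit stands in for the machine-learning model, and the design variable, its uncertainty, and the success threshold are all hypothetical.

```python
import numpy as np


def toy_simulation(thickness):
    """Stand-in for an expensive simulation; a made-up smooth response, not HYDRA."""
    return 3.0 - 2.0 * (thickness - 1.0) ** 2


rng = np.random.default_rng(0)  # fixed seed so the sketch is repeatable

# 1. Run an ensemble of "simulations" across the (hypothetical) design variable.
design_points = np.linspace(0.5, 1.5, 21)
ensemble_outputs = toy_simulation(design_points)

# 2. Fit a cheap surrogate to the ensemble. A quadratic polynomial is swapped in
#    here for the AI model; real surrogates would be far more expressive.
coeffs = np.polyfit(design_points, ensemble_outputs, deg=2)
surrogate = np.poly1d(coeffs)

# 3. Propagate an assumed as-built uncertainty in the design variable through
#    the surrogate to estimate the probability of exceeding a chosen threshold.
samples = rng.normal(loc=1.1, scale=0.1, size=10_000)
prob_success = float(np.mean(surrogate(samples) > 2.9))

print(f"Surrogate prediction at nominal design: {surrogate(1.1):.3f}")
print(f"Estimated probability of exceeding threshold: {prob_success:.2f}")
```

Because the surrogate is cheap to evaluate, the probability estimate in step 3 costs thousands of polynomial evaluations rather than thousands of simulations, which is the practical appeal of the approach.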

The ICECap (Inertial Confinement on El Capitan) project brings simulations, supercomputing, and CogSim together. The team is already expanding the ICF design space by training AI models on capsule simulations, then predicting successful features from these massive data sets. Peterson, ICECap’s principal investigator, states, “ICF is hard. The simulations needed to design experiments are bespoke, and keeping up with the accelerated pace of science can be overwhelming. AI helps us answer questions we couldn’t ask before.”

Ignition and Liftoff

With more than 150 years of combined experience in ICF physics and computational modeling, Marinak, Zimmerman, Rosen, and Peterson all expected to witness ignition during their careers. But ignition was a milestone, not an endpoint, and ICF codes continue to adapt to NIF’s mandate and Livermore’s national security mission. The HYDRA team is scaling up to take advantage of El Capitan’s processing power while improving predictive capabilities through efforts such as updated gold opacity models that influence x-ray emission. Meanwhile, the Lasnex team is developing new models for laser–plasma interactions and nonlocal thermodynamic equilibrium, among others.

The multidisciplinary teams keeping Lasnex and HYDRA at the forefront of ICF simulation know their work matters to the Laboratory’s present and future. Marinak affirms, “The physics is still interesting, and there’s always something new to learn in this field. It’s rewarding to help researchers conduct their work and help the program succeed. We take our responsibility seriously.”

For further information, contact Marty Marinak at (925) 423-8458.

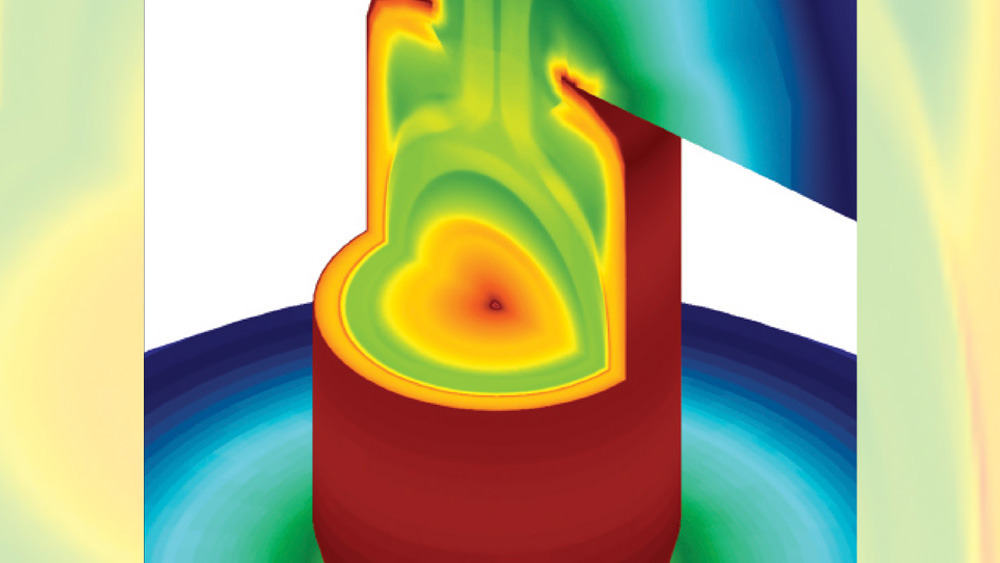

Featured Image Caption: Run on Livermore’s RZAdams supercomputer, a HYDRA simulation indicates density variations as the interior of the hohlraum is heated by laser energy. Credit: LLNL

This article was originally published by Lawrence Livermore National Laboratory (LLNL) and is reprinted with permission.