What is a data center?

More than 4,000 data centers exist across the U.S. Each one is a physical facility designed to house the computers that provide IT and data-management services. Most early data centers were privately owned, but with the growth of distributed computing, many are now operated by cloud-service providers that work with multiple companies.

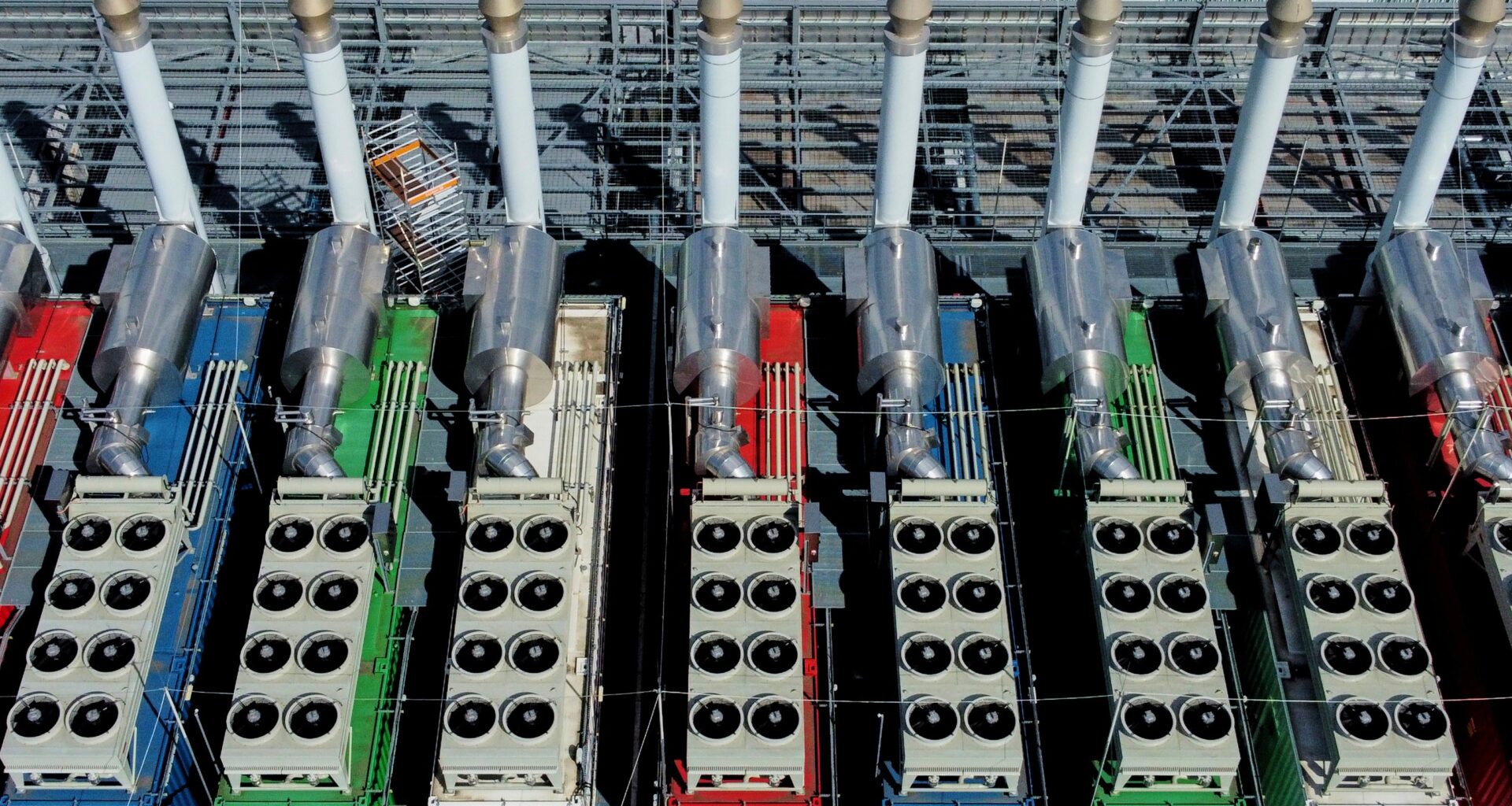

Data centers are typically high-security buildings with few windows. The servers, routers, switches, cooling systems and backup generators inside don't need sunlight. They do need a lot of power: a large data center can consume as much electricity as tens of thousands of homes.

One of the earliest-known computers, built at the University of Pennsylvania in the mid-1940s for U.S. Army artillery research, is often cited as an early example of a data center. The Electronic Numerical Integrator and Computer (ENIAC) weighed 30 tons and performed large-scale calculations in a 30-by-50-foot room. Today, comparable computing power fits in a tiny fraction of that space.

But the demands of new technologies like AI are leading to a larger data center footprint.

One McKinsey & Company study expects the data center industry to grow by 10% a year through 2030. A report from the U.S. Department of Energy projects that data centers could account for as much as 12% of the country's electricity use by 2028. Much of that growth is driven by expected demand from artificial intelligence: a Goldman Sachs report expects AI to soon account for 30% of the entire data center market.

The processing power required to train and run AI models demands unprecedented amounts of energy. Traditional data services rely mainly on central processing units (CPUs), while AI relies on graphics processing units (GPUs), which can perform many calculations in parallel and therefore handle AI workloads far faster. The resulting difference in energy use is stark: by one estimate, a typical ChatGPT prompt needs 10 times as much energy as a conventional Google search.