For the first time in history, there’s no friction between having an idea and putting it into writing. Thanks to generative AI, telling stories and sharing them with the world has never been easier.

This ease brings with it some downsides. And when it comes to potential detrimental effects, “workslop” has nothing on “news slop.”

Professional storytellers — journalists, creators and other information providers — are trusted messengers. If the work we put out regularly includes hallucinations, misinterpretations or lack of contextual understanding, our communities suffer. Shared reality overlap degrades, connections become suspect and human progress slows.

Still, at Technical.ly we believe generative AI technology offers plenty of upsides for local news.

In a resource-strained industry, it can help increase access to key info, uncover pernicious patterns and enable wider and deeper coverage. So we can’t risk ignoring it, and shouldn’t just leave it to others to wield.

What that means is we need guidelines for its use. Two years ago, Technical.ly proposed creating such a guide to the Lenfest Institute for Journalism. They agreed, and offered support as part of a generous local news sustainability grant.

Now, it’s not easy to make a guide to using generative AI when generative AI keeps evolving so fast.

After more than 18 months of intense internal experimentation, gathering external feedback, following other news organizations’ successes and challenges, and reading all the other artificial intelligence use guides published in the meantime (by media companies, journalism support orgs, universities, AI companies and pretty much everyone else), we came to a realization:

No matter how the generators change, how much the models improve, what tools are put out, what overall progress is made with this transformative tech, a set of core principles will remain.

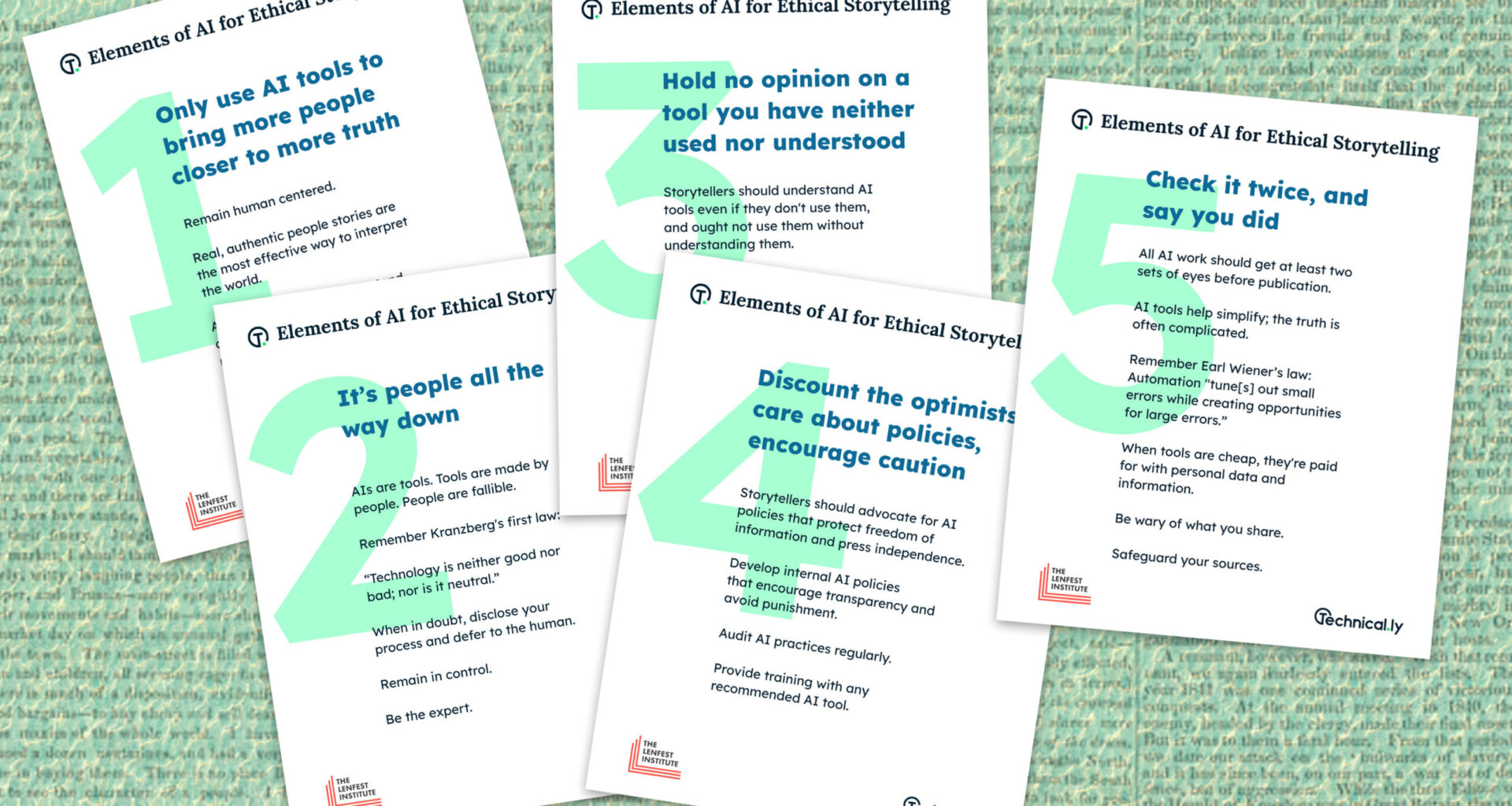

We’re calling these principles “Elements of AI for Ethical Storytelling.” Keep scrolling to read them. Download a PDF here and graphics for social sharing here.

We’ll be discussing all this in person at Klein News Innovation Camp, the 16th edition of which happens Saturday, Nov. 8, at Temple University.

Our annual unconference this year expands beyond journalists to creators and other information providers. People come from NYC, DC and all over the region — if you’ll be near Philadelphia on that day, join us!

Elements of AI for Ethical Storytelling

5 simple principles for use of generative artificial intelligence by journalists, creators and other information providers, by Christopher Wink and Danya Henninger

Artificial intelligence is a term encompassing decades of computer science evolution, but recent developments in generative AI have catapulted it into the public conversation. Available AI tools are shaping how media organizations and trusted messengers inform their audiences, and bring both opportunities and challenges.

Drawing on more than 15 years of local reporting and continual experimentation in Technical.ly’s newsroom, we have distilled our experience into five simple principles for ethical AI use in journalistic information sharing, defined as fact-finding and storytelling that helps a community find its truth. Every technology we adopt must serve that mission rather than eclipse it.

1

Remain human centered.

Real, authentic people stories are the most effective way to interpret the world.

AI tools must be used to extend and deepen the work of humans, not replace them.

Most people prefer to speak with other people.

This will not change.

2

AIs are tools. Tools are made by people. People are fallible.

Remember Kranzberg’s first law:

“Technology is neither good nor bad; nor is it neutral.”

When in doubt, disclose your process and defer to the human.

Remain in control.

Be the expert.

3

Storytellers should understand AI tools even if they don’t use them, and ought not use them without understanding them.

Scrutinize the tools.

Stay aware of the differences between models, and the companies behind them.

Seek AI models that are transparent about their sources of information.

4

Storytellers should advocate for AI policies that protect freedom of information and press independence.

Develop internal AI policies that encourage transparency and avoid punishment.

Audit AI practices regularly.

Provide training with any recommended AI tool.

This will not change.

5

All AI work should get at least two sets of eyes before publication.

Remember Earl Wiener’s law: Automation “tune[s] out small errors while creating opportunities for large errors.”

When tools are cheap, they’re paid for with personal data and information.

Be wary of what you share.

Safeguard your sources.

Have feedback or comments? Send us an email.