The European Association of Urology recommends adjuvant radiation following RP for patients with adverse pathology7. However, a systematic review of risk stratification for patients with recurrence after RP did not provide evidence that the proposed risk factors should be used in clinical practice to tailor treatment. Furthermore, the only post-RP risk factors supported by moderate levels of evidence were pT3 stage, Gleason grade 4, surgical margins and pre-salvage PSA levels exceeding 0.5 ng/ml18.

There is little evidence on the use of ML tools to assess relapsing patients after RP19. Correctly classifying patients who experience BCR is extremely important, as adjuvant therapies carry adverse effects. Unfortunately, the recommended prognostic tools have low discriminative capacity20,21.

Wong analyzed 19 variables in 338 patients who underwent RALP, 25 of whom experienced BCR over a follow-up period of 12 months. The analysis yielded AUCs of 0.903, 0.924, and 0.940 with the k-nearest neighbors (kNN), RF, and logistic regression algorithms, respectively. However, the models were built from a small database, so it is unclear whether the ML algorithms were overfitted22. In a similar study, Eski analyzed 37 variables in 368 patients who underwent RALP, 73 of whom experienced BCR over a follow-up period of 35 months. The authors obtained higher AUCs with kNN (0.93) and RF (0.95), and an AUC of 0.93 with a third algorithm. However, the study was poorly designed, and thus the results were unreliable23.

Tan analyzed 18 variables in 1,130 patients who underwent RALP, 176 of whom experienced BCR over a follow-up period of 70 months. Three ML models were trained to predict BCR, using data validation and a 70/30 split strategy. For the prediction of BCR at 60 months, the following results were obtained: 0.823 precision and an AUC of 0.894 with naïve Bayes, 0.838 precision and an AUC of 0.887 with RF, and 0.810 precision and an AUC of 0.852 with SVM. Tan also compared statistical risk models against ML models and found that the ML models were comparable to Kattan’s nomogram24.

Finally, Lee conducted an ML study assessing 13 variables in 5,114 patients who underwent RP, 1,207 of whom experienced BCR over a follow-up period of 60 months. Using an 80/20 split of the data, they obtained 0.719 precision and an AUC of 0.805 with RF, 0.705 precision and an AUC of 0.796 with ANN, and 0.740 precision and an AUC of 0.803 with logistic regression25.
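To make the metrics quoted for these studies concrete, the following minimal Python sketch shows how a hold-out split, precision, and AUC can be computed from predicted probabilities. It is not the code used by any of the cited studies. The function names, the 0.5 decision threshold, the random seed, and the toy data are all assumptions made for illustration, and precision is taken here in the positive-predictive-value sense, which may differ from how the cited authors define it.

```python
import random


def holdout_split(n_samples, test_fraction=0.3, seed=0):
    """Shuffle sample indices and return (train_indices, test_indices)."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = round(n_samples * test_fraction)
    return indices[n_test:], indices[:n_test]


def precision(y_true, y_prob, threshold=0.5):
    """TP / (TP + FP) among patients predicted positive (p >= threshold)."""
    tp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 0)
    return tp / (tp + fp) if (tp + fp) else float("nan")


def auc(y_true, y_prob):
    """Probability that a random BCR case is scored above a random non-BCR
    case; ties count as one half (rank-based definition of the AUC)."""
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 1 = BCR, 0 = no BCR; probabilities are invented.
y_true = [1, 0, 1, 0, 0, 1, 0, 0]
y_prob = [0.9, 0.2, 0.6, 0.7, 0.1, 0.4, 0.3, 0.05]

train_idx, test_idx = holdout_split(len(y_true), test_fraction=0.3)
toy_precision = precision(y_true, y_prob)
toy_auc = auc(y_true, y_prob)
```

With few events, as in the smaller cohorts above, a single split leaves only a handful of BCR cases in the test set, which is one reason why AUCs estimated this way can be optimistic or unstable.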

The aforementioned studies have generally found that tree-based algorithms exhibit good prediction performance. In this study, the incorporation of RF as the initial model provided consistent results. Transitioning to the XGBoost ensemble method improved the performance metrics, thereby consolidating its position as the best model in this scenario. XGBoost likely outperformed the DNN because of its better regularization, early stopping, and superior handling of structured tabular data with a moderate sample size.

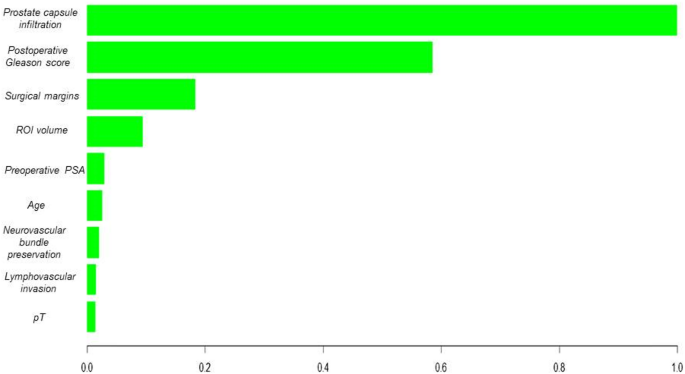

The visualization of variable importance, as highlighted in the analysis of the XGBoost-based model, underscores the ability of these algorithms to identify key features for BCR prediction among patients with PCa. It is essential for healthcare professionals to understand and rely on the model’s decisions in a clinical setting. Moreover, the robustness of the XGBoost algorithm when validated on a new patient dataset supports its generalization capabilities, making it potentially applicable in dynamic clinical settings.

Previous oncology studies have highlighted the ability of tree-based algorithms with ensemble methods to handle complex datasets and extract nonlinear patterns, thus improving the prediction of clinically relevant events26,27,28. This may result in a more accurate assessment of the probability of recurrence in post-RALP patients with PCa.

In the present study, the analysis of the decision curves highlights the superiority of the XGBoost-based AI model over the CAPRA-S model in predicting BCR. While CAPRA-S offers moderate performance, the net clinical benefit of the XGBoost model, shown in the decision curve, suggests that using this model could avoid both overtreatment and undertreatment of patients. These findings align with previous studies demonstrating that ML models can outperform traditional models like CAPRA in complex clinical scenarios24. Model outputs were stratified into clinically actionable risk categories: >80% as high risk (requiring intensified PSA monitoring and early salvage evaluation), <20% as low risk (standard surveillance), and 20–80% as intermediate risk (individualized follow-up). These thresholds correspond to the net benefit range observed in the decision curve analysis (Fig. 1), where the XGBoost model outperformed CAPRA-S, particularly among patients with recurrence probabilities between 30 and 70%. In this intermediate-risk group, where clinical decisions are often uncertain, the model offers refined stratification, supporting more selective intervention and potentially reducing overtreatment.
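As an illustration of the two ideas in this paragraph, the sketch below maps a predicted probability to the three risk categories and computes the net benefit at a given threshold probability using the standard decision-curve definition, net benefit = TP/n − (FP/n) × pt/(1 − pt). This is a hedged sketch, not the study's implementation: the function names, the handling of the exact 20% and 80% boundaries (assigned here to the intermediate group), and the toy data are assumptions.

```python
def risk_category(prob, low=0.20, high=0.80):
    """Map a predicted BCR probability to a risk category.

    Assumption: probabilities of exactly 20% or 80% fall in the
    intermediate group, since the text defines high as >80% and low as <20%.
    """
    if not 0.0 <= prob <= 1.0:
        raise ValueError("prob must lie in [0, 1]")
    if prob > high:
        return "high"
    if prob < low:
        return "low"
    return "intermediate"


def net_benefit(y_true, y_prob, threshold):
    """Net benefit of acting on patients with predicted risk >= threshold."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must lie strictly between 0 and 1")
    n = len(y_true)
    tp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 0)
    return tp / n - (fp / n) * threshold / (1.0 - threshold)


def net_benefit_treat_all(y_true, threshold):
    """Reference strategy on a decision curve: act on every patient."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1.0 - prevalence) * threshold / (1.0 - threshold)


# Hypothetical toy data: 1 = BCR, 0 = no BCR; probabilities are invented.
y_true = [1, 0, 1, 0, 0, 1, 0, 0]
y_prob = [0.9, 0.2, 0.6, 0.7, 0.1, 0.4, 0.3, 0.05]

categories = [risk_category(p) for p in y_prob]
nb_model = net_benefit(y_true, y_prob, threshold=0.5)
nb_all = net_benefit_treat_all(y_true, threshold=0.5)
```

A decision curve is obtained by evaluating `net_benefit` over a grid of thresholds for each model and comparing the results with the treat-all and treat-none (net benefit of zero) strategies.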

The main limitation of this study is its retrospective, single-centre design, which may reduce the generalisability of the findings to other populations and clinical settings. Further constraints include potential over-regularisation introduced by class sub-sampling during model training, reliance on the accuracy and completeness of electronic health-record coding, and the absence of both genomic variables and imaging data, elements that could provide additional information and enhance the model’s predictive power. Nevertheless, these results reinforce the need to consider advanced ML-based approaches to assess and predict clinical outcomes in post-RALP patients with BCR, providing a personalized perspective for counseling and treatment. Additional research is needed to optimize these models and understand their limitations in the clinical setting. Future steps will include external validation using public datasets such as TCGA-PRAD, and the application of transfer learning techniques to evaluate model adaptability when incorporating molecular or imaging biomarkers. Continuous collaboration between clinical experts and data scientists is key to achieving effective medical decision-making.

Although the model included patients aged 18 to 80 years, certain subgroups such as older adults may be underrepresented. This raises potential concerns regarding algorithmic bias, as an uneven distribution of demographic or clinical characteristics could affect prediction reliability across populations. Future studies should ensure diverse and representative samples to support equitable clinical applicability of AI-based models.

The clinical applicability of our model for predicting BCR after RP is strengthened by the use of postoperative Gleason score, lymphovascular invasion, and tumor percentage, which have demonstrated strong associations with prostate cancer prognosis. These predictors not only enhance the interpretability of the model but also align with existing clinical assessment protocols, making the model results directly actionable for healthcare providers. The XGBoost model provides an individualised probability of BCR during the first 24 months after surgery. In clinical practice, this probability can stratify patients into higher and lower BCR risk groups. A higher predicted risk supports more intensive management, including earlier consideration of salvage radiotherapy before the prostate-specific antigen rises appreciably, PSA surveillance every three months instead of every six months, and shared-decision counselling about adjuvant androgen deprivation therapy or enrolment in clinical trials. In contrast, a lower predicted risk justifies a more conservative approach in which imaging and laboratory follow-up are scheduled less frequently, thereby reducing patient burden and avoiding unnecessary interventions. Because the score is calculated from clinicopathological variables already stored in the electronic health record, it can be generated automatically at discharge and displayed alongside established tools such as CAPRA-S, giving clinicians an additional data-driven aid to personalise postoperative care.

To facilitate real-world integration, we developed a prototype web-based risk calculator using Python and Streamlit (Online Appendix 3, Fig. 1). It is currently used internally by the physicians in our Urology Department for prospective validation in new post-RALP patients. This pilot use enables real-time risk estimation at the point of care and allows comparison of model predictions with actual outcomes to iteratively assess clinical utility.

Further studies across diverse populations and clinical environments are essential to validate the model’s robustness and ensure its applicability in a wider range of clinical contexts.