
It’s the morning after, and all three Pixel 10 series phones are configured to some degree as I ease into the full experience. After posting Google Pixel 10 Series First Impressions yesterday, I connected my Pixel Buds Pro 2 and Pixel Watch 3 to the Pixel 10 Pro XL and then moved on to the next steps.

🏁 Finish up the initial configuration … three times

When I set up the Google Pixel 10 Pro XL, I did what I usually do with a new phone: I went through a time-consuming process in which I manually installed and configured all the apps I use, arranged the home screen, and made an initial pass at personalizing system settings.

I have good reasons for doing things that way, and I do the same with Windows 11 and other platforms. And it’s an infrequent enough chore in a typical year, when I buy two, maybe three phones. But three Pixel 10 series phones arrived on the same day, just three days before we leave for an international trip. And I’m behind enough as it is. I couldn’t take the time to go through this with three phones back-to-back.

So I didn’t. With the Pixel 10 and Pixel 10 Pro, I did what most mainstream users do and copied all my apps and settings from a previous phone, in this case the Pixel 10 Pro XL. This is considerably less time-consuming than my normal approach, though you still need to manually sign in to and configure each app in turn.

Side note: This is obvious to some degree, but you can see how Microsoft wants to provide a similar experience in Windows 11 despite the comparative complexity of that desktop platform. This is why we get things like Windows Backup, OneDrive Folder Backup, and, for more sophisticated users, the Windows Package Manager (winget).

📷 Photo first

Since its inception, Pixel has been synonymous with photography, and perhaps more specifically with computational photography. In the early Pixel models, Google stuck with a single rear camera lens at a time when rivals, even Apple, were moving to multiple lenses. And in the dark middle years, when Pixel plodded along with lower-end Snapdragon chips until the Tensor processor was ready, Google somehow managed to wring incredible photographic capabilities out of an aging set of lenses that changed little from year to year.

But in the current Pixel era, which I define as Pixel 6 through now, some things have changed and some have not. Google did get its Tensor chips into the market, though few are impressed and even Pixel’s biggest fans are forced into contorted arguments about it being just fine for day-to-day use. But it also rapidly evolved the camera hardware alongside its AI-based computational photography capabilities.

Consider this string of advances. The Pixel 6 Pro featured a high-resolution 50 MP main lens (binned to 12.5 MP). The Pixel 7 Pro added 5x optical zoom to the telephoto lens and extended Super Res Zoom to 30x. The Pixel 8 Pro was the first to offer high-resolution (48 to 50 MP) sensors on all three rear lenses, plus native 50 MP shots on the main lens, and it came with a nice new Pixel Camera app. And the Pixel 9 Pro XL brought 50 MP photo options on all lenses, an improved ultrawide lens with less edge distortion, and a high-resolution 42 MP selfie camera.

I think it’s fair to say that Pixel fans got pretty used to the steady year-over-year improvements in photography. So it was somewhat surprising that the Pixel 10 Pro XL, especially, seemed to deliver no hardware upgrades whatsoever. Instead, Google has focused almost exclusively on the software and AI-based innovations. (Or, with the base Pixel 10, the addition of a third camera lens.)

I was hoping for hardware improvements. I imagined that Google would, at the least, add 8x optical zoom to the telephoto lens, a change that might help it compete with the long-range capabilities of the Samsung Galaxy S24/S25 Ultra. But instead, Google said that the Tensor G5 in the Pixel 10 series enabled a new Pro Res Zoom capability. In the Pixel 10 Pro and 10 Pro XL, this allows you to zoom up to 100x and potentially get a reasonable shot, where the previous limit was 30x.

This I needed to see.

In my very early usage, two things are obvious. Pro Res Zoom can deliver terrific shots well north of 30x that match or even beat what’s possible with the most recent Galaxy Ultra phones. And you will need to take multiple shots just in case: For a variety of reasons, the most obvious being that hand shake is vastly exaggerated the more you zoom, not every shot will be a winner.

The way the Pixel does this is interesting. You can zoom as before, using the 2x, 5x, and 10x on-screen buttons or by pinching the screen in 1x increments, now all the way up to 100x. What you see through the viewfinder will vary, but it’s often blurry if the distances are great. And then you take the shot, which may or may not include a Night Sight-induced shutter delay, depending on the lighting and how you configured the Camera app. If you switch to the camera roll immediately, you can see a new AI-based image processing animation moving around over the picture in waves of tiny white dots. And then it appears.

The resulting images are often incredible. And because the camera roll-based image viewer provides thumbnails for the original and processed version of each Pro Res Zoom shot, you can quickly compare both versions and see where Google’s AI succeeded. Or in some cases, fell flat.

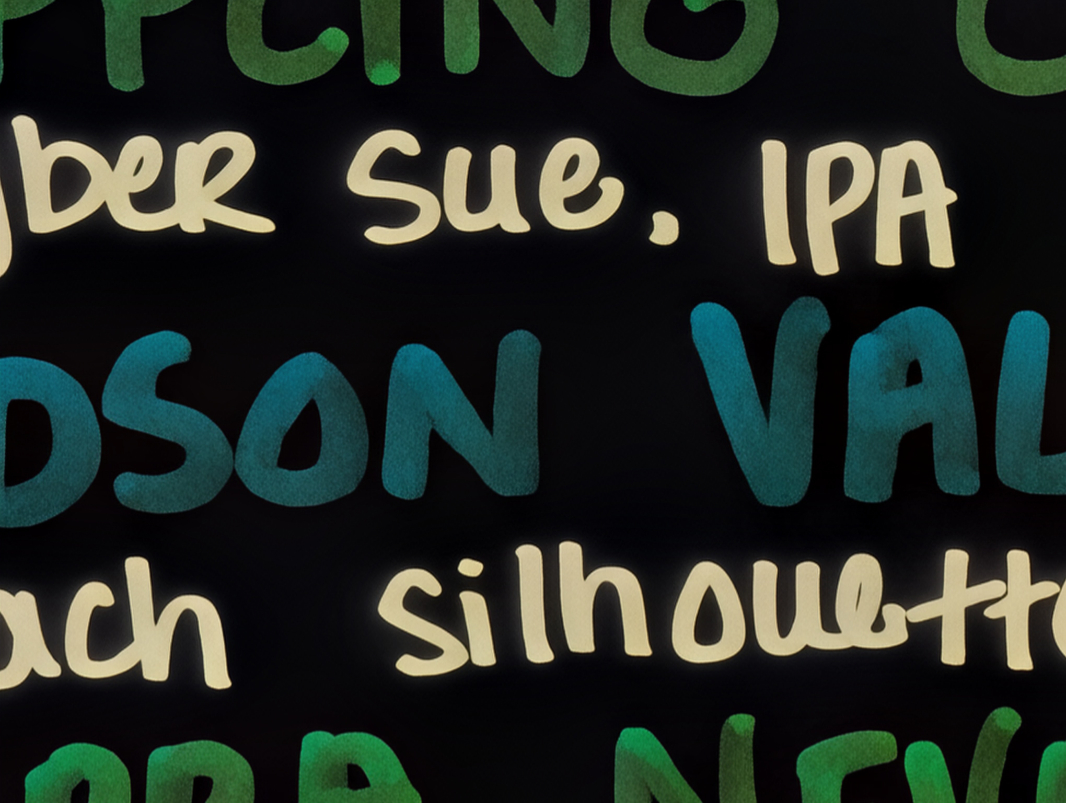

I’ve only taken a handful of long-range shots like this, but then I just got the phones yesterday. Inside, I’ve zoomed to 60x across a bar to photograph a bottle in a wine rack and to get a nice closeup of text on a chalkboard-type whiteboard, and both were quite clear.

There was a sliver of a moon visible last night, so I took several shots that ranged from blurry to painterly to reasonably accurate.

And I’ve taken a few more inside shots this morning, at 80x, of items in the room I’m in. Of all these shots, only the nighttime moon shots were problematic, and then only some of them.

🪄 AI next

I also want to test the many AI capabilities in the Pixel 10 series, especially the new features, many of which are exclusive to these new devices, at least for now. The key new feature here is Magic Cue, but as other reviewers point out, it doesn’t appear immediately and can be difficult to experience until you’ve used the phone for a while.

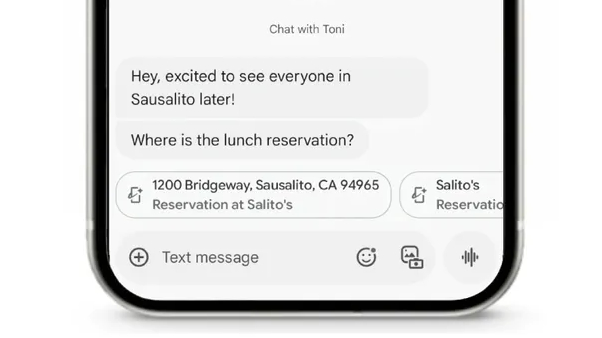

The idea behind Magic Cue is solid, and it mirrors how I think other platforms are integrating, or will integrate, AI capabilities: it will surface capabilities that have, to date, been locked in apps or online services, and it will do so automatically and when needed. For example, Google says that if you receive a text message asking about a time, date, or location for some event, it will proactively try to match that request to information in Google Calendar. If there’s a hit, it will then provide a suggestion directly in Messages so you can tap it as you might tap other suggestions, like auto-replies, and add the information to the chat.

Image source: Google

That’s a simple enough example, so I asked my wife to text me a question about the time of the Monday flight to Berlin. Nothing. Then I asked her to text me a question about my sister’s phone number. In that case, I got standard auto-reply suggestions like “Let me check,” “No,” and so on. But no Magic Cue.

Magic Cue uses the Tensor G5 and one of over 20 on-device AI models that Google preloads on the new Pixels. And I assume it requires some buy-in on the app and services side, so it only works with a limited range of first-party Google apps and services for now, including the Messages app I was using for texts, the Google Calendar app I use for my schedule, and several others. Compatibility with Google Photos is coming in early September. I’m sure more will follow.

But it doesn’t work yet, at least not for me. And that’s likely tied to my just getting started with the new phones. Presumably, these features will light up over time. So I will move on for now.

🌈 Photos + AI

Google was an early proponent of AI-based photo editing capabilities and its Magic Eraser feature, in particular, was an early wake-up call about the impact this technology will have on images. And then audio and video, of course. That this and other AI features have triggered a new round of handwringing over what’s real and what’s not was easily predictable. But as I’ve pointed out, these aren’t new questions. We asked them at the dawn of the still camera era, we asked them about Photoshop, and we will keep asking them.

I don’t see any issues with this type of thing. At a high level, AI is all about saving us time. And while professional photographers may prefer spending time to get that perfect shot, most of us just want to capture a moment and move on. A few years ago, I might have waited several seconds when taking a photo of a person or group of people if there were people in the background or other distractions. Or perhaps I would take several shots, since we don’t have to worry about film limits anymore, and then pick the best one. But today we have other options. We can simply remove objects from a photo that we find undesirable.

Oddly, I don’t need this functionality very often. Or perhaps I just don’t think about it all that much. But I scanned through some recent pictures on the Pixel 10 Pro XL so I could see how Magic Eraser and the other AI features had evolved. And I got stuck pretty quickly, because Magic Eraser is now quite seamless. It lives up to its name.

Yesterday, I took dozens of photos of the Pixel 10 series phones while I was setting up the Pixel 10 Pro XL and doing other work, and some of them made their way into my Google Pixel 10 Series First Impressions post. When I take photos like this, I try to orient them so that there’s nothing superfluous in the shots when possible, and I always have the 16:9 aspect ratio cropping I have to perform in the back of my mind. This shot is an example of one in which I had to crop close to the subject specifically to edit out the corner of the laptop on the left and another phone over on the right.

Looking at this in Google Photos on the Pixel 10 Pro XL, it occurred to me that this might be a good candidate for Magic Eraser: I could remove both of those superfluous objects from the shot instead of cropping so closely to the subject. And so I gave it a try. And though I might have spent a few more seconds on fine-tuning were I going to use this version of the picture on the site, the results were good enough.

Some other edits were more impressive. In this case, I had taken a zoom shot of a glass of Guinness on a bar from across the room, but people behind the bar made it less interesting.

When I used Magic Eraser to remove that person on the left, it somehow created the contents of the bar behind him.

Then I used it again to remove the person on the right.

In another photo depicting a zoom view down a street towards some water, there are groups of people walking around, which is perfectly normal and not the sort of thing I’d usually think about editing.

But what the heck, I used Magic Eraser to remove the first two groups of people closest to the front. And it seamlessly filled in the (or “a”) background, removing them without leaving any obvious artifacts.

To many, this isn’t news. And it’s certainly not new, in that Magic Eraser has been available since 2021 with the Pixel 6 series. But it’s also the tip of the proverbial iceberg when it comes to the AI photo editing capabilities that Google has conjured up. And it’s more impressive today than it’s ever been.

OK, that’s enough for this morning. But I’ll have more soon.