Last week the National Energy Research Scientific Computing Center (NERSC) and Lawrence Berkeley National Laboratory (LBNL) issued a comprehensive report on the state of quantum computing and its prospects, as seen through the lens of NERSC’s application mission.

The new report, Quantum Computing Technology Roadmaps and Capability Assessment for Scientific Computing – An analysis of use cases from the NERSC workload, is a must-read for quantum watchers. It’s rare to get such a detailed overview from DOE. The report includes a quantum tech primer (skip it if you’re already knowledgeable), substantial dives into promising workloads, a review of quantum modalities and vendor roadmaps, and projections about the future.

The report leaves little doubt that quantum computing will play a major role at NERSC. “We analyze the NERSC workload and identify materials science, quantum chemistry, and high-energy physics as the science domains and application areas that stand to benefit most from quantum computers. These domains jointly make up over 50% of the current NERSC production workload, which is illustrative of the impact quantum computing could have on NERSC’s mission going forward.”

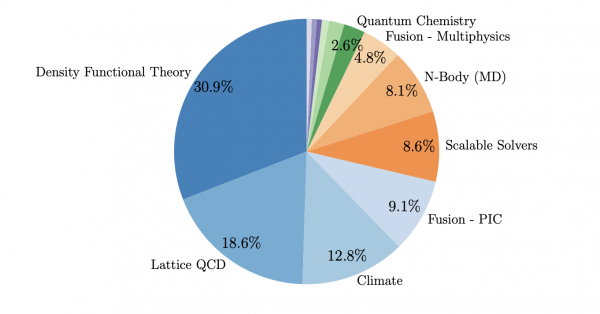

Figure 2.1 summarizes the resource allocation for various workloads on NERSC systems. Thanks to NERSC’s ongoing efforts, for example through the NERSC Science Acceleration Program (NESAP), most of Perlmutter’s compute performance stems from the GPU partition.

Here’s an excerpt from the executive summary:

“Quantum computers may fundamentally alter the HPC landscape and profoundly advance the DOE SC mission by the end of the decade. Quantum Computing (QC) is expected to (i) lead to exponential speedups for important scientific problems that classical HPC cannot solve, (ii) do so in an energy-efficient manner that is decoupled from Moore’s law, and (iii) offer opportunities and challenges to develop new paradigms for HPC-QC integration. The analysis in this report shows that at least 50% of the DOE SC production workload, which aggregates the computational needs of more than 12,000 NERSC users across the DOE landscape, is allocated to solving problems for which exponential improvements in terms of problem complexity, time-to-solution, and/or solution accuracy are anticipated with the advent of QC.

“We focus on three major scientific domains of strategic relevance to NERSC’s mission — materials science, quantum chemistry, and high energy physics (HEP)— and collect over 140 end-to-end resource estimates for benchmark problems from the scientific literature. We draw three main conclusions from this analysis.

“First, Hamiltonian simulation of quantum mechanical systems, also known as real-time evolution, is the key quantum algorithmic primitive upon which many known applications and speedups rely.

“Second, the end-to-end resource estimates differ significantly by scientific domain. Condensed matter physics offers a prime candidate for early quantum advantage as model problems relevant to materials science are more easily mapped to quantum computers and thus the least quantum resources are required at a given problem scale. Problems in quantum chemistry, including electronic structure, require an intermediate number of resources as the encoding overhead is larger. High energy physics problems, including lattice gauge theories, show the most dramatic scaling due to the encoding overhead encountered in including both fermionic and gauge degrees of freedom.

“Third, we show that, while asymptotically optimal algorithms exist, constant factor algorithmic improvements over the past five years have reduced the quantum resources needed to compute the ground state energy of a strongly-correlated molecule by orders of magnitude. We expect this trend to continue going forward and advance applications in all aforementioned domains. We show that current estimates of quantum resources required for scientific quantum advantage start at about 50 to 100 qubits and a million quantum gate operations and go up from there.”
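The first conclusion singles out Hamiltonian simulation, that is, approximating the time-evolution operator $e^{-iHt}$. One common textbook technique, not necessarily the one behind the report’s resource estimates, is Trotterization: split $H$ into pieces that are easy to exponentiate and alternate them over many short time steps. The pure-Python sketch below assumes a hypothetical single-qubit toy Hamiltonian $H = aX + bZ$, chosen only because its exact evolution has a closed form, and compares that exact result with a first-order Trotter product.

```python
import math

Matrix = list[list[complex]]

X: Matrix = [[0, 1], [1, 0]]   # Pauli X
Z: Matrix = [[1, 0], [0, -1]]  # Pauli Z
I2: Matrix = [[1, 0], [0, 1]]  # 2x2 identity


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 2x2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def exp_involution(p: Matrix, theta: float) -> Matrix:
    """exp(-i*theta*P) for any 2x2 P with P @ P = I: cos(theta) I - i sin(theta) P."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c * I2[i][j] - 1j * s * p[i][j] for j in range(2)] for i in range(2)]


def exact_evolution(a: float, b: float, t: float) -> Matrix:
    """exp(-i t (aX + bZ)) in closed form, using (aX + bZ)^2 = (a^2 + b^2) I."""
    norm = math.hypot(a, b)
    unit = [[(a * X[i][j] + b * Z[i][j]) / norm for j in range(2)] for i in range(2)]
    return exp_involution(unit, norm * t)


def trotter_evolution(a: float, b: float, t: float, steps: int) -> Matrix:
    """First-order Trotter product (exp(-i a X dt) exp(-i b Z dt))^steps with dt = t/steps."""
    dt = t / steps
    step = matmul(exp_involution(X, a * dt), exp_involution(Z, b * dt))
    u = I2
    for _ in range(steps):
        u = matmul(step, u)
    return u


def max_deviation(u: Matrix, v: Matrix) -> float:
    """Largest entry-wise absolute difference between two 2x2 matrices."""
    return max(abs(u[i][j] - v[i][j]) for i in range(2) for j in range(2))


if __name__ == "__main__":
    a, b, t = 1.0, 1.0, 1.0
    exact = exact_evolution(a, b, t)
    for steps in (1, 10, 100):
        err = max_deviation(trotter_evolution(a, b, t, steps), exact)
        print(f"{steps:4d} steps: max entry deviation {err:.2e}")
```

Because $X$ and $Z$ do not commute, the first-order error shrinks roughly in proportion to $1/\text{steps}$; if either coefficient is zero the two terms commute and the product becomes exact. Realistic many-body Hamiltonians have many non-commuting terms, which is what pushes gate counts up.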

Besides science domains, the report also singles out broad application areas, including linear algebra, simulation of differential equations, optimization and search, and machine learning and artificial intelligence.

About ML and AI, the report says, “The integration of QC with Machine Learning (ML) and Artificial Intelligence (AI) is poised to overcome several fundamental computational barriers faced by classical methods. Quantum Processing Units (QPUs) offer the potential to address key deficiencies in classical ML, particularly in the efficient handling of high-dimensional feature spaces, combinatorial optimization, and probabilistic sampling. For example, quantum kernel methods can map data into exponentially large Hilbert spaces, enabling the creation of classifiers and regressors that are classically infeasible.”
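The quantum kernel idea in that quote can be illustrated with a small classical simulation. The sketch below assumes a hypothetical feature map (a layer of $R_Y(x_k)$ rotations, a chain of CZ gates, then a second $R_Y$ layer) and computes the common fidelity kernel $k(x, y) = |\langle\psi(x)|\psi(y)\rangle|^2$. Simulating it this way requires storing all $2^n$ amplitudes for $n$ features, which shows where the “exponentially large Hilbert space” comes from; whether a given kernel is actually hard to compute classically is a separate question this toy circuit does not address.

```python
import math


def apply_ry(state: list[float], qubit: int, theta: float) -> list[float]:
    """Apply R_Y(theta) to one qubit of a real statevector (little-endian indexing)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    out = state[:]
    bit = 1 << qubit
    for i in range(len(state)):
        if not (i & bit):  # visit each (qubit=0, qubit=1) amplitude pair once
            a0, a1 = state[i], state[i | bit]
            out[i] = c * a0 - s * a1
            out[i | bit] = s * a0 + c * a1
    return out


def apply_cz(state: list[float], q1: int, q2: int) -> list[float]:
    """Controlled-Z: flip the sign of amplitudes where both qubits are 1."""
    mask = (1 << q1) | (1 << q2)
    return [-amp if (i & mask) == mask else amp for i, amp in enumerate(state)]


def feature_state(x: list[float]) -> list[float]:
    """Hypothetical feature map on len(x) qubits: R_Y layer, CZ chain, R_Y layer."""
    n = len(x)
    state = [0.0] * (2 ** n)
    state[0] = 1.0  # start in |00...0>
    for k, xk in enumerate(x):
        state = apply_ry(state, k, xk)
    for k in range(n - 1):
        state = apply_cz(state, k, k + 1)
    for k, xk in enumerate(x):
        state = apply_ry(state, k, xk)
    return state


def fidelity_kernel(x: list[float], y: list[float]) -> float:
    """k(x, y) = |<psi(x)|psi(y)>|^2; amplitudes are real here, so no conjugation is needed."""
    overlap = sum(a * b for a, b in zip(feature_state(x), feature_state(y)))
    return overlap ** 2


def kernel_matrix(data: list[list[float]]) -> list[list[float]]:
    """Gram matrix that a classical kernel method (e.g. an SVM) could consume."""
    return [[fidelity_kernel(u, v) for v in data] for u in data]


if __name__ == "__main__":
    samples = [[0.1, 0.7, 1.3], [0.4, -0.2, 0.9], [1.5, 0.3, -0.8]]
    for row in kernel_matrix(samples):
        print(" ".join(f"{k:.3f}" for k in row))
```

The resulting Gram matrix can be handed to any classical kernel method; in a hybrid setup only the kernel evaluations would move to a QPU.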

Clearly, there’s a lot to unpack in the report (full table of contents at end of article).

While widespread practical quantum computing remains years away — how many years is uncertain — the technology and business ramp-up has suddenly surged, marked by expanded fund-raising (PsiQuantum’s $1 billion and Quantinuum’s $600 million), new IPOs (Infleqtion, Horizon Quantum), and rising geopolitical competition. (See HPCwire article, DoD’s 2024 China Report Highlights Plans for AI and Quantum in Military Use).

Among many other things, the report recommends a new benchmarking tool — “We anticipate that the execution time of large-scale quantum workflows will become a major performance parameter and propose a simple metric, the Sustained Quantum System Performance (SQSP), to compare system-level performance and throughput for a heterogeneous workload.”

While the report doesn’t take sides, it does offer capsule summaries of vendor technologies and roadmaps, including: superconducting qubits (Alice & Bob, Google Quantum AI, IBM, IQM, Oxford Quantum Circuits, and Rigetti Computing); trapped ions (IonQ, Quantinuum); neutral atoms (Infleqtion, Pasqal, Alpine Technologies, Atom Computing, QuEra); and others (Equal1, Microsoft, PsiQuantum, Xanadu, Quantum Circuits).

Here are three examples of short vendor descriptions:

“Google Quantum AI. Google Quantum AI is building quantum processors based on superconducting transmon qubits arranged in a square grid topology where each qubit has four nearest neighbors. This naturally matches the connectivity requirements for a surface code. Google’s current 105-qubit system, named Willow, was announced in late 2024, while the previous system, named Sycamore, was originally announced in 2019 with 53 qubits and upgraded to 70 qubits in 2023. Google Quantum AI presents their technology roadmap in terms of 6 milestones and we summarize them in Figure 5.2. The first milestone, labeled beyond classical, was achieved in 2019 on the Sycamore device. Milestone 2, labeled Quantum Error Correction, was achieved in 2023 on the second generation Sycamore-2 device. Future milestones 3 through 6 continuously improve both the scale and the quality of the system and Google reports the expected number of physical qubits and the logical error rates in their roadmap. Based on this information, we estimate the code distance using Equation (3.4), which in turn allows us to estimate the number of logical qubits. As their milestones have no estimates on when they will become available, we allow for a 3 year period between consecutive milestones, extrapolating from the announcement of Willow. We assume a physical error rate of $10^{-3}$ up to milestone 4, and $5 \times 10^{-4}$ afterwards.

“Quantinuum. Quantinuum is a quantum computing company headquartered in Cambridge, United Kingdom and Broomfield, Colorado that was formed after the merger of Cambridge Quantum and Honeywell Quantum Solutions. Quantinuum is building trapped ion quantum computers based on Quantum Charge-Coupled Device (QCCD) architecture. Their H-series systems are currently industry-leading in terms of the quantum volume benchmark for NISQ systems. The company has announced their roadmap of ion trap devices up to the Apollo system in 2029 and specifies milestones in terms of qubits and error rates. Note that Quantinuum reports the Apollo system to have “hundreds” of logical qubits; we choose 500 as a proxy. The Quantinuum roadmap is summarized in Figure 5.8. One could expect qLDPC or many-hypercube codes as their strategy for error correction due to high qubit connectivity.

“Pasqal. Pasqal is a quantum computing startup based in France that is developing quantum computers based on arrays of rubidium atoms. The company has recently demonstrated the capability to load large arrays of over 1,000 atoms and announced their technical roadmap in June of 2025, which we summarize in Fig. 5.10. They aim to combine analog with FTQC approaches in future hybrid systems such as Centaurus and Lyra. Although we were not able to identify specific details on this hybrid approach, recent research suggests that analog mode may be used for preparing an initial many-body state that is subsequently used by a quantum algorithm running in digital mode. We remark that Pasqal is one of the few companies reporting clock speeds for their future systems.”
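The Google description mentions estimating code distance from physical and logical error rates via the report’s Equation (3.4), which is not reproduced in this article. As an assumed stand-in, the sketch below uses the widely quoted surface-code heuristic $p_L \approx A\,(p/p_{\mathrm{th}})^{(d+1)/2}$ with illustrative values $A = 0.1$ and $p_{\mathrm{th}} = 10^{-2}$, and assumes a rotated surface code that spends $2d^2 - 1$ physical qubits per logical qubit. The function names, defaults, and example numbers are hypothetical and may differ from the report’s method.

```python
def code_distance(p_phys: float, p_target: float,
                  p_threshold: float = 1e-2, prefactor: float = 0.1,
                  d_max: int = 101) -> int:
    """Smallest odd d with prefactor * (p_phys / p_threshold) ** ((d + 1) / 2) <= p_target.

    Assumed surface-code heuristic, used here as a stand-in for the report's Equation (3.4).
    """
    if not 0 < p_phys < p_threshold:
        raise ValueError("physical error rate must be positive and below threshold")
    ratio = p_phys / p_threshold
    d = 3
    while prefactor * ratio ** ((d + 1) / 2) > p_target:
        d += 2  # surface-code distances are conventionally odd
        if d > d_max:
            raise ValueError("target logical error rate not reachable below d_max")
    return d


def logical_qubits(n_physical: int, d: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits per logical qubit."""
    return n_physical // (2 * d * d - 1)


if __name__ == "__main__":
    # Hypothetical milestone: 10,000 physical qubits and a target logical error rate of 2e-9,
    # with the report's assumed physical error rate of 1e-3.
    d = code_distance(1e-3, 2e-9)
    print(d, logical_qubits(10_000, d))  # prints: 15 22
```

Under these assumptions a lower physical error rate shrinks the required distance, and since the per-logical-qubit footprint grows as $d^2$, the logical qubit count for a fixed device rises quickly. That is one reason projections like these depend strongly on the assumed physical error rate.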

Readers will get the most out of the full report, with its accompanying tables and charts.

About vendor roadmaps, the report notes: “The different vendor roadmaps report the number of qubits, the first metric, at their technology milestones either by specifying the number of physical qubits, logical qubits, or both, or remaining ambiguous. The distinction between physical qubits and logical qubits becomes relevant as the field matures from Noisy Intermediate Scale Quantum (NISQ) systems to (early) Fault-Tolerant Quantum Computing (FTQC). In a NISQ system the (noisy) physical qubits are used directly as a platform for logical (but noisy) quantum operations, while in a FTQC many imperfect physical qubits work together in a carefully orchestrated manner to represent fewer logical qubits with improved performance.

“We observe that different milestones either specify that the proposed system is a NISQ system or a (early) FTQC system, or do not specify which category the system falls into. In the latter case, we assume that systems that can run $10^4$ gates or fewer are NISQ systems (labeled N), systems that can reliably run between $10^4$ and $10^6$ gates are early FTQC systems (labeled EF), and systems that can reliably run more than $10^6$ gates are FTQC systems (labeled F). The number of qubits $n_Q$ that we report in the P-vector is the number of physical qubits for NISQ systems and the number of logical qubits for (early) FTQC systems. The P-vector then estimates an upper bound on the performance of the technology that each vendor is developing. Any quantum application that requires at most $n_Q$ qubits and at most $n_G$ gates will be able to run on the forthcoming system. We denote this as $P_{\mathrm{app}} \le P_{\mathrm{qc}}$.”
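The classification rule and the componentwise $P_{\mathrm{app}} \le P_{\mathrm{qc}}$ test described in that passage are simple enough to write down directly. The sketch below follows the gate-count thresholds quoted above; the type and function names, and the example numbers in the usage snippet, are hypothetical and not taken from the report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PVector:
    n_qubits: int  # n_Q: physical qubits for NISQ, logical qubits for (early) FTQC
    n_gates: int   # n_G: number of gates the system can reliably run


def classify(n_gates: int) -> str:
    """N for <= 1e4 gates, EF for up to 1e6 gates, F beyond that (thresholds as quoted)."""
    if n_gates <= 10**4:
        return "N"
    if n_gates <= 10**6:
        return "EF"
    return "F"


def p_vector(n_physical: int, n_logical: int, n_gates: int) -> PVector:
    """Report physical qubits for NISQ milestones and logical qubits otherwise."""
    n_q = n_physical if classify(n_gates) == "N" else n_logical
    return PVector(n_q, n_gates)


def fits(app: PVector, qc: PVector) -> bool:
    """P_app <= P_qc: the application needs no more qubits and no more gates than offered."""
    return app.n_qubits <= qc.n_qubits and app.n_gates <= qc.n_gates


if __name__ == "__main__":
    # Hypothetical milestone: 10,000 physical / 100 logical qubits, 10^7 reliable gates.
    system = p_vector(n_physical=10_000, n_logical=100, n_gates=10**7)
    # Hypothetical application at the low end of the quoted estimates: 100 qubits, 10^6 gates.
    print(classify(system.n_gates), fits(PVector(100, 10**6), system))  # prints: F True
```

Because the comparison is componentwise, an application that exceeds the system on either axis, qubits or gates, does not fit, no matter how much headroom the other axis has.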

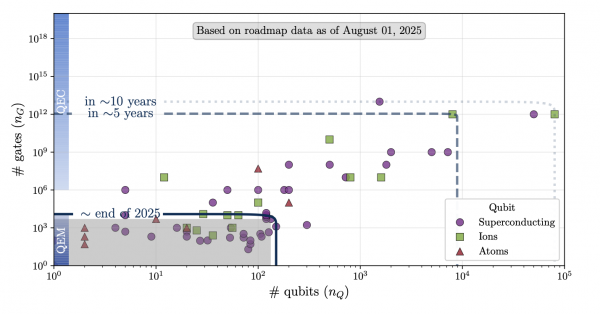

Shown here are two figures in which the NERSC report attempts to condense vendor roadmaps: the first plots modalities against performance and system size, and the second maps them against key NERSC application areas.

Figure 5.11: Overview of all technology milestones presented in Section 5.1. Purple circles indicate milestones for superconducting quantum computers, green squares indicate trapped ion systems, and red triangles correspond to neutral atom systems. The current limits on quantum computer performance correspond to the area in gray. The dark blue line shows the expected performance by the end of 2025, the medium blue line shows the expected performance in five years from the time of writing, and the light blue line shows the expected performance ten years from today.

Figure 5.12: Overview of vendor milestones expected by the end of 2025, in five years from today, and in ten years from today (without the individual milestones shown in Figure 5.11) together with the regions of application resource requirements for high energy physics in red, quantum chemistry in blue, and materials science in yellow shown previously in Figure 4.5. We observe a significant overlap between the capabilities of future quantum computers listed on the vendor roadmaps and requirements for scientific quantum advantage relevant to the U.S. Department of Energy (DOE) Office of Science (SC) mission.

As the plots show, the report expects hardware progress, coupled with error mitigation, to support many scientific applications in the Quantum Relevant Workload (QRW) within the next five years.

As noted earlier, this is a rich report with a fair amount of detail. It will be interesting to see how industry responds. The new report is free and downloadable.

Link to the NERSC/LBNL report: https://www.osti.gov/biblio/2588210

NERSC-LBNL Report TOC