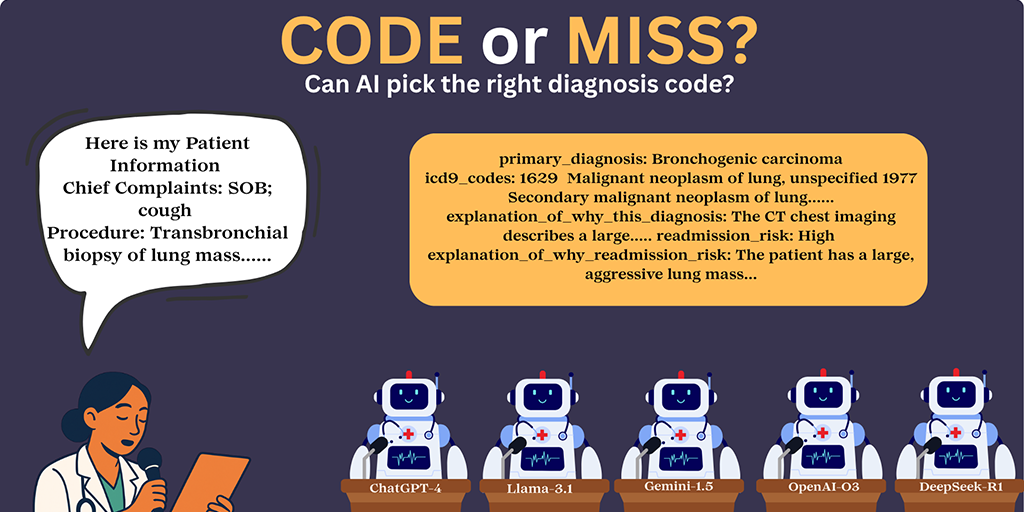

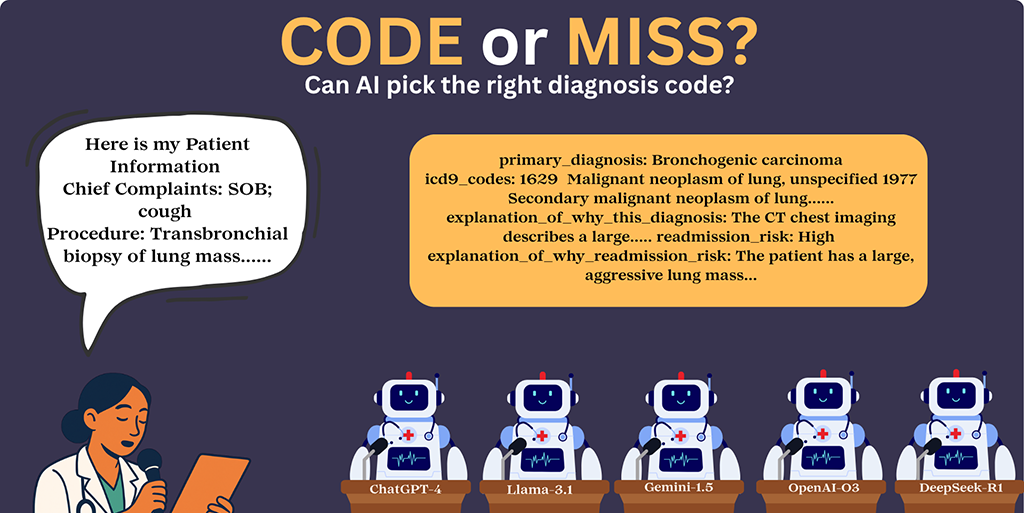

The researchers assessed how various large-language models would make health care decisions.

The researchers assessed how various large-language models would make health care decisions.

Could AI and large-language models become tools to better manage health care?

The potential is there – but evidence is lacking. In a paper published in the Journal of Medical Internet Research (JMIR), Luddy Indianapolis Associate Professor Saptarshi Purkayastha examines how large-language models (LLMs) such as ChatGPT-4 and OpenAI-O3 performed when tackling some essential clinical tasks. (Spoiler: large-language models have some large problems to overcome.)

The paper, “Evaluating the Reasoning Capabilities of Large Language Models for Medical Coding and Hospital Readmission Risk Stratification: Zero-Shot Prompting Approach,” published July 30, assesses whether LLMs can serve as general-purpose clinical decision support tools.

“For health care leaders and clinical researchers, the study offers a clear message: while LLMs hold significant potential to support clinical workflows, such as speeding up coding drafts and risk stratification, they are not yet ready to replace human expertise,” says Purkayastha, Ph.D. He is director of Health Informatics and associate chair of the Biomedical Engineering and Informatics department at IU’s Luddy School of Informatics, Computing, and Engineering in Indianapolis.

“The recommended path forward,” he adds, “lies in responsible deployment through hybrid human-AI workflows, specialized fine-tuning on clinical datasets, inspections for detecting bias, and robust governance frameworks that ensure continuous monitoring, auditing, and correction.”

Crunching the numbers

Large-language models are artificial intelligence systems designed to understand and generate human-like text.

Newer reasoning models, which emerged while the study was under way, are designed to work through problems step by step, allowing more logical decision-making, the paper notes.

For this study, Purkayastha and co-authors Parvati Naliyatthaliyazchayil, Raajitha Mutyala, and Judy Gichoya focused on five LLMs: DeepSeek-R1 and OpenAI-O3 (reasoning models), and ChatGPT-4, Gemini-1.5, and LLaMA-3.1 (non-reasoning models).

The study evaluated the models’ performance in three key clinical tasks:

Primary diagnosis generation

ICD-9 medical code prediction

Hospital readmission risk stratification

Working backwards

When you’re hospitalized, you probably have a lot of questions. By the time you’re discharged, you should have some answers.

In their study, Purkayastha and his co-authors reversed the process, giving the large-language models the results and letting them take it from there.

“We selected a random cohort of 300 hospital discharge summaries,” the authors explained in their JMIR research paper. The large-language models were given structured clinical content from five note sections:

Chief complaints

Past medical history

Surgical history

Labs

Imaging

The challenge: Could the models accurately generate a primary diagnosis, predict medical codes, and assess the risk of readmission?

A variable track record

The researchers used zero-shot prompting, meaning the LLMs were given no worked examples of the tasks in their prompts. Each model had to produce its answers from the instructions and the discharge summary content alone.

“All model interactions were conducted through publicly available web user interfaces,” the researchers noted, “without using APIs or backend access, to simulate real-world accessibility for non-technical users.”
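For readers unfamiliar with zero-shot prompting, the sketch below illustrates the idea: the prompt carries task instructions plus one patient's note sections, but no sample notes paired with correct answers. The five section names come from the study; the instruction wording, the choice to cover all three tasks in a single prompt, and the function name `build_zero_shot_prompt` are hypothetical assumptions, not the authors' actual prompt. The researchers typed their prompts into public web interfaces rather than running code like this.

```python
# Hypothetical sketch of a zero-shot prompt built from the five
# discharge-summary sections named in the study. The instruction wording
# and function name are illustrative assumptions, not the authors' prompt.

SECTIONS = [
    "Chief complaints",
    "Past medical history",
    "Surgical history",
    "Labs",
    "Imaging",
]

# Assumption: one prompt covering all three tasks; the study may have
# prompted for each task separately.
TASK_INSTRUCTIONS = (
    "Based only on the clinical information below, provide:\n"
    "1. The most likely primary diagnosis.\n"
    "2. The ICD-9 code(s) for that diagnosis.\n"
    "3. An estimate of the patient's risk of hospital readmission."
)


def build_zero_shot_prompt(note: dict[str, str]) -> str:
    """Combine the task instructions with one patient's note sections.

    Zero-shot means no worked examples (sample notes paired with correct
    answers) are included; the model sees only the instructions and this note.
    """
    lines = [TASK_INSTRUCTIONS, ""]
    for name in SECTIONS:
        # Mark absent sections explicitly instead of silently omitting them.
        text = note.get(name, "").strip() or "Not documented"
        lines.append(f"{name}: {text}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder input; a real note would hold de-identified summary text.
    print(build_zero_shot_prompt({"Chief complaints": "...", "Labs": "..."}))
```

A few-shot prompt would differ only by inserting one or more example notes with their correct diagnoses, codes, and risk levels ahead of the patient's note.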

How did the large-language models perform?

Primary diagnosis generation

This is where LLMs shone brightest. “Among non-reasoning models, LLaMA-3.1 achieved the highest primary diagnosis accuracy (85%), followed by ChatGPT-4 (84.7%) and Gemini-1.5 (79%),” the researchers reported. “Among reasoning models, OpenAI-O3 outperformed in diagnosis (90%).”

ICD-9 medical code prediction

Large-language models fell behind in this category. “For ICD-9 prediction, correctness dropped significantly across all models: LLaMA-3.1 (42.6%), ChatGPT-4 (40.6%), Gemini-1.5 (14.6%),” according to the researchers. OpenAI-O3, a reasoning model, scored 45.3%.

Hospital readmission risk stratification

Hospital readmission risk prediction showed low performance in non-reasoning models: LLaMA-3.1 (41.3%), Gemini-1.5 (40.7%), ChatGPT-4 (33%). The reasoning models did considerably better, with DeepSeek-R1 slightly ahead of OpenAI-O3 (72.66% vs. 70.66%), the paper states.
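To make these percentages concrete, the sketch below converts each reported accuracy into an approximate number of correct answers. It assumes every figure is a share of the same 300-summary cohort; the article does not say whether any summaries were excluded. Scores the article does not report, such as DeepSeek-R1's diagnosis and coding results, are left out rather than guessed, and the helper names are hypothetical.

```python
# Accuracies (%) as reported in the article. Assumption: each figure is the
# share of the 300 discharge summaries the model handled correctly.
# Scores the article does not report (e.g., DeepSeek-R1 on diagnosis) are omitted.
COHORT_SIZE = 300

REPORTED = {
    "Primary diagnosis": {
        "OpenAI-O3": 90.0, "LLaMA-3.1": 85.0, "ChatGPT-4": 84.7, "Gemini-1.5": 79.0,
    },
    "ICD-9 code prediction": {
        "OpenAI-O3": 45.3, "LLaMA-3.1": 42.6, "ChatGPT-4": 40.6, "Gemini-1.5": 14.6,
    },
    "Readmission risk": {
        "DeepSeek-R1": 72.66, "OpenAI-O3": 70.66, "LLaMA-3.1": 41.3,
        "Gemini-1.5": 40.7, "ChatGPT-4": 33.0,
    },
}


def approx_correct(pct: float, n: int = COHORT_SIZE) -> int:
    """Convert a percentage accuracy into an approximate count of correct cases."""
    return round(pct / 100 * n)


def best_model(task: str) -> tuple[str, float]:
    """Return the highest-scoring model (among those reported) for a task."""
    scores = REPORTED[task]
    name = max(scores, key=lambda m: scores[m])
    return name, scores[name]


if __name__ == "__main__":
    for task, scores in REPORTED.items():
        print(task)
        for model, pct in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
            print(f"  {model:<12} {pct:6.2f}%  ~{approx_correct(pct):3d} of {COHORT_SIZE}")
```

Under that assumption, OpenAI-O3's 90% diagnosis accuracy works out to about 270 of 300 correct diagnoses, while Gemini-1.5's 14.6% on ICD-9 prediction works out to roughly 44.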

The takeaways

“This study reveals critical insights with profound real-world implications,” Purkayastha says.

“Misclassification in coding can lead to billing inaccuracies, resource misallocation, and flawed health care data analytics. Similarly, incorrect readmission risk predictions may impact discharge planning and patient safety.

“When AI systems err or hallucinate, questions of liability and transparency become pressing. Ambiguities about who is accountable – developers, clinicians, or health care providers – raise legal and professional risks.”

Looking at reasoning vs. non-reasoning models, the researchers said, “Our results show that reasoning models outperformed nonreasoning ones across most tasks.”

The researchers concluded that OpenAI-O3 performed best overall on these tasks, noting, “Reasoning models offer marginally better performance and increased interpretability but remain limited in reliability.”

Their conclusion: when it comes to clinical decision-making and artificial intelligence, there’s a lot of room for improvement.

Identifying LLM shortcomings can lead to solutions

“These results highlight the need for task-specific fine-tuning and adding more human-in-loop models to train them,” the researchers concluded. “Future work will explore fine-tuning, stability through repeated trials, and evaluation on a different subset of de-identified real-world data with a larger sample size.

“The recorded limitations serve as essential guideposts for safely and effectively integrating LLMs into clinical practice.”

Purkayastha acknowledges the role of artificial intelligence in the clinical workflow going forward.

“As artificial intelligence continues to reshape the future of health care, this study represents an important contribution,” he says, “demonstrating original research with significant implications.

“It advocates for balanced optimism paired with caution and ethical vigilance, ensuring that the power of AI truly enhances patient care without compromising safety or trust.”