First came the CPU, then the GPU, and before long parallel workloads of every size were running on GPUs, from integrated graphics to hyperscale clusters. The large language models we use today have likewise been shaped by the parallel nature of data processing on the GPU. AI workloads now operate at a scale that is hard to comprehend, and today we are exploring the new unit of computing that emerged from the need for much faster systems: the AI token factory. An AI token factory is a system, or a combination of systems working in tandem, built for a single purpose: maximum throughput of AI tokens per second.

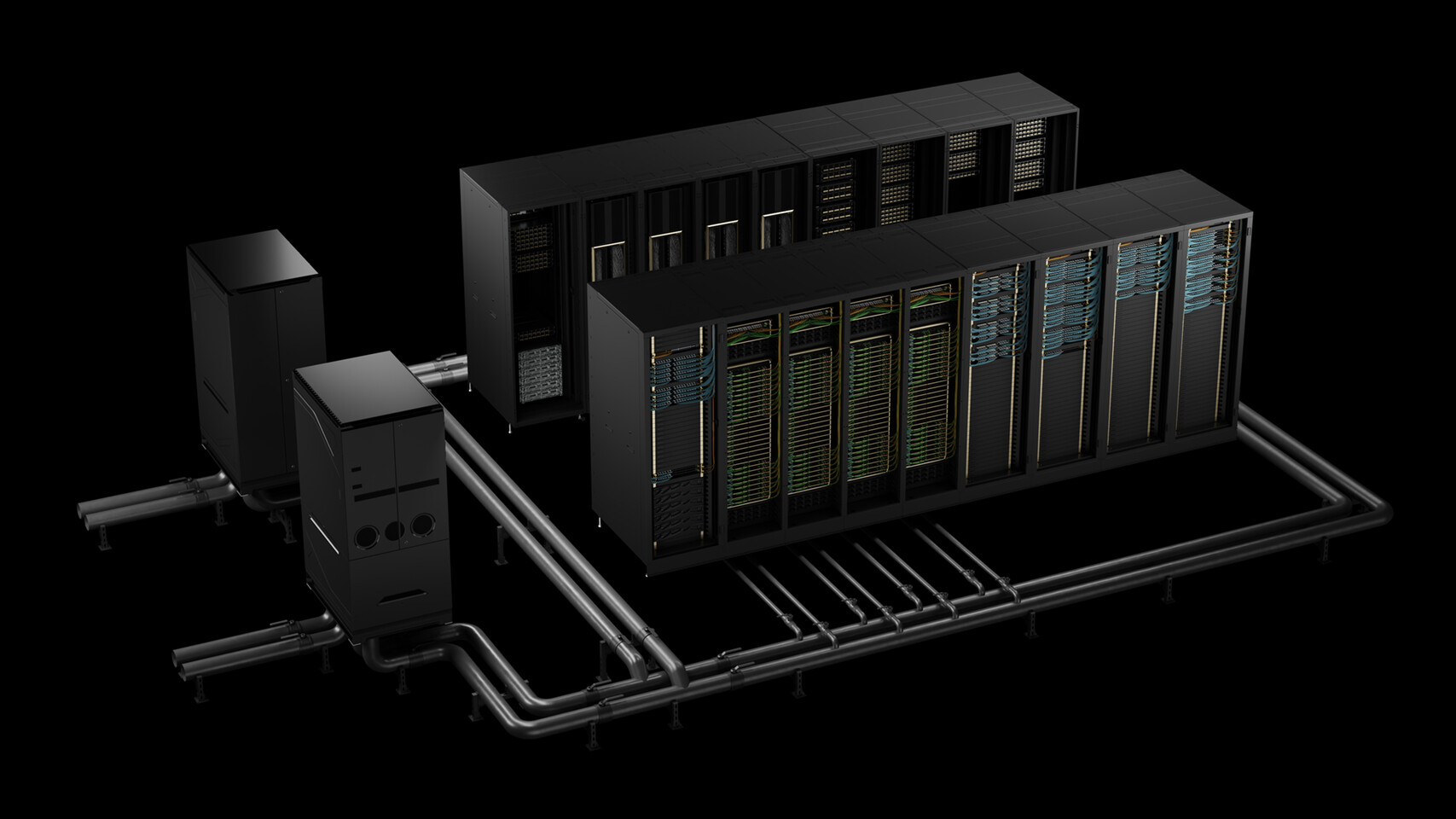

Large-scale AI systems designed for training runs, and later for inference, require an enormous amount of compute. For example, xAI’s Colossus 1 system has 100,000 NVIDIA H100 GPUs, while the next-generation Colossus 2 will use over 550,000 NVIDIA GB200 and GB300 GPUs. That is an insane amount of compute, all for a single purpose: training next-generation AI models and running inference on them. The “currency” of these models is the token, and the core specification of these data centers is how many tokens they can pump out, expressed as the final tokens-per-second throughput the hardware can achieve. First, inference ran on CPUs, then on GPUs, then on entire systems like NVIDIA NVL72, and now the latest unit of computing is an entire AI token factory. The AI token factory is the final form of AI systems, designed from the ground up for maximum efficiency in token creation, with a primary focus on throughput.

NVIDIA’s CEO Jensen Huang describes the AI factory as a full-stack intelligence plant that transforms electricity and raw data into usable intelligence: a purpose-built facility whose primary job is to generate huge volumes of tokens that can be reconstituted into words, images, music, research, or even molecular designs. He has repeatedly argued that we now have the tools to “manufacture intelligence” and that, in the near future, many companies will run an AI factory alongside their physical factories. Because the AI factory is engineered and measured end-to-end for token throughput, it makes sense to treat tokens per second as a practical new unit of computing, one that reflects the system-level performance that actually matters for AI applications. The AI token factory, then, is that new unit: a collection of systems dedicated solely to generating tokens toward a specific goal.

The AI token factory is becoming a common system type. Any dedicated accelerated computing infrastructure paired with software that transforms data into intelligence is, in practice, an AI factory. Its core elements include accelerated compute, networking and interconnects, software, storage, systems, and tools and services. When someone prompts an AI, the factory’s full stack springs into action. The prompt is tokenized into small units of meaning, such as fragments of images, sounds, or words. Each token is fed through GPU-powered models that perform compute-intensive reasoning in parallel, enabled by high-speed networking, and the system returns a response in real time. An AI factory runs this loop continuously for users around the globe, producing inference at an industrial scale and effectively manufacturing intelligence. Hence, every AI factory is at the same time an AI token factory, because its primary currency, the token, is the ultimate “byproduct.”
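The request loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any production factory is implemented: `toy_tokenize` is a hypothetical whitespace splitter standing in for a real subword tokenizer, and `CompletedRequest` and `factory_throughput` are names invented here to show how tokens per second might be measured at the system level.

```python
from dataclasses import dataclass


def toy_tokenize(prompt: str) -> list[str]:
    """Split on whitespace. A stand-in (assumption) for a real subword tokenizer."""
    return prompt.split()


@dataclass
class CompletedRequest:
    """Hypothetical record of one request the factory has finished serving."""
    prompt_tokens: int      # tokens the user sent in
    generated_tokens: int   # tokens the model produced in response


def factory_throughput(requests: list[CompletedRequest], wall_clock_seconds: float) -> float:
    """Generated tokens per second across all requests served in one time window."""
    if wall_clock_seconds <= 0:
        raise ValueError("wall_clock_seconds must be positive")
    total_generated = sum(r.generated_tokens for r in requests)
    return total_generated / wall_clock_seconds


# Three requests served concurrently within the same 2-second window.
prompt = "What is an AI token factory?"
served = [
    CompletedRequest(prompt_tokens=len(toy_tokenize(prompt)), generated_tokens=120),
    CompletedRequest(prompt_tokens=40, generated_tokens=300),
    CompletedRequest(prompt_tokens=12, generated_tokens=80),
]
print(factory_throughput(served, wall_clock_seconds=2.0))
```

The point of the sketch is that throughput is counted across every request in the window, not per user, which is why the metric describes the whole factory rather than a single GPU.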

Consider, too, the scale of these systems. With NVIDIA’s Hopper generation, we were used to seeing systems with tens of thousands of GPUs. For the current Blackwell generation, NVIDIA envisions clusters with a million GPUs. This applies to hyperscalers as well as to private AI labs like xAI, OpenAI, and Anthropic. Systems of that size, used first for training and later for inference, can serve prompts from millions of users. However, human prompting is about to be overtaken by agentic AI usage as the main driver of token generation. The ultimate goal of these systems is to automate tasks: a user sets the final goal, and the AI uses its agents to work out and implement the solution.

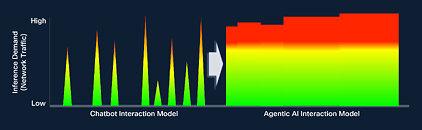

To illustrate the scale of token generation the agentic era will bring, Cisco’s recent investor presentation included a chart comparing the intensity of inference demand under two models: the chatbot model, and the agentic model, in which AI performs tasks in the background. The chart suggests that the load the agentic era is about to drive is so immense that even million-GPU clusters will have a hard time keeping up. Imagine thousands of frontier models, each with multiple trillions of parameters, running 24/7 on various tasks. That is enough to overload any known data center or cluster. Building and maintaining more infrastructure is therefore the only way for these systems to keep operating effectively. For now, demand is outpacing supply.

Leading AI labs such as xAI, OpenAI, Anthropic, and Meta have regularly restricted access to the top-tier features of their models to a certain number of queries per day, week, or month. Deep Research in ChatGPT, for example, is limited to up to 10 uses per month with a $20/month subscription and 150 uses with a $200/month subscription. Why? Because agentic AI works in the background on the tasks and research you have assigned to it, and the AI token factory is overloaded by the resulting resource demand. What most users fail to see is how significant these limits are: despite untold amounts of investment, the computing infrastructure is still not enough for the AI workloads we want to run. ChatGPT alone has nearly 700 million weekly users. You can imagine how much the enterprise customers who use these models via APIs add to the load. Google CEO Sundar Pichai recently confirmed that the company serves over 980 trillion tokens per month via Google’s APIs, more than double the 480 trillion tokens reported in May. The exponential growth in demand is incredible, and to find comparisons of similar computational scale you have to look back to the birth of the internet, 3D gaming becoming mainstream, or the early years of streaming services.
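To put the monthly figures above into the article’s own unit, tokens per second, a quick back-of-the-envelope conversion helps. The sketch below assumes a 30-day month and a perfectly even load, neither of which comes from the source, so the result is an average rate rather than a peak.

```python
# Assumption: a 30-day month with load spread evenly across every second.
SECONDS_PER_MONTH = 30 * 24 * 60 * 60


def average_tokens_per_second(tokens_per_month: int) -> float:
    """Convert a monthly token count into an average per-second rate."""
    return tokens_per_month / SECONDS_PER_MONTH


# Figures quoted in the text for Google's API traffic.
may_tokens = 480 * 10**12      # 480 trillion tokens per month (May)
latest_tokens = 980 * 10**12   # 980 trillion tokens per month (latest)

growth_factor = latest_tokens / may_tokens
average_rate = average_tokens_per_second(latest_tokens)

print(f"Growth since May: {growth_factor:.2f}x")
print(f"Average rate: {average_rate / 1e6:.0f} million tokens per second")
```

Under those assumptions, 980 trillion tokens per month works out to roughly 378 million tokens per second on average, and the jump from 480 trillion is a factor of about 2.04.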

These AI token factories now produce so many tokens every month that we are about to see another order of magnitude in scaling: quadrillions of generated tokens every month from a single AI lab. Imagine the combined reach of all these labs, which will amount to multiple quadrillions of tokens generated per month. With the agentic era upon us, AI token factories are firmly in the exponential growth phase.