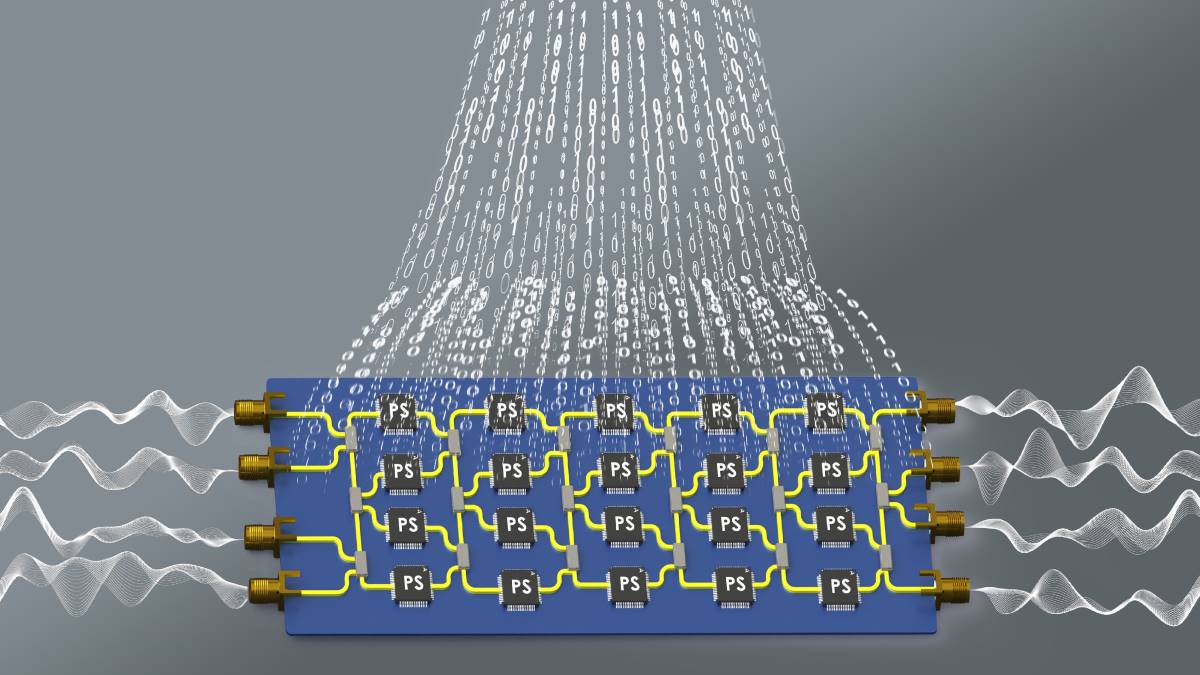

Fabricated unitary universal device and its power-divider layer. Credit: UTS

Australian and US researchers have designed an analogue computer circuit that uses radio and microwave signals to perform massive calculations while consuming less energy than conventional digital electronics.

“Unlike quantum systems, which face major challenges in scalability and stability, our analogue computing platform is feasible today and capable of delivering real-world applications much sooner,” says Dr Rasool Keshavarz from the Radio Frequency and Communication Technologies (RFCT) Lab at Australia’s University of Technology Sydney (UTS).

“This breakthrough paves the way for next-generation analogue radio frequency and microwave processors with applications in radar, advanced communications, sensors and space technologies that require real-time operations.”

The new findings are published in a paper in Nature Communications.

“We’ve bridged physics and electronics to design the first programmable microwave-integrated circuit that can execute matrix transformations, a type of mathematical operation fundamental to modern technologies,” adds Mohammad-Ali Miri, an associate professor at Rochester Institute of Technology, USA.

A matrix is a grid of numbers. A matrix transformation is a mathematical operation that converts one set of data, represented as a matrix, into another.
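To make the idea concrete, here is a minimal sketch of a matrix transformation in ordinary digital code. It is an illustration only and does not represent how the researchers' circuit works: the helper name `apply_matrix`, the 2×2 rotation matrix and the input values are all assumptions chosen for the example. A rotation is used because it preserves the overall size of the input, a property shared by the "unitary" transformations named in the image caption.

```python
import math

def apply_matrix(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector (a list of numbers)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

# Hypothetical example: a 2x2 matrix that rotates a point by 90 degrees.
theta = math.pi / 2
rotation = [
    [math.cos(theta), -math.sin(theta)],
    [math.sin(theta),  math.cos(theta)],
]

signal_in = [1.0, 0.0]                          # input data (illustrative values)
signal_out = apply_matrix(rotation, signal_in)  # transformed data

# The rotation changes the direction of the input but not its length.
length_in = math.hypot(*signal_in)
length_out = math.hypot(*signal_out)
```

A digital computer carries out these multiplications and additions one step at a time. The analogue approach described in the article instead relies on the signals themselves to perform the transformation as they pass through the circuit.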

Analogue computing processes information using continuous signals such as electromagnetic waves, which allows many calculations to happen in parallel and with far less energy than digital computing.

The ultra-fast analogue processors could power next-generation computing systems, including wireless networks, real-time radar and sensing, monitoring in mining and agriculture, and new tools for scientific research.

“This study marks the start of a broader research trajectory,” says Keshavarz.

“Follow-up studies are already in preparation to expand the technology toward practical system-level architectures, so that computing can move beyond digital limits.”