In addition to hardware costs, power generation, power delivery, and cooling will be among the main constraints for massive AI data centers in the coming years. X, xAI, SpaceX, and Tesla CEO Elon Musk argues that over the next four to five years, running large-scale AI systems in orbit could become far more economical than doing the same work on Earth.

That’s primarily due to ‘free’ solar power and relatively easy cooling. Nvidia CEO Jensen Huang agrees about the challenges facing gigawatt- or terawatt-class AI data centers, but says that space data centers are a dream for now.

Terawatt-class AI datacenter is impossible on Earth

Jensen Huang, chief executive of Nvidia, notes that the compute and communication equipment inside today’s Nvidia GB300 racks is extremely small compared to the total mass, because nearly the entire structure — roughly 1.95 tons out of 2 tons — is essentially a cooling system.

Musk emphasized that as compute clusters grow, the combined requirements for electrical supply and cooling escalate to the point where terrestrial infrastructure struggles to keep up. He claims that targeting continuous output in the range of 200 GW to 300 GW annually would require massive and costly power plants, as a typical nuclear power plant produces around 1 GW of continuous power output. Meanwhile, the U.S. generates around 490 GW of continuous power output these days (note that Musk says ‘per year,’ but what he means is continuous power output at a given time), so using the lion’s share of it on AI is impossible. Anything approaching a terawatt of steady AI-related demand is unattainable within Earth-based grids, according to Musk.
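To put those figures side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the round numbers quoted above (about 490 GW of U.S. continuous output and about 1 GW per nuclear plant); the function and constant names are illustrative, and the results are only as good as those approximations.

```python
# Round figures quoted in the article, treated as approximations.
US_CONTINUOUS_GW = 490.0   # approximate U.S. continuous power output
NUCLEAR_PLANT_GW = 1.0     # typical continuous output of one nuclear plant


def share_of_us_output(demand_gw: float) -> float:
    """Fraction of current U.S. continuous output that a demand represents."""
    return demand_gw / US_CONTINUOUS_GW


def plants_needed(demand_gw: float) -> float:
    """Number of 1 GW-class plants needed to supply the demand."""
    return demand_gw / NUCLEAR_PLANT_GW


# 200 GW and 300 GW are Musk's range; 1000 GW is the terawatt case.
for demand_gw in (200.0, 300.0, 1000.0):
    print(
        f"{demand_gw:>6.0f} GW -> {share_of_us_output(demand_gw):.0%} of U.S. output, "
        f"about {plants_needed(demand_gw):.0f} plants of 1 GW each"
    )
```

Under these assumptions, the 200 GW to 300 GW range works out to roughly 41% to 61% of today's U.S. output, and a terawatt is about twice the entire current figure.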

“There is no way you are building power plants at that level: if you take it up to say, a [1 TW of continuous power], impossible,” said Musk. “You have to do that in space. There is just no way to do a terawatt [of continuous power on] Earth. In space, you have got continuous solar, you actually do not need batteries because it is always sunny in space and the solar panels actually become cheaper because you do not need glass or framing and the cooling is just radiative.”

While Musk may be right about issues with generating enough power for AI on Earth and the fact that space could be a better fit for massive AI compute deployments, many challenges remain with putting AI clusters into space, which is why Jensen Huang calls it a dream for now.

“That’s the dream,” Huang exclaimed.

Remains a ‘dream’ in space too

On paper, space is a good place for both generating power and cooling electronics, as temperatures can be as low as -270°C in shadow. But there are many caveats. For example, temperatures can reach +120°C in direct sunlight. In Earth orbit, however, the swings are less extreme: –65°C to +125°C in Low Earth Orbit (LEO), –100°C to +120°C in Medium Earth Orbit (MEO), –20°C to +80°C in Geostationary Orbit (GEO), and –10°C to +70°C in High Earth Orbit (HEO).

LEO and MEO are not suitable for ‘flying data centers’ due to unstable illumination patterns, substantial thermal cycling, crossings of the radiation belts, and regular eclipses. GEO is more feasible, as it is almost always sunny there (there are annual eclipses too, but they are short) and the radiation environment is not too harsh.

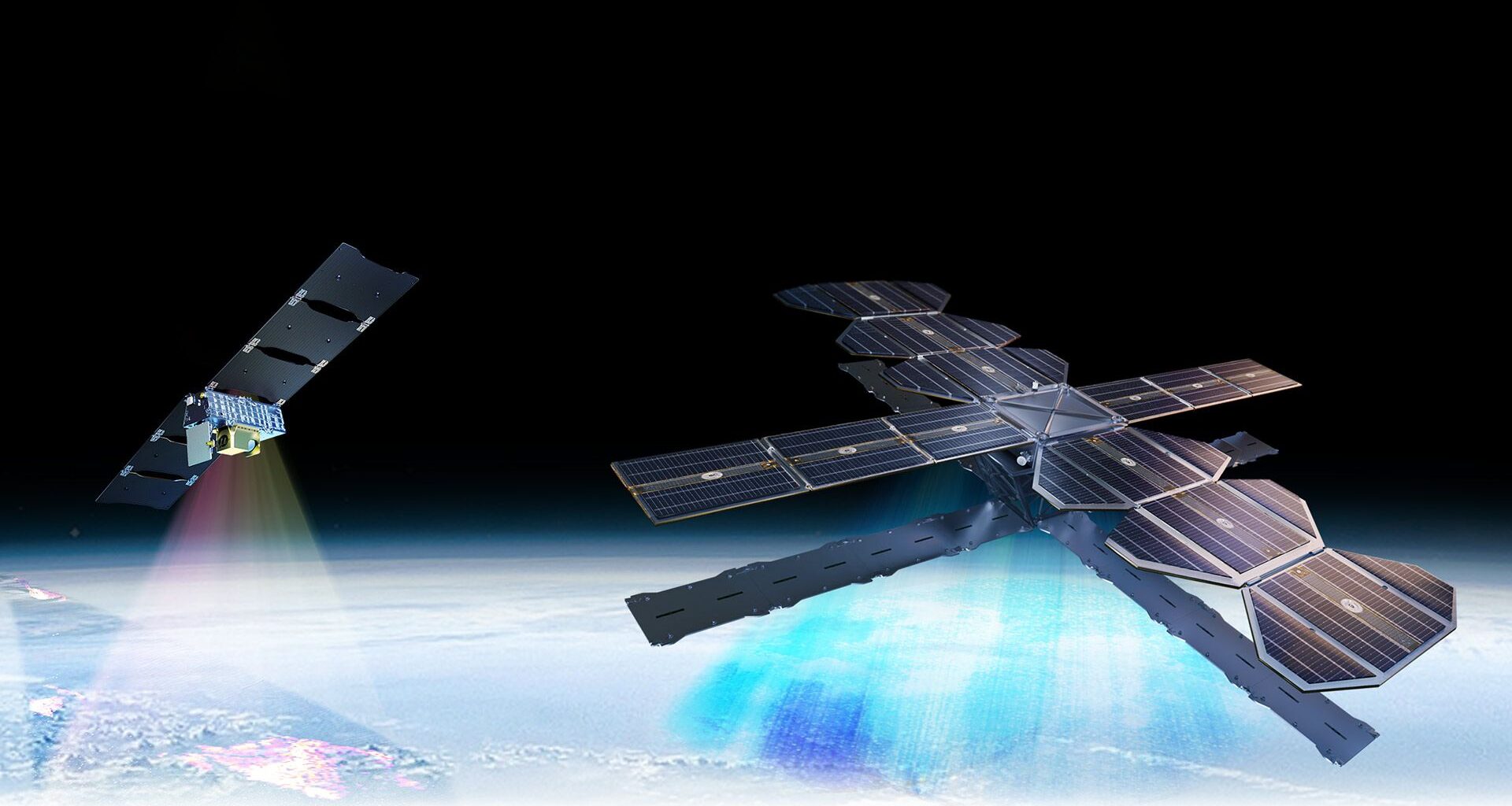

Even in GEO, building large AI data centers faces severe obstacles: megawatt-class GPU clusters would require enormous radiator wings to reject heat solely through infrared emission (radiation is the only way to shed heat in a vacuum, as Musk noted). This translates into tens of thousands of square meters of deployable structures per multi-gigawatt system, far beyond anything flown to date. Launching that mass would demand thousands of Starship-class flights, which would be extremely expensive and is unrealistic within Musk’s four-to-five-year window.
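How radiator size scales with heat load can be sketched with the Stefan-Boltzmann law, under which an ideal surface radiates ε·σ·T⁴ watts per square meter. The Python snippet below is a simplified estimate, not a thermal design: the 300 K radiator temperature, the emissivity of 0.9, and the two-sided panel are assumptions that do not come from the article, and the model ignores absorbed sunlight and Earth's infrared, both of which would increase the required area.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 * K^4)


def radiator_area_m2(
    heat_w: float,
    temp_k: float = 300.0,    # assumed radiator surface temperature
    emissivity: float = 0.9,  # assumed surface emissivity
    sides: int = 2,           # assumed flat panel radiating from both faces
) -> float:
    """Idealized radiator area needed to reject heat_w watts to deep space.

    Ignores absorbed sunlight and Earth's infrared, so real radiators
    would need to be larger than this estimate.
    """
    flux_w_per_m2 = emissivity * STEFAN_BOLTZMANN * temp_k**4
    return heat_w / (flux_w_per_m2 * sides)


# Example: a 1 MW heat load at the default assumptions.
print(f"{radiator_area_m2(1e6):,.0f} m^2 of radiator for 1 MW of heat")
```

In this model the area grows linearly with the heat load and shrinks with the fourth power of radiator temperature, so running the radiators hotter reduces their size considerably, at the cost of hotter electronics.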

Also, high-performance AI accelerators such as Blackwell or Rubin, as well as the accompanying hardware, still cannot survive GEO radiation without heavy shielding or complete rad-hard redesigns, which would slash clock speeds and/or require entirely new process technologies optimized for resilience rather than performance. This further reduces the feasibility of AI data centers in GEO.

On top of that, high-bandwidth connectivity with Earth, autonomous servicing, debris avoidance, and robotic maintenance all remain in their infancy given the scale of the proposed projects, which is perhaps why Huang calls it all a ‘dream’ for now.
