Researchers from Beijing University of Posts and Telecommunications have published a paper in the special issue “Integration of Computing and Networking: Architectures, Theories, and Practices” of Frontiers of Information Technology & Electronic Engineering (2024, Volume 25, Issue 5). The paper proposes a deep reinforcement learning (DRL) framework built on a graph neural network (GNN). The framework lets the agent jointly optimize network and computing resources and generalize across network topologies, so it adapts better when the topology changes dynamically.

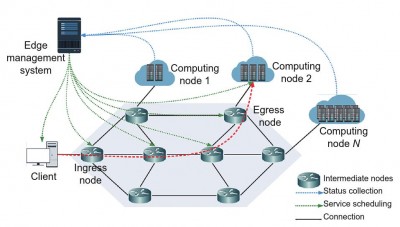

A computing force network (CFN) aims to connect and utilize distributed computing resources through the network to meet application requirements. Resource allocation in a CFN faces three challenges: network and computing resources must be considered together, optimization results must be obtained in a timely manner, and the allocation method must generalize to topologies it has not seen before. Graph neural networks can process non-Euclidean graph data, such as network topologies, and capture the spatial relationships between nodes and links. Combining them with deep reinforcement learning is therefore expected to address these resource allocation challenges, with potential further benefits such as higher resource utilization.

The GNN-based DRL architecture consists of five components: environment, agent, state, action, and reward. The agent interacts with the environment to make decisions. The state contains information about links and nodes, the actions include path selection among other decisions, and the reward function guides the agent toward maximizing network bandwidth and service scheduling efficiency. The framework integrates a message passing neural network (MPNN) into the DRL agent, so the input graph is processed in two phases: message passing and readout. The agent combines a deep Q-network (DQN) with the GNN to select computing nodes and allocate resources. The paper also describes preprocessing steps that reduce complexity, as well as the agent's learning process.
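The message passing and readout phases, together with Q-value-based action selection, can be sketched in plain Python. This is a minimal, untrained illustration under stated assumptions, not the authors' implementation: the class name `TinyMPNN`, the hidden size, the node features (free computing capacity plus flags marking the candidate path and the chosen computing node), link bandwidth as the only edge feature, and sum pooling as the readout are all hypothetical choices. Each candidate action is scored by running the MPNN on the state with that action applied, and the agent greedily picks the highest Q-value; `td_target` shows the standard deep Q-learning target used to train such a network.

```python
import math
import random


def relu(x):
    return max(0.0, x)


class TinyMPNN:
    """Hypothetical, untrained MPNN that maps (state, action) to a scalar Q-value."""

    def __init__(self, hidden=4, steps=3, seed=0):
        rng = random.Random(seed)
        self.hidden, self.steps = hidden, steps
        # Message function: neighbour state (hidden) + link bandwidth (1) -> hidden
        self.w_msg = [[rng.uniform(-0.5, 0.5) for _ in range(hidden + 1)] for _ in range(hidden)]
        # Update function: own state (hidden) + aggregated message (hidden) -> hidden
        self.w_upd = [[rng.uniform(-0.5, 0.5) for _ in range(2 * hidden)] for _ in range(hidden)]
        # Readout: pooled graph state (hidden) -> scalar Q-value
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]

    @staticmethod
    def _matvec(w, v):
        return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]

    def q_value(self, capacity, links, path):
        """capacity: {node: free compute}; links: {(u, v): free bandwidth}, undirected;
        path: candidate route whose last node is the chosen computing node (assumed)."""
        # Initial node state: [free compute, on-path flag, compute-node flag, padding...]
        h = {n: [capacity[n], float(n in path), float(n == path[-1])] + [0.0] * (self.hidden - 3)
             for n in capacity}
        nbrs = {n: [] for n in capacity}
        for (u, v), bw in links.items():
            nbrs[u].append((v, bw))
            nbrs[v].append((u, bw))
        # Message passing phase: several rounds of neighbour aggregation and state update
        for _ in range(self.steps):
            new_h = {}
            for n in h:
                agg = [0.0] * self.hidden
                for m, bw in nbrs[n]:
                    msg = [relu(x) for x in self._matvec(self.w_msg, h[m] + [bw])]
                    agg = [a + b for a, b in zip(agg, msg)]
                new_h[n] = [math.tanh(x) for x in self._matvec(self.w_upd, h[n] + agg)]
            h = new_h
        # Readout phase: sum-pool node states, then project to one Q-value
        pooled = [sum(h[n][i] for n in h) for i in range(self.hidden)]
        return sum(w * p for w, p in zip(self.w_out, pooled))


def select_action(model, capacity, links, candidate_paths):
    """Greedy DQN policy: score every candidate (path, computing node) and take the best."""
    return max(candidate_paths, key=lambda p: model.q_value(capacity, links, p))


def td_target(reward, next_q_values, gamma=0.95, done=False):
    """Deep Q-learning target: r + gamma * max_a' Q(s', a'), or just r at episode end."""
    return reward if done else reward + gamma * max(next_q_values)
```

In a full DQN agent, the weights would typically be trained by regressing `q_value` toward `td_target` on replayed transitions, and greedy selection would be mixed with ε-greedy exploration during training.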

The GNN-based DRL agent was trained and evaluated on datasets from SNDlib, where it outperformed the baseline solutions in cumulative reward. In experiments where the topology changed dynamically, with nodes deleted at random, the agent generalized well and kept its performance advantage.
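The node-deletion test can be illustrated with a toy harness. This sketch assumes details the article does not give: a small ring topology, the hypothetical helper names `remove_random_node`, `prune_candidates`, and `graph_embedding`, and a two-parameter linear message passing function standing in for a trained GNN. It shows why a GNN-based agent can be evaluated on a topology it was not trained on: the weights are shared across all nodes, so deleting a node changes the input graph but not the model, and only candidate actions that traverse the deleted node need to be discarded.

```python
import random


def remove_random_node(capacity, links, rng):
    """Drop one randomly chosen node and every link incident to it (hypothetical helper)."""
    victim = rng.choice(sorted(capacity))
    new_capacity = {n: c for n, c in capacity.items() if n != victim}
    new_links = {(u, v): bw for (u, v), bw in links.items() if victim not in (u, v)}
    return victim, new_capacity, new_links


def prune_candidates(paths, victim):
    """Candidate paths through the deleted node are no longer valid actions."""
    return [p for p in paths if victim not in p]


def graph_embedding(capacity, links, w_self=0.5, w_nbr=0.1, steps=2):
    """Shared-weight message passing with sum readout. Every node reuses the same
    two scalars, so the parameter count is independent of the topology size."""
    h = dict(capacity)
    for _ in range(steps):
        agg = {n: 0.0 for n in h}
        for (u, v), bw in links.items():
            agg[u] += bw * h[v]
            agg[v] += bw * h[u]
        h = {n: w_self * h[n] + w_nbr * agg[n] for n in h}
    return sum(h.values())


if __name__ == "__main__":
    rng = random.Random(42)
    capacity = {"A": 1.0, "B": 2.0, "C": 3.0, "D": 4.0}
    links = {("A", "B"): 1.0, ("B", "C"): 1.0, ("C", "D"): 1.0, ("A", "D"): 1.0}
    paths = [["A", "B"], ["A", "D", "C"], ["B", "C"]]
    victim, cap2, links2 = remove_random_node(capacity, links, rng)
    # The same weights score both the original and the reduced topology
    print(victim, graph_embedding(capacity, links), graph_embedding(cap2, links2))
    print(prune_candidates(paths, victim))
```

A trained agent would be compared against baselines by summing rewards over episodes on the reduced topology; this harness only shows that the model itself needs no change when a node disappears.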

The paper “Combining graph neural network with deep reinforcement learning for resource allocation in computing force networks” is authored by Xueying HAN, Mingxi XIE, Ke YU, Xiaohong HUANG, Zongpeng DU, and Huijuan YAO. The full text of the open-access paper is available at https://doi.org/10.1631/FITEE.2300009.