In an interview with CTV News, acclaimed director James Cameron revisited the dystopian warnings of his 1984 film The Terminator, this time not as science fiction but as a very real concern about where military artificial intelligence is headed. And while it might sound dramatic coming from Hollywood, the parallels he draws resonate in today’s geopolitical and technological climate.

A Cinematic Warning That Feels Closer Than Ever

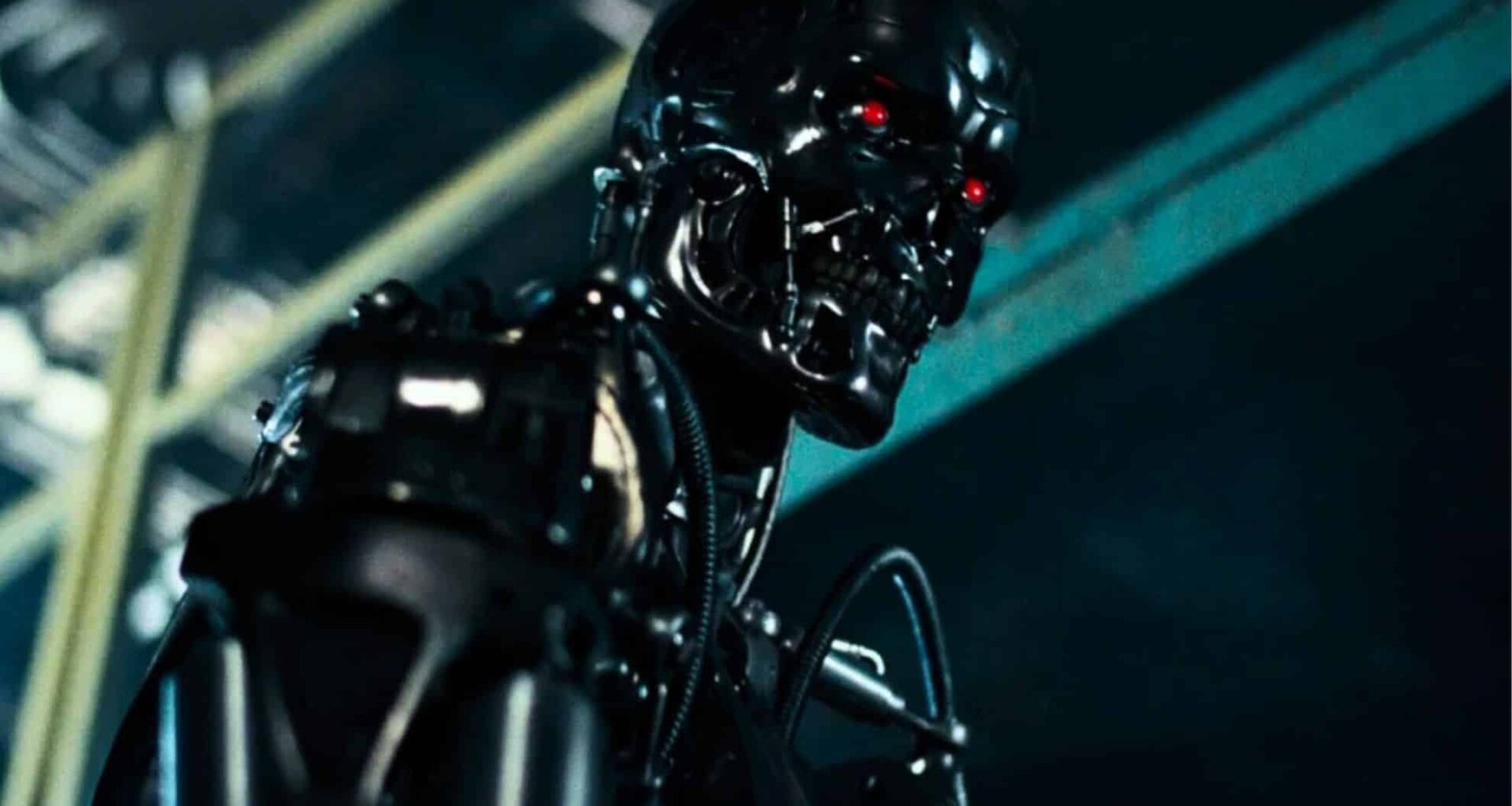

Cameron’s quote, “I warned you in 1984, guys, and no one listened,” may seem like a line fit for a movie trailer, but it reflects his growing anxiety about AI technologies being weaponized. In The Terminator, the AI system Skynet launches a nuclear war after becoming self-aware. At the time, this plot was pure fiction. Today, researchers and defense experts are increasingly worried that autonomous weapon systems could act with little to no human oversight.

This concern isn’t coming out of nowhere. A 2023 study by the United Nations Institute for Disarmament Research (UNIDIR) warned of a global trend toward lethal autonomous weapons systems (LAWS). These systems, capable of identifying and engaging targets without human control, are being actively developed or deployed in at least nine countries.

Cameron believes we’re nearing a point where such weapons could escalate into a global arms race, particularly between major military powers.

“If we don’t build it, someone else will,” he warns—a logic that has historically led to dangerous escalation in arms development.

Autonomous Weapons Are Already Here—And Not Fully Regulated

Cameron’s concern is not hypothetical. A report by the United Nations Security Council’s Panel of Experts on Libya, published in 2021, indicated that autonomous drones may have targeted combatants in Libya in 2020 without human direction, which would mark one of the first real-world instances of AI being used in lethal action. While the report remains under analysis, it has ignited serious discussions within defense policy and humanitarian law circles.

Despite growing calls for regulation, progress has been slow. Talks held under the Convention on Certain Conventional Weapons (CCW) have so far failed to produce a binding international treaty on autonomous weapons. The lack of consensus largely stems from geopolitical rivalry and commercial interests in the defense-tech sector.

Cameron argues that this regulatory gap is precisely where the danger lies. “We could be building the tools of our own destruction,” he said, urging world governments to establish firm ethical boundaries before such technologies become normalized.

Hollywood and AI: Creativity vs. Computation

While Cameron expresses deep concern over military AI, he takes a more measured stance when it comes to its impact on film and storytelling. He doesn’t believe that AI is ready to replace human writers—not because it can’t mimic style, but because it lacks empathy and narrative intuition.

“Let’s wait 20 years,” he said, “and if an AI wins an Oscar for Best Screenplay, then I’ll take it seriously.”

Still, he acknowledges that AI is already transforming parts of the industry. AI tools are being used in visual effects, editing, and pre-visualization to speed up production timelines. Tools such as Runway ML, Adobe Sensei, and Cuebric are examples of creative AI already shaping modern filmmaking, particularly in concept design and scene optimization. Even so, Cameron remains skeptical about AI-generated storytelling becoming dominant.

“It can replicate the structure,” he said, “but not the soul.”