Jiang, L. Y. et al. Health system-scale language models are all-purpose prediction engines. Nature 619, 357–362 (2023).

Singhal, K. et al. Large language models encode clinical knowledge. Nature 620, 172–180 (2023).

Chen, R. J. et al. Towards a general-purpose foundation model for computational pathology. Nat. Med. 30, 850–862 (2024).

Habicht, J. et al. Closing the accessibility gap to mental health treatment with a personalized self-referral chatbot. Nat. Med. 30, 595–602 (2024).

Lu, M. Y. et al. A multimodal generative AI copilot for human pathology. Nature 634, 466–473 (2024).

Wan, P. et al. Outpatient reception via collaboration between nurses and a large language model: a randomized controlled trial. Nat. Med. 30, 2878–2885 (2024).

Huang, K. et al. A foundation model for clinician-centered drug repurposing. Nat. Med. 30, 3601–3613 (2024).

Li, J. et al. Integrated image-based deep learning and language models for primary diabetes care. Nat. Med. 30, 2886–2896 (2024).

Liu, X. et al. A generalist medical language model for disease diagnosis assistance. Nat. Med. 31, 932–942 (2025).

Van Veen, D. et al. Adapted large language models can outperform medical experts in clinical text summarization. Nat. Med. 30, 1134–1142 (2024).

Johri, S. et al. An evaluation framework for clinical use of large language models in patient interaction tasks. Nat. Med. 31, 77–86 (2025).

Ao, G. et al. Comparative analysis of large language models on rare disease identification. Orphanet J. Rare Dis. 20, 150 (2025).

Shyr, C. Large language models for rare disease diagnosis at the Undiagnosed Diseases Network. JAMA Netw. Open 8, e2528538 (2025).

Weiner, S. J. & Schwartz, A. Listening for What Matters: Avoiding Contextual Errors in Health Care (Oxford Univ. Press, 2023).

Yu, K.-H. & Kohane, I. S. Framing the challenges of artificial intelligence in medicine. BMJ Qual. Saf. 28, 238–241 (2019).

Zhang, S., Liu, Q., Qin, G., Naumann, T. & Poon, H. Med-RLVR: emerging medical reasoning from a 3B base model via reinforcement learning. Preprint at https://doi.org/10.48550/arXiv.2502.19655 (2025).

Hager, P. et al. Evaluation and mitigation of the limitations of large language models in clinical decision-making. Nat. Med. 30, 2613–2622 (2024).

McDermott, M. B. A., Yap, B., Szolovits, P. & Zitnik, M. Structure-inducing pre-training. Nat. Mach. Intell. 5, 612–621 (2023).

Guo, L. L. et al. A multi-center study on the adaptability of a shared foundation model for electronic health records. npj Digit. Med. 7, 171 (2024).

Wornow, M. et al. The shaky foundations of large language models and foundation models for electronic health records. npj Digit. Med. 6, 135 (2023).

Pais, C. et al. Large language models for preventing medication direction errors in online pharmacies. Nat. Med. 30, 1574–1582 (2024).

Sabuncu, M. R., Wang, A. Q. & Nguyen, M. Ethical use of artificial intelligence in medical diagnostics demands a focus on accuracy, not fairness. NEJM AI 2, AIp2400672 (2024).

Li, M. M. et al. Contextual AI models for single-cell protein biology. Nat. Methods 21, 1546–1557 (2024).

Kather, J. N., Ferber, D., Wiest, I. C., Gilbert, S. & Truhn, D. Large language models could make natural language again the universal interface of healthcare. Nat. Med. 30, 2708–2710 (2024).

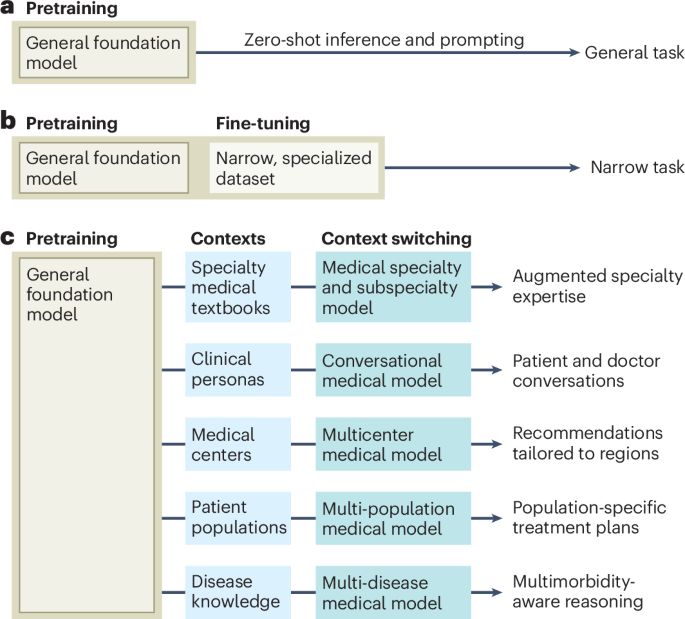

Brown, T. et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 33, 1877–1901 (2020).

Liu, H. et al. Few-shot parameter-efficient fine-tuning is better and cheaper than in-context learning. Adv. Neural Inf. Process. Syst. 35, 1950–1965 (2022).

Pan, J., Gao, T., Chen, H. & Chen, D. What in-context learning ‘learns’ in-context: disentangling task recognition and task learning. In Findings of the Association for Computational Linguistics 8298–8319 (ACL, 2023).

Min, S. et al. Rethinking the role of demonstrations: what makes in-context learning work? In Proc. 2022 Conference on Empirical Methods in Natural Language Processing 11048–11064 (ACL, 2022).

Chen, B., Zhang, Z., Langrené, N. & Zhu, S. Unleashing the potential of prompt engineering for large language models. Patterns 6, 101260 (2025).

Shen, S. et al. Multitask vision-language prompt tuning. In Proc. IEEE/CVF Winter Conference on Applications of Computer Vision 5656–5667 (IEEE, 2024).

Wang, W. et al. VisionLLM: large language model is also an open-ended decoder for vision-centric tasks. Adv. Neural Inf. Process. Syst. 36, 61501–61513 (2023).

Tanwani, A. K., Barral, J. & Freedman, D. RepsNet: combining vision with language for automated medical reports. In International Conference on Medical Image Computing and Computer-assisted Intervention 714–724 (Springer, 2022).

Shentu, J. & Al Moubayed, N. CXR-IRGen: an integrated vision and language model for the generation of clinically accurate chest X-ray image-report pairs. In Proc. IEEE/CVF Winter Conference on Applications of Computer Vision (IEEE, 2024).

Wu, S. et al. CollabLLM: from passive responders to active collaborators. In Proc. 42nd International Conference on Machine Learning (PMLR, 2025).

Alsentzer, E. et al. Few shot learning for phenotype-driven diagnosis of patients with rare genetic diseases. npj Digit. Med. 8, 380 (2025).

Goh, E. et al. Large language model influence on diagnostic reasoning: a randomized clinical trial. JAMA Netw. Open 7, e2440969 (2024).

Wang, L. et al. Prompt engineering in consistency and reliability with the evidence-based guideline for LLMs. npj Digit. Med. 7, 41 (2024).

Khattab, O. et al. DSPy: compiling declarative language model calls into state-of-the-art pipelines. In International Conference on Learning Representations (ICLR, 2024).

Yuksekgonul, M. et al. Optimizing generative AI by backpropagating language model feedback. Nature 639, 609–616 (2025).

Vaziri, M., Mandel, L., Spiess, C. & Hirzel, M. PDL: a declarative prompt programming language. Preprint at https://doi.org/10.48550/arXiv.2410.19135 (2024).

Lu, Y. et al. Towards doctor-like reasoning: medical RAG fusing knowledge with patient analogy through textual gradients. In 39th Conference on Neural Information Processing Systems (NeurIPS, 2025).

Maharjan, J. et al. OpenMedLM: prompt engineering can out-perform fine-tuning in medical question-answering with open-source large language models. Sci. Rep. 14, 14156 (2024).

Nori, H. et al. Can generalist foundation models outcompete special-purpose tuning? Case study in medicine. Preprint at https://doi.org/10.48550/arXiv.2311.16452 (2023).

Wu, S., Koo, M., Scalzo, F. & Kurtz, I. AutoMedPrompt: a new framework for optimizing LLM medical prompts using textual gradients. Preprint at https://doi.org/10.48550/arXiv.2502.15944 (2025).

Yu, F. et al. Heterogeneity and predictors of the effects of AI assistance on radiologists. Nat. Med. 30, 837–849 (2024).

Rrv, A., Tyagi, N., Uddin, M. N., Varshney, N. & Baral, C. Chaos with keywords: exposing large language models sycophancy to misleading keywords and evaluating defense strategies. In Findings of the Association for Computational Linguistics 12717–12733 (ACL, 2024).

Fanous, A. et al. SycEval: evaluating LLM sycophancy. In Proc. AAAI/ACM Conference on AI, Ethics, and Society 8, 893–900 (ACM, 2025).

Su, X. et al. KGARevion: an AI agent for knowledge-intensive biomedical QA. In International Conference on Learning Representations (ICLR, 2025).

Zhang, G. et al. Leveraging long context in retrieval augmented language models for medical question answering. npj Digit. Med. 8, 239 (2025).

Ke, Y. H. et al. Retrieval augmented generation for 10 large language models and its generalizability in assessing medical fitness. npj Digit. Med. 8, 187 (2025).

Kresevic, S. et al. Optimization of hepatological clinical guidelines interpretation by large language models: a retrieval augmented generation-based framework. npj Digit. Med. 7, 102 (2024).

Lopez, I. et al. Clinical entity augmented retrieval for clinical information extraction. npj Digit. Med. 8, 45 (2025).

Asai, A., Wu, Z., Wang, Y., Sil, A. & Hajishirzi, H. Self-RAG: learning to retrieve, generate, and critique through self-reflection. In International Conference on Learning Representations (ICLR, 2024).

Yang, D., Zeng, L., Rao, J. & Zhang, Y. Knowing you don’t know: learning when to continue search in multi-round RAG through self-practicing. In Proc. 48th International ACM SIGIR Conference on Research and Development in Information Retrieval 1305–1315 (ACM, 2025).

Islam, S. B. et al. Open-RAG: enhanced retrieval augmented reasoning with open-source large language models. In Findings of the Association for Computational Linguistics 14231–14244 (ACL, 2024).

Jeong, S., Baek, J., Cho, S., Hwang, S. J. & Park, J. C. Adaptive-RAG: learning to adapt retrieval-augmented large language models through question complexity. In Proc. 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Vol. 1: Long Papers) 7036–7050 (ACL, 2024).

Yang, R. et al. Retrieval-augmented generation for generative artificial intelligence in health care. npj Health Syst. 2, 2 (2025).

Anisuzzaman, D. M., Malins, J. G., Friedman, P. A. & Attia, Z. I. Fine-tuning large language models for specialized use cases. Mayo Clin. Proc. Digit. Health 3, 100184 (2025).

Wiest, I. C. et al. Deidentifying medical documents with local, privacy-preserving large language models: the LLM-anonymizer. NEJM AI 2, 4 (2025).

Croskerry, P. A universal model of diagnostic reasoning. Acad. Med. 84, 1022–1028 (2009).

Geiping, J. et al. Scaling up test-time compute with latent reasoning: a recurrent depth approach. In 39th Annual Conference on Neural Information Processing Systems (NeurIPS, 2025).

Makarov, N. et al. Large language models forecast patient health trajectories enabling digital twins. npj Digit. Med. 8, 588 (2025).

Renc, P. et al. Zero shot health trajectory prediction using transformer. npj Digit. Med. 7, 256 (2024).

Wang, J. et al. Self-improving generative foundation model for synthetic medical image generation and clinical applications. Nat. Med. 31, 609–617 (2024).

Rao, V. M. et al. Multimodal generative AI for medical image interpretation. Nature 639, 888–896 (2025).

Duan, Y., Xu, C., Pei, J., Han, J. & Li, C. Pre-train and plug-in: flexible conditional text generation with variational auto-encoders. In Proc. 58th Annual Meeting of the Association for Computational Linguistics 253–262 (ACL, 2020).

Epstein, D., Jabri, A., Poole, B., Efros, A. & Holynski, A. Diffusion self-guidance for controllable image generation. Adv. Neural Inf. Process. Syst. 36, 16222–16239 (2023).

Li, Z. et al. ControlAR: controllable image generation with autoregressive models. In 13th International Conference on Learning Representations (ICLR, 2025).

Beattie, J. et al. Using large language models to create patient centered consent forms. Int. J. Radiat. Oncol. Biol. Phys. 120, e612 (2024).

Shi, Q. et al. Transforming informed consent generation using large language models: mixed methods study. JMIR Med. Inform. 13, e68139 (2025).

Rudra, P., Balke, W.-T., Kacprowski, T., Ursin, F. & Salloch, S. Large language models for surgical informed consent: an ethical perspective on simulated empathy. J. Med. Ethics https://doi.org/10.1136/jme-2024-110652 (2025).

Ravfogel, S., Goldberg, Y. & Goldberger, J. Conformal nucleus sampling. In Findings of the Association for Computational Linguistics 27–34 (ACL, 2023).

Minh, N. N. et al. Turning up the heat: min-p sampling for creative and coherent LLM outputs. In 13th International Conference on Learning Representations (ICLR, 2025).

Zhou, K., Yang, J., Loy, C. C. & Liu, Z. Conditional prompt learning for vision-language models. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 16816–16825 (IEEE, 2022).

Khasentino, J. et al. A personal health large language model for sleep and fitness coaching. Nat. Med. 31, 3394–3403 (2025).

Wen, J. et al. The genetic architecture of multimodal human brain age. Nat. Commun. 15, 2604 (2024).

Mizrahi, D. et al. 4M: massively multimodal masked modeling. Adv. Neural Inf. Process. Syst. 36, 58363–58408 (2023).

Meng, X., Sun, K., Xu, J., He, X. & Shen, D. Multi-modal modality-masked diffusion network for brain MRI synthesis with random modality missing. IEEE Trans. Med. Imaging 43, 2587–2598 (2024).

Stahlschmidt, S. R., Ulfenborg, B. & Synnergren, J. Multimodal deep learning for biomedical data fusion: a review. Brief. Bioinform. 23, bbab569 (2022).

Boehm, K. M., Khosravi, P., Vanguri, R., Gao, J. & Shah, S. P. Harnessing multimodal data integration to advance precision oncology. Nat. Rev. Cancer 22, 114–126 (2022).

Johnson, R., Li, M. M., Noori, A., Queen, O. & Zitnik, M. Graph artificial intelligence in medicine. Annu. Rev. Biomed. Data Sci. 7, 345–368 (2024).

Kline, A. et al. Multimodal machine learning in precision health: a scoping review. npj Digit. Med. 5, 171 (2022).

Huang, Y. et al. Multimodal AI predicts clinical outcomes of drug combinations from preclinical data. Preprint at https://doi.org/10.48550/arXiv.2503.02781 (2025).

Zhang, Y. et al. Multiple heads are better than one: mixture of modality knowledge experts for entity representation learning. In 13th International Conference on Learning Representations (ICLR, 2025).

Bao, H. et al. VLMo: unified vision-language pre-training with mixture-of-modality-experts. Adv. Neural Inf. Process. Syst. 35, 32897–32912 (2022).

Yun, S. et al. Flex-MoE: modeling arbitrary modality combination via the flexible mixture-of-experts. Adv. Neural Inf. Process. Syst. 37, 98782–98805 (2024).

Cho, M. et al. Cocoon: robust multi-modal perception with uncertainty-aware sensor fusion. In 13th International Conference on Learning Representations (ICLR, 2025).

Tu, T. et al. Towards conversational diagnostic artificial intelligence. Nature 642, 442–450 (2025).

McDuff, D. et al. Towards accurate differential diagnosis with large language models. Nature 642, 451–457 (2025).

Gao, S. et al. Empowering biomedical discovery with AI agents. Cell 187, 6125–6151 (2024).

Guo, D. et al. DeepSeek-R1 incentivizes reasoning in LLMs through reinforcement learning. Nature 645, 633–638 (2025).

Gao, S. et al. TxAgent: an AI agent for therapeutic reasoning across a universe of tools. Preprint at https://doi.org/10.48550/arXiv.2503.10970 (2025).

Qu, X. et al. A survey of efficient reasoning for large reasoning models: language, multimodality, and beyond. Preprint at https://doi.org/10.48550/arXiv.2503.21614 (2025).

Besta, M. et al. Reasoning language models: a blueprint. Preprint at https://doi.org/10.48550/arXiv.2501.11223 (2025).

Johnson, R. et al. ClinVec: unified embeddings of clinical codes enable knowledge-grounded AI in medicine. Preprint at medRxiv https://doi.org/10.1101/2024.12.03.24318322 (2025).

Wallace, E. et al. Managing patients with multimorbidity in primary care. BMJ 350, h176 (2015).

Spillmann, R. C. et al. A window into living with an undiagnosed disease: illness narratives from the Undiagnosed Diseases Network. Orphanet J. Rare Dis. 12, 1–11 (2017).

Wei, J. et al. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 35, 24824–24837 (2022).

Rafailov, R. et al. Direct preference optimization: your language model is secretly a reward model. Adv. Neural Inf. Process. Syst. 36, 53728–53741 (2023).

Nathani, D. et al. MLGym: a new framework and benchmark for advancing AI research agents. Preprint at https://doi.org/10.48550/arXiv.2502.14499 (2025).

Jiang, Y. et al. MedAgentBench: a virtual EHR environment to benchmark medical LLM agents. NEJM AI 2, AIdbp2500144 (2025).

Kazemi, M. et al. BIG-bench extra hard. In Proc. 63rd Annual Meeting of the Association for Computational Linguistics (Vol. 1: Long Papers) 26473–26501 (ACL, 2025).

Liang, P. et al. Holistic evaluation of language models. Preprint at https://doi.org/10.48550/arXiv.2211.09110 (2023).

Choi, H. K., Khanov, M., Wei, H. & Li, Y. How contaminated is your benchmark? Measuring dataset leakage in large language models with kernel divergence. In 13th International Conference on Learning Representations (ICLR, 2025).

Ektefaie, Y. et al. Evaluating generalizability of artificial intelligence models for molecular datasets. Nat. Mach. Intell. 6, 1512–1524 (2024).

Bourlon, M. T. et al. Envisioning academic global oncologists: proposed competencies for global oncology training from ASCO. JCO Glob. Oncol. 10, e2300157 (2024).

Johnson-Peretz, J. et al. Geographical, social, and political contexts of tuberculosis control and intervention, as reported by mid-level health managers in Uganda: ‘the activity around town’. Soc. Sci. Med. 338, 116363 (2023).

Ning, Y. et al. An ethics assessment tool for artificial intelligence implementation in healthcare: CARE-AI. Nat. Med. 30, 3038–3039 (2024).

Boverhof, B.-J. et al. Radiology AI Deployment and Assessment Rubric (RADAR) to bring value-based AI into radiological practice. Insights Imaging 15, 34 (2024).

Dagan, N. et al. Evaluation of AI solutions in health care organizations — the OPTICA tool. NEJM AI 1, AIcs2300269 (2024).

Borja, N. A. et al. Advancing equity in rare disease research: insights from the undiagnosed disease network. Am. J. Med. Genet. A 197, e63904 (2025).

Williams, J. S., Walker, R. J. & Egede, L. E. Achieving equity in an evolving healthcare system: opportunities and challenges. Am. J. Med. Sci. 351, 33–43 (2016).

Pool, J., Indulska, M. & Sadiq, S. Large language models and generative AI in telehealth: a responsible use lens. J. Am. Med. Inform. Assoc. 31, 2125–2136 (2024).

Yu, K.-H., Healey, E., Leong, T.-Y., Kohane, I. S. & Manrai, A. K. Medical artificial intelligence and human values. N. Engl. J. Med. 390, 1895–1904 (2024).

Lewis, P. et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. Adv. Neural Inf. Process. Syst. 33, 9459–9474 (2020).

Wei, J. et al. Finetuned language models are zero-shot learners. In 10th International Conference on Learning Representations (ICLR, 2022).

Ouyang, L. et al. Training language models to follow instructions with human feedback. Adv. Neural Inf. Process. Syst. 35, 27730–27744 (2022).

Gururangan, S. et al. Don’t stop pretraining: adapt language models to domains and tasks. In Proc. 58th Annual Meeting of the Association for Computational Linguistics 8342–8360 (ACL, 2020).

Schick, T. et al. Toolformer: language models can teach themselves to use tools. Adv. Neural Inf. Process. Syst. 36, 68539–68551 (2023).