This systematic review synthesized economic evidence on 19 clinical AI interventions across multiple specialties. The included studies reported consistent clinical improvements, measurable cost savings, and generally favorable incremental cost-effectiveness outcomes. Key determinants of economic viability included diagnostic performance, technology and implementation costs, workflow integration, and regional healthcare financing structures. Despite these broadly positive results, methodological gaps remain, including incomplete cost evaluations, static modeling approaches, and limited consideration of equity. Interpreting these findings also requires a clear distinction between full economic evaluations and budget impact analyses, which answer distinct but complementary questions. Full economic evaluations assess value for money and optimal resource allocation by comparing incremental costs with clinical outcomes or quality-of-life measures, typically over longer time horizons and often from a broad societal perspective. Budget impact analyses, by contrast, assess only short-term affordability and the immediate financial consequences for healthcare payers; they support near-term budgetary decisions but do not consider clinical effectiveness or broader societal benefits.
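To make the distinction concrete, the sketch below computes a budget impact in the sense described above: the yearly difference in payer spending between a world with and a world without the AI pathway, with no health outcome anywhere in the calculation. It is a minimal illustration under stated assumptions; the function name, the uptake schedule, and all cost inputs are hypothetical and are not taken from the reviewed studies.

```python
def budget_impact(eligible: list[int], uptake: list[float], cost_current: float,
                  cost_ai: float, fixed_ai_costs: list[float]) -> list[float]:
    """Yearly change in payer spending with vs. without the AI pathway (positive = extra spend)."""
    impacts = []
    for n, share, fixed in zip(eligible, uptake, fixed_ai_costs):
        spend_without = n * cost_current
        # Mixed world: a share of patients moves to the AI pathway; fixed AI costs are added on top
        spend_with = n * (share * cost_ai + (1 - share) * cost_current) + fixed
        impacts.append(spend_with - spend_without)
    return impacts


# Hypothetical three-year horizon with rising uptake
yearly = budget_impact(eligible=[10_000, 10_000, 10_000], uptake=[0.2, 0.5, 0.8],
                       cost_current=120.0, cost_ai=100.0,
                       fixed_ai_costs=[50_000.0, 20_000.0, 20_000.0])
print([round(x) for x in yearly])
```

In this made-up example, fixed costs outweigh per-patient savings in year 1, so spending rises; as uptake grows, the net effect turns into savings. A full economic evaluation of the same intervention would additionally require QALYs or another outcome measure.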

A central insight from our review is that AI interventions can generate economic benefits primarily by enhancing clinical performance. Many studies reported improvements in diagnostic accuracy and reductions in unnecessary procedures that translated directly into cost savings. For instance, evaluations in diabetic retinopathy screening and atrial fibrillation detection showed that even modest improvements in sensitivity and specificity can lower per-patient screening costs and improve QALYs. This finding is consistent with Khanna et al., who highlight AI’s dual role in healthcare: enhancing clinical outcomes through improved diagnostic accuracy and personalized treatment while reducing costs by optimizing resource allocation and streamlining care pathways7. Nonetheless, these studies often focus solely on operational savings and omit important elements such as upfront capital expenditures, integration costs, and ongoing maintenance expenses. Without a complete net present value (NPV) analysis, estimates of economic benefit may be overly optimistic. Moreover, the ICERs reported in many studies do not imply uniform economic benefits across clinical applications; the cost-effectiveness of AI is contingent on context-specific factors, including local healthcare settings, implementation costs, and diagnostic performance.
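The mechanism described above, in which gains in sensitivity and specificity shift patients between costly and less costly pathways, can be illustrated with a one-level screening decision tree. The sketch assumes a single screening round, fixed per-pathway costs and QALYs, and hypothetical parameter values. The `ScreeningStrategy` type and the `icer` helper are illustrative names, not taken from any reviewed study. `icer` also labels the ‘dominant’ case (no more costly and at least as effective), where no ratio is reported.

```python
from dataclasses import dataclass


@dataclass
class ScreeningStrategy:
    name: str
    sensitivity: float
    specificity: float
    cost_per_screen: float


def expected_outcomes(s: ScreeningStrategy, prevalence: float, p: dict) -> tuple[float, float]:
    """Expected cost and QALYs per screened person for a one-level decision tree."""
    tp = prevalence * s.sensitivity              # disease found early
    fn = prevalence * (1 - s.sensitivity)        # disease missed, treated late
    fp = (1 - prevalence) * (1 - s.specificity)  # healthy, unnecessary work-up
    tn = (1 - prevalence) * s.specificity        # healthy, correctly cleared
    cost = (s.cost_per_screen + tp * p["cost_early"] + fn * p["cost_late"]
            + fp * p["cost_false_positive"])
    qalys = tp * p["qaly_early"] + fn * p["qaly_late"] + (fp + tn) * p["qaly_healthy"]
    return cost, qalys


def icer(cost_new: float, qaly_new: float, cost_old: float, qaly_old: float):
    """Incremental cost-effectiveness ratio, or a label when a ratio is not meaningful."""
    d_cost, d_qaly = cost_new - cost_old, qaly_new - qaly_old
    if d_cost <= 0 and d_qaly >= 0:
        return "dominant"    # no more costly and at least as effective
    if d_cost >= 0 and d_qaly <= 0:
        return "dominated"   # no cheaper and no more effective
    return d_cost / d_qaly   # cost per QALY gained; if both deltas < 0, savings per QALY lost


# Hypothetical inputs for illustration only
PARAMS = {"cost_early": 1000.0, "cost_late": 3000.0, "cost_false_positive": 200.0,
          "qaly_early": 9.0, "qaly_late": 7.0, "qaly_healthy": 10.0}
usual = ScreeningStrategy("usual care", 0.80, 0.90, 30.0)
ai = ScreeningStrategy("AI-assisted", 0.85, 0.92, 25.0)

c0, q0 = expected_outcomes(usual, 0.10, PARAMS)
c1, q1 = expected_outcomes(ai, 0.10, PARAMS)
print(icer(c1, q1, c0, q0))  # prints "dominant"
```

Under these made-up inputs the AI strategy is both cheaper and more effective, so it is reported as dominant. With a sufficiently higher per-screen AI cost, the same code returns a positive cost per QALY instead, which is why implementation costs matter to the conclusion.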

Our review shows that approximately 79% of the included studies relied on decision-analytic models, primarily Markov models and decision trees, to simulate long-term outcomes. While these models provide a structured approach to economic evaluation, most used fixed transition probabilities that do not reflect the evolving performance of AI systems. Because AI systems may improve as they are exposed to larger datasets, there is a clear need for dynamic modeling approaches that can capture this learning curve. Recent literature emphasizes that models incorporating time-dependent parameters and learning curves provide more accurate projections of long-term cost-effectiveness39.
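To show what a time-dependent transition probability means in practice, the sketch below runs a simple three-state cohort Markov model (undetected disease, detected and treated, dead). In each cycle, the probability of detection depends on the AI’s sensitivity in that cycle. Passing a constant function reproduces the static approach criticized above, while passing a learning curve lets sensitivity rise over time. The exponential learning-curve form, the state structure, and all parameter values are assumptions for illustration and are not taken from the reviewed studies.

```python
import math
from typing import Callable


def learning_curve(s0: float, s_max: float, rate: float) -> Callable[[int], float]:
    """Hypothetical exponential learning curve: sensitivity rises from s0 toward s_max."""
    return lambda t: s_max - (s_max - s0) * math.exp(-rate * t)


def run_cohort(sensitivity_at: Callable[[int], float], cycles: int = 20,
               p_die_undetected: float = 0.10, p_die_treated: float = 0.03,
               u_undetected: float = 0.70, u_treated: float = 0.85,
               discount: float = 0.035) -> float:
    """Discounted QALYs per person for a cohort that starts with undetected disease."""
    undetected, treated, dead = 1.0, 0.0, 0.0
    qalys = 0.0
    for t in range(cycles):
        # QALYs for state occupancy at the start of the cycle (no half-cycle correction)
        qalys += (undetected * u_undetected + treated * u_treated) / (1 + discount) ** t
        # Time-dependent transition: surviving undetected patients are detected
        # with probability equal to the sensitivity in cycle t
        die_u = undetected * p_die_undetected
        detected = (undetected - die_u) * sensitivity_at(t)
        die_t = treated * p_die_treated
        undetected, treated, dead = (undetected - die_u - detected,
                                     treated + detected - die_t,
                                     dead + die_u + die_t)
    return qalys


static = run_cohort(lambda t: 0.80)
dynamic = run_cohort(learning_curve(0.80, 0.92, 0.3))
print(f"static: {static:.3f} QALYs, learning curve: {dynamic:.3f} QALYs")
```

Detection moves people into a state with higher utility and lower mortality, so the learning-curve run accrues more QALYs than the static run. In a fuller model, the same time-varying function could also drive costs, or a declining curve could represent performance drift.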

A recurring limitation identified in our analysis is the incomplete accounting of costs. Many studies reported favorable ICERs or net savings based primarily on reduced diagnostic errors and streamlined workflows, yet often omitted substantial expenses such as initial investments in AI infrastructure, software integration, and ongoing maintenance fees. By contrast, economic evaluations in fields such as remote patient monitoring (RPM) and precision medicine often consider both upfront and recurring costs. Although some full economic evaluations incorporate long-term cost-utility or cost-benefit analyses, not all explicitly apply full NPV calculations, and short-term studies may underestimate long-term financial sustainability; broader economic perspectives and extended time horizons are therefore needed in future research40. A comprehensive cost evaluation is essential for decision-makers to judge whether the long-term savings from AI justify the initial expenditures. Incorporating all cost components into economic models would give a more realistic picture of AI’s value proposition and help healthcare systems allocate resources more judiciously.
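The effect of omitting upfront and recurring costs can be seen in a simple discounted cash-flow calculation. The sketch below compares an NPV that counts only operational savings with one that also includes capital, integration, and maintenance costs. The function names, the 3.5% discount rate, the five-year horizon, and all monetary values are hypothetical choices for illustration.

```python
def npv(cash_flows: list[float], rate: float) -> float:
    """Net present value; cash_flows[0] occurs now (year 0) and is not discounted."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def ai_cash_flows(years: int, annual_savings: float, upfront_capital: float = 0.0,
                  integration: float = 0.0, annual_maintenance: float = 0.0) -> list[float]:
    """Year-0 investment followed by yearly net savings (savings minus maintenance)."""
    return [-(upfront_capital + integration)] + [annual_savings - annual_maintenance] * years


RATE = 0.035
savings_only = npv(ai_cash_flows(5, 100_000.0), RATE)
full_cost = npv(ai_cash_flows(5, 100_000.0, upfront_capital=250_000.0,
                              integration=80_000.0, annual_maintenance=40_000.0), RATE)
print(f"savings only: {savings_only:,.0f}  full cost: {full_cost:,.0f}")
```

With these made-up inputs, the savings-only NPV is positive while the full-cost NPV is negative. The same intervention therefore moves from apparently cost-saving to loss-making over five years once implementation costs are counted.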

In addition, while our analysis focused on the direct costs of implementing clinical AI interventions, these estimates may understate the true cost burden of AI technologies. Recent evidence suggests that AI, particularly generative models, can be highly energy- and water-intensive, incurring significant environmental costs41. These environmental impacts add to the overall cost and may also have broader implications for sustainability and resource allocation in healthcare. Moreover, several studies that omit indirect costs and upfront investments, such as infrastructure and integration expenses, nonetheless present AI interventions as ‘dominant’ (more effective and less costly than the comparator) or cost-saving. This discrepancy suggests that the reported economic benefits may be overestimated.

Nearly all studies in our review incorporated sensitivity analyses, but most addressed only parameter uncertainty and left structural uncertainty underexplored. Many evaluations rely on static models with fixed transition probabilities that do not capture the learning and improvement of AI systems over time. This shortcoming may produce overconfident estimates of cost savings, because the models cannot account for shifts in clinical performance or unexpected cost escalations. Decision-makers should therefore interpret these findings with caution, recognizing that the apparent economic benefits may be sensitive to untested structural assumptions. A recent review advocates advanced uncertainty analysis methods, such as Monte Carlo simulations and scenario analyses, that address both parameter and structural uncertainty42. By testing a range of assumptions and scenarios, these approaches give decision-makers a spectrum of potential outcomes and help identify the conditions under which AI interventions remain cost-effective. Stronger uncertainty analysis would improve the credibility of individual studies and strengthen the overall evidence base needed to guide investment in AI technologies.
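The combination described above can be sketched as a probabilistic sensitivity analysis (Monte Carlo draws over uncertain inputs) repeated under several structural scenarios about how the AI’s performance evolves. The output is the share of draws in which the intervention has a positive incremental net monetary benefit at a given willingness-to-pay threshold. The distributions, the scenario multipliers, the threshold of 30,000 currency units per QALY, and the simple undiscounted per-year benefit structure are all hypothetical assumptions for illustration. The model is not drawn from any reviewed study.

```python
import random

# Hypothetical structural scenarios: yearly multiplier applied to the AI's sensitivity gain
SCENARIOS = {"drift": 0.90, "static": 1.00, "learning": 1.05}


def prob_cost_effective(scenario: str, n_draws: int = 5000, threshold: float = 30_000.0,
                        years: int = 5, prevalence: float = 0.05, seed: int = 1) -> float:
    """Share of Monte Carlo draws with positive incremental net monetary benefit."""
    rng = random.Random(seed)          # same seed -> same parameter draws in every scenario
    growth = SCENARIOS[scenario]
    positive = 0
    for _ in range(n_draws):
        # Parameter uncertainty: each input drawn from an assumed distribution
        sens_gain = rng.betavariate(5, 95)              # extra sensitivity, mean 0.05
        qaly_per_case = rng.gammavariate(4, 0.25)       # QALYs per extra case found, mean 1.0
        saving_per_case = rng.gammavariate(4, 500.0)    # downstream saving per case, mean 2,000
        ai_cost = rng.gammavariate(16, 2.5)             # AI cost per patient-year, mean 40
        d_qaly = d_cost = 0.0
        for year in range(years):
            # Structural assumption: how the sensitivity gain evolves (undiscounted for brevity)
            extra_cases = prevalence * sens_gain * growth ** year
            d_qaly += extra_cases * qaly_per_case
            d_cost += ai_cost - extra_cases * saving_per_case
        if threshold * d_qaly - d_cost > 0:
            positive += 1
    return positive / n_draws


for name in SCENARIOS:
    print(f"{name:>8}: P(cost-effective) = {prob_cost_effective(name):.2f}")
```

Because every scenario reuses the same parameter draws, differences between the printed probabilities reflect only the structural assumption. That comparison is the one the text argues is usually missing.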

One of the most concerning gaps in the literature is the insufficient consideration of equity, in particular the limited disaggregation of economic outcomes by key demographic variables. This oversight raises concerns that favorable aggregate findings may mask significant disparities in how benefits are distributed across populations. Without subgroup-specific analyses, potential algorithmic bias remains unaddressed, and cost-effectiveness results could disproportionately favor well-resourced groups. AI systems depend heavily on the quality and representativeness of their training data. Algorithms trained on non-representative datasets can amplify biases, leading to disparities in care and unequal model performance across populations; models optimized for majority groups often perform poorly for underrepresented patients, increasing misdiagnoses and inequitable treatment recommendations43,44. Future evaluations should integrate equity metrics, such as subgroup-specific QALY gains and cost savings, to ensure that AI interventions deliver benefits equitably and that potential biases are identified and mitigated.
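A minimal way to see how an aggregate result can mask a subgroup loss is to report incremental net monetary benefit (willingness-to-pay threshold times incremental QALYs, minus incremental cost) both pooled and per subgroup. The subgroup labels, sizes, incremental values, and threshold below are hypothetical. The sketch illustrates only the disaggregation step the text calls for.

```python
from dataclasses import dataclass


@dataclass
class SubgroupResult:
    name: str
    size: int        # patients in the subgroup
    d_cost: float    # incremental cost per patient versus usual care
    d_qaly: float    # incremental QALYs per patient versus usual care


def net_monetary_benefit(r: SubgroupResult, threshold: float) -> float:
    return threshold * r.d_qaly - r.d_cost


def disaggregate(results: list[SubgroupResult], threshold: float) -> tuple[float, dict[str, float]]:
    """Size-weighted pooled NMB per patient, plus NMB per patient in each subgroup."""
    total = sum(r.size for r in results)
    pooled = sum(net_monetary_benefit(r, threshold) * r.size for r in results) / total
    return pooled, {r.name: net_monetary_benefit(r, threshold) for r in results}


# Hypothetical results: the model performs worse in the smaller subgroup
results = [SubgroupResult("well-represented group", 800, 100.0, 0.020),
           SubgroupResult("under-represented group", 200, 150.0, 0.003)]
pooled, by_group = disaggregate(results, threshold=30_000.0)
print(f"pooled NMB per patient: {pooled:.0f}")
for name, nmb in by_group.items():
    print(f"  {name}: {nmb:.0f}")
```

Here the pooled figure is positive even though net benefit is negative in the under-represented subgroup, which is exactly the pattern that aggregate-only reporting would hide.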

The economic evaluations in our review generally demonstrated high methodological rigor, as reflected in CHEERS and Drummond checklist scores, with clear study objectives, robust sensitivity analyses, and well-defined cost components. Several limitations persist, however. Reporting of indirect costs was inconsistent, and many studies relied on static decision-analytic models. Both choices may lead to an overestimation of the economic benefits of clinical AI interventions, as static models do not capture the dynamic improvements, such as learning curves and time-dependent performance gains, that characterize AI systems over time. The studies also varied considerably in methodology, design, and setting, which may limit the generalizability of our overall findings; differences in study design, population characteristics, and analytic methods can influence reported cost-effectiveness estimates and call for cautious interpretation of aggregated outcomes. Although most studies report favorable economic outcomes for clinical AI interventions, the reliance on optimistic assumptions in several of them suggests that these benefits may be overestimated, and the true economic value of AI interventions remains uncertain until future evaluations address these limitations. Future evaluations should therefore adopt more comprehensive and adaptive methodologies that capture the evolving performance of AI systems and improve the transparency and generalizability of findings across diverse healthcare settings.

Our study builds upon and extends the work of Voets et al.18 and Vithlani et al.19 by broadening the scope to encompass clinical AI interventions across multiple healthcare settings and specialties. While Voets et al. primarily examined automated imaging applications—highlighting limitations inherent to cost-minimization approaches—and Vithlani et al. emphasized the drawbacks of static decision-analytic models and incomplete reporting, our review confirms the generally favorable economic outcomes associated with AI while also revealing critical gaps. Notably, our findings underscore the need for dynamic modeling frameworks that capture the evolving performance of AI systems, comprehensive cost evaluations that account for implementation, integration, and maintenance expenditures, and robust equity analyses to ensure that economic benefits are equitably distributed. This integrated perspective not only reinforces the promise of AI in enhancing clinical performance and reducing costs but also provides a more nuanced understanding of its long-term economic viability.

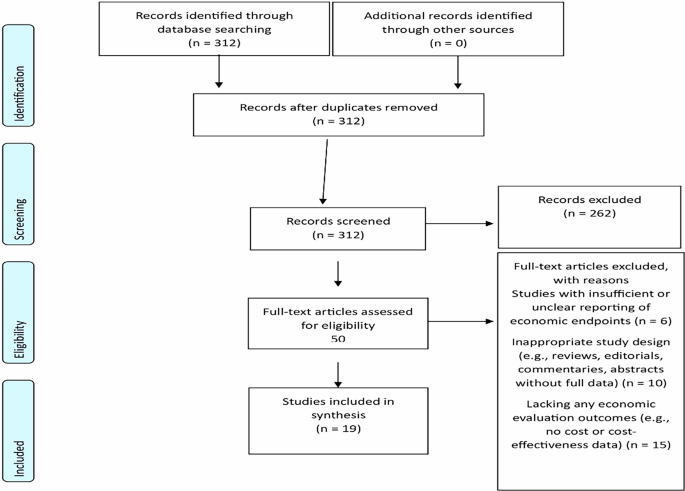

The review’s strengths lie in its clear scope, rigorous adherence to PRISMA 2020 guidelines, and comprehensive search strategy across multiple databases, coupled with dual quality assessment using the Consolidated Health Economic Evaluation Reporting Standards (CHEERS 2022) and Drummond checklists, which enhances its transparency and credibility. Its limitations include heterogeneity in the methods, designs, and reporting standards of the included studies and a potential language bias from excluding non-English publications. Although we used CHEERS 2022 to assess reporting quality, we recognize the subsequent release of the CHEERS-AI checklist45 in the third quarter of 2024 as a significant advancement. CHEERS-AI introduces 10 AI-specific reporting items and 8 elaborations on existing CHEERS 2022 domains, addressing features such as AI learning over time, algorithmic bias, user autonomy, implementation requirements, and uncertainty arising from AI-specific factors. We believe its adoption in future reviews will meaningfully improve the evaluation of AI-specific nuances in cost-effectiveness research, and its emergence highlights the need for ongoing refinement of reporting standards as digital health technologies evolve. Similarly, although we assessed quality using the Drummond checklist, we acknowledge that it is somewhat dated and has been critiqued in recent literature. Newer alternatives, such as the CHEQUE checklist46, have been proposed; however, no single quality checklist for cost-effectiveness analyses is universally accepted.

Future research in the economic evaluation of clinical AI interventions should prioritize several key areas. Dynamic adaptive modeling techniques are essential for accurately capturing AI’s evolving clinical and economic performance over time. However, implementing these approaches faces obstacles such as limited availability of longitudinal data, computational complexity, and resource constraints, particularly in lower-resource settings47. These barriers can be overcome by promoting international collaborative research networks, investing in robust data-sharing infrastructures, and providing specialized methodological training to build analytical capacity globally.

A further priority is adopting comprehensive, standardized cost evaluation frameworks. Such frameworks should include thorough NPV analyses that clearly account for initial investments, integration costs, and ongoing maintenance expenses. Potential challenges include inconsistent definitions and cost-reporting practices, variability in regional standards, and resistance to standardization48. Strategies to mitigate these obstacles include encouraging global adherence to standards such as CHEERS 202249 and CHEERS-AI45, facilitating international consensus-building forums, and standardizing reporting practices to enhance transparency and comparability across healthcare systems.

Researchers should also adopt advanced uncertainty analysis techniques, such as Monte Carlo simulations and comprehensive scenario analyses, addressing both parameter and structural uncertainties. Implementation barriers, such as methodological complexity and limited technical expertise, especially in resource-limited contexts, can be overcome by developing international methodological training programs, sharing analytical resources and software through global collaborations, and providing detailed methodological guidelines to support consistent application worldwide.

Future research should adopt distributional cost-effectiveness analysis (DCEA) to assess explicitly how the costs and benefits of clinical AI interventions are distributed across population subgroups. This approach enables decision-makers to weigh trade-offs between efficiency and equity, helping ensure that AI-driven innovations deliver benefits that are shared fairly50,51.
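One common way to operationalize this trade-off in the distributional cost-effectiveness literature involves three steps. First, convert each subgroup’s incremental QALYs and costs into net health benefit. Second, add that benefit to the subgroup’s baseline health. Third, summarize the resulting distribution with an Atkinson equally distributed equivalent (EDE), where a larger inequality-aversion parameter ε gives more weight to gains in worse-off groups. The sketch below is a simplified illustration of that idea under stated assumptions: two subgroups, health opportunity costs falling on the same subgroup that receives the intervention, and hypothetical baseline health, population shares, threshold, and intervention effects.

```python
import math


def net_health_benefit(d_qaly: float, d_cost: float, threshold: float) -> float:
    """QALYs gained minus QALYs assumed to be displaced elsewhere by the extra spending."""
    return d_qaly - d_cost / threshold


def atkinson_ede(health: list[float], shares: list[float], epsilon: float) -> float:
    """Atkinson equally distributed equivalent of a health distribution (shares sum to 1)."""
    if epsilon == 1:
        return math.exp(sum(s * math.log(h) for h, s in zip(health, shares)))
    mean_power = sum(s * h ** (1 - epsilon) for h, s in zip(health, shares))
    return mean_power ** (1 / (1 - epsilon))


def distributional_summary(baseline, shares, d_qalys, d_costs, threshold, epsilon):
    """Return (mean health gain, EDE gain) after adding each subgroup's net health benefit."""
    after = [h + net_health_benefit(q, c, threshold)
             for h, q, c in zip(baseline, d_qalys, d_costs)]
    mean_gain = sum(s * (a - h) for s, a, h in zip(shares, after, baseline))
    ede_gain = atkinson_ede(after, shares, epsilon) - atkinson_ede(baseline, shares, epsilon)
    return mean_gain, ede_gain


# Hypothetical example: baseline quality-adjusted life expectancy for two subgroups
baseline, shares, threshold = [70.0, 60.0], [0.7, 0.3], 20_000.0
option_x = ([0.6, 0.2], [2000.0, 2000.0])   # larger gains in the better-off group
option_y = ([0.3, 0.8], [2000.0, 2000.0])   # larger gains in the worse-off group
for eps in (0.0, 10.0):
    for label, (dq, dc) in (("X", option_x), ("Y", option_y)):
        mean_gain, ede_gain = distributional_summary(baseline, shares, dq, dc, threshold, eps)
        print(f"eps={eps:>4}: option {label} mean gain {mean_gain:.3f}, EDE gain {ede_gain:.3f}")
```

With ε = 0 the EDE gain equals the mean gain, and option X looks better. With strong inequality aversion (ε = 10), option Y ranks higher because its gains go to the worse-off subgroup. The choice of ε is a value judgment, which is why the text frames this as a trade-off for decision-makers.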

It is also critical to embed equity considerations throughout the AI development process—from initial model design and data curation to clinical integration and post-deployment monitoring. By proactively integrating equity metrics at each phase, researchers can identify and mitigate biases, thereby promoting equitable outcomes across diverse demographic groups52.

Moreover, future evaluations must incorporate assessments of algorithmic bias and explicitly examine AI’s impact on various demographic populations53,54. Addressing challenges such as the scarcity of representative datasets and the lack of standardized equity frameworks will require establishing international standards for inclusive data collection, mandating equity audits and bias-aware frameworks, and promoting policy-driven initiatives to encourage equitable implementation globally.
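As a concrete starting point for such an assessment, diagnostic performance can be computed separately for each demographic group from its confusion-matrix counts. The audit is then flagged when the gap between the best- and worst-served groups exceeds a tolerance. The group labels, counts, and the 0.05 tolerance below are hypothetical. A real equity audit would typically examine more metrics and report uncertainty around each estimate.

```python
def group_metrics(tp: int, fn: int, tn: int, fp: int) -> tuple[float, float]:
    """Sensitivity and specificity from one group's confusion-matrix counts."""
    return tp / (tp + fn), tn / (tn + fp)


def audit(groups: dict[str, tuple[int, int, int, int]], tolerance: float = 0.05) -> dict:
    """Per-group sensitivity/specificity and whether either gap exceeds the tolerance."""
    metrics = {name: group_metrics(*counts) for name, counts in groups.items()}
    sens = [m[0] for m in metrics.values()]
    spec = [m[1] for m in metrics.values()]
    sens_gap, spec_gap = max(sens) - min(sens), max(spec) - min(spec)
    return {"metrics": metrics, "sensitivity_gap": sens_gap, "specificity_gap": spec_gap,
            "flagged": sens_gap > tolerance or spec_gap > tolerance}


# Hypothetical counts: (true positives, false negatives, true negatives, false positives)
report = audit({"group A": (90, 10, 880, 20), "group B": (35, 15, 430, 20)})
print(report["flagged"], round(report["sensitivity_gap"], 2))
```

Performance gaps flagged this way can then feed into subgroup-specific economic results, since lower sensitivity in one group changes that group’s costs and QALYs.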

Finally, conducting context-specific economic evaluations tailored to local healthcare infrastructures, reimbursement mechanisms, and regional cost variations is crucial. Obstacles such as variations in regional healthcare financing, infrastructural disparities, and lack of suitable evaluation frameworks must be tackled through strategies like creating adaptable economic evaluation models, facilitating regional benchmarking studies, and developing localized policy guidance to support context-specific decision-making.

Dynamic and adaptive modeling, coupled with comprehensive cost analyses, should yield more accurate long-term projections that better reflect the evolving nature of AI technologies. By integrating clinical and economic outcomes and prioritizing equity, future studies can help ensure that AI advancements not only enhance patient care but also deliver sustainable and fair economic benefits.

Tailored, context-specific evaluations can help healthcare policymakers make informed, regionally relevant decisions, fostering efficient resource allocation and smoother technology adoption. Standardized methodologies and interdisciplinary collaboration will also strengthen the evidence base, making economic evaluations of clinical AI more robust and actionable. Ultimately, these strategies can inform investment and policy decisions that improve the quality of patient care while supporting long-term economic sustainability and equitable access across global healthcare systems.

The feasibility and implications of these strategies vary substantially across healthcare systems and geographical regions. In high-resource settings where advanced healthcare infrastructures, sophisticated technological capabilities, and established regulatory frameworks exist, obstacles to implementation primarily include complex integration processes, high initial AI technology costs, and regulatory approval complexities. These regions can overcome these challenges by leveraging public-private partnerships, enhancing regulatory clarity and guidelines, and developing clear reimbursement policies incentivizing quality-driven AI adoption.

Conversely, in low- and middle-resource settings, significant barriers include insufficient data infrastructure, limited financial resources, and scarce local technical expertise. These challenges can be addressed through targeted initiatives, including international support for capacity-building, investment in scalable, low-cost AI technologies, establishment of global resource-sharing networks, and development of tailored evaluation frameworks sensitive to local economic contexts.

Therefore, tailored strategies and international collaboration remain crucial to overcoming region-specific barriers, ensuring clinical AI delivers substantial, equitable, and sustainable health-economic benefits across diverse global healthcare environments.

In conclusion, this review finds that clinical AI interventions are generally associated with improved diagnostic accuracy, measurable gains in quality-adjusted life years, and the potential for meaningful cost savings compared with conventional care. However, the strength of this evidence is constrained by notable methodological limitations across the literature, most significantly the frequent omission of essential cost components such as infrastructure investment, system integration, workforce training, and long-term maintenance. These omissions introduce uncertainty and suggest that the reported economic value may, in some cases, be overstated. To generate more accurate and policy-relevant insights, future evaluations must adopt dynamic, longitudinal, and fully transparent modeling frameworks that comprehensively account for both direct and indirect costs over time.